Performance Validity and Symptom Validity in Neuropsychological Assessment

Journal of the International Neuropsychological Society (2012), 18, 1–7.

Copyright © INS. Published by Cambridge University Press, 2012.

doi:10.1017/S1355617712000240

DIALOGUE

Performance Validity and Symptom Validity in Neuropsychological Assessment

Glenn J. Larrabee, Ph.D.

Independent Practice, Sarasota, Florida.

(RECEIVED November 14, 2011; FINAL REVISION February 7, 2012; ACCEPTED February 8, 2012)

Abstract

Failure to evaluate the validity of an examinee’s neuropsychological test performance can alter prediction of external criteria in research investigations, and in the individual case, result in inaccurate conclusions about the degree of impairment resulting from neurological disease or injury. The terms performance validity referring to validity of test performance (PVT), and symptom validity referring to validity of symptom report (SVT), are suggested to replace less descriptive terms such as effort or response bias. Research is reviewed demonstrating strong diagnostic discrimination for PVTs and SVTs, with a particular emphasis on minimizing false positive errors, facilitated by identifying performance patterns or levels of performance that are atypical for bona fide neurologic disorder. It is further shown that false positive errors decrease, with a corresponding increase in the positive probability of malingering, when multiple independent indicators are required for diagnosis. The rigor of PVT and SVT research design is related to a high degree of reproducibility of results, and large effect sizes of d = 1.0 or greater, exceeding effect sizes reported for several psychological and medical diagnostic procedures. (JINS, 2012, 18, 1–7)

Keywords: Malingering, False positive errors, Sensitivity, Specificity, Diagnostic probability, Research design
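The abstract's claim that false positive errors fall, and the probability of malingering rises, when multiple independent indicators are required can be illustrated numerically. The Python sketch below reproduces the worked example given in the body of this article (sensitivity .50, specificity .90, assumed malingering base rate of .40) and the product rule for independent false positive rates. The function names are illustrative assumptions, and the calculation holds only if the indicators are independent.

```python
def positive_likelihood_ratio(sensitivity, specificity):
    """Ratio of sensitivity to the false positive rate (1 - specificity)."""
    return sensitivity / (1.0 - specificity)


def probability_to_odds(probability):
    """Convert a probability (e.g., a base rate) to odds."""
    return probability / (1.0 - probability)


def odds_to_probability(odds):
    """Convert odds back to a probability: odds / (odds + 1)."""
    return odds / (odds + 1.0)


def chained_probability(base_rate, indicators):
    """Post-test probability after failing each indicator in turn.

    `indicators` is a list of (sensitivity, specificity) pairs; the
    post-test odds from one indicator become the pretest odds for the
    next, which assumes the indicators are independent.
    """
    odds = probability_to_odds(base_rate)
    for sensitivity, specificity in indicators:
        odds *= positive_likelihood_ratio(sensitivity, specificity)
    return odds_to_probability(odds)


def joint_false_positive_rate(rates):
    """Probability that every independent test yields a false positive."""
    product = 1.0
    for rate in rates:
        product *= rate
    return product


if __name__ == "__main__":
    one_failure = chained_probability(0.40, [(0.50, 0.90)])
    two_failures = chained_probability(0.40, [(0.50, 0.90)] * 2)
    print(round(one_failure, 2), round(two_failures, 2))  # prints 0.77 0.94
```

The article rounds the base rate odds to .67 before multiplying, so its intermediate odds (3.35 and 16.75) differ slightly from the unrounded values computed here (about 3.33 and 16.67); the resulting probabilities agree to two decimal places.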

Correspondence and reprint requests to: Glenn J. Larrabee, 2650 Bahia Vista Street, Suite 308, Sarasota, FL 34239. E-mail: glarrabee@aol.com

Bigler acknowledges the importance of evaluating the validity of examinee performance, but raises concerns about the meaning of “effort,” the issue of what “near pass” performance means (i.e., scores that fall just within the range of invalid performance), the possibility that such scores may represent “false positives,” and the fact that there are no systematic lesion localization studies of Symptom Validity Test (SVT) performance. Bigler also discusses the possibility that illness behavior and “diagnosis threat” (i.e., the influence of expectations) can affect performance on SVTs. He further questions whether performance on SVTs may be related to actual cognitive abilities and to the neurobiology of drive, motivation and attention. Last, he raises concerns about the rigor of existing research underlying the development of SVTs.

Bigler and I are in agreement about the need to assess the validity of an examinee’s performance. Failure to do so can lead to misleading results. My colleagues and I (Rohling et al., 2011) reviewed several studies in which performance on response bias indicators (another term for SVTs) attenuated the correlation between neuropsychological test measures and an external criterion. For example, grade point average and Full Scale IQ correlated below the expected level of strength until those subjects failing an SVT were excluded (Greiffenstein & Baker, 2003); olfactory identification was only correlated with measures of brain injury severity (e.g., Glasgow Coma Scale) for those subjects passing an SVT (Green, Rohling, Iverson, & Gervais, 2003); California Verbal Learning Test scores did not discriminate traumatic brain injury patients with abnormal CT or MRI scans from those with normal scans until patients failing SVTs were excluded (Green, 2007); patients with moderate or severe traumatic brain injury (TBI), 88% of whom had abnormal CT or MRI, plus patients with known cerebral impairment (stroke, aneurysm) did not differ from those with uncomplicated mild TBI, psychiatric disorders, orthopedic injuries or chronic pain until those failing an SVT were dropped from comparison (Green, Rohling, Lees-Haley, & Allen, 2001). An association between memory complaints and performance on the California Verbal Learning Test, which goes counter to the oft-replicated finding of no association between memory or cognitive complaints and actual test performance (Brulot, Strauss, & Spellacy, 1997; Hanninen et al., 1994; Larrabee & Levin, 1986; Williams, Little, Scates, & Blockman, 1987), disappeared when those failing an SVT were excluded (Gervais, Ben-Porath,

Wygant, & Green, 2008). Subsequent to the Rohling et al. (2011) review, Fox (2011) showed that the association between neuropsychological test performance and presence/absence of brain injury was demonstrated only in patients passing SVTs.

Some of the debate regarding symptom validity testing results from use of the term “effort.” “Effort” suggests a continuum, ranging from excellent to very poor. SVTs are constructed, however, based on patterns of performance that are atypical in either pattern or degree, in comparison to the performance of patients with bona fide neurologic disorder. Consequently, SVTs demonstrate a discontinuity in performance rather than a continuum of performance, with most neurologic patients either not showing the atypical pattern, or performing at ceiling on a particular SVT. Examples of atypical patterns of performance include poorer performance on measures of attention than on measures of memory (Mittenberg, Azrin, Millsaps, & Heilbronner, 1993), or poorer performance on gross compared to fine motor tests (Greiffenstein, Baker, & Gola, 1996). Examples of atypical degree include motor function performance at levels rarely seen in patients with severe neurologic dysfunction (Greiffenstein, 2007). Consequently, performance is so atypical for bona fide neurologic disease that persons with significant neurologic disorders rarely fail “effort” tests. For example, the meta-analysis of Vickery, Berry, Inman, Harris, and Orey (2001) reported a 95.7% specificity or 4.3% false positive rate. Additionally, modern SVT research typically sets specificity at 90% or better on individual tests, yielding a false positive rate of 10% or less (Boone, 2007; Larrabee, 2007; Morgan & Sweet, 2009). Consequently, if SVTs are unlikely to be failed by persons with significant neurologic dysfunction, then performance on these tasks actually requires very minimal levels of neurocognitive capacity and consequently, very little “effort.” As a result, I have recently advocated for referring to SVTs as measures of performance validity to clarify the extent to which a person’s test performance is or is not an accurate reflection of their actual level of ability (Bigler, Kaufmann, & Larrabee, 2010; Larrabee, 2012). This term is much more descriptive than the terms “effort,” “symptom validity,” or “response bias,” and is in keeping with the longstanding convention of psychologists commenting on the validity of test results. Moreover, the term “symptom validity” is actually more descriptive of subjective complaint than it is of performance. Thus, I recommend that we use two descriptive terms in evaluating the validity of an examinee’s neuropsychological examination: (1) performance validity to refer to the validity of actual ability task performance, assessed either by stand-alone tests such as Dot Counting or by atypical performance on neuropsychological tests such as Finger Tapping, and (2) symptom validity to refer to the accuracy of symptomatic complaint on self-report measures such as the MMPI-2.

As previously noted, false positive rates are typically 10% or less on individual Performance Validity Tests (PVTs). For example, the manual for the Test of Memory Malingering (TOMM; Tombaugh, 1996) contains detailed information about the performance of aphasic, TBI, dementia, and neurologic patients, very few of whom (with the exception of dementia) perform below the recommended cutoff. Three patients with severe anoxic encephalopathy and radiologically confirmed hippocampal damage scored in the valid performance range on the recognition trials of the Word Memory Test (Goodrich-Hunsaker & Hopkins, 2009). Similarly, psychiatric disorders have not been found to impact PVT scores, including depression (Rees, Tombaugh, & Boulay, 2001), depression and anxiety (Ashendorf, Constantinou, & McCaffrey, 2004), and depression with chronic pain (Iverson, Le Page, Koehler, Shojania, & Badii, 2007).

Illness behavior and “diagnosis threat” do not appear to impact PVT scores. Acute pain (cold pressor) has no impact on performance on Reliable Digit Span (Etherton, Bianchini, Ciota, & Greve, 2005) or on the TOMM (Etherton, Bianchini, Greve, & Ciota, 2005). Suhr and Gunstad (2005) did not find differences on the WMT for those mild TBI subjects in the “diagnosis threat” condition compared to those in the non-threat group.

Arguments that neurological mechanisms related to drive and motivation underlie PVT performance are not supported in light of PVT profiles which are typically valid for patients with significant neurologic disease due to diverse causes, showing that these tasks require little in the way of effort, drive or motivation and, as mentioned, general neurocognitive capacity; that is, performance is usually near ceiling even in contexts of severe objectively verified cerebral disorders. For example, on TOMM Trial 2, 21 aphasic patients averaged 98.7% correct, and 22 TBI patients (range of 1 day to 3 months of coma) averaged 98.2% correct; indeed, one patient with gunshot wound, right frontal lobectomy, and 38 days of coma scored 100% on Trial 2 (Tombaugh, 1996). In this vein, a patient with significant abulia due to severe brain trauma, who would almost certainly require 24-hr supervision, and be minimally testable from a neuropsychological standpoint, would not warrant SVT or PVT assessment. In such a patient, there would of course be a legitimate concern about false positive errors on PVTs. As with any mental test, consideration of context is necessary and expected.

One of Bigler’s major concerns, the “near pass” (i.e., performance falls just within the invalid range on a PVT), is not restricted to PVT investigations; it is a pervasive concern in the field of assessment. One’s child does or does not reach the cutoff to qualify for the gifted class or for help for a learning disability. One’s neuropsychological test score does or does not reach a particular level of normality/abnormality (Heaton, Miller, Taylor, & Grant, 2004). Current PVT research focuses on avoiding the error of misidentifying as invalid the performance of a patient with a bona fide condition who is actually producing a valid performance. Moreover, there is a strong statistical argument for keeping false positive errors at a minimum: Positive Predictive Power (PPP), or the probability of a diagnosis, is more dependent upon Specificity (accurately diagnosing a person without the target disorder as not having the disorder) than Sensitivity (correctly identifying persons with the target disorder as having the disorder; see Straus, Richardson, Glasziou, & Haynes, 2005). Since the basic formula for PPP is (True Positives) ÷ (True Positives + False Positives), the PVT investigator attempts to keep false positives at a minimum. As noted in the previous meta-analysis (Vickery, Berry, Inman, Harris, & Orey, 2001) as well as in recent reviews

(Boone, 2007; Larrabee, 2007), false positives are typically 10% or less, with much lower sensitivities (56% per Vickery et al., 2001). Researchers also advocate reporting the characteristics of subjects identified as false positive cases in individual PVT investigations (Larrabee, Greiffenstein, Greve, & Bianchini, 2007; also see Victor, Boone, Serpa, Buehler, & Ziegler, 2009). This clarifies the characteristics of those patients with truly severe mental disorders who fail PVTs on the basis of actual impairment. This information provides the clinician with concrete injury/clinical fact patterns that legitimately correlate with PVT failure, thereby facilitating individual comparisons on a case by case basis (e.g., coma and structural lesions in the brain; Larrabee, 2003a; unequivocally severe and obvious neurologic symptoms, Merten, Bossink, & Schmand, 2007; or need for 24-hr supervision; Meyers & Volbrecht, 2003). Authors of PVTs have also included comparison groups with various neurologic, psychiatric and developmental conditions to further reduce the chances of false positive identification on an individual PVT (Boone, Lu, & Herzberg, 2002a, 2002b; Tombaugh, 1996).

PVTs have two applications in current neuropsychological practice: (1) screening data for a research investigation to remove effects of invalid performance (see Larrabee, Millis, & Meyers, 2008) and (2) for evaluation of an individual patient to determine if performance of that patient is valid, and forensically, to address the issue of malingering. Concerns about false positives are of far greater import in the second application of PVTs, since there really is no consequence to the patient whose data are excluded from clinical research.

Concerns about false positive identification (“near passes”) in the individual case are addressed by the diagnostic criteria for Malingered Neurocognitive Dysfunction (MND; Slick, Sherman, & Iverson, 1999). Slick et al. define malingering as the volitional exaggeration or fabrication of cognitive dysfunction for the purpose of obtaining substantial material gain, avoiding or escaping legally mandated formal duty or responsibility. Criteria for MND require a substantial external incentive (e.g., litigation, criminal prosecution), multiple sources of evidence from behavior (e.g., PVTs), and symptom report (e.g., SVTs) to define probable malingering, whereas significantly worse-than-chance performance defines definite malingering. Moreover, these sources of evidence must not be the product of neurological, psychiatric or developmental factors (note the direct relevance of this last criterion to the issue of false positives).

The Slick et al. criteria for MND have led to extensive subsequent research using these criteria for known-group investigations of detection of malingering (Boone, 2007; Larrabee, 2007; Morgan & Sweet, 2009). These criteria have also influenced development of criteria for Malingered Pain Related Disability (MPRD; Bianchini, Greve, & Glynn, 2005). As my colleagues and I have pointed out (Larrabee et al., 2007), the diagnostic criteria for MND and MPRD share key features: (1) the requirement for a substantial external incentive, (2) the requirement for multiple indicators of performance invalidity or symptom exaggeration, and (3) test performance and symptom report patterns that are atypical in pattern and degree for bona fide neurologic, psychiatric or developmental disorders. It is the combined improbability of findings, in the context of external incentive, without any viable alternative explanation, that establishes the intent of the examinee to malinger (Larrabee et al., 2007).

Research using the Slick et al. MND criteria shows the value of requiring multiple failures on PVTs and SVTs to determine probabilities of malingering in contexts with substantial external incentives. I (Larrabee, 2003a) demonstrated that requiring failure of two embedded/derived PVTs and/or SVTs resulted in a sensitivity of .875 and specificity of .889 for discriminating litigants (primarily with uncomplicated MTBI) performing significantly worse than chance from clinical patients with moderate and severe TBI. The requirement that patients fail 3 or more PVTs and SVTs resulted in a sensitivity of .542, but a specificity of 1.00 (i.e., there were no false positives). These data were replicated by Victor et al. (2009) using a different set of embedded/derived indicators in a similar research design yielding sensitivity of .838 and specificity of .939 for failure of any two PVTs, and sensitivity of .514 and specificity of .985 for failure of three or more PVTs.

The drop in false alarm rate and increase in specificity going from two to three failed PVTs/SVTs is directly related to the PPP of malingering, as demonstrated by the methodology of chaining likelihood ratios (Grimes & Schulz, 2005). The positive likelihood ratio is defined by the ratio of sensitivity to the false positive rate. Hence, a score falling at a particular PVT cutoff that has an associated sensitivity of .50 and specificity of .90 would yield a likelihood ratio of .50 ÷ .10, or 5.0. If this is then premultiplied by the base rate odds of malingering (assume a malingering base rate of .40, per Larrabee, Millis, & Meyers, 2009, yielding a base rate odds of (base rate) ÷ (1 – base rate) or (.40) ÷ (1 – .40) or .67), this value becomes .67 × 5.0 or 3.35. This can be converted back to a probability of malingering by the formula (odds) ÷ (odds + 1), in this case, 3.35 ÷ 4.35, or .77. If the indicators are independent, they can be chained, so that the post-test odds after premultiplying one indicator by the base rate odds become the new pretest odds by which a second independent indicator is multiplied. Thus, if a second PVT is failed at the same cutoff yielding sensitivity of .50 and specificity of .90, this yields a second likelihood ratio of 5.0, which is now multiplied by the post-test odds of 3.35 obtained after failure of the first indicator. This yields new post-test odds of 16.75, which can be converted back to a probability by dividing 16.75 by 17.75 to yield a probability of malingering of .94, in settings with substantial external incentive. The interested reader is referred to a detailed explanation of this methodology (Larrabee, 2008).

The method of chaining of likelihood ratios shows how the probability of confidently determining malingered performance is enhanced by requiring multiple PVT and SVT failure, consistent with other results (Larrabee, 2003a; Victor et al., 2009). Boone and Lu (2003) make a related point regarding the decline in false positive rate by using several independent tests, each with a false positive rate of .10: failure of two PVTs yields a probability (false positive rate) of .01 (.1 × .1), whereas failure of

three PVTs yields a probability of .001 (.1 × .1 × .1), and failure of six PVTs yields a probability as low as one in a million (.1 × .1 × .1 × .1 × .1 × .1). Said differently, the standard of multiple PVT and SVT failure protects against false positive diagnostic errors. Per Boone and Lu (2003), Larrabee (2003a; 2008), and Victor et al. (2009), failure of three independent PVTs is associated with essentially no false positive errors, a highly compelling empirically-based conclusion in the context of any form of diagnostic testing.

PVT performance can vary in persons identified with multiple PVT failures and should be assessed throughout an examination (Boone, 2009). Malingering can lower performance as much as 1 to 2 SD on select sensitive tests of memory and processing speed (Larrabee, 2003a), and PVT failure can lower the overall test battery mean (Green et al., 2001) by over 1 SD. In the presence of malingering, poor performances are more likely the result of intentional underperformance, particularly in conditions such as uncomplicated mild TBI in which pronounced abnormalities are unexpected (McCrea et al., 2009), and normal range performances themselves are likely underestimates of actual level of ability.

Last, there is a lengthy history of strong experimental design in PVT and SVT investigations. Research designs in malingering were discussed over 20 years ago in Rogers’ first book (Rogers, 1988). The two most rigorous and clinically relevant designs are the simulation design, and the “known groups” or “criterion group” designs (Heilbronner et al., 2009). In the simulation design, a non-injured group of subjects is specifically instructed to feign deficits on PVTs, SVTs, and neuropsychological ability tests, which are then contrasted with scores produced by a group of persons with bona fide disorders, usually patients with moderate or severe TBI. The resulting patterns discriminate known feigning from legitimate performance profiles associated with moderate and severe TBI, thereby minimizing false positive diagnosis in the TBI group. The disadvantage is that issues arise as to the “real world” generalization of non-injured persons feigning deficit compared to actual malingerers who have significant external incentives, for example, millions at stake in a personal injury claim. The second design, criterion groups, contrasts the performance of a group of litigating subjects, usually those with alleged non-complicated mild TBI, who have failed multiple PVTs and SVTs, commonly using the Slick et al. MND criteria, with a group of clinical patients, typically with moderate and severe TBI. This has the advantage of using a group with “real world” incentives, that is unlikely to have significant neurological damage and persistent neuropsychological deficits (McCrea et al., 2009), holding false positive errors at a minimum by determining performance patterns that are not characteristic of moderate and severe TBI. Although random assignment cannot be used for the simulation and criterion group designs just described, these designs are appropriate for case control comparisons.

PVT and SVT research using simulation and criterion group designs has, for the most part, yielded very consistent and replicable findings. For example, Heaton, Smith, Lehman, and Vogt (1978) reported an average dominant plus non-dominant Finger Tapping score of 63.1 for a sample of simulators, which was essentially identical to the score of 63.0 for the simulators in Mittenberg, Rotholc, Russell, and Heilbronner (1996). In a criterion groups design, I reported an optimal dominant plus non-dominant hand Finger Tapping score of less than 63 for discriminating subjects with definite MND from patients with moderate or severe TBI (Larrabee, 2003a), which was identical to the cutting score one would obtain by combining the male and female subjects in the criterion groups investigation of Arnold et al. (2005). In a criterion groups design, I (Larrabee, 2003b) reported optimal MMPI-2 FBS Symptom Validity cutoffs of 21 or 22 in discriminating subjects with definite MND from those with moderate or severe TBI, which was identical to the value of 21 or 22 for discriminating subjects with probable MND from patients with moderate or severe TBI reported by Ross, Millis, Krukowski, Putnam, and Adams (2004). As already noted, Victor et al. (2009) obtained very similar sensitivities and specificities for failure of any two or any three or more PVTs to the values I obtained for failure of any two or three or more PVTs or SVTs (Larrabee, 2003a).

My colleagues and I have relied upon the similarity of findings in simulation and criterion group designs to link together research supporting the psychometric basis of MND criteria (Larrabee et al., 2007). The similarity of findings on individual PVTs for simulators and for litigants with definite MND (defined by worse than chance performance) demonstrates that worse-than-chance performance reflects intentional underperformance; in other words, the definite MND subjects performed identically to non-injured persons feigning impairment who are known to be intentionally underperforming because they have been instructed to do so. Additionally, the PVT and neuropsychological test performance of persons with probable MND (defined by multiple PVT failure independent of the particular PVT or neuropsychological test data being compared) did not differ from that of persons with definite MND, establishing the validity of the probable MND criteria. Last, the paper by Bianchini, Curtis, and Greve (2006), showing a dose-effect relationship between PVT failure and amount of external incentive, supports that intent is causally related to PVT failure.

In closing, the science behind measures of performance and symptom validity is rigorous, well developed, replicable and specifically focused on keeping false positive errors at a minimum. I have also argued for a change in terminology that may reduce some of the confusion in this area, recommending the use of Performance Validity Test (PVT) for measures directed at assessing the validity of a person’s performance, and Symptom Validity Test (SVT) for measures directed at assessing the validity of a person’s symptomatic complaint.

ACKNOWLEDGMENT

This manuscript has not been previously published electronically or in print. Portions of this study were presented as a debate at the 38th Annual International Neuropsychological Society meeting on “Admissibility and Appropriate Use of Symptom Validity Science in Forensic Consulting” in Acapulco, Mexico, February, 2010,

moderated by Paul M. Kaufmann, J.D., Ph.D. Dr. Larrabee is engaged in the full time independent practice of clinical neuropsychology with a primary emphasis in forensic neuropsychology. He is the editor of Assessment of Malingered Neuropsychological Deficits (2007, Oxford University Press), and Forensic Neuropsychology. A Scientific Approach (2nd Edition, 2012, Oxford University Press), and receives royalties from the sale of these books.

REFERENCES

Arnold, G., Boone, K.B., Lu, P., Dean, A., Wen, J., Nitch, S., & McPherson, S. (2005). Sensitivity and specificity of Finger Tapping Test scores for the detection of suspect effort. The Clinical Neuropsychologist, 19, 105–120.

Ashendorf, L., Constantinou, M., & McCaffrey, R.J. (2004). The effect of depression and anxiety on the TOMM in community-dwelling older adults. Archives of Clinical Neuropsychology, 19, 125–130.

Bianchini, K.J., Curtis, K.L., & Greve, K.W. (2006). Compensation and malingering in traumatic brain injury: A dose-response relationship? The Clinical Neuropsychologist, 20, 831–847.

Bianchini, K.J., Greve, K.W., & Glynn, G. (2005). On the diagnosis of malingered pain-related disability: Lessons from cognitive malingering research. Spine Journal, 5, 404–417.

Bigler, E.D., Kaufmann, P.M., & Larrabee, G.J. (2010, February). Admissibility and appropriate use of symptom validity science in forensic consulting. Talk presented at the 38th Annual Meeting of the International Neuropsychological Society, Acapulco, Mexico.

Boone, K.B. (2007). Assessment of feigned cognitive impairment. A neuropsychological perspective. New York: Guilford.

Boone, K.B. (2009). The need for continuous and comprehensive sampling of effort/response bias during neuropsychological examinations. The Clinical Neuropsychologist, 23, 729–741.

Boone, K.B., & Lu, P.H. (2003). Noncredible cognitive performance in the context of severe brain injury. The Clinical Neuropsychologist, 17, 244–254.

Boone, K.B., Lu, P., & Herzberg, D.S. (2002a). The b Test. Manual. Los Angeles, CA: Western Psychological Services.

Boone, K.B., Lu, P., & Herzberg, D.S. (2002b). The Dot Counting Test. Manual. Los Angeles, CA: Western Psychological Services.

Brulot, M.M., Strauss, E., & Spellacy, F. (1997). Validity of the Minnesota Multiphasic Personality Inventory-2 correction factors for use with patients with suspected head injury. The Clinical Neuropsychologist, 11, 391–401.

Etherton, J.L., Bianchini, K.J., Ciota, M.A., & Greve, K.W. (2005). Reliable Digit Span is unaffected by laboratory-induced pain: Implications for clinical use. Assessment, 12, 101–106.

Etherton, J.L., Bianchini, K.J., Greve, K.W., & Ciota, M.A. (2005). Test of memory malingering performance is unaffected by laboratory-induced pain: Implications for clinical use. Archives of Clinical Neuropsychology, 20, 375–384.

Fox, D.D. (2011). Symptom validity test failure indicates invalidity of neuropsychological tests. The Clinical Neuropsychologist, 25, 488–495.

Gervais, R.O., Ben-Porath, Y.S., Wygant, D.B., & Green, P. (2008). Differential sensitivity of the Response Bias Scale (RBS) and MMPI-2 validity scales to memory complaints. The Clinical Neuropsychologist, 22, 1061–1079.

Goodrich-Hunsaker, N.J., & Hopkins, R.O. (2009). Word Memory Test performance in amnesic patients with hippocampal damage. Neuropsychology, 23, 529–534.

Green, P. (2007). The pervasive influence of effort on neuropsychological tests. Physical Medicine and Rehabilitation Clinics of North America, 18, 43–68.

Green, P., Rohling, M.L., Iverson, G.L., & Gervais, R.O. (2003). Relationships between olfactory discrimination and head injury severity. Brain Injury, 17, 479–496.

Green, P., Rohling, M.L., Lees-Haley, P.R., & Allen, L.M. (2001). Effort has a greater effect on test scores than severe brain injury in compensation claimants. Brain Injury, 15, 1045–1060.

Greiffenstein, M.F. (2007). Motor, sensory, and perceptual-motor pseudoabnormalities. In G.J. Larrabee (Ed.). Assessment of malingered neuropsychological deficits (pp. 100–130). New York: Oxford University Press.

Greiffenstein, M.F., & Baker, W.J. (2003). Premorbid Clues? Preinjury scholastic performance and present neuropsychological functioning in late postconcussion syndrome. The Clinical Neuropsychologist, 17, 561–573.

Greiffenstein, M.F., Baker, W.J., & Gola, T. (1996). Motor dysfunction profiles in traumatic brain injury and post-concussion syndrome. Journal of the International Neuropsychological Society, 2, 477–485.

Grimes, D.A., & Schulz, K.F. (2005). Epidemiology 3. Refining clinical diagnosis with likelihood ratios. Lancet, 365, 1500–1505.

Hanninen, T., Reinikainen, K.J., Helkala, E.-L., Koivisto, K., Mykkanen, L., Laakso, M., … Riekkinen, R.J. (1994). Subjective memory complaints and personality traits in normal elderly subjects. Journal of the American Geriatric Society, 42, 1–4.

Heaton, R.K., Miller, S.W., Taylor, M.J., & Grant, I. (2004). Revised comprehensive norms for an expanded Halstead-Reitan Battery: Demographically adjusted neuropsychological norms for African American and Caucasian adults. Professional Manual. Lutz, FL: Psychological Assessment Resources.

Heaton, R.K., Smith, H.H., Jr., Lehman, R.A., & Vogt, A.J. (1978). Prospects for faking believable deficits on neuropsychological testing. Journal of Consulting and Clinical Psychology, 46, 892–900.

Heilbronner, R.L., Sweet, J.J., Morgan, J.E., Larrabee, G.J., Millis, S.R., & Conference Participants (2009). American Academy of Clinical Neuropsychology consensus conference statement on the neuropsychological assessment of effort, response bias, and malingering. The Clinical Neuropsychologist, 23, 1093–1129.

Iverson, G.L., Le Page, J., Koehler, B.E., Shojania, K., & Badii, M. (2007). Test of Memory Malingering (TOMM) scores are not affected by chronic pain or depression in patients with fibromyalgia. The Clinical Neuropsychologist, 21, 532–546.

Larrabee, G.J. (2003a). Detection of malingering using atypical performance patterns on standard neuropsychological tests. The Clinical Neuropsychologist, 17, 410–425.

Larrabee, G.J. (2003b). Detection of symptom exaggeration with the MMPI-2 in litigants with malingered neurocognitive dysfunction. The Clinical Neuropsychologist, 17, 54–68.

Larrabee, G.J. (Ed.) (2007). Assessment of malingered neuropsychological deficits. New York: Oxford.

Larrabee, G.J. (2008). Aggregation across multiple indicators improves the detection of malingering: Relationship to likelihood ratios. The Clinical Neuropsychologist, 22, 666–679.

Larrabee, G.J. (2012). Assessment of malingering. In G.J. Larrabee (Ed.). Forensic neuropsychology: A scientific approach (2nd ed., pp. 116–159). New York: Oxford University Press.

Larrabee, G.J., Greiffenstein, M.F., Greve, K.W., & Bianchini, K.J. (2007). Refining diagnostic criteria for malingering. In G.J. Larrabee (Ed.). Assessment of malingered neuropsychological deficits (pp. 334–371). New York: Oxford.

Larrabee, G.J., & Levin, H.S. (1986). Memory self-ratings and objective test performance in a normal elderly sample. Journal of Clinical and Experimental Neuropsychology, 8, 275–284.

Larrabee, G.J., Millis, S.R., & Meyers, J.E. (2008). Sensitivity to brain dysfunction of the Halstead-Reitan vs. an ability-focused neuropsychological battery. The Clinical Neuropsychologist, 22, 813–825.

Larrabee, G.J., Millis, S.R., & Meyers, J.E. (2009). 40 plus or minus 10, a new magical number: Reply to Russell. The Clinical Neuropsychologist, 23, 746–753.

McCrea, M., Iverson, G.L., McAllister, T.W., Hammeke, T.A., Powell, M.R., Barr, W.B., & Kelly, J.P. (2009). An integrated review of recovery after mild traumatic brain injury (MTBI): Implications for clinical management. The Clinical Neuropsychologist, 23, 1368–1390.

Merten, T., Bossink, L., & Schmand, B. (2007). On the limits of effort testing: Symptom validity tests and severity of neurocog-

Rogers, R. (1988). Researching dissimulation. In R. Rogers (Ed.), Clinical assessment of malingering and deception (pp. 309–327). New York: Guilford Press.

Rohling, M.L., Larrabee, G.J., Greiffenstein, M.F., Ben-Porath, Y.S., Lees-Haley, P., Green, P., & Greve, K.W. (2011). A misleading review of response bias: Comment on McGrath, Mitchell, Kim, and Hough (2010). Psychological Bulletin, 137, 708–712.

Ross, S.R., Millis, S.R., Krukowski, R.A., Putnam, S.H., & Adams, K.M. (2004). Detecting probable malingering on the MMPI-2: An examination of the Fake-Bad Scale in mild head injury. Journal of Clinical and Experimental Neuropsychology, 26, 115–124.

Slick, D.J., Sherman, E.M.S., & Iverson, G.L. (1999). Diagnostic criteria for malingered neurocognitive dysfunction: Proposed standards for clinical practice and research. The Clinical Neuropsychologist, 13, 545–561.

Straus, S.E., Richardson, W.S., Glasziou, P., & Haynes, R.B. (2005).
Evidence-based medicine: How to practice and teach nitive symptoms in nonlitigant patients. Journal of Clinical and EBM (3rd ed.). New York: Elsevier Churchill Livingstone. Experimental Neuropsychology, 29, 308–318. Suhr, J.A., & Gunstad, J. (2005). Further exploration of the effect of Meyers, J.E., & Volbrecht, M.E. (2003). A validation of multiple ‘‘diagnosis threat’’ on cognitive performance in individuals with malingering detection methods in a large clinical sample. mild head injury. Journal of the International Neuropsychologi- Archives of Clinical Neuropsychology, 18, 261–276. cal Society, 11, 23–29. Mittenberg, W., Azrin, R., Millsaps, C., & Heilbronner, R. (1993). Tombaugh, T.N. (1996). TOMM. Test of Memory Malingering. Identification of malingered head injury on the Wechsler Memory New York: Multi-Health Systems. Scale-Revised. Psychological Assessment, 5, 34–40. Vickery, C.D., Berry, D.T.R., Inman, T.H., Harris, M.J., & Orey, Mittenberg, W., Rotholc, A., Russell, E., & Heilbronner, R. (1996). S.A. (2001). Detection of inadequate effort on neuropsychologi- Identification of malingered head injury on the Halstead- cal testing: A meta-analytic review of selected procedures. Reitan Battery. Archives of Clinical Neuropsychology, 11, Archives of Clinical Neuropsychology, 16, 45–73. 271–281. Victor, T.L., Boone, K.B., Serpa, J.G., Buehler, J., & Ziegler, E.A. Morgan, J.E., & Sweet, J.J. (Eds.), (2009) Neuropsychology of (2009). Interpreting the meaning of multiple symptom validity malingering casebook. New York: Psychology Press. test failure. The Clinical Neuropsychologist, 23, 297–313. Rees, L.M., Tombaugh, T.N., & Boulay, L. (2001). Depression Williams, J.M., Little, M.M., Scates, S., & Blockman, N. (1987). and the Test of Memory Malingering. Archives of Clinical Memory complaints and abilities among depressed older adults. Neuropsychology, 16, 501–506. Journal of Consulting and Clinical Psychology, 55, 595–598. 
doi:10.1017/S1355617712000392

DIALOGUE RESPONSE

Response to Bigler

Glenn J. Larrabee

Bigler (this issue) and I apparently are in agreement about the importance of symptom validity testing, and my recommendation to adopt a new terminology of “performance validity” to address the validity of performance on measures of ability, and “symptom validity” to address the validity of symptom report on measures such as the MMPI-2. We appear to differ on issues related to false positives and the rigor of performance and symptom validity research designs.

The study by Locke, Smigielski, Powell, and Stevens (2008) is cited by Bigler as demonstrating potential false positive errors due to TOMM scores falling in a “near miss” zone just below cutoff. This interpretation suggests a continuum of performance. Review of Bigler’s Figure 1 and Locke et al.’s Table 2 shows that the frequency distribution of TOMM scores does not, however, reflect a continuum but shows two discrete distributions: (1) a sample of 68 ranging from 45 to 50 (mean = 49.31, SD = 1.16) and (2) a sample of 19 ranging from 22 to 44 (mean = 35.11, SD = 6.55) [note Bigler interprets two distributions below 45, but the sample size is too small to establish this presence]. Clearly,

Locke et al. did not view TOMM failures as false positives in their sample. Although Locke et al. found that performance on neurocognitive testing was significantly lower in this group, TOMM failure was not related to severity of brain injury, depression or anxiety; only disability status predicted TOMM failure. They concluded: “This study suggests that reduced effort occurs outside forensic settings, is related to neuropsychometric performance, and urges further research into effort across various settings” (p. 273).

As previously noted in my primary review, several factors minimize the significance of false positive errors. First, scores reflecting invalid performance are atypical in pattern or degree for bona fide neurological disorder. Second, cutoff scores are typically set to keep false positive errors at or below 10%. Third, investigators are encouraged to specify the characteristics of bona fide clinical patients who fail PVTs representing “false positives,” to enhance the clinical use of the PVT in the individual case. Fourth, appropriate use of PVTs in the individual case requires the presence of multiple abnormal scores on independent PVTs, occurring in the context of external incentive, with no compelling neurologic, psychiatric or developmental explanation for PVT failure, before one can conclude the presence of malingering (cf., Slick, Sherman, & Iverson, 1999).

Bigler also criticizes the research in this area as being, at best, Class III level research (American Academy of Neurology, AAN, Edlund, Gronseth, So, & Franklin, 2004), noting the research is typically retrospective, using samples of convenience, with study authors not blind to group assignment. Review of the AAN guidelines, however, shows that retrospective investigations using case control designs can meet Class II standards (p. 20). Moreover, there is no requirement for masked or independent assessment, if the reference standards for presence of disorder and the diagnostic tests are objective (italics added). The majority of studies cited in recent reviews (Boone, 2007; Larrabee, 2007; Morgan & Sweet, 2009) follow case control designs contrasting either non-injured simulators or criterion/known-groups of definite or probable malingerers, classified using objective test criteria from Slick et al. (1999), with groups of clinical patients with significant neurologic disorder (usually moderate/severe TBI) and/or psychiatric disorder (i.e., major depressive disorder). As such, these investigations would meet AAN Level II criteria.

In my earlier review in this dialog, I described a high degree of reproducibility of results in performance and symptom validity research. Additionally, the effect sizes generated by this research are uniformly large, for example, d = −1.34 for Reliable Digit Span (Jasinski, Berry, Shandera, & Clark, 2011); d = .96 for MMPI-2 FBS (Nelson, Sweet, & Demakis, 2006), replicated at d = .95 incorporating 43 new studies (Nelson, Hoelzle, Sweet, Arbisi, & Demakis, 2010); d = 2.02 for the two-alternative forced choice Digit Memory Test (Vickery, Berry, Inman, Harris, & Orey, 2001). These effect sizes exceed those reported for several psychological and medical tests (Meyer et al., 2001). Effect sizes of this magnitude are striking, considering that the discrimination is between feigned performance and legitimate neuropsychological abnormalities, rather than between feigned performance and normal performance. Reproducible results and large effect sizes cannot occur without rigorous experimental design.

REFERENCES

Bigler, E.D. (2012). Symptom validity testing, effort, and neuropsychological assessment. Journal of the International Neuropsychological Society, 18, 000–000.

Boone, K.B. (2007). Assessment of feigned cognitive impairment. A neuropsychological perspective. New York: Guilford.

Edlund, W., Gronseth, G., So, Y., & Franklin, G. (2004). Clinical practice guideline process manual: For the Quality Standards Subcommittee (QSS) and the Therapeutics and Technology Assessment Subcommittee (TTA). St. Paul: American Academy of Neurology.

Jasinski, L.J., Berry, D.T.R., Shandera, A.L., & Clark, J.A. (2011). Use of the Wechsler Adult Intelligence Scale Digit Span subtest for malingering detection: A meta-analytic review. Journal of Clinical and Experimental Neuropsychology, 33, 300–314.

Larrabee, G.J. (Ed.) (2007). Assessment of malingered neuropsychological deficits. New York: Oxford.

Locke, D.E.C., Smigielski, J.S., Powell, M.R., & Stevens, S.R. (2008). Effort issues in post-acute outpatient acquired brain injury rehabilitation seekers. Neurorehabilitation, 23, 273–281.

Meyer, G.J., Finn, S.E., Eyde, L.D., Kay, G.G., Moreland, K.L., Dies, R.R., … Reed, G.M. (2001). Psychological testing and psychological assessment. A review of evidence and issues. American Psychologist, 56, 128–165.

Morgan, J.E., & Sweet, J.J. (Eds.) (2009). Neuropsychology of malingering casebook. New York: Psychology Press.

Nelson, N.W., Hoelzle, J.B., Sweet, J.J., Arbisi, P.A., & Demakis, G.J. (2010). Updated meta-analysis of the MMPI-2 Symptom Validity Scale (FBS): Verified utility in forensic practice. The Clinical Neuropsychologist, 24, 701–724.

Nelson, N.W., Sweet, J.J., & Demakis, G.J. (2006). Meta-analysis of the MMPI-2 Fake Bad Scale: Utility in forensic practice. The Clinical Neuropsychologist, 20, 39–58.

Slick, D.J., Sherman, E.M.S., & Iverson, G.L. (1999). Diagnostic criteria for malingered neurocognitive dysfunction: Proposed standards for clinical practice and research. The Clinical Neuropsychologist, 13, 545–561.

Vickery, C.D., Berry, D.T.R., Inman, T.H., Harris, M.J., & Orey, S.A. (2001). Detection of inadequate effort on neuropsychological testing: A meta-analytic review of selected procedures. Archives of Clinical Neuropsychology, 16, 45–73.

Journal of the International Neuropsychological Society (2012), 18, 1–11.

Copyright © INS. Published by Cambridge University Press, 2012.

doi:10.1017/S1355617712000252

DIALOGUE

Symptom Validity Testing, Effort, and

Neuropsychological Assessment

Erin D. Bigler1,2,3,4

1Department of Psychology, Brigham Young University, Provo, Utah

2Neuroscience Center, Brigham Young University, Provo, Utah

3Department of Psychiatry, University of Utah, Salt Lake City, Utah

4The Brain Institute of Utah, University of Utah, Salt Lake City, Utah

(RECEIVED November 14, 2011; FINAL REVISION February 4, 2012; ACCEPTED February 8, 2012)

Abstract

Symptom validity testing (SVT) has become a major theme of contemporary neuropsychological research. However,

many issues about the meaning and interpretation of SVT findings will require the best in research design and methods

to more precisely characterize what SVT tasks measure and how SVT test findings are to be used in neuropsychological

assessment. Major clinical and research issues are overviewed including the use of the ‘‘effort’’ term to connote validity

of SVT performance, the use of cut-scores, the absence of lesion-localization studies in SVT research, neuropsychiatric

status and SVT performance and the rigor of SVT research designs. Case studies that demonstrate critical issues involving

SVT interpretation are presented. (JINS, 2012, 18, 1–11)

Keywords: Symptom validity testing, SVT, Effort, Response bias, Validity

Correspondence and reprint requests to: Erin D. Bigler, Department of Psychology and Neuroscience Center, 1001 SWKT, Brigham Young University, Provo, UT 84602. E-mail: erin_bigler@byu.edu

Symptom validity testing (SVT) has emerged as a major theme of neuropsychological research and clinical practice. Neuropsychological assessment methods and procedures strive for and require the most valid and reliable techniques to assess cognitive and neurobehavioral functioning, to make neuropsychological inferences and diagnostic conclusions. From the beginnings of neuropsychology as a discipline, issues of test reliability and validity have always been a concern (Filskov & Boll, 1981; Lezak, 1976). However, neuropsychology’s initial focus was mostly on test development, standardization and the psychometric properties of a test and not independent measures of test validity. A variety of SVT methods are now available (Larrabee, 2007). While contemporary neuropsychological test development has begun to more directly incorporate SVT indicators embedded within the primary neuropsychological instrument (Bender, Martin Garcia, & Barr, 2010; Miller et al., 2011; Powell, Locke, Smigielski, & McCrea, 2011), traditional neuropsychological test construction and the vast majority of standardized tests currently in use do not. Current practice has been to use what are referred to as “stand-alone” SVT measures that are separately administered during the neuropsychological examination (Sollman & Berry, 2011). SVT performance is then used to infer validity or lack thereof for the battery of all neuropsychological tests administered during that test session.

The growth in SVT research has been exponential. Using the search words “symptom validity testing” in a National Library of Medicine literature search yielded only one study before 1980, five articles during the 1980s, but hundreds thereafter. SVT research of the last decade has led to important practice conclusions as follows: (1) professional societies endorse SVT use (Bush et al., 2005; Heilbronner, Sweet, Morgan, Larrabee, & Millis, 2009), (2) passing a SVT infers valid performance, (3) SVT measures have good face validity as cognitive measures, all have components that are easy for healthy controls and even for the majority of neurological patients to pass with few or no errors, and (4) groups that perform below established cut-score levels on a SVT generally exhibit lower neuropsychological test scores. This last observation has been interpreted as demonstrating that SVT performance taps a dimension of effort to perform, where SVT “failure” reflects non-neurological factors that reduce neuropsychological test scores and invalidates findings

(West, Curtis, Greve, & Bianchini, 2011). On forced-choice

SVTs, the statistical improbability of below chance performance

implicates malingering (the examinee knows the correct answer

but selects the incorrect to feign impairment).
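The statistical logic behind below-chance responding can be illustrated with a short sketch. It assumes a two-alternative forced-choice format with 50 items (as on a TOMM trial) and independent guessing with probability .5 per item; the function names and the .05 threshold are hypothetical choices made for illustration, not part of any published scoring procedure.

```python
from math import comb


def prob_score_at_or_below(score: int, n_items: int = 50, p_guess: float = 0.5) -> float:
    """Return P(X <= score) for X ~ Binomial(n_items, p_guess), i.e. pure guessing."""
    if not 0 <= score <= n_items:
        raise ValueError("score must lie between 0 and n_items")
    # Sum the binomial probability mass from 0 correct up to the observed score.
    return sum(
        comb(n_items, k) * p_guess**k * (1 - p_guess) ** (n_items - k)
        for k in range(score + 1)
    )


def is_below_chance(score: int, n_items: int = 50, alpha: float = 0.05) -> bool:
    """Flag scores that guessing alone would rarely produce.

    alpha is an assumed illustrative threshold, not a clinical standard.
    """
    return prob_score_at_or_below(score, n_items) < alpha


if __name__ == "__main__":
    for observed in (18, 25, 44):
        print(observed, prob_score_at_or_below(observed), is_below_chance(observed))
```

Under these assumptions, only scores well under half of the items correct are flagged. A score such as 44/50, just under a cut-point of 45, is far above chance and is not flagged, so a below-chance criterion and a cut-score criterion answer different questions.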

A quote from Millis (2009) captures the importance of

why neuropsychology must address the validity of test

performance:

All cognitive tests require that patients give their best effort

(italics added) when completing them. Furthermore, cogni-

tive tests do not directly measure cognition: they measure

behavior from which we make inferences about cognition.

People are able to consciously alter or modify their behavior,

including their behavior when performing cognitive tests.

Ostensibly poor or ‘‘impaired’’ test scores will be obtained

if an examinee withholds effort (e.g., reacting slowly to

reaction time tests). There are many reasons why people may

fail to give best effort on cognitive testing: financial com-

pensation for personal injury; disability payments; avoiding

or escaping formal duty or responsibilities (e.g., prison,

military, or public service, or family support payments or

other financial obligations); or psychosocial reinforcement

for assuming the sick role (Slick, Sherman, & Iverson, 1999).

‘‘… Clinical observation alone cannot reliably differentiate

examinees giving best effort from those who are not.’’ (Millis

& Volinsky, 2001, p. 2409)

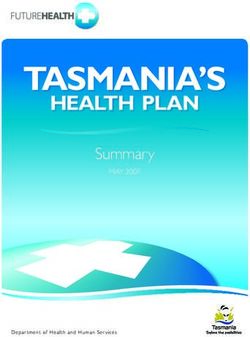

Fig. 1. The distribution of Test of Memory Malingering (TOMM) scores that were above (green) the cut-point of 45 compared to those who performed below (blue-red). Note the bi-modal distribution of those who failed, compared to the peaked performance of 50/50 correct by those who pass. Below chance responding is the most accepted SVT feature indicative of malingering. As indicated by the shading of blue coloration emerging into red, as performance approaches chance, that likely reflects considerable likelihood of malingering. However, recalling that all of these patients had some form of ABI, those in blue, are much closer to the cut-point, raising the question of whether their neurological condition may contribute to their SVT performance.

This short review examines key SVT concepts and the “effort” term as used in neuropsychology. “Effort” seems to capture a clinical descriptive of patient test performance that, at first blush, seems straightforward enough. However, effort also has neurobiological underpinnings, a perspective often overlooked in SVT research and clinical application. Furthermore, does the term effort suggest intention, such as “genuine effort” – the patient is trying their best? Or if maximum “effort” is not being applied to test performance or exhibited by the patient, when does it reflect performance that may not be trustworthy? What is meant by effort?

“EFFORT” – ITS MULTIPLE MEANINGS

In the SVT literature when the “effort” term is linked with other nouns, verbs, and/or adjectives such statements appear to infer or explain a patient’s mental state, including motivation. For example it is common to see commentaries in the SVT literature indicating something about “cognitive effort,” “mental effort,” “insufficient effort,” “poor effort,” “invalid effort,” or even “faked effort.” Some of these terms suggest that neuropsychological techniques, and in particular the SVT measure itself, are capable of inferring intent? Can they (see discussion by Dressing, Widder, & Foerster, 2010)? There are additional terms often used to describe SVT findings including response bias, disingenuous, dissimulation, non-credible, malingered, or non- or sub-optimal, further complicating SVT nomenclature. There is no agreed upon consensus definition within neuropsychology of what effort means.

Below Cut-Score SVT Performance and Neuropsychological Test Findings: THE ISSUE

An exemplary SVT study has been done by Locke, Smigielski, Powell, and Stevens (2008). This study is singled out for this review because it was performed at an academic medical center (many SVT studies are based on practitioners’ clinical cases), had institutional review board (IRB) approval (most SVT studies do not), examined non-forensic (no case was in litigation although some cases had already been judged disabled and were receiving compensation), and was based on consecutive clinical referrals (independently diagnosed with some type of acquired brain injury [ABI] before the neuropsychological assessment) with all patients being seen for treatment recommendations and/or actual treatment. Figure 1 shows the distribution of pass/fail SVT scores where 21.8% performed below the SVT cut-point [pass ≥ 45/50 items correct on Trial 2 of the Test of Memory Malingering (TOMM), Tombaugh, 1996], which Locke et al. defined as

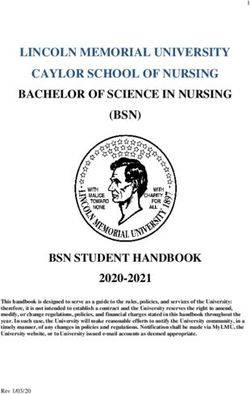

Fig. 2. MRI showing partial temporal lobectomy. Pre-Surgery Test of Memory and Malingering (TOMM): Trial 1 = 50/50; Trial 2 = 50/50; Test of Neuropsychological Malingering (TNM) = 90% correct (see Hall and Pritchard, 1996). Post-Surgery: TOMM Trial 1 = 42/50; Trial 2 = 46/50; Delayed = 44/50; Rey 15-Item = 6/15; Word Memory Test (WMT): IR 67.5%, 30 min delay 75%; Free Recall 5%; Free Recall Delay = 7.5%; Free Recall Long Delay = 0.0.

constituting a group of ABI patients exhibiting ‘‘sub-optimal’’ who fails SVT measures. In both cases, SVT failure is not below

effort. Of greater significance for neuropsychology is that the chance, both patients have distinct, bona fide and unequivocal

subjects in the failed SVT group, while being matched on all structural damage to critical brain regions involved in memory.

other demographic factors, performed worse and statistically Is not their SVT performance a reflection of the underlying

lower across most (but not all) neuropsychological test measures. damage and its disruption of memory performance? If a neuro-

As seen in Figure 1, the modal response is a perfect or near psychologist interpreted these test data as invalid because of

perfect TOMM score of 50/50 correct, presumably reflective ‘‘failed’’ SVT performance, is that not ignoring the obvious and

of valid test performance. The fact that all subjects had ABI committing a Type II error? So when is ‘‘failed’’ SVT perfor-

previously and independently diagnosed before the SVT being mance just a reflection of underlying neuropathology? For

administered, and most performed without error, is a testament example, cognitive impairment associated with Alzheimer’s

to the ease of performing an SVT. For those who scored below disease is sufficient to impair SVT performance resulting in

the cut-point and thereby ‘‘failed’’ the SVT measure, a distinct below recommended cut-score levels and therefore constituting

bi-modal distribution emerges. One group, shown in red (see

Figure 1), hovers around chance (clearly an invalid performance)

but the majority of others who fail, as shown in blue, hover much

closer to, but obviously below, the SVT cut-point.

For the purposes of this review, the distinctly above-

chance but below cut-score performing group will be labeled

the ‘‘Near-Pass’’ SVT group. It is with this group where the

clinician/researcher is confronted with Type I and II statis-

tical errors when attempting to address validity issues of

neuropsychological test findings. All current SVT methods

acknowledge that certain neurological conditions may influ-

ence SVT performance, but few provide much in the way of

guidelines as to how this problem should be addressed.

Type II Error and the Patient With a ‘‘Near-Pass

SVT’’ performance1

Two cases among many seen in our University-based neuro-

psychology program are representative of the problems with

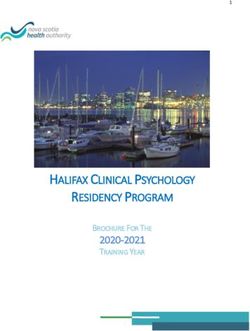

classifying neuropsychological test performance as invalid in Fig. 3. The fluid attenuated inversion recovery (FLAIR) sequence

the ‘‘Near-Pass’’ SVT performing patient. Figure 2 depicts magnetic resonance imaging (MRI) was performed in the sub-acute stage

the post-temporal lobectomy MRI in a patient with intractable demonstrating marked involvement of the right (arrow) medial temporal

epilepsy who underwent a partial right hippocampectomy. lobe region of this patient (see arrow). The patient was attempting to

Pre-surgery he passes all SVT measures but post-surgery return to college and was being evaluated for special assistance

passes some but not others. Figure 3 shows abnormal medial placement. On the Wechsler Memory Scale (WMS-IV) he obtained

temporal damage in a patient with herpes simplex encephalitis the following: WMS-IV: Audio Memory 5 87; Visual Memory 5 87;

Visual Word Memory 5 73; Immediate Memory 5 86; Delayed

1 Memory 5 84. Immediate Recall: 77.5% (Fail); Delayed Recall:

It is impossible to discuss SVT measures without discussing some by

name. This should not be considered as any type of endorsement or critique 72.5% (Fail); Consistency: 65.0% (Fail); Multiple Choice: 50.0%

of the SVT mentioned. SVT inclusion of named tests in this review occurred (Warning); Paired Associate: 50.0% (Warning); Free Recall: 47.5%;

merely on the basis of the research being cited. Test of Memory and Malingering (TOMM): Trial 1: 39, Trial 2: 47.You can also read