Gaussian Process Regression in Logarithmic Time


Adrien Corenflos¹, Zheng Zhao¹, Simo Särkkä¹

¹ Department of Electrical Engineering and Automation, Aalto University, Finland

arXiv:2102.09964v3 [cs.LG] 10 Mar 2021

Abstract determined by either human experts or learned from data.

Additionally for certain datasets and covariance functions

choices, finding such sparse representation might prove dif-

The aim of this article is to present a novel paral- ficult.

lelization method for temporal Gaussian process

(GP) regression problems. The method allows for In the case of temporal or spatio-temporal GPs we can use

solving GP regression problems in logarithmic state-space smoothing methods [Hartikainen and Särkkä,

O(log N) time, where N is the number of time 2010, Särkkä et al., 2013] which scale linearly (O(N)) in

steps. Our approach uses the state-space represen- the number of training and test points. The idea is to reformu-

tation of GPs which in its original form allows late the GPs as solutions to stochastic differential equations

for linear O(N) time GP regression by leveraging (SDEs). By using this representation one can then leverage

the Kalman filtering and smoothing methods. By the Markov property and reduce the regression computa-

using a recently proposed parallelization method tional complexity from cubic to linear via the Kalman filter

for Bayesian filters and smoothers, we are able to and RTS smoother methods [Hartikainen and Särkkä, 2010].

reduce the linear computational complexity of the

Kalman filter and smoother solutions to the GP re-

gression problems into logarithmic span complex-

ity, which transforms into logarithm time complex-

ity when implemented in parallel hardware such as

a graphics processing unit (GPU). We experimen-

tally demonstrate the computational benefits one

simulated and real datasets via our open-source

implementation leveraging the GPflow framework.

1 INTRODUCTION

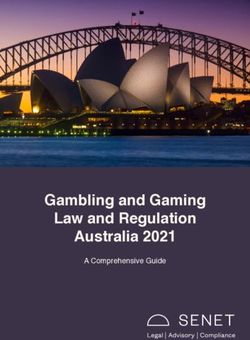

Figure 1: Average run time in seconds of predicting M =

10 000 test points for noisy sinusoidal data with RBF co-

Gaussian processes (GPs) [Rasmussen and Williams, 2006]

variance function for standard GP, SSGP, and PSSGP (ours).

are a family of useful priors used to solve regression and

x-axis is the number of training data points N. We can see

classification problems arising in machine learning. In their

that our method outperforms GP and SSGP by a factor 10.

naive form their complexity scales as O(N 3 ) where N is

the number of training data points, which is problematic

with large datasets. In literature people have proposed using The aim of this article is to develop parallel state-space

sparse GP methods, based on introducing U

N induc- GPs (PSSGPs) methods with which the computational com-

ing points, spectral samples, or basis functions which can plexity (in the sense of parallel span complexity) reduces

reduce the complexity down to O(U 2 N) [Rasmussen and to logarithmic O(log N) in the number of data points, both

Williams, 2006, Quiñonero-Candela and Rasmussen, 2005, at training and inference time (see Fig. 1). The conven-

Lázaro-Gredilla et al., 2010, Hensman et al., 2017, Solin tional Kalman filter and smoother based state-space GPs

and Särkkä, 2020]. However, the positions/shapes and num- (SSGPs) are not easily parallelizable as such and require

bers of inducing points or basis functions must typically be O(N) time also when run in parallel architectures. However,Särkkä and Ángel F. García-Fernández [2021] showed that 2 STATE-SPACE GAUSSIAN PROCESSES

by converting the Kalman filter and smoother into an asso-

ciative operator form it is possible to reduce their time (i.e., In this section, we quickly recall results about the state-

span) complexity to O(log N). This proves highly beneficial space formulation of Gaussian processes and present their

on parallel architectures such as graphic processing units temporal parallelization formulation.

(GPU) or the more recent tensor processing units (TPU)

[Jouppi et al., 2017]. In particular, one may then resort to

2.1 GPS IN STATE-SPACE FORM

modern computational libraries such as TensorFlow to op-

timize the hyperparameters of the GP model via automatic

As shown in Hartikainen and Särkkä [2010], a temporal GP

differentiation at a O(log N) cost.

regression problem of the form

As shown in [Grigorievskiy et al., 2017], is also possible to f (t) ∼ GP(0,C(t,t 0 )),

leverage the sparse matrix structure induced by state-spate (1)

representation together with parallel matrix computations to yk = f (tk ) + ek , ek ∼ N (0, σk2 ),

induce parallelism. This approach is related to the stochastic where k = 1, . . . , N, can be converted into a smoothing prob-

partial differential equation (SPDE) methods for Gaussian lem for a nx -dimensional continuous-discrete state-space

Markov fields [Lindgren et al., 2011] where parallel matrix model,

computations also provide computational benefits. How-

dx(t)

ever, although these methods can also reach O(log N) span = G x(t) + L w(t),

complexity in some special cases, they require computa- dt (2)

tions with large (although sparse) matrices – which can be yk = H x(tk ) + ek ,

memory expensive – and the logarithmic span complexity where nx depends on the covariance function at hand, f (t) ,

is hard to guarantee for all the subproblems [Grigorievskiy H x(t), and with initial conditions taken to be at its steady-

et al., 2017]. Other approaches to parallelization of Gaus- state

sian processes include, for example, local approximations

and mixtures of experts [Low et al., 2015, Liu et al., 2018, x(t0 ) ∼ N (0, P∞ ), (3)

Zhang and Williamson, 2019]. where P∞ is the solution of the Lyapunov equation [Brogan,

The contribution of our paper is four-fold: 2011]

0 = G> P∞ + P∞ G + L Q L> . (4)

1. We combine the formulation of state-space GPs with This is especially true in the case of Matérn kernels where

parallel representation of Kalman filters. this representation is exact and available in closed form

[Hartikainen and Särkkä, 2010], while more general station-

ary covariance functions such as the radial basis function

2. We extend the parallel formulation to missing measure- (RBF) can be approximated by a system of the form (2) of

ments for prediction in state-space GPs. increasing dimension up to an arbitrary precision by using

Taylor series or Páde approximants [Hartikainen and Särkkä,

3. We implement parallelized parameter learning via like- 2010, Särkkä and Piché, 2014] in spectral domain. State-

lihood maximization and Hamiltonian Monte Carlo space representations of various other covariance functions

methods on GPU. have been reviewed in Solin [2016] and Särkkä and Solin

[2019].

4. The method has been implemented as an exten- For instance, the state-space formulation for Matérn 3/2

sible library leveraging the GPflow framework, covariance function is

which can be accessed at https://github.com/ √ 0|

!

3 |t − t

EEA-sensors/parallel-gps. CMat. (t,t 0 ) =σ 2 1 +

`

√ ! (5)

In Section 2, we review the main results on the state-space 3 |t − t 0 |

× exp − ,

representation of Gaussian processes. In Section 3 we extend `

the formulation of parallel Kalman filtering and smoothing

to the case when there exist missing observations, which has the state-space representation

2

is necessary for its use in Gaussian processes regression 0 1 0 σ0

G= , L = , P0 = ,

problems. In Section 4 we then describe the end-to-end par- −λ 2 −2 λ 1 λ 2σ 2

0

allel formulation of state space Gaussian processes. Finally √ (6)

in Section 5 we illustrate the benefits and limitations of where λ = 3/`. The spectral density of the corresponding

our approach for prediction and parameters learning on a while noise isq = 4λ 3 σ 2 . The measurement model matrix

toy-model and two real datasets. is H = 1 0 .2.2 GP INFERENCE AS KALMAN FILTERING 3 TEMPORAL PARALLELIZATION OF

AND SMOOTHING KALMAN EQUATIONS
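The Matérn 3/2 representation (6) can be checked numerically. The following is a minimal sketch in plain Python, not the authors' implementation: all function names are hypothetical, the closed-form transition matrix relies on the observation that $(G + \lambda I)^2 = 0$ for the matrix $G$ in (6), and the process noise uses the standard stationary identity $Q = P_\infty - F P_\infty F^\top$, which is an assumption not stated in the text above.

```python
import math

def matern32_state_space(lengthscale, variance):
    """Matrices of Eq. (6) for the Matern 3/2 kernel (state dimension 2)."""
    lam = math.sqrt(3.0) / lengthscale
    G = [[0.0, 1.0], [-lam ** 2, -2.0 * lam]]
    L = [[0.0], [1.0]]
    H = [[1.0, 0.0]]
    P_inf = [[variance, 0.0], [0.0, lam ** 2 * variance]]
    q = 4.0 * lam ** 3 * variance  # spectral density of the white noise
    return G, L, H, P_inf, q

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def axpy(c, A, B):
    """Return c * A + B elementwise."""
    return [[c * a + b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

def lyapunov_residual(G, L, P_inf, q):
    """G P + P G^T + q L L^T, which should vanish for the steady state of Eq. (4)."""
    GP = matmul(G, P_inf)
    drift = axpy(1.0, GP, transpose(GP))  # P is symmetric, so P G^T = (G P)^T
    return axpy(q, matmul(L, transpose(L)), drift)

def transition(G, lam, dt):
    """F = exp(dt G) in closed form, valid because (G + lam I)^2 = 0 here."""
    decay = math.exp(-lam * dt)
    eye = [[1.0, 0.0], [0.0, 1.0]]
    nil = axpy(lam, eye, G)  # nilpotent part G + lam I
    return [[decay * (eye[i][j] + dt * nil[i][j]) for j in range(2)] for i in range(2)]

def process_noise(F, P_inf):
    """Q = P_inf - F P_inf F^T (assumed identity for a stationary model)."""
    return axpy(-1.0, matmul(matmul(F, P_inf), transpose(F)), P_inf)

def kernel_from_state_space(H, F, P_inf):
    """Covariance between f(t + dt) and f(t), i.e. H F P_inf H^T."""
    return matmul(matmul(H, matmul(F, P_inf)), transpose(H))[0][0]

def matern32(tau, lengthscale, variance):
    """Eq. (5) evaluated at lag tau."""
    r = math.sqrt(3.0) * abs(tau) / lengthscale
    return variance * (1.0 + r) * math.exp(-r)

ell, sigma2, dt = 0.7, 2.0, 0.3
G, L, H, P_inf, q = matern32_state_space(ell, sigma2)
F = transition(G, math.sqrt(3.0) / ell, dt)
Q = process_noise(F, P_inf)
residual = lyapunov_residual(G, L, P_inf, q)
```

Under these assumptions, `residual` is zero up to rounding and `kernel_from_state_space(H, F, P_inf)` agrees with `matern32(dt, ell, sigma2)`, which is the sense in which the state-space form reproduces the kernel (5).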

2.2 GP INFERENCE AS KALMAN FILTERING AND SMOOTHING

The continuous-time state-space model (2) can be discretized into a discrete-time linear Gaussian state space model (LGSSM, e.g., [Särkkä and Solin, 2019]) of the form

$$
\begin{aligned}
x_k &= F_{k-1}\,x_{k-1} + q_{k-1}, \\
y_k &= H\,x_k + e_k,
\end{aligned}
\tag{7}
$$

where $q_{k-1} \sim \mathcal{N}(0, Q_{k-1})$ and $e_k \sim \mathcal{N}(0, \sigma_k^2)$. In the above model,

$$
\begin{aligned}
F_{k-1} &= \exp\big((t_k - t_{k-1})\,G\big), \\
Q_{k-1} &= \int_0^{t_k - t_{k-1}} \exp\big((t_k - t_{k-1} - s)\,G\big)\, L\,q\,L^\top \exp\big((t_k - t_{k-1} - s)\,G\big)^\top ds.
\end{aligned}
\tag{8}
$$

The GP regression problem can now be solved by applying Kalman filter and smoother algorithms on the model (7) [Hartikainen and Särkkä, 2010].

2.3 PARAMETER LEARNING IN STATE-SPACE GPS

Additionally, $C(t, t')$ and $\sigma_k^2$ in Equation (1) may depend on hyperparameters $\theta$, written $C(t, t'; \theta)$ and $\sigma_k^2(\theta)$, that need to be learned. To this end one can rely on likelihood-based techniques that require computing the marginal likelihood of the observations $p(y_1, \ldots, y_N \mid \theta)$, which can be done, given the filtering density $p(x_k \mid y_1, \ldots, y_k, \theta) = \mathcal{N}(x_k \mid m_k(\theta), P_k(\theta))$, through the identity

$$
p(y_1, \ldots, y_N \mid \theta) = \prod_{k=1}^{N} \mathcal{N}\big(y_k \mid \mu_k(\theta), V_k(\theta)\big), \tag{9}
$$

where $\mu_k = H\,F_{k-1}\,m_{k-1}$ is the predictive observation mean and $V_k = H\,[F_{k-1} P_{k-1} F_{k-1}^\top + Q_{k-1}]\,H^\top + \sigma_k^2$ is the observation predictive variance, and we have omitted the dependency on $\theta$ for notational simplicity.

For example, we may then compute the maximum likelihood estimate (MLE) by maximizing Equation (9) or equivalently its logarithm. More generally, we can also compute the maximum a posteriori estimate of the parameters $\theta$, if they are considered to have a prior distribution $p(\theta)$, by maximizing instead $p(\theta \mid y_1, \ldots, y_N) \propto p(y_1, \ldots, y_N \mid \theta)\,p(\theta)$. Finally, we can also sample from the posterior distribution $p(\theta \mid y_1, \ldots, y_N)$ using Markov chain Monte Carlo, and in particular, if we have access to its gradient with respect to $\theta$, via Hamiltonian Monte Carlo [Neal, 2011]. Computing these gradients has been made easier through the advent of automatic differentiation libraries such as TensorFlow [Abadi et al., 2015], which we exploit in the experiments of this paper (in Section 5) by extending GPflow [Matthews et al., 2017].

3 TEMPORAL PARALLELIZATION OF KALMAN EQUATIONS

The formulation in terms of a continuous state space model presented in the previous section reduced the computational complexity of inference in compatible GPs to O(N) instead of the cubic cost induced by naive GP methods or, to a lesser extent, by their sparse approximations [Rasmussen and Williams, 2006]. In this section, we show how by using and extending the parallel state-space methodology of Särkkä and García-Fernández [2021] we can reduce the parallel computational complexity (i.e., the span complexity) down to O(log N).

3.1 PARALLEL KALMAN FILTER

In Särkkä and García-Fernández [2021], the authors introduce an equivalent formulation of Kalman filters and smoothers in terms of an associative operator that enables them to leverage distributed implementations of scan (prefix-sum) algorithms such as Blelloch [1989, 1990]. However, their formulation does not consider the possibility of missing observations, which is a critical characteristic of inference in state space GP models when predicting at the test points [Särkkä et al., 2013]. We therefore extend their derivation to take these into account.

The method in Särkkä and García-Fernández [2021] consists in representing the filter in terms of five quantities $A, b, C, \eta, J$, which are first initialized in parallel and then combined using parallel associative scan [Blelloch, 1989, 1990]. In order to cope with the missing observations, the initialization needs to be modified. At the time steps $k$ where we have an observation, we initialize in the same way as in the original method:

$$
\begin{aligned}
A_k &= (I_{n_x} - K_k H)\,F_{k-1}, \\
b_k &= K_k\,y_k, \\
C_k &= (I_{n_x} - K_k H)\,Q_{k-1}, \\
K_k &= Q_{k-1} H^\top S_k^{-1}, \\
S_k &= H\,Q_{k-1} H^\top + R_k,
\end{aligned}
\tag{10}
$$

and for $k = 1$ as

$$
\begin{aligned}
S_1 &= H P_\infty H^\top + R_1, \\
K_1 &= P_\infty H^\top S_1^{-1}, \\
A_1 &= 0, \\
b_1 &= K_1\,y_1, \\
C_1 &= P_\infty - K_1 S_1 K_1^\top.
\end{aligned}
\tag{11}
$$

The parameters of the second term are given as

$$
\begin{aligned}
\eta_k &= F_{k-1}^\top H^\top S_k^{-1}\,y_k, \\
J_k &= F_{k-1}^\top H^\top S_k^{-1} H\,F_{k-1},
\end{aligned}
\tag{12}
$$

for $k = 1, \ldots, N$. In our case we actually have scalar observations and $R_k = \sigma_k^2$, but we have retained the possibility of processing multiple observations at a time step by letting $y_k$ be a vector and $R_k$ a matrix. In the remainder of this article we only consider uni-dimensional observations $y_k$, but the equations above could also be used for multi-dimensional ones, where the observation matrices would only need to be stacked together.

In the case when $k$ corresponds to a missing observation we initialize in a slightly different way. Noticing that $f_k(x_k \mid x_{k-1})$ reduces to the transition distribution $p(x_k \mid x_{k-1}) = \mathcal{N}(x_k \mid F_{k-1} x_{k-1}, Q_{k-1})$ for $k > 1$ and to the (steady-state) initial distribution $p(x_1)$ for $k = 1$, the initialization Equations (10), (11), and (12) reduce to the following equations

$$
\begin{aligned}
A_k &= F_{k-1}, \\
b_k &= 0, \\
C_k &= Q_{k-1}
\end{aligned}
\tag{13}
$$

for $k > 1$, and for $k = 1$ as

$$
\begin{aligned}
A_1 &= 0, \\
b_1 &= 0, \\
C_1 &= P_\infty,
\end{aligned}
\tag{14}
$$

with, for all $k$,

$$
\begin{aligned}
\eta_k &= 0, \\
J_k &= 0.
\end{aligned}
\tag{15}
$$

We prove that these equations indeed recover the filtering distribution in the Supplementary.

When the quantities $A, b, C, \eta, J$ have been initialized they can then be combined using parallel scan, with the associative operator

$$
(A_{ij}, b_{ij}, C_{ij}, \eta_{ij}, J_{ij}) = (A_i, b_i, C_i, \eta_i, J_i) \otimes (A_j, b_j, C_j, \eta_j, J_j)
$$

defined in the same way as in Särkkä and García-Fernández [2021]:

$$
\begin{aligned}
A_{ij} &= A_j (I_{n_x} + C_i J_j)^{-1} A_i, \\
b_{ij} &= A_j (I_{n_x} + C_i J_j)^{-1} (b_i + C_i \eta_j) + b_j, \\
C_{ij} &= A_j (I_{n_x} + C_i J_j)^{-1} C_i A_j^\top + C_j, \\
\eta_{ij} &= A_i^\top (I_{n_x} + J_j C_i)^{-1} (\eta_j - J_j b_i) + \eta_i, \\
J_{ij} &= A_i^\top (I_{n_x} + J_j C_i)^{-1} J_j A_i + J_i.
\end{aligned}
$$

Running a prefix-sum algorithm on the elements above with the filtering operator $\otimes$ then produces a sequence of elements $(A^*_k, b^*_k, C^*_k, \eta^*_k, J^*_k)$, $k = 1, \ldots, N$, of which the terms $x_k \triangleq b^*_k$ and $P_k \triangleq C^*_k$ will then correspond to the filtering mean and covariance at time $k$, respectively.

Proposition 1 (Equivalence of sequential and parallel Kalman filters). For all $k = 1, 2, \ldots, N + M$ the Kalman filter means and covariances are given by $x_k = b^*_k$ and $P_k = C^*_k$, respectively.

The proof of this proposition is a bit lengthy and can therefore be found in the Supplementary material.

Remark 1. The initialization Equations (13), (14), and (15) in the case of missing observations can intuitively be understood as letting the local gain $K_k$ approach zero in Equations (10), (11), and (12), respectively, or more generally as letting the measurement noise variance $R_k$ approach infinity.

3.2 PARALLEL RAUCH–TUNG–STRIEBEL SMOOTHER

The prediction step of the GP then corresponds to running a Kalman smoother (Rauch–Tung–Striebel smoother, see Hartikainen and Särkkä [2010], Särkkä et al. [2013]) on the filtering means and covariances $x_k$ and $P_k$ obtained in parallel in the previous section. We show in the Supplementary that this can be done in parallel using the method proposed in Särkkä and García-Fernández [2021] even when some observations are missing.

Similarly to the parallel filter, we first need to initialize the elements that will be passed to the associative scan algorithm. This can be done in parallel in the following way. For $k < N$ we have:

$$
\begin{aligned}
E_k &= P_k F_k^\top \big(F_k P_k F_k^\top + Q_k\big)^{-1}, \\
g_k &= x_k - E_k (F_k x_k), \\
L_k &= P_k - E_k F_k P_k,
\end{aligned}
$$

and for $k = N$ we have

$$
\begin{aligned}
E_N &= 0, \\
g_N &= x_N, \\
L_N &= P_N.
\end{aligned}
$$

The associative operator corresponding to the smoothing operation,

$$
(E_{ij}, g_{ij}, L_{ij}) = (E_i, g_i, L_i) \otimes (E_j, g_j, L_j),
$$

is then defined as

$$
\begin{aligned}
E_{ij} &= E_i E_j, \\
g_{ij} &= E_i g_j + g_i, \\
L_{ij} &= E_i L_j E_i^\top + L_i.
\end{aligned}
$$

Similarly to the filtering case, running a (now backward) prefix-sum algorithm produces the elements $(E^*_k, g^*_k, L^*_k)$ for $k = 1, \ldots, N$ such that the smoothing means and covariances can be recovered as $m^s_k = g^*_k$ and $P^s_k = L^*_k$, respectively.
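To make the filtering construction of Section 3.1 concrete, the sketch below specializes it to a scalar state ($n_x = 1$, $H = 1$), so that all five quantities are plain numbers. It is an illustration under stated assumptions rather than the authors' implementation: the function names are hypothetical, the exponential (Matérn 1/2) transition used to generate $F_k$ and $Q_k$ is an assumed example model, the scan is a simple Hillis–Steele recursive doubling written sequentially (each round could run in parallel), and $\eta_1 = J_1 = 0$ is assumed for the first element because those two entries never enter the prefix results $b^*_k$, $C^*_k$.

```python
import math

def init_element(first, F, Q, y, R, P_inf):
    """Scalar form of Eqs. (10)-(15); y is None when the observation is missing."""
    prior = P_inf if first else Q      # covariance entering the local update
    A = 0.0 if first else F
    if y is None:                      # missing observation, Eqs. (13)-(15)
        return (A, 0.0, prior, 0.0, 0.0)
    S = prior + R
    K = prior / S
    # Assumption: eta and J of the first element are set to zero (never used).
    eta, J = (0.0, 0.0) if first else (F * y / S, F * F / S)
    return ((1.0 - K) * A, K * y, prior - K * S * K, eta, J)

def combine(ei, ej):
    """Associative filtering operator, with ei the earlier element."""
    Ai, bi, Ci, etai, Ji = ei
    Aj, bj, Cj, etaj, Jj = ej
    d = 1.0 + Ci * Jj
    return (Aj * Ai / d,
            Aj * (bi + Ci * etaj) / d + bj,
            Aj * Ci * Aj / d + Cj,
            Ai * (etaj - Jj * bi) / d + etai,
            Ai * Jj * Ai / d + Ji)

def prefix_scan(elems, op):
    """Inclusive scan by recursive doubling: about log2(len(elems)) rounds."""
    out = list(elems)
    d = 1
    while d < len(out):
        # Every entry of a round depends only on the previous round.
        out = [op(out[k - d], out[k]) if k >= d else out[k] for k in range(len(out))]
        d *= 2
    return out

def kalman_filter(Fs, Qs, ys, R, P_inf):
    """Reference sequential Kalman filter started from N(0, P_inf)."""
    m, P, out = 0.0, P_inf, []
    for k, y in enumerate(ys):
        if k > 0:
            m, P = Fs[k] * m, Fs[k] * P * Fs[k] + Qs[k]
        if y is not None:
            K = P / (P + R)
            m, P = m + K * (y - m), (1.0 - K) * P
        out.append((m, P))
    return out

# Training points carry a value, prediction points are marked as missing (None).
ts = [0.0, 0.3, 0.5, 1.1, 1.2, 2.0, 2.7]
ys = [0.2, None, 0.5, -0.1, None, None, 0.9]
ell, sigma2, R = 1.0, 1.5, 0.1
# Assumed example model: exponential kernel, F = exp(-dt / ell), Q = sigma2 (1 - F^2).
Fs = [1.0] + [math.exp(-(b - a) / ell) for a, b in zip(ts, ts[1:])]
Qs = [0.0] + [sigma2 * (1.0 - F * F) for F in Fs[1:]]

elems = [init_element(k == 0, Fs[k], Qs[k], ys[k], R, sigma2) for k in range(len(ts))]
parallel = [(e[1], e[2]) for e in prefix_scan(elems, combine)]
sequential = kalman_filter(Fs, Qs, ys, R, sigma2)
```

In this sketch `parallel` and `sequential` hold the same filtering means and variances up to rounding, including at the time steps without an observation, which is the statement of Proposition 1 in the scalar case.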

Proposition 2 (Equivalence of sequential and parallel Kalman smoothers). For all $k = 1, 2, \ldots, N + M$ the Kalman smoother means and covariances are given by $m^s_k = g^*_k$ and $P^s_k = L^*_k$, respectively.

The proof of the above proposition can be found in the Supplementary material.

4 TEMPORAL PARALLELIZATION OF GAUSSIAN PROCESSES

A direct consequence of Propositions 1 and 2 is the fact that the parallel and sequential versions of SSGP are equivalent, so that their solutions will recover the same distribution.

In this section, we now provide the details of the steps needed to create the linear Gaussian state-space model (LGSSM) representation of state-space GPs so that the end-to-end algorithm is parallelizable across the time dimension, resulting in a total span complexity of O(log N) in the number of observations N, from training to inference.

4.1 COMPUTATION OF THE STEADY-STATE COVARIANCE

To represent a stationary GP one must start the state-space model SDE from the stationary initial condition [Hartikainen and Särkkä, 2010]. The (steady-state) initial covariance can be computed by solving the Lyapunov equation (4). The complexity of this step is independent of the number of time steps and does not need explicit time-parallelization.

There exist a number of iterative methods for solving this kind of algebraic equation [Hodel et al., 1996]. However, in order to make automatic differentiation efficient, in this work we used the closed-form vectorization solution given in Brogan [2011] (p. 229), which relies on matrix algebra and does not need any explicit looping. This is feasible because we only need to numerically solve the Lyapunov equation for small state dimensions. Furthermore, as this solution only involves matrix inversions and multiplications, it is easily parallelizable on GPU architectures.

4.2 BALANCING OF THE STATE SPACE MODEL

In practice the state space model obtained via discretization in Section 2 might be numerically unstable due to the transition matrix having a poor condition number. This would in turn result in inaccuracies in computing both the GP predictions and the marginal log-likelihood of the observations. To mitigate this issue we resort to balancing [Osborne, 1960] in order to obtain a transition matrix F whose rows and columns have equal norms, therefore obtaining a more stable auxiliary state space model. For any diagonal matrix $D \in \mathbb{R}^{n_x \times n_x}$, the continuous-discrete model

$$
\begin{aligned}
\frac{dz(t)}{dt} &= D^{-1} G\,D\,z(t) + D^{-1} L\,w(t), \\
y_k &= H\,D\,z(t_k) + e_k,
\end{aligned}
\tag{16}
$$

with initial condition given by $z(0) \sim \mathcal{N}(0, D^{-1} P_\infty D^{-1})$ is an equivalent representation of the state-space model (2) with initial condition $x(0) \sim \mathcal{N}(0, P_\infty)$, with $P_\infty$ the solution of Equation (4), in the sense that for all $t$,

$$
f(t) = H\,D\,z(t).
$$

In particular it means that the gradient of $p(y_1, \ldots, y_N \mid \theta)$ with respect to the covariance function and observation model parameters $\theta$ is left unchanged by the choice of scaling matrix $D$. This property allows us to condition our state space representation of the original GP using the scaling matrix $D^*$ defined in Osborne [1960], and to compute the gradient of the marginal log-likelihood of the observations with respect to the GP hyperparameters as if $D^*$ did not depend on F and therefore on the hyperparameters $\theta$. This is critical to obtain a stable gradient while not having to unroll the loop necessary to compute $D^*$.

4.3 CONVERTING GPS INTO DISCRETE-TIME STATE SPACE

In order to use the parallel formulation of Kalman filters and smoothers in Section 3 we need to first form the continuous state space model representation from the initial Gaussian process definition. This operation is independent of the number of measurements and therefore has a complexity of O(1). When it has been formed, we then need to transform it into a discrete-time LGSSM via the equations (8). In practice, the discretization can be implemented using, for example, matrix fractions or various other methods [Axelsson and Gustafsson, 2014, Särkkä and Solin, 2019]. These operations are fully parallelizable across the time dimension and therefore have a span complexity of O(1) when run on a parallel architecture.

It is worth noting that in the learning phase the discretization only needs to happen at the training data points, therefore not involving any further data manipulation. However, when predicting, it is necessary to insert the M requested prediction times at the right location in the training data set in order to be able to run the Kalman filter and smoother routines. When done naively, this operation has a complexity O(M + N) using a merging operation [Cormen et al., 2009]; however, it can be done in parallel with a span complexity of O(log(min(M, N))). In addition, for some GP models, such as Matérn GPs, the discretization can be done offline because it admits closed forms.
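The insertion of the prediction times described in Section 4.3 can be sketched as a merge by ranking. This is only one possible way to expose the parallelism and is not claimed to be the merge used by the authors or to attain the stated span bound: the function name is hypothetical, and the convention that a prediction time equal to a training time is placed after it is an assumption. Each output position is obtained independently with a binary search, and prediction slots carry `None` so that they can be treated as missing observations by the filter.

```python
from bisect import bisect_left, bisect_right

def merge_for_prediction(train_t, train_y, test_t):
    """Merge sorted training and prediction times into one time grid.

    Returns the merged times, the observations (None at prediction times),
    and a mask that is True at prediction times.
    """
    n, m = len(train_t), len(test_t)
    times = [None] * (n + m)
    ys = [None] * (n + m)
    is_test = [False] * (n + m)
    # Every iteration only reads the inputs and writes to its own slot,
    # so all positions could be computed concurrently.
    for i, t in enumerate(train_t):
        pos = i + bisect_left(test_t, t)    # prediction times strictly before t
        times[pos], ys[pos] = t, train_y[i]
    for j, s in enumerate(test_t):
        pos = j + bisect_right(train_t, s)  # training times at or before s
        times[pos], is_test[pos] = s, True
    return times, ys, is_test

train_t = [0.0, 1.0, 2.0, 3.0]
train_y = [0.1, 0.9, -0.2, 0.4]
test_t = [0.5, 1.0, 3.5]
times, ys, is_test = merge_for_prediction(train_t, train_y, test_t)
```

For this input the merged grid is `[0.0, 0.5, 1.0, 1.0, 2.0, 3.0, 3.5]`, with `None` observations at the second, fourth, and last positions; the smoothed values at the masked positions are then the GP predictions.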

PARALLELIZED STATE SPACE GPS

The complexity analysis of the six stages for running the

parallellized state space GPs are in the following.

1. Complexity of forming the continuous state-space

model is O(1) in both work and span complexities.

2. Complexity of discretizing the state-spate model is

O(N) is work complexity and O(1) in span complexity.

3. Complexity of the parallel Kalman filtering and

smoothing operations for training is of O(N) in work

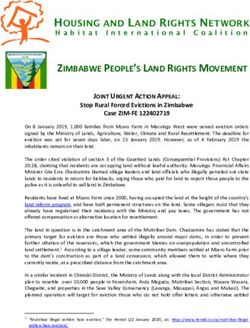

complexity and O(log N) is span complexity. Figure 2: Heatmap of wall time for predicting with GP,

SSGP, and PSSGP. y-axis is the number of training data

4. Complexity of merging the data for the prediction is

points, x-axis the number of test data points.

of work complexity O(N + M) and of span complexity

O(log(min(M, N))).

5.1 TOY MODEL

5. Complexity of the parallel Kalman filtering and

smoothing operations for prediction is of O(N + M) in We first study the behavior of our proposed methodology on

work complexity and O(log(N + M)) is span complex- a simple sinusoidal model given by

ity.

f (t) = sin(π t) + sin(2 π t) + sin(3 π t)

6. Automatic differentiation as an adjoint operation has (17)

the same computational graph as the parallel Kalman yk = f (tk ) + rk ,

filters and smoothers, and therefore has the same com-

with observations and prediction times being equally spaced

plexities as the training step, O(N) and O(log N).

on (0, 4), by increasing the number of training or testing

Putting together the above we can conclude that the total points we can therefore demonstrate the empirical time com-

work and span complexities of doing end-to-end inference plexity (in terms of wall clock or work span) of our proposed

to prediction on parallelized state space GPs are O(N + M) method compared to the standard GP and state space GP.

and O(log(N + M)), respectively.

Prediction We first present the results of running a pre-

diction on test points given some input data for a standard

5 EXPERIMENTS GP, state space GP, and our proposed parallel state space

GP on the sinusoidal data. We have taken the kernels to be

In this section, we illustrate the benefits of our approach successively Matérn32, Matérn52, and RBF (approximated

for inference and prediction on simulated and real datasets. to the 6th order for the state space GPs), corresponding to

Because GPUs are inherently massively parallelized archi- nx = 2, 3, 6 respectively. The training dataset and test dataset

tectures, they are typically not optimized for sequential have sizes ranging from 212 to 215 . As can be seen in Fig-

data processing, exposing a lower clock speed than cost- ures 1 and 2, our proposed method consistently outperforms

comparable CPUs. This makes running the standard state standard GP and SSGP across the chosen range of dataset

space GPs on GPU less attractive than running them on sizes with standard GP eventually running out of memory

CPU, contrarily to standard GPs which can leverage par- for too big datasets.

allelized linear algebra routines offered by modern GPU

architectures. In order to offer a fair comparison between Hyperparameters learning This speed improvement,

our proposed methodology, the standard GPs implementa- while interesting for simple prediction becomes critical

tion, and the standard state space GPs, we have therefore when one needs to learn the model hyperparameters, for

chosen to run the sequential implementation of state space instance via maximum a posteriori (MAP) or in a fully

GP on CPU while we run the standard GP and our proposed Bayesian fashion using Markov chain Monte Carlo methods

parallel state space GP on GPU. We verified empirically such as Hamiltonian Monte Carlo. Indeed, in this instance,

that running the standard state space GP on the same GPU not only does the user need to compute the marginal log-

architecture resulted in a temendous performance loss for it likelihood of the observations, they also need to compute

(∼ 100x slower), this justifies running it on CPU for bench- its gradient with respect to the parameters at hand in a

marking. All the results were obtained on using an AMD® timely manner so as to be able or sample from (or to find

Ryzen threadripper 3960x CPU with 128 GB DDR4 RAM, the maximum of) the posterior distribution of the GP pa-

and an Nvidia® GeForce RTX 3090 GPU with 24GB mem- rameters efficiently. While for small enough datasets it is

ory. efficiently achieved on GPU using automatic differentiationPSSGP is x10 and 43x faster than GP and SSGP when

N = 3200. Interpolating daily then took 0.14s for PSSGP,

while SSGP on CPU and GP on GPU where respectively 23

and 33 times slower.

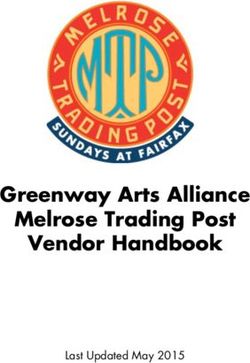

Figure 3: Posterior distribution of the GP parameters for 5.3 CO2 CONCENTRATION DATASET

PSSGP. From left to right: kernel lenghtscale, kernel vari-

ance, and observation model variance. In order to understand the impact of dimensionality of the

SDE we finally consider the Mauna Loa carbon dioxide

provided by frameworks such as GPflow Matthews et al. concentration dataset2 . We selected monthly and weekly

[2017] (relying on Abadi et al. [2015] to eventually com- data from year 1974 to 2021 which contains 3192 training

pute the reverse mode gradients), this is not the case for points. To model the periodic pattern of the data, we use the

longer datasets which arise in real-life. In this section we following composite covariance function

illustrate the benefits of our proposed algorithm to sample

from the posterior distribution of the lengthscale, variance Cco2 (t −t 0 ) = CPer. (t −t 0 )CMat. (t −t 0 ) +CMat. (t −t 0 ). (18)

and observation noise of a GP process with Matérn32 ker-

nel using the same data as generate above. The lengthscale, This is slightly different from the one suggested in [Solin

variance and observation noise where chosen to have priors and Särkkä, 2014] where the authors also add a RBF covari-

given by Normal distributions of means and variances (1, 3), ance to Cco2 , however we did not see any improvement in

(1, 3), and (0.1, 1) respectively. To do this we are sampling RMSE from adding this supplementary degree of freedom

1000 times from our posterior distribution using 10 parallel and therefore removed it so as to make the system more

HMC chains Neal [2011] with step-size 1e − 2, 10 leapfrog easily identifiable. This covariance function cannot be rep-

steps, and 100 burn-in samples. In Figure 3 we show the resented exactly by a finite dimensional SDE due to the

posterior samples obtained in ∼ 10 minutes using PSSGP presence of the quasi-periodic covariance function. How-

on the sine model with 5500 training points (i.e.regression problems on large datasets as illustrated by our Alexander Grigorievskiy, Neil Lawrence, and Simo Särkkä.

regression problems on large datasets as illustrated by our experiments on synthetic and real datasets.

In addition we found that this method does not scale well in the case when the representation of the Gaussian process involves a stochastic differential equation with high dimensionality. More work is therefore needed in this direction to further reduce the complexity of parallel state-space GPs. Moreover, recent works [Yaghoobi et al., 2021] have suggested that this parallelization technique could also be used for non-linear state-space models, which would then make it possible to extend the presented work to classification and deep state-space Gaussian processes [Zhao et al., 2020].

Acknowledgements

The authors would like to thank Academy of Finland (projects 321900 and 321891) as well as the doctoral school of Electrical Engineering, Aalto University for funding the research.

References

Martín Abadi, Ashish Agarwal, Paul Barham, Eugene Brevdo, Zhifeng Chen, Craig Citro, Greg S. Corrado, Andy Davis, Jeffrey Dean, Matthieu Devin, Sanjay Ghemawat, Ian Goodfellow, Andrew Harp, Geoffrey Irving, Michael Isard, Yangqing Jia, Rafal Jozefowicz, Lukasz Kaiser, Manjunath Kudlur, Josh Levenberg, Dandelion Mané, Rajat Monga, Sherry Moore, Derek Murray, Chris Olah, Mike Schuster, Jonathon Shlens, Benoit Steiner, Ilya Sutskever, Kunal Talwar, Paul Tucker, Vincent Vanhoucke, Vijay Vasudevan, Fernanda Viégas, Oriol Vinyals, Pete Warden, Martin Wattenberg, Martin Wicke, Yuan Yu, and Xiaoqiang Zheng. TensorFlow: Large-scale machine learning on heterogeneous systems, 2015. Software available from tensorflow.org.

Patrik Axelsson and Fredrik Gustafsson. Discrete-time solutions to the continuous-time differential Lyapunov equation with applications to Kalman filtering. IEEE Transactions on Automatic Control, 60(3):632–643, 2014.

G. E. Blelloch. Scans as primitive parallel operations. IEEE Transactions on Computers, 38(11):1526–1538, 1989.

G. E. Blelloch. Prefix sums and their applications. Technical Report CMU-CS-90-190, School of Computer Science, Carnegie Mellon University, 1990.

William L. Brogan. Modern Control Theory. Pearson, 3rd edition, 2011.

Thomas H. Cormen, Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein. Introduction to Algorithms. MIT Press, 2009.

Alexander Grigorievskiy, Neil Lawrence, and Simo Särkkä. Parallelizable sparse inverse formulation Gaussian processes (SpInGP). In IEEE International Workshop on Machine Learning for Signal Processing (MLSP), pages 1–6, 2017.

J. Hartikainen and S. Särkkä. Kalman filtering and smoothing solutions to temporal Gaussian process regression models. In Proceedings of the IEEE International Workshop on Machine Learning for Signal Processing (MLSP), pages 379–384, 2010.

James Hensman, Nicolas Durrande, and Arno Solin. Variational Fourier features for Gaussian processes. Journal of Machine Learning Research, 18(151):1–52, 2017.

A. Scottedward Hodel, Bruce Tenison, and Kameshwar R. Poolla. Numerical solution of the Lyapunov equation by approximate power iteration. Linear Algebra and its Applications, 236:205–230, 1996.

N. P. Jouppi, C. Young, N. Patil, D. Patterson, G. Agrawal, R. Bajwa, S. Bates, S. Bhatia, N. Boden, A. Borchers, et al. In-datacenter performance analysis of a tensor processing unit. In Proceedings of the 44th Annual International Symposium on Computer Architecture, pages 1–12, 2017.

Miguel Lázaro-Gredilla, Joaquin Quiñonero-Candela, Carl Edward Rasmussen, and Aníbal R. Figueiras-Vidal. Sparse spectrum Gaussian process regression. Journal of Machine Learning Research, 11:1865–1881, 2010.

Finn Lindgren, Håvard Rue, and Johan Lindström. An explicit link between Gaussian fields and Gaussian Markov random fields: The stochastic partial differential equation approach. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 73(4):423–498, 2011.

Haitao Liu, Jianfei Cai, Yi Wang, and Yew Soon Ong. Generalized robust Bayesian committee machine for large-scale Gaussian process regression. In International Conference on Machine Learning, pages 3131–3140, 2018.

Kian Hsiang Low, Jiangbo Yu, Jie Chen, and Patrick Jaillet. Parallel Gaussian process regression for big data: Low-rank representation meets Markov approximation. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 29, 2015.

Alexander G. de G. Matthews, Mark van der Wilk, Tom Nickson, Keisuke Fujii, Alexis Boukouvalas, Pablo León-Villagrá, Zoubin Ghahramani, and James Hensman. GPflow: A Gaussian process library using TensorFlow. Journal of Machine Learning Research, 18(40):1–6, April 2017.

Radford M. Neal. MCMC using Hamiltonian dynamics. In S. Brooks, A. Gelman, G. L. Jones, and X.-L. Meng, editors, Handbook of Markov Chain Monte Carlo, chapter 5. Chapman & Hall/CRC, 2011.

E. E. Osborne. On pre-conditioning of matrices. Journal of the ACM, 7(4):338–345, October 1960.

Joaquin Quiñonero-Candela and Carl Edward Rasmussen. A unifying view of sparse approximate Gaussian process regression. Journal of Machine Learning Research, 6:1939–1959, 2005.

Carl E. Rasmussen and Christopher K. I. Williams. Gaussian Processes for Machine Learning. MIT Press, 2006.

Simo Särkkä, Arno Solin, and Jouni Hartikainen. Spatiotemporal learning via infinite-dimensional Bayesian filtering and smoothing: A look at Gaussian process regression through Kalman filtering. IEEE Signal Processing Magazine, 30(4):51–61, 2013. doi: 10.1109/MSP.2013.2246292.

Simo Särkkä and Ángel F. García-Fernández. Temporal parallelization of Bayesian smoothers. IEEE Transactions on Automatic Control, 66(1):299–306, 2021.

Simo Särkkä and Robert Piché. On convergence and accuracy of state-space approximations of squared exponential covariance functions. In Proceedings of the IEEE International Workshop on Machine Learning for Signal Processing (MLSP), pages 1–6, 2014.

Simo Särkkä and Arno Solin. Applied Stochastic Differential Equations. Cambridge University Press, 2019.

Arno Solin. Stochastic Differential Equation Methods for Spatio-Temporal Gaussian Process Regression. Doctoral dissertation, Aalto University, Helsinki, Finland, 2016.

Arno Solin and Simo Särkkä. Explicit link between periodic covariance functions and state space models. In Proceedings of the Seventeenth International Conference on Artificial Intelligence and Statistics (AISTATS), volume 33, pages 904–912, 2014.

Arno Solin and Simo Särkkä. Hilbert space methods for reduced-rank Gaussian process regression. Statistics and Computing, 30(2):419–446, 2020.

Fatemeh Yaghoobi, Adrien Corenflos, Sakira Hassan, and Simo Särkkä. Parallel iterated extended and sigma-point Kalman smoothers. To appear in Proceedings of IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 2021.

Michael Minyi Zhang and Sinead A. Williamson. Embarrassingly parallel inference for Gaussian processes. Journal of Machine Learning Research, 20(169):1–26, 2019.

Zheng Zhao, Muhammad Emzir, and Simo Särkkä. Deep state-space Gaussian processes. arXiv preprint arXiv:2008.04733, 2020.