LUDUS: An Optimization Framework to Balance Auto Battler Cards


PRELIMINARY PREPRINT VERSION: DO NOT CITE

The AAAI Digital Library will contain the published

version some time after the conference.


Nathaniel Budijono∗¹, Phoebe Goldman∗², Jack Maloney∗³, Joseph B. Mueller⁴˒⁵, Phillip Walker⁵, Jack Ladwig⁵, Richard G. Freedman⁵

¹ University of Minnesota Twin Cities
² New York University
³ University of Wisconsin-Madison
⁴ University of Minnesota
⁵ SIFT

budij001@umn.edu, phoebe.goldman@nyu.edu, jmaloney3@wisc.edu, jmueller@sift.net, pwalker@sift.net, jladwig@sift.net, rfreedman@sift.net

∗ These authors contributed equally.

Copyright © 2022, Association for the Advancement of Artificial Intelligence (www.aaai.org). All rights reserved.

Abstract

Auto battlers are a recent genre of online deck-building games where players choose and arrange cards that then compete against other players’ cards in fully-automated battles. As in other deck-building games, such as trading card games, designers must balance the cards to permit a wide variety of competitive strategies. We present LUDUS, a framework that combines automated playtesting with global search to optimize parameters for each card that will assist designers in balancing new content. We develop a sampling-based approximation to reduce the playtesting needed during optimization. To guide the global search, we define metrics characterizing the health of the metagame and explore their impacts on the results of the optimization process. Our research focuses on an auto battler game we designed for AI research, but our approach is applicable to other auto battler games.

1 Introduction

Auto battlers are a new and wildly popular genre of online game. Auto Chess was released in January of 2019 (Drodo Studio 2019), and within that same month was regularly seeing 70,000 concurrent players (Tack 2019). By June, Dota Underlords and Teamfight Tactics were among the most popular online games in the world (Grayson 2019). Since then, entries like Hearthstone: Battlegrounds (Blizzard Entertainment 2019), Fire Emblem Heroes: Pawns of Loki (Nintendo Mobile 2020), and Storybook Brawl (Good Luck Games 2021) have further refined the genre.

In an auto battler, a number of players (typically eight) compete to build the best lineup of cards through a number of rounds. Each round consists of a deck-building phase and a battle. In the deck-building phase, each player improves their lineup by selecting cards from a shared pool and arranging their order. In the battle phase, two players’ lineups compete automatically. Players who lose too many of these battles are eliminated. Play proceeds in rounds until all but one player are eliminated. The remaining player wins.

Auto battlers are in many ways similar to deck-building collectible card games like Magic: the Gathering (Wizards of the Coast n.d.b), Pokémon (The Pokémon Company n.d.), and Yu-Gi-Oh! (Konami n.d.). In collectible card games, a battle between two decks is called a game. Because it encompasses and affects multiple games, the deck-building process is called the metagame.

Deck-building games and metagames are often described as being either healthy or unhealthy. The health of a metagame depends on a variety of factors, of which we highlight diversity. Diverse metagames are those where many different decks/lineups coexist with similar overall win rates.

Metagame balance in deck-building games poses a challenge for game designers because a relatively small number of cards are combined to form a large number of possible decks, and these decks are paired into an even larger number of possible match-ups. Magic: the Gathering relies on human playtesting in advance of releasing a set (DeTora 2017), but human playtesting before release has repeatedly failed to identify card designs or sets that lead to unhealthy metagames. Even when it does successfully identify and fix unhealthy metagames, human playtesting requires significant labor and time from a number of skilled players.

In addition, digital card games like Hearthstone collect and analyze data after release from consumer play (Zook 2019). While inexpensive, this approach is insufficient on its own because it requires a significant number of consumers to play in unhealthy metagames in order to identify problematic designs.

Healthy and diverse metagames are desirable because they lead to more varied, and therefore more enjoyable, battles. In a homogeneous metagame, any two battles are relatively similar. This reduced novelty bores players, who may then reduce their involvement with the game and purchase fewer products. Also, unsatisfied players often share their opinions on social media, discouraging new players from joining the game.

For example, Magic: the Gathering’s set Throne of Eldraine was criticized for containing a number of overpowered cards. Decks that contained these powerful cards reliably beat decks without them. High-level players quickly converged on a small number of deck designs that best utilized the powerful Throne of Eldraine cards. On at least one occasion, a tournament was canceled due to lack of interest (Triske 2019). Wizards of the Coast, the company responsible for Magic: the Gathering, banned a total of six cards from Throne of Eldraine in response (Wizards of the Coast n.d.a; Duke 2019, 2020b,a). Even after the bans, high-profile professional players have complained on social media about Throne of Eldraine’s impact on the Magic: the Gathering metagame (Scott-Vargas 2020).

In this paper, we introduce LUDUS, a framework to reduce the cost of playtesting without exposing consumers to unhealthy metagames by automating the balancing process. Section 2 discusses other research related to auto battlers and deck-building metagames. Section 3.1 develops an auto battler game we designed to facilitate artificial intelligence (AI) research and presents an algorithm that efficiently and accurately approximates the average win rates of all the lineups in a metagame. Section 3.2 defines three metrics that evaluate the diversity of a metagame, and we theorize how game designers could define more precise metrics for their own games. Section 3.3 explains how we use a genetic algorithm to balance a metagame. Section 3.4 explores how game designers can use LUDUS and these metrics for insights about their current card designs. Section 4 presents experimental results evaluating the sampling-based approximation described in Section 3.1 and the genetic optimization described in Section 3.3. Section 5 reviews the implications of our experimental results. Section 6 considers possible extensions to our work.

2 Related Works

As the auto battler genre is still new, we were only able to find one other work that specifically addresses the use of AI in their design. Xu et al. (2020) focused on autonomously identifying the best lineups that players can construct given the available cards, game rules, and lineups that the game’s designers expect to be played the most.¹ In a two-step process, their method first simulates play between randomly generated lineups and the designers’ expected lineups to create a training dataset for a neural network; the model learns a mapping between a given lineup and its estimated win rate against the designers’ expected lineups. In the second step, a genetic algorithm searches for lineups that optimize the learned win rate function under various constraints that define lineup construction rules throughout the auto battler game. Constraints account for drafting additional cards between rounds of play, which are not elements of our auto battler game (see Section 3.1 for a description).

¹ Their specific auto battler uses ‘piece’ instead of ‘card.’

Game designers may use the optimized lineups to evaluate whether the cards are balanced and, if not, which cards need revision. Our method instead revises the cards directly, optimizing the viability of the set of possible lineups that players can construct. This alters the initial efforts of the game designers to focus on the rules and some card templates without committing to specific grounded card instances. Because the templates are lifted representations of the actual cards, it is more difficult for designers to identify which lineups are expected to be common in the metagame. However, after our approach provides suggestions for grounded card instances, game designers can apply Xu et al.’s methods for a deeper analysis of the suggested card designs. If the designers have some expected lineups based on the templates alone, then it should be possible to combine our methods. The information available to the game designers would enable them to explore not just which lineups are considered the best, but also how the best lineups change for various card revisions.

Many games that support competitive play over the internet collect data about what and how people are playing. Game designers can take advantage of various data science approaches to find trends in the logged data that inform them about how to balance the game (Nguyen, Chen, and Seif El-Nasr 2015). In the virtual collectible card game Hearthstone, clusters of similar deck compositions implicitly describe archetypes that are currently popular in the metagame. Designers can use this information to decide if some archetypes are too common (implying they might be too powerful) and respond through updates to card descriptions, releasing new cards that counter the overused archetypes, or releasing new cards that support the underused archetypes (Zook 2019). This organizes human-operated playtesting at macro-scale where designers can iterate on their game between updates.

Because human-operated playtesting is expensive resource-wise and rarely exhaustive enough to identify all points of concern, automating the playtesting process can increase the number of games played and discover edge cases humans might not consider. For deck-building games where the players have agency and outcomes are nondeterministic (shuffled decks, random outcomes of effects, etc.), the space of possible deck combinations and game states can be immeasurable. Following in the footsteps of DeepMind’s work on AlphaGo (Silver et al. 2016), Stadia trained a deep learning model through many games of self-play in order to develop a function that could evaluate the quality of a game state for a deck-building game (Kim and Wu 2021). Their automated game-playing agent then competed against itself with designer-constructed decks in order to compare the overall decks’ performance, accounting for the nondeterministic outcomes through many games as statistical samples. A game designer may manually inspect the outcomes of all the matches to determine whether any revisions to the cards are necessary.

Bhatt et al. (2018) investigated the deck-building aspect of Hearthstone with genetic algorithms evolving the decks to optimize defeating a specified opposing deck. Their game-playing agents used a greedy, myopic search algorithm as an experimental control while the evolving decks were the experimental cases. The resulting evolutionary paths yield insights into the diversity of the metagame. In contrast, Zook, Harrison, and Riedl (2019) altered the parameters of Monte Carlo Tree Search to study how different types of players performed with fixed decks in their own collectible card game. Their study revealed balance issues from the perspective of the game’s rules, such as a clear advantage to the player with the first turn. To investigate more specific scenarios, Jaffe et al. (2012) enabled designers to specify restrictions on player parameters and available cards. Their simulator ran multiple games and summarized the results with respect to checking various features of the win percentages and play logs. Chen and Guy (2020) considered both simulating multiple games and training a deep learning model to assess the metagame as they procedurally generated new cards using grammars.

3 Methods

To achieve the goals outlined in the introduction, LUDUS contains an auto battler game with a number of special cards with extra mechanics and a basic ‘vanilla’ card that only engages in standard combat without extra mechanics. The details of these cards and their mechanics are explained below in Section 3.1. The health and attack values of all cards can be adjusted as well as some values pertaining to the extra mechanics (such as how much damage to deal to an opponent’s card when a card dies). Multiple cards of the same type that have different attack, health, or other values can be considered in the same game.

Beyond the auto battler itself, LUDUS also contains an optimization framework to find configurations of available cards that are balanced, fair, and elicit desired play experiences. These criteria are quite subjective, so game designers can plug new metrics into the existing framework as they develop new ways of capturing their goals and preferences describing the metagame. This paper explores a few options as well.

3.1 Playtesting Simulator

To serve as a data source for quantitative analysis, we create a playtesting simulator for an original auto battler game we designed. This simulator is designed for research purposes, allowing the user to create cards with new mechanics and run tournaments between arbitrary lineups.

All cards are equally available to all players, and there are no restrictions on the number of copies of a card allowed in players’ lineups or in the entire game. Some auto battlers have a drafting process in which cards are selected or purchased from a pool, but drafting and/or deck-building take different forms across different games (Blizzard Entertainment 2019; Good Luck Games 2021; Nintendo Mobile 2020). We do not explore variants of these processes in this paper, but we will consider them in future work.

Card Definition

Cards have positive integer statistics including health points, attack, and parameters pertaining to a special mechanic. In our game, such special parameters include the following:

Explosion Damage: When the card dies, it damages each of the opponent’s cards by this many health points.

Heal Amount: Prior to entering combat, the card restores this many health points to its player’s rightmost card.

Attack Growth Per Hit: When the card takes damage, its attack increases by this many points.

Explosion Heal: When the card dies, it heals each of its player’s other cards by this many health points.

Heal Donation Percent: When the card would be healed, it instead restores this many percent of the health points to itself and donates the remaining health points to its player’s other cards evenly.

Armor Points: The card starts with this many armor points. When the card would be damaged, it instead loses an armor point. When the card has zero armor points, it is damaged normally.

Damage Split Percent: When the card would be damaged, it instead receives this many percent of the damage to itself and splits the remaining damage evenly to its player’s other cards.

Middle Age: Prior to entering combat, the card increases its attack by 1 if it has not participated in this many combat phases. After this many combat phases, the card decreases its attack by 1 before entering combat.

Target Age: When the card dies, if it participated in this many combat phases, it deals this many damage points to each of the opponent’s cards. If the card participated in fewer than this many combat phases, it heals each of its player’s other cards by health points equal to the number of combat phases in which the card participated.

Detonation Time: When the card has been healed or damaged this many times, the card and its opponent’s card both die.

Other card mechanics without parameters include copying the attack of the opponent’s cards (morphing enemies) and swapping its health points and attack whenever its attack is greater than its health points (survivalist).

Match Procedure

Gameplay proceeds as follows in the simulator:

1. Each player in a tournament selects an ordered list of cards to be their lineup.
2. Prior to combat, arrange the lineup’s cards left-to-right.
3. Each combat phase pits the leftmost surviving card of each player against each other. The cards take damage equal to their opponent card’s attack. Cards with 0 or less health points die, and surviving cards are rearranged to be the rightmost card of their respective lineups.
4. Repeat the combat phase until one or fewer players has a surviving card or the maximum number of combat phases has been reached. If both or neither player has at least one surviving card, the game is a draw. Otherwise, the player with a surviving card wins.

Win Rate Approximation

We run a round-robin tournament where each lineup plays one match against every other lineup. Unfortunately, for $n$ lineups, this results in $n(n-1)/2 = n^2/2 - n/2$ matches. This quickly becomes prohibitively large to simulate. We developed a sampling-based approximation that runs a subset of the games in order to estimate the win rates of cards and lineups. It randomly partitions the lineups into groups of uniform size and runs round-robin tournaments within these groups. For a group tournament with $k$ groups and $n$ total lineups, this results in an upper bound of $k \cdot (n/k)(n/k - 1)/2 = n^2/(2k) - n/2$ matches in the case where $n$ is divisible by $k$.

3.2 Metrics

Bruiser Attack Versus Grow on Damage Health

Quantitative measures of metagame health are needed to

guide card statistic optimization. We present metrics built

upon the output data of the playtesting simulator.

The playtesting simulation outputs a data vector v, the

lineup payoff vector. Each entry vi ∈ [−1, 1] represents the

average payoff of the lineup at index i during the last round

Attack

of playtesting in the optimization process. A payoff of 1 in-

dicates a win. A payoff of 0 means a tie, and a loss results in

a payoff of -1. The average of these payoffs over the course

of the simulated tournament for a lineup make up the entries

of v.

We then transform v into another vector of length n that

maps a card to the average win rate of the lineups in which

the card appears. This vector w, the card win rate vector, Health

has entries wj ∈ [0, 1], the average win rate of the lineups in

which the card at index j appears. We present three metrics

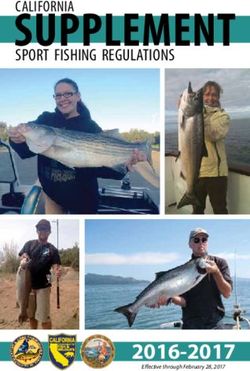

Figure 1: Heatmap showing how changes in the attack of

that evaluate the uniformity of this distribution of win rates

the bruiser card and the health of the grow-on-damage card,

among the cards.

while all other card parameters remain fixed, affect the stan-

Each of the following metrics operate on the card win rate

dard deviation metric.

vector.
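As a rough illustration of how $v$ and $w$ might be produced, the sketch below runs the group tournament of Section 3.1 on a toy game and then averages lineup win rates per card. The function names, the `payoff` callback, the toy `STRENGTH` table, and the choice to define a lineup's win rate as its fraction of matches won are all assumptions made for this example; the text does not specify how the simulator converts payoffs into win rates.

```python
import random
from itertools import combinations, product


def group_tournament(lineups, payoff, group_size, rng):
    """Play a round-robin inside each random group of lineups.

    payoff(a, b) is assumed to return +1 (a wins), 0 (draw) or -1 (a loses).
    Returns (v, win_rate, matches): average payoff per lineup, fraction of
    matches won per lineup (an assumed definition), and total matches played.
    """
    order = list(range(len(lineups)))
    rng.shuffle(order)  # random partition: consecutive slices of a shuffle
    total = [0.0] * len(lineups)
    wins = [0] * len(lineups)
    played = [0] * len(lineups)
    matches = 0
    for start in range(0, len(order), group_size):
        group = order[start:start + group_size]  # last group may be smaller
        for a, b in combinations(group, 2):
            p = payoff(lineups[a], lineups[b])
            total[a] += p
            total[b] -= p
            wins[a] += p > 0
            wins[b] += p < 0
            played[a] += 1
            played[b] += 1
            matches += 1
    v = [t / m if m else 0.0 for t, m in zip(total, played)]
    win_rate = [w / m if m else 0.0 for w, m in zip(wins, played)]
    return v, win_rate, matches


def card_win_rates(lineups, win_rate, cards):
    """w_j: mean win rate of the lineups in which card j appears."""
    w = []
    for card in cards:
        rates = [r for lineup, r in zip(lineups, win_rate) if card in lineup]
        w.append(sum(rates) / len(rates) if rates else 0.0)
    return w


# Toy game (hypothetical): the lineup with the larger total strength wins.
STRENGTH = {"A": 2, "B": 1}


def toy_payoff(a, b):
    diff = sum(STRENGTH[c] for c in a) - sum(STRENGTH[c] for c in b)
    return (diff > 0) - (diff < 0)


TOY_LINEUPS = list(product("AB", repeat=2))  # AA, AB, BA, BB
# A single group holding every lineup is a full round-robin.
V, RATE, MATCHES = group_tournament(
    TOY_LINEUPS, toy_payoff, len(TOY_LINEUPS), random.Random(0)
)
W = card_win_rates(TOY_LINEUPS, RATE, ["A", "B"])
```

Setting `group_size` to the number of lineups recovers the full round-robin, while smaller groups trade accuracy for fewer matches, which is the trade-off examined in Section 4.1.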

Per-card Payoff

$$\mathrm{PCP} = \frac{1}{n} \sum_{j} \left|\mathrm{payoff}(w_j)\right|^{2} \tag{1}$$

Since the average payoff equals 0 when the lineups a card appears in win as many matches as they lose, this is a metric to minimize if we want to punish overly powerful or terrible cards. The squared term disproportionately penalizes outliers.

Standard Deviation Metric

$$\mathrm{SDM} = \sqrt{\frac{1}{n} \sum_{j} \Bigl(w_j - \frac{1}{n} \sum_{j'} w_{j'}\Bigr)^{2}} \tag{2}$$

Minimizing the standard deviation of the win rates may avoid cards with drastically higher or lower win rates compared to other cards.

Entropy Metric

$$\mathrm{EM} = -\sum_{j} \frac{w_j}{\sum_{j'} w_{j'}} \log\!\left(\frac{w_j}{\sum_{j'} w_{j'}}\right) \tag{3}$$

When the entropy of the win rates is maximized, we approach a uniform distribution of win rates over the cards.

Other Metrics

The LUDUS framework is flexible; researchers and designers alike may develop their own metrics corresponding to their own ideas of what constitutes a healthy metagame. For example, a game designer may know from historical data that a certain distribution of win rates over cards or lineups corresponds to a period of celebrated parity in the metagame. The designer could conceivably use the Wasserstein distance or Kullback-Leibler divergence between this historical distribution and another distribution as a metric. Minimizing this metric might maintain similar levels of parity through future iterations of card releases. Metrics could also evaluate other measurable elements like average turns per match.

3.3 Optimization

We use the PyGAD Python library (Gad 2021) for genetic algorithms. This searches for the optimal values of specified parameters, which may include health points, attack, and special parameters, with respect to the chosen metric. For our experiments, we use the standard deviation metric.

We constrain the genetic algorithm to consider integer values between 1 and 10, inclusive. While hyperparameter tuning may have yielded better results, we chose to run the genetic algorithm with 8 solutions and 4 parents mating per population for 32 generations in each experiment.

3.4 Qualitative Analysis

Another way to understand the landscape of the metagame is through more qualitative methods. We look at two different ways of plotting data about the game results that give insights into the health and stability of the metagame.

The first plot sweeps over two variables, typically of the same card, although not necessarily. This gives a visual representation of the metagame sensitivity under variations of a configuration, which can lead to some interesting insights about the relationships between cards.

Take, for example, the plot in Figure 1 of the value of the standard deviation metric given various values for the attack of the bruiser card and the health of the grow-on-damage card. The bruiser card is a vanilla card with a high attack (although variable in this situation) and one health point. The grow-on-damage card has one attack growth per hit. Initially this card has one attack, and in this situation has a variable amount of health. If the grow-on-damage card is able to consistently become a powerful threat, it can become too strong and a necessity for high-level play, leading to an unbalanced metagame.

In the figure, we can see that configurations in the upper left corner, where the attack of the bruiser is greater than the health of the grow-on-damage card, tend to be much more

favorable than the configurations in the lower right corner, where the opposite is true. This evidence supports the intuitive idea that the bruiser’s high attack is a good counter to the grow-on-damage card because the bruiser card can quickly remove the grow-on-damage card before it has had enough interactions to grow its damage to a large number. This kind of information can be quite useful to a game designer, who can see very clearly that the inclusion of a high-damage card can improve the metagame dramatically if the grow-on-damage card is found to be too powerful.

The second plot is a histogram of the lineup win rates after running a tournament amongst all possible lineups. The resulting distribution can have features that may be informative to game designers. For example, examine the difference between the distributions in Figures 2 and 3 from tournaments using two configurations of the same card parameters. Given playtesting input from actual players and historical data from other successful metagames, game designers can interpret the changes new cards have brought and implement changes to the game or metrics for optimization that will improve the experience of human players.

[Plot title: "Round Robin Win Rates"; horizontal axis: Win Rate; vertical axis: Lineups.]

Figure 2: Distribution of win rates before optimization.

[Plot title: "Round Robin Win Rates"; horizontal axis: Win Rate; vertical axis: Lineups.]

Figure 3: Distribution of win rates after optimization.

3.5 Experiment Design

Our experiments share common phases.

1. Build lineups. We generate a set of cards and their associated attack, health points, and special parameters. Depending on the experiment, a subset of these parameters may vary between simulations during the optimization process. From this set of cards, the lineups are permutations of 3 cards from the set, allowing for duplicates.
2. Run tournaments. This will either be a group or a round-robin tournament as described in Section 3.1.
3. Optimize. These tournaments are run within each iteration of our genetic algorithm to produce a metric estimating the best possible health of the metagame with respect to configurations of the card parameters.

4 Results

We explore applications of LUDUS to problems that game designers commonly face.

4.1 Group Versus Round-Robin Tournament

The first experiment investigates the efficacy of our sampling-based approximation in Section 3.1. To do this, we compare the win rates of a complete round-robin tournament (every combination of lineups played) with the distribution of win rates over a 16-fold tournament of randomly partitioned lineups into groups of various sizes, playing every combination of the lineups within those groups.

[Plot title: "Group Versus Round Robin Tournament Errors"; horizontal axis: Group Size (Cards); vertical axis: Absolute Error (Win Rate).]

Figure 4: Absolute value of the difference in win rate between a group tournament of varying size and a full round-robin tournament of 1,728 possible lineups.

Figure 4 compares the win rates of each lineup in the group tournament versus the round-robin tournament, and we can see that at a group size of 256, the mean error becomes negligible (about 0.0382). We use this group size in the other experiments rather than a round-robin of all 1,728 lineups.

4.2 Optimizing Cards without Special Mechanics

The next experiment optimizes a set of four ‘vanilla’ cards with no extra mechanics. We expected that the ‘optimal’ solution in terms of the standard deviation metric would have four identical cards because the interchangeable cards should have zero standard deviation in the win rate. Due to the limited number of generations in our genetic algorithm, we recognized that there was no guarantee it would find this solution. From a game design perspective, a less-optimal solution seems more interesting in this case as a game with only a single card is obviously uninteresting. We wanted to see what ‘almost-optimal’ solutions appear for this setup. This observation also illustrates a case where the standard deviation metric fails to fully capture the qualities we wish to optimize in our game.

The results of this experiment illustrate a different failure case of our standard deviation metric that we did not predict. The genetic algorithm quickly found the solution (5/3), (5/4), (8/3), (8/1), where (a/b) indicates a card with attack a and b health points. This solution had the optimal zero standard deviation. Why? Every attack value in the set (5 or 8) exceeds every health value (at most 4), so any pairing of these cards will result in both cards killing each other simultaneously. Thus, every game ends after one round of combat phases in a tie, and there is no variance in win rates amongst the lineups.

4.3 Optimizing Only Special Mechanics

The third experiment optimizes only the special mechanic parameters of twelve cards. We assigned each card an intuitive value for its attack and health, which a game designer might do for initial context. LUDUS then optimized the parameters for the special mechanics of these partially-designed cards. We were interested to see how it optimized under these constraints and how impactful the special mechanics would be for the overall balance. Table 1 describes the cards used in this experiment. The optimizer improved the win rate standard deviation from 0.0751 to 0.0589.

4.4 Optimizing All Parameters

After fixing the attack and health to focus solely on the special mechanics, the next experiment tunes the attack, health, and special mechanics all at once. To reduce the huge dimensionality of this experiment, we selected only five of the twelve cards to optimize. They are listed in Table 2.

The optimizer improved the win rate standard deviation from 0.0704 to 0.0584. This minimum is approximately the same as the one achieved in the previous experiment, modifying only the values of the special mechanics. One important observation we made is that the optimizer was still making good progress in the final generations of both our experiments. This indicates that these might not be minima, and compute time for additional generations could continue to improve the card designs.

4.5 Optimizing After a Set Rotation

Another common scenario designers of deck-building games encounter is releasing a new set of cards that maintains compatibility, fairness, and competitiveness with the previously released cards. To apply LUDUS to this problem, we took the set of five cards from Section 4.4 with their optimized solution as the first set of cards released. Next, we selected five additional cards, described in Table 3, and optimized them alongside the first set fixed at the solution found previously; this is ten cards with variable parameters for only the second set of cards.

The optimizer improved the win rate standard deviation from 0.0591 to 0.0541. This is a comparatively smaller improvement than the previous experiments, but the initial state was already in much better condition than any of the previous experiments. This is interesting because it could indicate that adding new cards to an already well-balanced set may not disturb the balance as much as one might expect.

5 Discussion

We can see that the LUDUS framework yielded some very useful results from a game design perspective. Given a small, yet representative, set of cards, our methods were able to find more balanced configurations for cards that should create a far more enjoyable auto battler game to play.

We also implemented an approximation method that considerably reduces the computation time necessary to evaluate configurations during optimization, especially for large card sets. This method randomly breaks the tournament into smaller groups that only compete within themselves. Our empirical results indicate that under most circumstances, the approximations are close enough to the complete tournament to produce satisfactory optimization results.

We applied the LUDUS framework to some standard problems game designers face and found promising results. Notably, the algorithm successfully optimized cards under various constraints from partially-designed cards to expansions of previously optimized card sets.

Beyond the immediate results of this paper, we contributed an auto battler game and its associated tools. Given the recent nature of this genre, we believe this is an important contribution that will aid future research in the area.

6 Future Work

The genre of auto battlers is very young, and our research only explores a narrow slice of questions in this new area. We discuss problems that extend our current work.

6.1 Other Auto Battler Features

Unlike our game, some auto battlers have players iteratively build their lineup between battles by purchasing cards from an array of options. While our experiments were concerned with fixed lineups of size 3, LUDUS’s ability to quantify, qualify, and optimize balance for other deck-building rules remains to be assessed. We previously only considered the case where any card may be replaced with any other card to create a new lineup. However, the purchasing value of cards is another dimension of their design; future work should consider comparing lineups that players can build after the same number of battles and purchasing phases.

In many auto battlers, cards have multiple special mechanics that each have variable parameters. Our work only considers cards with a single mechanic, but this should be

selected five additional cards, described in table Table 3, and extended to optimize design for such cards.Card Attack Health Special Parameter (see Section 3.1) Optimized Special parameter

Explode On Death 2 1 Explosion Damage 1

Friendly Vampire 1 3 Heal Amount 3

Grow On Damage 0 5 Attack Growth Per Hit 4

Heal On Death 1 2 Explosion Health 3

Health Donor 1 4 Heal Donation Percent 3

Ignore First Damage 2 1 Armor Points 1

Morph Attack 0 3 Morphing Enemies N/A

Pain Splitter 2 2 Damage Split Percent 7

Rampage 0 4 Middle Age 8

Survivalist 2 2 Survivalist N/A

Threshold 2 2 Target Age 5

Time Bomb 1 8 Detonation Time 7

Table 1: Variable and Fixed Parameters for the Optimize Special Mechanics Experiment

Card                 Optimized Attack  Optimized Health  Optimized Special Mechanic (see Section 3.1)
Survivalist          9                 1                 N/A
Morph Attack         5                 5                 N/A
Ignore First Damage  6                 8                 1
Explode On Death     4                 1                 3
Vanilla              7                 2                 N/A

Table 2: List of Cards in the First Set and Their Optimized Solution
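
As an illustration only, the following sketch shows one way the win rate standard deviation reported for these experiments could be computed from deterministic head-to-head results. The outcome matrix, the function names, the exhaustive round robin over lineups, and the use of the population standard deviation are assumptions made for this example; they are not taken from the LUDUS implementation, which relies on a sampling-based approximation.

```python
from statistics import pstdev


def lineup_win_rates(outcomes):
    """Average win rate of each lineup against every other lineup.

    outcomes[i][j] is 1 if lineup i beats lineup j and 0 otherwise
    (hypothetical input format; diagonal entries are ignored).
    """
    n = len(outcomes)
    return [
        sum(outcomes[i][j] for j in range(n) if j != i) / (n - 1)
        for i in range(n)
    ]


def win_rate_std(outcomes):
    """Population standard deviation of the lineup win rates (assumed metric)."""
    return pstdev(lineup_win_rates(outcomes))


# Three lineups that beat each other in a cycle: every win rate is 0.5.
cyclic = [
    [0, 1, 0],
    [0, 0, 1],
    [1, 0, 0],
]

# Lineup 0 beats both others and lineup 1 beats lineup 2.
dominated = [
    [0, 1, 1],
    [0, 0, 1],
    [0, 0, 0],
]

print(win_rate_std(cyclic))     # 0.0, perfectly even win rates
print(win_rate_std(dominated))  # about 0.408, win rates 1.0, 0.5, 0.0
```

A lower value means the lineups' win rates are closer together, which is the direction in which the optimizer moved (from 0.0704 to 0.0584).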

Card              Optimized Attack  Optimized Health  Optimized Special Mechanic (see Section 3.1)
Friendly Vampire  7                 7                 8
Grow On Damage    2                 6                 9
Heal On Death     3                 9                 1
Rampage           6                 7                 5
Vanilla           7                 6                 N/A

Table 3: List of Cards in the Second Set and Their Optimized Solution

6.2 Simulation Data

All metrics we present are based on the average win rates of lineups collected from the simulator. This does not take into account individual lineup-versus-lineup win rates, which are always 0 or 1 since our auto battler game is deterministic. One could represent these relations via a domination graph with lineups as nodes and a directed edge to the lineup that wins the head-to-head matchup (Goldman et al. 2021), game matrices with each lineup as a strategy, and more. Further investigation is required to determine whether perturbations of card parameters causing a degradation in metagame health correspond to any changes in these representations. There are also game design factors to consider besides win rates, such as game durations and intensity levels.

6.3 Alternative Optimization Methods

Our choice of a genetic algorithm for optimization is somewhat arbitrary. Genetic algorithms can conveniently handle the familiar integer values of card parameters, unlike other optimization methods. Gradient- and Hessian-based methods are difficult to apply to this problem because our parameters are not differentiable. Had they been applicable, they would have solved an unmet need of validating the results.

6.4 Human Playtesting

Human playtesting is still required to determine the health of the metagame, as we have not verified to what extent our metrics correspond to what humans deem a healthy metagame. Designers with a historically healthy metagame can use those parameters to generate reference win rate distributions over lineups and cards using our LUDUS framework. These distributions can then shape new metrics and imply their ideal values for parity. Human playtesting feedback could also motivate other metrics based on the gameplay experience, such as the number of turns. While this work is an example of how designers can readily apply the LUDUS framework, researching human playtesting-validated results marks an important avenue of future work.

Acknowledgments

The authors would like to thank the anonymous reviewers for their helpful feedback, Nathan Ringo for providing computational resources to run the experiments, and everyone at SIFT for their support.

References

Bhatt, A.; Lee, S.; de Mesentier Silva, F.; Watson, C. W.; Togelius, J.; and Hoover, A. K. 2018. Exploring the Hearthstone Deck Space. In Proceedings of the Thirteenth International Conference on the Foundations of Digital Games, FDG ’18, 1–10. Malmö, Sweden: Association for Computing Machinery. ISBN 9781450365710.

Blizzard Entertainment. 2019. Introducing Hearthstone Battlegrounds. https://playhearthstone.com/en-us/news/23156373. Retrieved September 4, 2021.

Chen, T.; and Guy, S. 2020. Chaos Cards: Creating Novel Digital Card Games through Grammatical Content Generation and Meta-Based Card Evaluation. In Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, volume 16, 196–202. Worcester, Massachusetts, USA.

DeTora, M. 2017. Designing Hour of Devastation Cards to Meet FFL Goals. https://magic.wizards.com/en/articles/archive/play-design/designing-hour-devastation-cards-meet-ffl-goals-2017-07-07. Retrieved September 6, 2021.

Drodo Studio. 2019. Auto Chess–Official Website. https://ac.dragonest.com/en. Retrieved September 4, 2021.

Duke, I. 2019. November 18, 2019 Banned and Restricted Announcement. https://magic.wizards.com/en/articles/archive/news/november-18-2019-banned-and-restricted-announcement. Retrieved September 6, 2021.

Duke, I. 2020a. August 3, 2020 Banned and Restricted Announcement. https://magic.wizards.com/en/articles/archive/news/august-8-2020-banned-and-restricted-announcement. Retrieved September 6, 2021.

Duke, I. 2020b. June 1, 2020 Banned and Restricted Announcement. https://magic.wizards.com/en/articles/archive/news/june-1-2020-banned-and-restricted-announcement. Retrieved September 6, 2021.

Gad, A. F. 2021. PyGAD: An Intuitive Genetic Algorithm Python Library. arXiv:2106.06158.

Goldman, P.; Knutson, C. R.; Mahtab, R.; Maloney, J.; Mueller, J. B.; and Freedman, R. G. 2021. Evaluating Gin Rummy Hands Using Opponent Modeling and Myopic Meld Distance. Proceedings of the AAAI Conference on Artificial Intelligence, 35(17): 15510–15517.

Good Luck Games. 2021. Storybook Brawl. https://storybookbrawl.com/. Retrieved September 4, 2021.

Grayson, N. 2019. A Guide to Auto Chess, 2019’s Most Popular New Game Genre. https://kotaku.com/a-guide-to-auto-chess-2019-s-most-popular-new-game-gen-1835820155. Retrieved September 4, 2021.

Jaffe, A.; Miller, A.; Andersen, E.; Liu, Y.-E.; Karlin, A.; and Popović, Z. 2012. Evaluating Competitive Game Balance with Restricted Play. In Proceedings of the Eighth AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, AIIDE’12, 26–31. Stanford, California, USA: AAAI Press.

Kim, J. H.; and Wu, R. 2021. Leveraging Machine Learning for Game Development. https://ai.googleblog.com/2021/03/leveraging-machine-learning-for-game.html.

Konami. n.d. Yu-Gi-Oh! Trading Card Game. https://www.yugioh-card.com/en/. Retrieved September 4, 2021.

Nguyen, T.-H. D.; Chen, Z.; and Seif El-Nasr, M. 2015. Analytics-Based AI Techniques for a Better Gaming Experience. In Rabin, S., ed., Game AI Pro 2: Collected Wisdom of Game AI Professionals, chapter 39, 481–500. New York: A. K. Peters, Ltd. / CRC Press.

Nintendo Mobile. 2020. Fire Emblem Heroes - Loki Presents (Pawns of Loki) [Video]. YouTube. https://www.youtube.com/watch?v=Ldf3Np5lMhs.

Scott-Vargas, L. 2020. Luis Scott-Vargas on Twitter: “Is Throne of Eldraine really legal for another year? It feels like it’s dominated Standard for years already...” / Twitter. https://twitter.com/lsv/status/1339644691378282496?lang=en. Retrieved September 6, 2021.

Silver, D.; Huang, A.; Maddison, C. J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; Dieleman, S.; Grewe, D.; Nham, J.; Kalchbrenner, N.; Sutskever, I.; Lillicrap, T.; Leach, M.; Kavukcuoglu, K.; Graepel, T.; and Hassabis, D. 2016. Mastering the Game of Go with Deep Neural Networks and Tree Search. Nature, 529: 484–489.

Tack, D. 2019. What Is Dota Auto Chess And Why Is Everyone Playing It? https://www.gameinformer.com/2019/01/14/what-is-dota-auto-chess-and-why-is-everyone-playing-it. Retrieved September 8, 2021.

The Pokémon Company. n.d. Pokémon Trading Card Game | Pokemon.com. https://www.pokemon.com/us/pokemon-tcg/. Retrieved September 4, 2021.

Triske. 2019. [Magic: The Gathering] Come All Ye Christians and Learn of a Sinner: This Guy, Oko. https://www.reddit.com/r/HobbyDrama/comments/do8v2j/magic_the_gathering_come_all_ye_christians_and/. Retrieved September 4, 2021.

Wizards of the Coast. n.d.a. Banned and Restricted Lists. https://magic.wizards.com/en/game-info/gameplay/rules-and-formats/banned-restricted. Retrieved September 6, 2021.

Wizards of the Coast. n.d.b. Magic: The Gathering | Official Site for MTG News, Sets, and Events. https://magic.wizards.com/en. Retrieved September 4, 2021.

Xu, J.; Chen, S.; Zhang, L.; and Wang, J. 2020. Lineup Mining and Balance Analysis of Auto Battler. In Proceedings of the IEEE Sixth International Conference on Computer and Communications, 2300–2307. Chengdu, China.

Zook, A. 2019. Better Games through Data: How Blizzard Uses Data Science for Epic Experiences. Recording available at https://youtu.be/_YSYVRdzUkE?t=2636. Last accessed September 4, 2021.

Zook, A.; Harrison, B.; and Riedl, M. O. 2019. Monte-Carlo Tree Search for Simulation-based Strategy Analysis. CoRR, abs/1908.01423: 1–9.