WELES: Policy-driven Runtime Integrity Enforcement of Virtual Machines

Wojciech Ozga Do Le Quoc Christof Fetzer

TU Dresden, Germany TU Dresden, Germany TU Dresden, Germany

IBM Research Europe

arXiv:2104.14862v1 [cs.CR] 30 Apr 2021

May 3, 2021

Abstract

Trust is of paramount concern for tenants to deploy their security-sensitive services in the cloud. The integrity of virtual machines (VMs) in which these services are deployed needs to be ensured even in the presence of powerful adversaries with administrative access to the cloud. Traditional approaches for solving this challenge leverage trusted computing techniques, e.g., vTPM, or hardware CPU extensions, e.g., AMD SEV. But, they are vulnerable to powerful adversaries, or they provide only load time (not runtime) integrity measurements of VMs.

We propose WELES, a protocol allowing tenants to establish and maintain trust in VM runtime integrity of software and its configuration. WELES is transparent to the VM configuration and setup. It performs an implicit attestation of VMs during a secure login and binds the VM integrity state with the secure connection. Our prototype’s evaluation shows that WELES is practical and incurs low performance overhead (≤ 6%).

1 Introduction

Cloud computing paradigm shifts the responsibility of the computing resources management from application owners to cloud providers, allowing application owners (tenants) to focus on their business use cases instead of on hardware management and administration. However, trust is of paramount concern for tenants operating security-sensitive systems because software managing computing resources and its configuration and administration remains out of their control. Tenants have to trust that the cloud provider, its employees, and the infrastructure protect the tenant’s intellectual property as well as the confidentiality and the integrity of the tenant’s data. A malicious employee [8], or an adversary who gets into possession of employee credentials [74, 50], might leverage administrator privileges to read the confidential data by introspecting virtual machine (VM) memory [85], to tamper with computation by subverting the hypervisor [54], or to redirect the tenant to an arbitrary VM under her control by altering a network configuration [91]. We tackle the problem of how to establish trust in a VM executed in the cloud. Specifically, we focus on the integrity of legacy systems executed in a VM.

The existing attestation protocols focus on leveraging trusted hardware to report measurements of the execution environment. In trusted computing [34], the trusted platform module attestation [11] and integrity measurement architecture (IMA) [78] provide a means to enforce and monitor integrity of the software that has been executed since the platform bootstrap [7]. The virtual TPM (vTPM) [19] design extends this concept by introducing a software-based trusted platform module (TPM) that, together with the hardware TPM, provides integrity measurements of the entire software stack, from the firmware, the hypervisor, up to the VM. However, this technique cannot be applied to the cloud because an adversary can tamper with the communication between the vTPM and the VM. For example, by reconfiguring the network, she can mount a man-in-the-middle attack to perform a TPM reset attack [53], compromising the vTPM security guarantees.

A complementary technology to trusted computing,
trusted execution environment (TEE) [46], uses hardware extensions to exclude the administrator and privileged software, i.e., operating system, hypervisor, from the trusted computing base. The Intel software guard extensions (SGX) [29] comes with an attestation protocol that permits remotely verifying application’s integrity and the genuineness of the underlying hardware. However, it is available only to applications executing inside an SGX enclave. Legacy applications executed inside an enclave suffer from performance limitations due to a small amount of protected memory [15]. The SGX adoption in the virtualized environment is further limited because the protected memory is shared among all tenants.

Alternative technologies isolating VMs from the untrusted hypervisor, e.g., AMD SEV [52, 51] or IBM PEF [44], do not have memory limitations. They support running the entire operating system in isolation from the hypervisor while incurring minimal performance overhead [41]. However, their attestation protocol only provides information about the VM integrity at the VM initialization time. It is not sufficient because the loaded operating system might get compromised later, at runtime, through operating system vulnerabilities or misconfiguration [88]. Thus, to verify the runtime (post-initialization) integrity of the guest operating system, one would still need to rely on the vTPM design. But, as already mentioned, it is not enough in the cloud environment.

Importantly, security models of these hardware technologies isolating VM from the hypervisor assume threats caused by running tenants’ OSes in a shared execution environment, i.e., attacks performed by rogue operators, compromised hypervisor, or malicious co-tenants. These technologies do not address the fact that a typical tenant’s OS is a complex mixture of software and configuration with a large attack surface. I.e., the protected application is not, like in the SGX, a single process, but the kernel, userspace services, and applications, which might be compromised while running inside the TEE and thus expose tenant’s computation and data to threats. These technologies assume it is the tenant’s responsibility to protect the OS, but they lack primitives to enable runtime integrity verification and enforcement of guest OSes. This work proposes means to enable such primitives, which are neither provided by the technologies mentioned above nor by the existing cloud offerings.

We present WELES, a VM remote attestation protocol that provides integrity guarantees to legacy systems executed in the cloud. WELES has noteworthy advantages. First, it supports legacy systems with zero-code changes by running them inside VMs on the integrity-enforced execution environment. To do so, it leverages trusted computing to enforce and attest to the hypervisor’s and VM’s integrity. Second, WELES limits the system administrator activities in the host OS using integrity-enforcement mechanisms, while relying on the TEE to protect its own integrity from tampering. Third, it supports tenants connecting from machines not equipped with trusted hardware. Specifically, WELES integrates with the secure shell (SSH) protocol [89]. Login to the VM implicitly performs an attestation of the VM.

Our contributions are as follows:

• We designed a protocol, WELES, attesting to the VM’s runtime integrity (§4).

• We implemented the WELES prototype using state-of-the-art technologies commonly used in the cloud (§5).

• We evaluated it on real-world applications (§6).

2 Threat model

We require that the cloud node is built from software whose source code is certified by a trusted third party [3] or can be reviewed by tenants, e.g., open-source software [13] or proprietary software accessible under non-disclosure agreement. Specifically, such software is typically considered safe and trusted when (i) it originates from trusted places like the official Linux git repository; (ii) it passes security analysis like fuzzing [90]; (iii) it is implemented using memory safe languages, like Rust; (iv) it has been formally proven, like seL4 [56] or EverCrypt [72]; (v) it was compiled with memory corruption mitigations, e.g., position-independent executables with stack-smashing protection.

Our goal is to provide tenants with a runtime integrity attestation protocol that ensures that the cloud node (i.e., host OS, hypervisor) and the VM (guest OS, tenant’s legacy application) run only expected software in the expected configuration. We distinguish between an internal and an external adversary, both without capabilities of mounting physical and hardware attacks (e.g., [87]). This is a reasonable assumption since cloud providers control and limit physical access to their data centers.

An internal adversary, such as a malicious administrator or an adversary who successfully extracted administrator’s credentials [74, 50], aims to tamper with a hypervisor configuration or with a VM deployment to compromise the integrity of the tenant’s legacy application. She has remote administrative access to the host machine that allows her to configure, install, and execute software. The internal adversary controls the network, which allows her to insert, alter, and drop network packets.

An external adversary resides outside the cloud. Her goal is to compromise the security-sensitive application’s integrity. She can exploit a guest OS misconfiguration or use social engineering to connect to the tenant’s VM remotely. Then, she runs dedicated software, e.g., software debugger or custom kernel, to modify the legacy application’s behavior.

We consider the TPM, the CPU, and their hardware features trusted. We rely on the soundness of cryptographic primitives used by software and hardware components. We treat software-based side-channel attacks (e.g., [58]) as orthogonal to this work because of (i) the existence of countermeasures (e.g., [67]) whose presence is verifiable as part of the WELES protocol, (ii) the possibility of provisioning a dedicated (not shared) machine in the cloud.

3 Background and Problem Statement

Load-time integrity enforcement. A cloud node is a computer where multiple tenants run their VMs in parallel on top of the same computing resources. VMs are managed by a hypervisor, a privileged layer of software providing access to physical resources and isolating VMs from each other. Since the VM’s security depends on the hypervisor, it is essential to ensure that the correct hypervisor controls the VM.

The trusted computing [34] provides hardware and software technologies to verify the hypervisor’s integrity. In particular, the dynamic root of trust for measurements (DRTM) [81] is a mechanism available in modern CPUs that establishes a trusted environment in which a hypervisor is securely measured and loaded. The hypervisor’s integrity measurements are stored inside the hardware TPM chip in dedicated memory regions called platform configuration registers (PCRs) [11]. PCRs are tamper-resistant. They cannot be written directly but only extended with a new value using a cryptographic hash function: PCR_extend = hash(PCR_old_value || data_to_extend).

The TPM attestation protocol [40] defines how to read a report certifying the PCRs values. The report is signed using a cryptographic key derived from the endorsement key, which is an asymmetric key embedded in the TPM chip at the manufacturing time. The TPM also stores a certificate, signed by a manufacturer, containing the endorsement key’s public part. Consequently, it is possible to check that a genuine TPM chip produced the report because the report’s signature is verifiable using the public key read from the certificate.

Runtime integrity enforcement. The administrator has privileged access to the machine with complete control over the network configuration, with permissions to install, start, and stop applications. These privileges permit him to trick the DRTM attestation process because the hypervisor’s integrity is measured just once when the hypervisor is loaded to the memory. The TPM report certifies this state until the next DRTM launch, i.e., the next computer boot. Hence, after the hypervisor has been measured, an adversary can compromise it by installing an arbitrary hypervisor [75] or downgrading it to a vulnerable version without being detected.

Integrity measurement architecture (IMA) [7, 78, 43] allows for mitigation of the threat mentioned above. Being part of the measured kernel, IMA implements an integrity-enforcement mechanism [43], allowing for loading only digitally signed software and configuration. Consequently, signing only software required to manage VMs allows for limiting activities carried out by an administrator on the host machine. A load of a legitimate kernel with enabled IMA and input-output memory management unit is ensured by DRTM, and it is attestable via the TPM attestation protocol.

Integrity auditing. IMA provides information on what software has been installed or launched since the kernel loading, what is the system configuration, and whether the system configuration has changed. IMA provides a tamper-proof history of collected measurements in a dedicated file called IMA log. The tamper-proof property is maintained because IMA extends to the PCR the measurements of every executable, configuration file, script, and
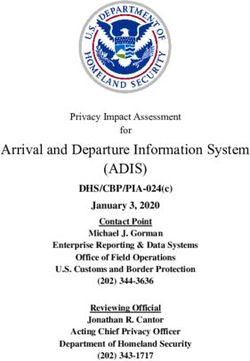

3a) modifies network ! b) hijacks the vTPM! c) proxy to a vTPM!

packages routing communication via a legitimate VM

Although the TLS helps protect the communication’s

integrity, lack of authentication between the vTPM and the

T T T

hypervisor still enables an adversary to fully control the

communication by placing a proxy in front of the vTPM.

TPM TPM TPM

driver driver driver A proxy

In more detail, an adversary can configure the hypervisor

hypervisor hypervisor hypervisor in a way it communicates with vTPM via an intermedi-

(virtual machine monitor) (virtual machine monitor) (virtual machine monitor)

TPM reset, ary software, which intercepts the communication (Fig-

A A replay of

ure 1b). She can then drop arbitrary measurements or per-

arbitrary

! measurements

form the TPM reset attack [53], thus compromising the

vTPMvm1 vTPMvm2 vTPM vTPMvm1 vTPMvm2 vTPM security guarantees.

host OS host OS host OS

tenant! malware or! To mitigate the attack, the vTPM must ensure the re-

- legitimate!

VM - compromised

VM

! T -

(verifier) A - misconfiguration

mote peer’s integrity (is it the correct hypervisor?) and

Figure 1: An adversary with root access to the hypervisor can

violate the security guarantees promised by the vTPM [19] de-

its locality (is the hypervisor running on the same plat-

sign. form?). Although the TEE local attestation gives infor-

mation about software integrity and locality, we cannot

dynamic library before such file or program is read or ex- use it here because the hypervisor cannot run inside the

ecuted. Consequently, an adversary who tampers with the TEE. However, suppose we find a way to satisfy the lo-

IMA log cannot hide her malicious behavior because she cality condition. In that case, we can leverage integrity

cannot modify the PCR value. She cannot overwrite the measurements (IMA) to verify the hypervisor’s integrity

PCR and she cannot reset it to the initial value without because among trusted software running on the platform

rebooting the platform. there can be only one that connects to the vTPM—the hy-

Problems with virtualized TPMs. The TPM chip can- pervisor. To satisfy the locality condition, we make the

not be effectively shared with many VMs due to a limited following observation: Only software running on the same

amount of PCRs. The vTPM [19] design addresses this platform has direct access to the same hardware TPM. We

problem by running multiple software-based TPMs ex- propose to share a secret between the vTPM and the hy-

posed to VMs by the hypervisor. This design requires ver- pervisor using the hardware TPM (§4.4). The vTPM then

ifying the hypervisor’s integrity before establishing trust authenticates the hypervisor by verifying that the hypervi-

with a software-based TPM. We argue that verifying the sor presents the secret in the pre-shared key TLS authen-

hypervisor’s integrity alone is not enough because an ad- tication.

ministrator can break the software-based TPM security

guarantees by mounting a man-in-the-middle (MitM) at- Finally, an adversary who compromises the guest OS

tack using the legitimate software, as we describe next. can mount the cuckoo attack [69] to impersonate the le-

Consequently, the vTPM cannot be used directly to pro- gitimate VM. In more detail, an adversary can modify the

vide runtime integrity of VMs. TPM driver inside a guest OS to redirect the TPM commu-

In the vTPM design, the hypervisor prepends a 4-byte nication to a remote TPM (Figure 1c). A verifier running

vTPM identifier that allows routing the communication to inside a compromised VM cannot recognize if he commu-

the correct vTPM instance. However, the link between nicates with the vTPM attached to his VM or with a re-

the vTPM and the VM is unprotected [31], and it is routed mote vTPM attached to another VM. The verifier is help-

through an untrusted network. Consequently, an adver- less because he cannot establish a secure channel to the

sary can mount a masquerading attack to redirect the VM vTPM that would guarantee communication with the lo-

communication to an arbitrary vTPM (Figure 1a) by re- cal vTPM. To mitigate the attack, we propose leveraging

placing the vTPM identifier inside the network package. the TEE attestation protocol to establish a secure commu-

To mitigate the attack, we propose to use the transport nication channel between the verifier and the vTPM and to

layer security (TLS) protocol [9] to protect the commu- use it to exchange a secret allowing the verifier to identify

nication’s integrity. the vTPM instance uniquely (§4.5).
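The PCR extend rule and the tamper evidence of the IMA log discussed in this section can be illustrated with a minimal sketch. It is a simplification and not the WELES implementation: it assumes a SHA-256 PCR bank, an all-zero initial PCR value, and a log that is just an ordered list of digests (real IMA log entries carry a template with more fields). The function names `pcr_extend`, `replay_log`, and `log_matches_quote` are hypothetical.

```python
import hashlib

PCR_SIZE = 32  # bytes in a SHA-256 PCR bank (assumption)


def pcr_extend(pcr_old: bytes, data_to_extend: bytes) -> bytes:
    """Return hash(PCR_old_value || data_to_extend)."""
    return hashlib.sha256(pcr_old + data_to_extend).digest()


def replay_log(measurements: list[bytes]) -> bytes:
    """Recompute the PCR value implied by an ordered measurement log."""
    pcr = bytes(PCR_SIZE)  # assumed initial value: all zero bytes
    for digest in measurements:
        pcr = pcr_extend(pcr, digest)
    return pcr


def log_matches_quote(measurements: list[bytes], quoted_pcr: bytes) -> bool:
    """Accept a log only if replaying it reproduces the certified PCR value."""
    return replay_log(measurements) == quoted_pcr


# Hypothetical files measured since boot; the last one is unwanted software.
log = [hashlib.sha256(blob).digest() for blob in (b"init", b"libc", b"malware")]

# Stands in for the PCR value that a signed TPM report would certify.
quoted = replay_log(log)

hidden = log[:2]                      # adversary deletes the last log entry
reordered = [log[1], log[0], log[2]]  # adversary reorders the entries
```

Under these assumptions, `log_matches_quote(log, quoted)` holds, while the `hidden` and `reordered` logs no longer reproduce the quoted value. Because the PCR can only be extended, an adversary who edits the log cannot bring the register back in line with the edited history.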

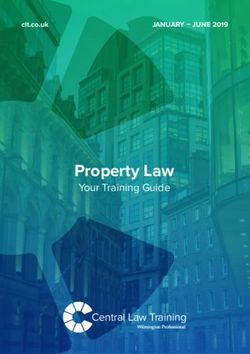

4hybrid cloud node (host OS) C trusted computing components cloud node (host OS) WELES

integrity measurements TLS deploys policy

policyA

hypervisor

A! returns keypublicA

virtual machine (VM) trusted execution! TLS

environment . tenant A signs with ! keyprivateA

C SSH authenticates VM! keyprivateA

trusted computing components D WELES VM’s SSH! with keypublicA VMA

private key sends integrity! policy !

measurements enforcement

B logical tenant separation

1 4 2

TLS deploys policy policyB

spawns connects to the VM! deploys " TLS returns keypublicB

new VM (identify with VM’s public key) the policy

tenant B signs with !

SSH authenticates VM! keyprivateB keyprivateB

TLS returns the VM’s ! SSH

with keypublicB VMB

TLS public key sends integrity! policy !

3 measurements enforcement

cloud provider tenant

Figure 2: The high-level overview of W ELES. The VM’s SSH Figure 3: Multiple tenants interacting with W ELES concur-

key is bound to the VM’s integrity state defined in the policy. rently. W ELES generates a dedicated SSH key for each de-

4 W ELES design ployed security policy and allows using it only if the VM’s in-

tegrity conforms to the security policy.

Our objective is to provide an architecture that: 1. pro- use the private key only if the platform matches the state

tects legacy applications running inside a VM from threats defined inside the policy. Finally, the tenant establishes

defined in §2, 2. requires zero-code changes to legacy trust with the VM during the SSH-handshake. He veri-

applications and the VM setup, 3. permits tenants to re- fues that the VM can use the private key corresponding

motely attest to the platform runtime integrity without to the previously obtained public key (Í). The tenant au-

possessing any vendor-specific hardware. thenticates himself in a standard way, using his own SSH

private key. His SSH public key is embedeed inside the

VM’s image or provisioned during the VM deployment.

4.1 High-level Overview

Figure 2 shows an overview of the cloud node running 4.2 Tenant Isolation and Security Policy

W ELES. It consists of the following four components:

(A) the VM, (B) the hypervisor managing the VM, pro- Multiple applications with different security requirements

viding it with access to physical resources and isolating might coexist on the same physical machine. W ELES al-

from other VMs, (C) trusted computing components en- lows ensuring that applications run in isolation from each

abling hypervisor’s runtime integrity enforcement and at- other and match their security requirements. Figure 3

testation, (D) W ELES, software executed inside TEE that shows how W ELES assigns each VM a pair of a public

allows tenants to attest and enforce the VMs’ integrity. and private key. The keys are bound with the application’s

The configuration, the execution, and the operation of policy and the VM’s integrity. Each tenant uses the public

the above components are subject to attestation. First, the key to verify that he interacts with his VM controlled by

cloud operator bootstraps the cloud node and starts W E - the integrity-enforced hypervisor.

LES . At the tenant’s request, the cloud provider spawns Listing 1 shows an example of a security policy. The

a VM (Ê). Next, the tenant establishes trust with W E - policy is a text file containing a whitelist of the hardware

LES (§4.5), which becomes the first trusted component TPM manufacturer’s certificate chain (line 4), DRTM in-

on a remote platform. The tenant requests W ELES to tegrity measurements of the host kernel (lines 6-9), in-

check if the hypervisor conforms to the policy (Ë), which tegrity measurements of the guest kernel (line 16), and le-

contains tenant-specific trust constraints, such as integrity gal runtime integrity measurements of the guest OS (lines

measurements (§4.2). W ELES uses IMA and TPM to ver- 18-20, 24). The certificate chain is used to establish

ify that the platform’s runtime integrity conforms to the trust in the underlying hardware TPM. W ELES compares

policy and then generates a VM’s public/private key pair. DRTM integrity measurements with PCR values certified

The public key is returned to the tenant (Ì). W ELES pro- by the TPM to ensure the correct hypervisor with enabled

tects access to the private key, i.e., it permits the VM to integrity-enforced mechanism was loaded. W ELES uses

runtime integrity measurements to verify that only expected files and software have been loaded to the guest OS memory. A dedicated certificate (line 24) makes the integrity-enforcement practical because it permits more files to be loaded to the memory without redeploying the policy. Specifically, it is enough to sign software allowed to execute with the corresponding private key to let the software pass through the integrity-enforcement mechanism. Additional certificates (lines 12, 27) allow for OS updates [68].

Listing 1: Example of the WELES’s security policy

     1 host:
     2   tpm: |-
     3     -----BEGIN CERTIFICATE-----
     4     # Manufacturer CA certificate
     5     -----END CERTIFICATE-----
     6   drtm: # measurements provided by the DRTM
     7     - {id: 17, sha256: f9ad0...cb}
     8     - {id: 18, sha256: c2c1a...c1}
     9     - {id: 19, sha256: a18e7...00}
    10   certificate: |-
    11     -----BEGIN CERTIFICATE-----
    12     # software update certificate
    13     -----END CERTIFICATE-----
    14 guest:
    15   enforcement: true
    16   pcrs: # boot measurements (e.g., secure boot)
    17     - {id: 0, sha256: a1a1f...dd}
    18   measurements: # legal integrity measurement digests
    19     - "e0a11...2a" # SHA digest over a startup script
    20     - "3a10b...bb" # SHA digest over a library
    21   certificate:
    22     - |-
    23       -----BEGIN CERTIFICATE-----
    24       # certificate of the signer, e.g., OS updates
    25       -----END CERTIFICATE-----
    26       -----BEGIN CERTIFICATE-----
    27       # software update certificate
    28       -----END CERTIFICATE-----

4.3 Platform Bootstrap

The cloud provider is responsible for the proper machine initialization. She must turn on support for hardware technologies (i.e., TPM, DRTM, TEE), launch the hypervisor, and start WELES. Tenants detect if the platform was correctly initialized when they establish trust in the platform (§4.5).

First, WELES establishes a connection with the hardware TPM using the TPM attestation; it reads the TPM certificate and generates an attestation key following the activation of credential procedure ([16] p. 109-111). WELES ensures it communicates with the local TPM using [84], but other approaches might be used as well [33, 69]. Eventually, WELES reads the TPM quote, which certifies the DRTM launch and the measurements of the hypervisor’s integrity.

4.4 VM Launch

The cloud provider requests the hypervisor to spawn a new VM. The hypervisor allocates the required resources and starts the VM providing it with TPM access. At the end of the process, the cloud provider shares the connection details with the tenant, allowing the tenant to connect to the VM.

WELES emulates multiple TPMs inside the TEE because many VMs cannot share a single hardware TPM [19]. When requested by the hypervisor, WELES spawns a new TPM instance accessible on a unique TCP port. The hypervisor connects to the emulated TPM and exposes it to the VM as a standard character device. We further use the term emulated TPM to describe a TEE-based TPM running inside the hypervisor and distinguish it from the software-based TPM proposed by the vTPM design.

The communication between the hypervisor and the emulated TPM is susceptible to MitM attacks. Unlike WELES, the hypervisor does not execute inside the TEE, preventing WELES from using the TEE attestation to verify the hypervisor identity. However, WELES confirms the hypervisor identity by requesting it to present a secret when establishing a connection. WELES generates a secret inside the TEE and seals it to the hardware TPM via an encrypted channel ([10] §19.6.7). Only software running on the same OS as WELES can unseal the secret. Thus, it is enough to check if only trusted software executes on the platform to verify that it is the legitimate hypervisor who presents the secret.

Figure 4 shows the procedure of attaching an emulated TPM to a VM. Before the hypervisor spawns a VM, it commands WELES to emulate a new software-based TPM (➊). WELES creates a new emulated TPM, generates a secret, and seals the secret with the hardware TPM (➋). WELES returns the TCP port and the sealed secret to the hypervisor. The hypervisor unseals the secret from the hardware TPM (➌) and establishes a TLS connection to the emulated TPM authenticating itself with the secret (➍). At this point, the hypervisor spawns a VM. The VM boots up, the firmware and IMA send integrity measurements to the emulated TPM (➎). To protect against
6hypervisor trusted execution environment trusted execution environment cloud node (host OS)

WELES 6 challenge

generates ! 3 WELES

1 register VM a key pair signs with the

7

private key VM

emulated TPM verifies ! VM’s SSH

2 private key

the policy 8 signature

SSH server

generate!

2 secret

4 VM’s SSH! challenge! 5

hardware seal secret

3 unseal secret TPM

public key (SSH handshake)

HTTPs

9

SSH

4 establish TLS connection, auth with secret SSH client

deploys policy 1 signature"

VM HTTPs tenant (SSH handshake)

5 integrity measurement Figure 5: The high-level view of the attestation protocol. W E -

increase MC LES generates a SSH public/private key pair inside the TEE.

monotonic store "

counter (MC) 6 MC The tenant receives the public key as a result of the policy de-

ployment. To mitigate the MitM attacks, the tenant challenges

Figure 4: Attachment of an emulated TPM to a VM. W ELES

the VM to prove it has access to the private key. W ELES signs

emulates TPMs inside the TEE. Each emulated TPM is accessi-

the challenge on behalf of the VM if and only if the platform

ble via a TLS connection. To prevent the MitM attack, W ELES

integrity conforms with the policy.

authenticates the connecting hypervisor by sharing with him a

secret via a hardware TPM. To mitigate the rollback attack, the LES’s integrity and the underlying hardware genuineness.

emulated TPM increments the monotonic counter value on each The tenant establishes trust with W ELES during the TLS-

non-idempotent command. handshake, verifying that the presented certificate was is-

the rollback attack, each integrity measurement causes the sued to W ELES by the CA.

emulated TPM to increment the hardware-based mono- Although the tenant remotely ensures that W ELES is

tonic counter (MC) and store the current MC value inside trusted, he has no guarantees that he connects to his VM

the emulated TPM memory (Ï). To prevent the attach- controlled by W ELES because the adversary can spoof the

ment of multiple VMs to the same emulated TPM, W E - network [91] to redirect the tenant’s connection to an ar-

LES permits a single client connection and does not per- bitrary VM. To mitigate the threat, W ELES generates a

mit reconnections. An attack in which an adversary redi- secret and shares it with the tenant and the VM. When the

rects the hypervisor to a fake emulated TPM exporting a tenant establishes a connection, he uses the secret to au-

false secret is detected when establishing trust with the thenticate the VM. Only the VM which integrity conforms

VM (§4.5). to the policy has access to this secret.

Figure 5 shows a high-level view of the protocol. First,

4.5 Establish Trust the tenant establishes a TLS connection with W ELES to

deploy the policy (Ê). W ELES verifies the platform in-

The tenant establishes trust with the VM in three steps. tegrity against the policy (Ë), and once succeeded, it gen-

First, he verifies that W ELES executes inside the TEE erates the SSH key pair (Ì). The public key is returned

and runs on genuine hardware (a CPU providing the TEE to the tenant (Í) while the private key remains inside the

functionality). He then extends trust to the hypervisor and TEE. W ELES enforces that only a guest OS which runtime

VM by leveraging W ELES to verify and enforce the host integrity conforms to the policy can use the private key for

and guest OSes’ runtime integrity. Finally, he connects to signing. Second, the tenant initializes an SSH connection

the VM, ensuring it is the VM provisioned and controlled to the VM, expecting the VM to prove the possession of

by W ELES. the SSH private key. The SSH client requests the SSH

Since the W ELES design does not restrict tenants to server running inside the VM to sign a challenge (Î). The

possess any vendor-specific hardware and the existing SSH server delegates the signing operation to W ELES (Ï).

TEE attestation protocols are not standardized, we pro- W ELES signs the challenge using the private key (Ð) if

pose to add an extra level of indirection. Following the and only if the hypervisor’s and VM’s integrity match the

existing solutions [39], we rely on a trusted certificate policy. The SSH private key never leaves W ELES; only

authority (CA) that performs the TEE-specific attestation a signature is returned to the SSH server (Ñ). The SSH

before signing an X.509 certificate confirming the W E - client verifies the signature using the SSH public key ob-

7tained by the tenant from W ELES (Ò). The SSH server Intel SGX enclaves

also authenticates the tenant, who proves his identity us- WELES: !

monotonic counter service

ing his own private SSH key. The SSH server is config-

ured to trust his SSH public key. The tenant established WELES: !

QEMU

SSH" Linux

QEM

QEM

VM VM emulated TPM

trust in the remote platform as soon as the SSH handshake VM IMA

server

succeeds. WELES:

monitoring service

Alpine Linux

kernel-based virtual integrity measurement TPM

4.6 Policy Enforcement machine (KVM) architecture (Linux IMA) driver

Linux kernel

W ELES policy enforcement mechanism guarantees that Intel trusted ! Intel software guard TPM 2.0

the VM runtime integrity conforms to the policy. At execution technology (TXT) extensions (SGX)

hardware

the host OS, W ELES relies on the IMA integrity-

enforcement [43] to prevent the host kernel from loading Figure 6: The overview of the W ELES prototype implementa-

tion.

to the memory files that are not digitally signed. Specif-

ically, each file in the filesystem has a digital signature memory corruption mitigation techniques. Those tech-

stored inside its extended attribute. IMA verifies the sig- niques help mitigate the consequences of, for example,

nature issued by the cloud provider before the kernel loads buffer overflow attacks that might lead to privilege escala-

the file to the memory. The certificate required for signa- tion or arbitrary code execution. To restrict the host from

ture verification is loaded from initramfs (measured by the accessing guest memory and state, we follow existing

DRTM) to the kernel’s keyring. At the guest OS, IMA in- security-oriented commercial solutions [28] that disable

side the guest kernel requires the W ELES approval to load certain hypervisor features, such as hypervisor-initiated

a file to the memory. The emulated TPM, controlled by memory dump, huge memory pages on the host, memory

W ELES, returns a failure when IMA tries to extend it with swapping, memory ballooning through a virtio-balloon

measurement not conforming to the policy. The failure device, and crash dumps. For production implementa-

instructs IMA not to load the file. tions, we propose to rely on microkernels like formally

proved seL4 [56].

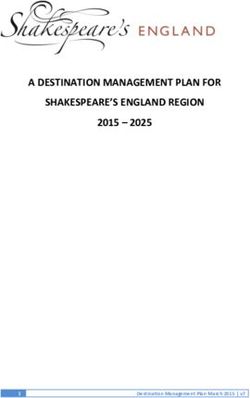

We rely on SGX as the TEE technology. The SGX re-

5 Implementation mote attestation [48] allows us to verify if the application

executes inside an enclave on a genuine Intel CPU. Other

5.1 Technology Stack

TEEs might be supported (§7). We implemented W ELES

We decided to base the prototype implementation on the in Rust [63], which preserves memory-safety and type-

Linux kernel because it is an open-source project support- safety. To run W ELES inside an SGX enclave, we use

ing a wide range of hardware and software technologies. the SCONE framework [15] and its Rust cross-compiler.

It is commonly used in the cloud and, as such, can demon- We also exploit the Intel trusted execution technology

strate the practicality of the proposed design. QEMU [17] (TXT) [38] as a DRTM technology because it is widely

and kernel-based virtual machine (KVM) [55] permit to available on Intel CPUs. We use the open-source soft-

use it as a hypervisor. We rely on Linux IMA [78] as an ware tboot [12] as a pre-kernel bootloader that establishes

integrity enforcement and auditing mechanism built-in the the DRTM with TXT to provide the measured boot of the

Linux kernel. Linux kernel.

We chose Alpine Linux because it is designed for se-

curity and simplicity in contrast to other Linux distribu- 5.2 Prototype Architecture

tions. It consists of a minimum amount of software re-

quired to provide a fully-functional OS that permits keep- The W ELES prototype architecture consists of three com-

ing a trusted computing base (TCB) low. All userspace ponents executing in SGX enclaves: the monitoring ser-

binaries are compiled as position-independent executables vice, the emulated TPM, and the monotonic counter ser-

with stack-smashing protection and relocation read-only vice.
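The guest-side enforcement rule from §4.6 (the emulated TPM fails the extend command for a measurement that does not conform to the policy, and that failure tells IMA not to load the file) can be illustrated with a minimal Python sketch. It is not the WELES implementation, which is written in Rust: the names `EmulatedTpm`, `PolicyViolation`, and `measure` are hypothetical, and the policy is assumed to be a plain set of allowed SHA-256 digests. Only the extend rule itself, new PCR = H(old PCR || measurement), follows the standard TPM semantics.

```python
import hashlib


class PolicyViolation(Exception):
    """Raised when a measurement is not allowed by the policy (hypothetical name)."""


class EmulatedTpm:
    """Toy model of a policy-enforcing TPM; not the WELES implementation."""

    def __init__(self, allowed_digests):
        # Assumption: the policy is a whitelist of SHA-256 file digests.
        self.allowed = frozenset(allowed_digests)
        # Assumption: the PCR used for IMA measurements starts as all zeros.
        self.pcr = bytes(32)

    def extend(self, file_digest: bytes) -> bytes:
        # Refuse the extend for a non-conforming measurement; the caller
        # (IMA in the guest kernel) would treat the failure as "do not load".
        if file_digest not in self.allowed:
            raise PolicyViolation(file_digest.hex())
        # Standard TPM extend: new PCR = H(old PCR || measurement).
        self.pcr = hashlib.sha256(self.pcr + file_digest).digest()
        return self.pcr


def measure(content: bytes) -> bytes:
    """Digest over the entire file content, as IMA computes it."""
    return hashlib.sha256(content).digest()


if __name__ == "__main__":
    trusted = measure(b"trusted binary")
    tpm = EmulatedTpm({trusted})
    tpm.extend(trusted)  # accepted: the PCR value changes
    try:
        tpm.extend(measure(b"unknown binary"))
    except PolicyViolation:
        print("extend refused, file must not be loaded")
```

A rejected measurement leaves the PCR untouched in this sketch, so the PCR value only ever reflects files that the policy allowed.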

The monitoring service is the component that leverages Linux IMA and the hardware TPM to collect integrity measurements of the host OS. There is only one monitoring service running per host OS. It is available on a well-known port on which it exposes a TLS-protected REST API used by tenants to deploy the policy. We based this part of the implementation on [84] that provides a mechanism to detect the TPM locality. The monitoring service spawns emulated TPMs and intermediates in the secret exchange between QEMU and the emulated TPM. Specifically, it generates and seals to the hardware TPM the secret required to authenticate the QEMU process, and passes this secret to an emulated TPM.

The emulated TPM is a software-based TPM emulator based on the libtpms library [18]. It exposes a TLS-based API allowing QEMU to connect. The connection is authenticated using the secret generated inside an SGX enclave and known only to processes that gained access to the hardware TPM. We extracted the emulated TPM into a separate component because of the libtpms implementation, which requires running each emulator in a separate process.

The monotonic counter service (MCS) provides access to a hardware monotonic counter (MC). Emulated TPMs use it to protect against rollback attacks. We designed the MCS as a separate module because we anticipate that due to hardware MC limitations (i.e., high latency, the limited number of memory overwrites [82]), a distributed version, e.g., ROTE [62], might be required. Notably, the MCS might also be deployed locally using SGX MC [22] accessible on the same platform where the monitoring service and emulated TPM run.

5.3 Monotonic Counter Service

We implemented a monotonic counter service (MCS) as a service executed inside the SGX enclave. It leverages TPM 2.0 high-endurance indices [10] to provide the MC functionality. The MCS relies on the TPM attestation to establish trust with the TPM chip offering hardware MC, and on the encrypted and authenticated communication channel ([10] §19.6.7) to protect the integrity and confidentiality of the communication with the TPM chip from the enclave. The MCS exposes a REST API over a TLS (§5.4), allowing other enclaves to increment and read hardware monotonic counters remotely.

The emulated TPM establishes trust with the MCS via the TLS-based SGX attestation (§5.4) and maintains the TLS connection open until the emulated TPM is shutdown. We implemented the emulated TPM to increase the MC before executing any non-idempotent TPM command, e.g., extending PCRs, generating keys, writing to non-volatile memory. The MC value and the TLS credentials are persisted in the emulated TPM state, which is protected by the SGX during runtime and at rest via sealing. When the emulated TPM starts, it reads the MC value from the MCS and then checks the emulated TPM state freshness by verifying that its MC value equals the value read from the MCS.

5.4 TLS-based SGX Attestation

We use the SCONE key management system (CAS) [5] to perform remote attestation of WELES components, verify SGX quotes using Intel attestation service (IAS) [14], generate TLS credentials, and distribute the credentials and the CAS CA certificate to each component during initialization. WELES components are configured to establish mutual authentication over TLS, where both peers present a certificate, signed by the same CAS CA, containing an enclave integrity measurement. Tenants do not perform the SGX remote attestation to verify the monitoring service identity and integrity. Instead, they verify the certificate exposed by a remote peer during the policy deployment when establishing a TLS connection to the monitoring service. The production implementation might use Intel SGX-RA [57] to achieve similar functionality without relying on an external key management system.

5.5 VM Integrity Enforcement

The current Linux IMA implementation extends the integrity digest of the IMA log entry to all active TPM PCR banks. For example, when there are two active PCR banks (e.g., SHA-1 and SHA-256), both are extended with the same value. We did a minor modification of the Linux kernel. It permitted us to share with the emulated TPM not only the integrity digest but also the file’s measurement and the file’s signature. We modified the content of the PCR_Extend command sent by the Linux IMA in a way it uses the SHA-1 bank to transfer the integrity digest, the SHA-256 bank to transfer the file’s measurement
digest, and the SHA-512 bank to transfer the file’s signature. In the emulated TPM, we intercept the PCR_Extend command to extract the file’s measurement and the file’s digest. We use the obtained information to enforce the policy; if the file is not permitted to be executed, the emulated TPM process closes the TLS connection, causing the QEMU process to shut down the VM.

5.6 SSH Integration

To enable a secure connection to the VM, we relied on the OpenSSH server. It supports the PKCS#11 [66] standard, which defines how to communicate with cryptographic devices, like TPM, to perform cryptographic operations using the key without retrieving it from the TPM.

We configured an OpenSSH server running inside the guest OS to use an SSH key stored inside the emulated TPM running on the host OS. The VM’s SSH private key is generated and stored inside the SGX enclave, and it never leaves it. The SSH server, via PKCS#11, uses it for the TLS connection only when WELES authorizes access to it. The tenant uses his own SSH private key, which is not managed by WELES.

6 Evaluation

We present the evaluation of WELES in three parts. In the first part, we measure the performance of internal WELES components and related technologies. In the second part, we check if WELES is practical to protect popular applications, i.e., nginx, memcached. Finally, we estimate the TCB of the prototype used to run the experiments mentioned above.

Testbed. Experiments execute on a rack-based cluster of Dell PowerEdge R330 servers equipped with an Intel Xeon E3-1270 v5 CPU, 64 GiB of RAM, Infineon 9665 TPM 2.0 discrete TPM chips (dTPMs). Experiments that use an integrated TPM (iTPM) run on an Intel NUC7i7BNH machine, which has the Intel platform trusted technology (PTT) [25] running on Intel ME 11.8.50.3425 powered by an Intel Core i7-7567U CPU and 8 GiB of RAM. All machines have a 10 Gb Ethernet network interface card (NIC) connected to a 20 Gb/s switched network. The SGX, TXT, TPM 2.0, Intel virtualization technology for directed I/O (VT-d) [24], and single root input/output virtualization (SR-IOV) [23] technologies are turned on in the UEFI/BIOS configuration. The hyper-threading is switched off. The enclave page cache (EPC) is configured to reserve 128 MiB of random-access memory (RAM). On host and guest OSes, we run Alpine 3.10 with Linux kernel 4.19. We modified the guest OS kernel according to the description in §5.5. We adjusted quick emulator (QEMU) 3.1.0 to support TLS-based communication with the emulated TPM as described in §4.4. CPUs are on the microcode patch level (0x5e).

6.1 Micro-benchmarks

Are TPM monotonic counters practical to handle the rollback protection mechanism? Strackx and Piessens [82] reported that the TPM 1.2 memory gets corrupted after a maximum of 1.450M writes and has a limited increment rate (one increment per 5 sec). We ran an experiment to confirm or undermine the hypothesis that those limitations apply to the TPM 2.0 chip. We were continuously incrementing the monotonic counter in the dTPM and the iTPM chips. The dTPM chip reached 85M increments, and it did not throttle its speed. The iTPM chip slowed down after 7.3M increments, limiting the increment latency to 5 sec. We did not observe any problem with the TPM memory.

What is the cost of the rollback protection mechanism? Each non-idempotent TPM operation causes the emulated TPM to communicate with the MCS and might directly influence the WELES performance. We measured the latency of the TPM-based MCS read and increment operations. In this experiment, the MCS and the test client execute inside an SGX enclave. Before the experiment, the test client running on the same machine establishes a TLS connection with the MCS. The connection is maintained during the entire experiment to keep the communication overhead minimal. The evaluation consists of sending 5k requests and measuring the mean latency of the MCS response.

Table 1: The latency of main operations in the TPM-based MCS. σ stands for standard deviation.

                 Read                Increase
discrete TPM     42 ms (σ = 2 ms)    40 ms (σ = 2 ms)
integrated TPM   25 ms (σ = 2 ms)    32 ms (σ = 1 ms)
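The rollback protection whose cost is measured here (§5.3: increment the MC before every non-idempotent command, persist the MC value in the sealed state, and compare the two on startup) can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the prototype's code: `MonotonicCounter` stands in for the TPM-backed MCS, `SealedState` for the SGX-sealed emulated TPM state, and all class and method names are hypothetical.

```python
from dataclasses import dataclass


class RollbackDetected(Exception):
    """Raised when the restored state is older than the monotonic counter."""


class MonotonicCounter:
    """Stand-in for the MCS; in the paper it is backed by a hardware TPM."""

    def __init__(self):
        self._value = 0

    def read(self) -> int:
        return self._value

    def increment(self) -> int:
        self._value += 1
        return self._value


@dataclass(frozen=True)
class SealedState:
    """Stand-in for the sealed emulated TPM state (assumed layout)."""
    mc_value: int
    extends: tuple


class RollbackProtectedTpm:
    def __init__(self, mcs: MonotonicCounter, sealed: SealedState):
        # Freshness check on startup: the MC value stored in the state
        # must equal the value read from the counter service.
        if sealed.mc_value != mcs.read():
            raise RollbackDetected(f"state={sealed.mc_value}, mc={mcs.read()}")
        self.mcs = mcs
        self.state = sealed

    def extend(self, digest: str) -> SealedState:
        # Non-idempotent command: bump the counter first, then persist the
        # new counter value together with the updated state.
        new_mc = self.mcs.increment()
        self.state = SealedState(new_mc, self.state.extends + (digest,))
        return self.state


if __name__ == "__main__":
    mcs = MonotonicCounter()
    tpm = RollbackProtectedTpm(mcs, SealedState(0, ()))
    stale = tpm.extend("digest-a")
    fresh = tpm.extend("digest-b")
    RollbackProtectedTpm(mcs, fresh)      # restart with fresh state succeeds
    try:
        RollbackProtectedTpm(mcs, stale)  # restart with an old snapshot
    except RollbackDetected:
        print("stale state rejected")
```

In this model every `extend` costs one counter increment, which is why the increment latency of the counter bounds the latency of non-idempotent TPM commands.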

Figure 7: TPM operations latency depending on the TPM: the swTPM, the emulated TPM without rollback protection (WELES TPM), the emulated TPM with rollback protection (WELES TPM with MC), the discrete TPM chip (dTPM), and the integrated TPM (iTPM). [Bar chart: latency (ms) of the Create Primary, Create, Quote, PCR Read, and PCR Extend commands for swTPM, WELES, WELES MC, dTPM, and iTPM; one off-scale bar is labeled 6236 ms.]

Table 1 shows that the MCS using iTPM performs from 1.25× to 1.68× faster than its version using dTPM. The read operation on the iTPM is faster than the increment operation (25 ms versus 32 ms, respectively). In contrast, on dTPM both operations take a similar amount of time (about 40 ms).

What is the cost of running the TPM emulator inside TEE and with the rollback protection mechanism? Is it slower than a hardware TPM used by the host OS? As a reference point to evaluate the emulated TPM’s performance, we measured the latency of various TPM commands executed in different implementations of TPMs. The TPM quotes were generated with the elliptic curve digital signature algorithm (ECDSA) using the P-256 curve and SHA-256 bit key. PCRs were extended using the SHA-256 algorithm. Figure 7 shows that except for the PCR extend operation, the SGX-based TPM with rollback protection is from 1.2× to 69× faster than hardware TPMs and up to 6× slower than the unprotected software-based swTPM. Except for the create primary command, which derives a new key from the TPM seed, we did not observe performance degradation when running the TPM emulator inside an enclave. However, when running with the rollback protection, the TPM slows down the processing of non-idempotent commands (e.g., PCR_Extend) due to the additional time required to increase the MC.

How much does IMA impact file opening times? Before the kernel executes the software, it verifies if the executable, related configuration files, and required dynamic libraries can be loaded into memory. The IMA calculates a cryptographic hash over each file (its entire content) and sends the hash to the TPM. We measure how much this process impacts the opening time of files depending on their size. Figure 8 shows that the IMA inside the guest OS incurs higher overhead than the IMA inside the host OS. It is primarily caused by i) the higher latency of the TPM extend command (∼ 43 ms) that is dominated by a slow network-based monotonic counter, ii) the IMA mechanism itself that has to calculate the cryptographic hash over the entire file even if only a small part of the file is actually read, and iii) the less efficient data storage used by the VM (virtualized storage, QCOW format). In both systems, the IMA takes less than 70 ms when loading files smaller than 1 MB (99% of files in the deployed prototype are smaller than 1 MB). Importantly, IMA measures the file only once unless it changes. Figure 8 shows that the next file reads take less than 40 µs regardless of the file size.

Figure 8: File opening times with and without Linux IMA. [Two panels over file size (100 bytes to 10M bytes): "1st file open" (latency in ms, with the labels "134ms" and "baseline: < 40µs") and "2nd+ file open" (latency in µs); series: Host OS, no IMA; Host OS, with IMA; Guest OS, with IMA.]

6.2 Macro-benchmarks

We run macro-benchmarks to measure performance degradation when protecting popular applications, i.e., the nginx web-server [4] and the memcached cache system [2], with WELES. We compare the performance of four variants for each application: running on the host operating system (OS) (native), inside a SCONE-protected Docker container on the host OS (SCONE), inside a guest OS (VM), and inside a WELES-protected guest OS with rollback protection turned on (WELES). Notably, WELES operates under a weaker threat model than SCONE. We compare them to show the tradeoff between security and performance.

How much does WELES influence the throughput of

before the throughput started to degrade (latency

Figure 11: WELES scalability. [Three panels (2 VMs, 3 VMs, 4 VMs): latency [ms] over throughput (k.req/s) for native and WELES.]

Table 3: The TCB (source code size in MB) of nginx and memcached when running in SCONE and WELES. All variants include the musl libc library.

             +musl libc   +SCONE        +WELES
Nginx        7.3          12.3 (1.6×)   97.69 (13.4×)
Memcached    2.9          7.9 (2.7×)    93.29 (32.2×)

tions of MCS, such as ROTE [62], offer much faster MC increments (1–2 ms) than the presented TPM-based prototype. We estimated that using WELES with a fast MC would slow down VM boot time only by 1.13×.

Does WELES incur performance degradation when multiple VMs are spawned? We run memcached concurrently in several VMs with and without WELES to examine WELES scalability. We then calculate the performance degradation between the variant with and without WELES. We do not compare the performance degradation between different numbers of VMs, because it depends on the limited amount of shared network bandwidth. Each VM runs with one physical core and 1 GB of RAM. Figure 11 shows that when multiple VMs are concurrently running on the host OS, WELES achieves 0.96×–0.97× of the native throughput.

6.3 Trusted Computing Base

As part of this evaluation, we estimate the TCB of software components by measuring the source code size (using source lines of code (SLOC) as a metric) used to build the software stack.

How much does WELES increase the TCB? We estimate the software stack TCB by calculating source code size metrics using CLOC [32], lib.rs [1], and the Linux stat utility. For the Linux kernel and QEMU, we calculated code that is compiled with our custom configuration, i.e., Linux kernel with support for IMA and KVM, and QEMU with support for gnutls, TPM and KVM.

To estimate the TCB of nginx and memcached executed with WELES, we added their source code sizes (including musl libc) to the source code size of the software stack required to run it (92.49 MB). Table 3 shows that the evaluated setup increases the TCB 13× and 32× for nginx and memcached, respectively. When compared to SCONE, the WELES prototype increases the TCB from 8× to 12×. SCONE offers not only stronger security guarantees (confidentiality of the tenant’s code and data) but also requires trusting a lower amount of software code. However, this comes at the cost of performance degradation (§6.2) and the necessity of reimplementing or at least recompiling a legacy application to link with the SCONE runtime.

7 Discussion

7.1 Alternative TEEs

The WELES design (§4) requires a TEE that offers a remote attestation protocol and provides confidentiality and integrity guarantees of WELES components executing in the host OS. Therefore, the SGX used to build the WELES prototype (§5) might be replaced with other TEEs. In particular, the WELES implementation might leverage Sanctum [30], Keystone [60], Flicker [65], or L4Re [73] as an SGX replacement. WELES might also leverage ARM TrustZone [?] by running WELES components in the secure world and exploiting the TPM attestation to prove its integrity.

7.2 Hardware-enforced VM Isolation

Hardware CPU extensions, such as AMD secure encrypted virtualization (SEV) [45], Intel multi-key total memory encryption (MKTME) [26], Intel trust domain extensions (TDX) [27], are largely complementary to the WELES design. They might enrich the WELES design by providing the confidentiality of the code and data against rogue operators with physical access to the machine, a compromised hypervisor, or malicious co-tenants. They also consider the hypervisor untrusted, excluding it from the WELES TCB. On the other hand, WELES complements these technologies by offering means to verify and enforce the runtime integrity of guest OSes. This functionality is easily
available for bare-metal machines (via a hardware TPM) but not for virtual machines.

7.3 Trusted Computing Base

The prototype builds on top of software commonly used in the cloud, which has a large TCB because it supports different processor architectures and hardware. WELES might be combined with other TEE and hardware extensions, resulting in a lower TCB and stronger security guarantees. Specifically, WELES could be implemented on top of a microkernel architecture, such as the formally verified seL4 [56, 71], that provides stronger isolation between processes and a much smaller code base (less than 10k SLOC [56]), when compared to the Linux kernel. Compared to the prototype, QEMU might be replaced with Firecracker [13], a virtual machine monitor written in a type-safe programming language that consists of 46k SLOC (0.16× of QEMU source code size) and is used in production by Amazon AWS cloud. The TCB of the prototype implementation might be reduced by removing superfluous code and dependencies. For example, most of the TPM emulator functionalities could be removed following the approach of µTPM [64]. The WELES API could be built on top of the socket layer, allowing removal of HTTP dependencies that constitute 41% of the prototype implementation code.

7.4 Integrity Measurements Management

The policy composed of digests is sensitive to software updates because newer software versions result in different measurement digests. Consequently, any software update of an integrity-enforced system would require a policy update, which is impractical. Instead, WELES supports dedicated update mirrors serving updates containing digitally signed integrity measurements [68, 21]. Other measurements defined in the policy can be obtained from the national software reference library [3] or directly from the IMA-log read from a machine executed in a trusted environment, e.g., a development environment running on tenant premises. The amount of runtime IMA measurements can be further reduced by taking into account processes interaction to exclude some mutable files from the measurement [47, 79].

8 Related work

VM attestation is a long-standing research objective. The existing approaches vary from VM monitoring systems focusing on system behavior verification [42, 76, 70], intrusion detection systems [49, 77], or verifying the integrity of the executing software [35, 20]. WELES focuses on the VM runtime integrity attestation. Following the Terra [35] architecture, WELES leverages VMs to provide isolated execution environments constrained with different security requirements defined in a policy. Like Scalable Attestation [20], WELES uses a software-based TPM to collect VM integrity measurements. WELES extends the software-based TPM functionality by enforcing the policy and binding the attestation result with the VM connection, as proposed by IVP [80]. Unlike the idea of linking the remote attestation quote to the TLS certificate [37], WELES relies on the TEE to restrict access to the private key based on the attestation result. Following TrustVisor [64], WELES exposes trusted computing methods to legacy applications by providing them with dedicated TPM functionalities emulated inside the hypervisor. Unlike others, WELES addresses the TPM cuckoo attack at the VM level by combining integrity enforcement with key management and with the TEE-based remote attestation. Alternative approaches to TPM virtualization exist [83, 36]. However, the cuckoo attack remains the main problem. WELES enhances the vTPM design [19] mostly because of the simplicity; no need for hardware [83] or the TPM specification [36] changes.

Hardware solutions, such as SEV [45], IBM PEF [44], TDX [27], emerged to isolate VMs from the untrusted hypervisor and the cloud administrator. However, they lack the VM runtime integrity attestation, a key feature provided by WELES. WELES is complementary to them. Combining these technologies allows for better isolation of the VM from the hypervisor and the administrator and for runtime integrity guarantees during the VM’s runtime.

9 Conclusion

This paper presented WELES, the virtual machine (VM) attestation protocol allowing for verification that security-sensitive applications execute in the VM composed and controlled by expected software in expected configuration. WELES provides transparent support for legacy applications, requires no changes in the VM configuration, and permits tenants to remotely attest to the platform runtime integrity without possessing any vendor-specific hardware by binding the VM state to the SSH connection. WELES complements hardware-based trusted execution environments (TEEs), such as AMD SEV, IBM PEF, Intel TDX, by providing runtime integrity attestation of the guest OS. Finally, WELES incurs low performance overhead (≤ 6%) but has a much larger trusted computing base (TCB) compared to pure TEE approaches, such as Intel software guard extensions (SGX).

References

[1] Lib.rs: Find quality Rust libraries. https://lib.rs, accessed on March 2020.

[2] memcached. https://memcached.org, accessed on March 2020.

[3] National Software Reference Library (NSRL). https://www.nist.gov/itl/ssd/software-quality-group/national-software-reference-library-nsrl/about-nsrl/nsrl-introduction, accessed on March 2020.

[4] NGINX. https://www.nginx.com, accessed on March 2020.

[5] SCONE Configuration and Attestation service. https://sconedocs.github.io/CASOverview/, accessed on March 2020.

[6] SWTPM - Software TPM Emulator. https://github.com/stefanberger/swtpm, accessed on March 2020.

[7] TCG Infrastructure Working Group Architecture Part II - Integrity Management, Specification Version 1.0, Revision 1.0. https://trustedcomputinggroup.org/wp-content/uploads/IWG_ArchitecturePartII_v1.0.pdf, accessed on March 2020.

[8] TechSpot News. Google fired employees for breaching user privacy. https://www.techspot.com/news/40280-google-fired-employees-for-breaching-user-pr html, accessed on March 2020.

[9] The Transport Layer Security Protocol Version 1.2. https://tools.ietf.org/html/rfc5246, accessed on March 2020.

[10] TPM Library Part 1: Architecture, Family "2.0", Level 00, Revision 01.38. http://www.trustedcomputinggroup.org/resources/tpm_library_specification, accessed on March 2020.

[11] TPM Library Specification, Family "2.0", Level 00, Revision 01.38. http://www.trustedcomputinggroup.org/resources/tpm_library_specification, accessed on March 2020.

[12] Trusted Boot (tboot). https://sourceforge.net/projects/tboot/, accessed on March 2020.

[13] Amazon Web Services, I. O. I. A. Firecracker: secure and fast microVMs for serverless computing. http://firecracker-microvm.github.io, accessed on March 2020.

[14] Anati, I., Gueron, S., Johnson, S., and Scarlata, V. Innovative Technology for CPU Based Attestation and Sealing. In Proceedings of the 2nd International Workshop on Hardware and Architectural Support for Security and Privacy (2013), vol. 13 of HASP ’13, ACM.

[15] Arnautov, S., Trach, B., Gregor, F., Knauth, T., Martin, A., Priebe, C., Lind, J., Muthukumaran, D., O’Keeffe, D., Stillwell, M., Goltzsche, D., Eyers, D., Kapitza, R., Pietzuch, P., and Fetzer, C. SCONE: Secure Linux Containers with Intel SGX. In Proceedings of OSDI (2016).

[16] Arthur, W., and Challener, D. A Practical Guide to TPM 2.0: Using the Trusted Platform Module in the New Age of Security. Apress, 2015.

[17] Bellard, F. QEMU, a Fast and Portable Dynamic Translator. In Proceedings of the Annual Conference