Clip Art Retrieval Combining Raster and Vector Methods

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Clip Art Retrieval Combining Raster and Vector

Methods

Pedro Martins∗ , Rui Jesus∗ , Manuel J. Fonseca† , Nuno Correia∗

∗ CITI - Dept of Computer Science, FCT, New University of Lisbon, 2829-516 Caparica, Portugal

Email:rjesus@deetc.isel.ipl.pt

† Department of Computer Science and Engineering, INESC-ID/IST/Technical University of Lisbon,

R. Alves Redol, 9, 1000-029 Lisboa, Portugal

Email: mjf@inesc-id.pt

Abstract—Clip art databases can be composed by raster im- each one. The majority of the proposed image retrieval systems

ages or by vector drawings. There are technologies for searching [2], [3] are based on methods that use only one format and

and retrieving clip arts for both image formats but research has they are able to retrieve relevant clip art images. However,

been done separately, focusing on either format, without taking these systems do not exploit the benefits of another format, in

benefits of both research fields as a whole. This paper describes a terms of features available and its easy extraction. They also

study where the benefits of combining information extracted from

vector and raster images to retrieve clip arts are evaluated and

do not propose methods that combine features obtained from

discussed. Color and texture features are extracted from raster raster images with information extracted from vector graphics.

images and geometry and topology features are extracted from This paper describes and discusses the main issues of a

vector images. The paper presents several comparisons between study conducted to evaluate the combination of information

different combinations with several descriptors. The results of

extracted from both formats to retrieve clip art images. We

the study show the effectiveness of the solutions that combines

both types of features. exploit the benefits of extracting geometric and topological

information from vector images with the color and texture

information obtained from raster images. To evaluate the

I. I NTRODUCTION

generalization capability of this strategy to other types of

Clip Arts are collections of synthetic digital drawings that images (different from clip arts) that usually have only a raster

can be imported and used in a wide range of documents representation, we also described and evaluate a vectorization

and applications. Generally, these collections are organized in method of raster images.

several categories that identify the elements in the drawings

The remainder of this paper is organized as followed.

using metadata. Most of the time, this information needs to

First, the related work is described. Section III summarizes

be manually introduced, which is a very time consuming pro-

the main features of the study. Section IV presents the feature

cedure. Moreover, the user needs to know which information

extraction techniques and the methods used to segment and to

was used to categorize the collection. Some of these collections

vectorize raster images. The next section describes the clip art

can have hundreds or thousands of images and for this reason,

retrieval system used in our experiments. Then, in section VI

there is interest in developing techniques that can speed up the

is described and discussed the results obtained by our study.

process of searching and categorizing of these drawings.

Finally, the conclusions and future work are presented.

During the lasts years, many CBIR (Content-based Image

Retrieval) approaches [1] have been proposed to address this II. R ELATED W ORK

problem applied to images in general. However, clip arts are

In our study we analysed the behavior of several image

images with specific characteristics which should be included

features combined with information obtained from vector

in the design of new CBIR systems to retrieve images from a

drawings. This section presents the main techniques that had

clip art collection.

been used to describe raster images in content-based image

Currently, clip art databases are composed by images with retrieval. It also describes the main approaches that have been

more elaborated drawings (http://openclipart.org/) compared proposed to retrieve vector drawings.

with the initial collections used for instance, in Microsoft

Word. Clip art drawings are not anymore simple shapes with A. Raster Characteristics

minimal color and almost without texture. This means the color

In the last decades, image retrieval systems have used color,

and texture methods used in CBIR system can also be applied

texture, shape, topology and point of interest [1]. Some of

in clip arts since there are tools (http://xmlgraphics.apache.org/

these descriptors are described in the MPEG-7 standards [4].

batik/) to convert vector images in raster images.

Color is one of the most recognizable visual features, and there

It is common to find clip art images in two formats, raster are examples where using only color is possible to separate

images and vector graphics. Each format has specific properties different classes of objects [5]. Examples of color descriptors

that can be explored in order to extract the suitable features of are: Color Histograms [5] and Color Moments[6]. One of theoldest and most used is the color histogram, which in some extraction and (3) search engine. The first block contains the

cases can be a very robust and effective image descriptor [5]. clip art image database. Due to the nature of the clip arts and

Strickler and Orengo [6] describe other techniques to improve the features extracted, the database includes two representa-

the performance of color histograms, such as the Cumulative tions of each clip art: raster version and vector images of the

Color Histograms and Color Moments. clip arts. The second component provides methods to extract

features. It is described in section IV. The main function of the

Texture is another attribute used to characterize raster search engine (third component) is to return to the user a set

images. Descriptors obtained from the Gabor filter bank, Edge of relevant images according to the query. At this stage, it is

Histograms and descriptors based on points of interest [7] have based on a query-by-example strategy. Our testing system also

been used in databases where images have several distinctive includes a vectorization method of raster images (explained

textures [8]. Descriptors obtained using the bank of Gabor below). In our study, we also test the performance of the sytem

filters have been widely used in image processing, including using the created image vector from the raster image, in order

classification and segmentation, image recognition and image to compare it with the results obtained with the original vector

retrieval [1], [9]. drawing. Several image ranking algorithms are implemented

Multiple shape descriptors are proposed in the MPEG- for each type of feature descriptor and for different descriptor

7 [4], [10], such as a MultiLayer Eigen Vector Descriptor combinations (see section V). We use this system to evaluate

(MLEV) and a descriptor based on the Angular Radial Trans- the results in combining features from different representations

form (ART). The ART descriptor can describe objects made up of the clip art.

by one or more regions and contains information about pixel

distribution within a 2D region. This descriptor is also invariant

Raster Query

to rotation and resistant to segmentation. An evaluation of the image

database Retrieved

MPEG-7 standards [10] mentions this descriptor is best suited Clip art

Images

Feature retrieval

for shapes made up of multiple separate regions, like clip arts, extraction system

Vector

logos and emblems. graphic

image

database

B. Vector Characteristics Descriptor

database

Vector drawings, due to their structure, require different

approaches from raster images. While the latter resort to color

and texture as main features to describe their content, vector Fig. 1. System components overview.

drawings are mainly described using the shape of their visual

elements and the spatial arrangement and relationships among

those objects. A. Vectorization of Raster Images

Park and Um [11] presented a system that supports retrieval

To avoid the need to use two replicas of the same image

of complex 2D drawings based on a dominant shape, secondary

(raster and vector) and to generalize our approach to other

shapes and their spatial relationship (inclusion and adjacency).

images (different from clip arts), a vectorization algorithm

The shapes are stored in a complex graph structure and

was developed. This generates an implicit simplification of

classified using a set of basic shapes.

the image itself. The original vector images are represented

Fonseca et al. presented an approach for the description of by different lines, curves and polygons, when these images

complex vector drawings and sketches, which uses two spatial were converted to raster images all these elements are merged

relationships, inclusion and adjacency, to describe the spatial into a single plane, causing a loss of image complexity by

arrangement and a set of geometric features to describe the removing the information of overlapped elements.

geometry of each visual element in the drawing [12]. Liang

et al. [13] developed a solution for drawing retrieval based First, we segment the raster image into color regions. Then,

on Fonseca et al. approach [12], but using eight topological we apply a processing mask to separate each region using the

relationships and relevance feedback. pixel label matrix produced by the segmentation algorithm. We

use a contour finding algorithm based on [15] to get the region

Pu and Ramani, developed an approach that analyzes edges. To create the vector image, we iterate through each

drawings as a whole [14]. Authors proposed two methods to region edges to draw each region. After that, we fill it with the

describe drawings. One uses the 2.5D spherical harmonics and mean color calculated by mean shift clustering [16]. The vector

the other uses a 2D shape histogram. images are saved in the same format as the original images and

can be used instead to extract the topogeo descriptor.

Recently, Sousa e Fonseca improved their previous ap-

proach by including distance in the topology graph used to

describe the spatial arrangement of the visual elements of the IV. F EATURE E XTRACTION

drawing [2].

In this section we describe the methods used to obtain

III. C LIP A RT R ETRIEVAL S YSTEM image descriptor vectors. The descriptor vectors will be used

when comparing images in the retrieval system. The section

To provide our study, we build a clip art image retrieval sys- starts presenting and discussing some pre-processing methods

tem that is used in our experiments. The system is composed that were applied and describes the information extracted in

by three components (see Fig. 1): (1) database, (2) feature raster and in vector images.A. Pre-processing D. Topology and Geometry Features

Before any information is extracted from the images we To describe the clip arts in the vectorial format we decided

apply several transformations to enhance our algorithms per- to use Sousa and Fonseca solution [2]. Their approach uses

formance. Some clip arts have large backgrounds and the a topology graph to describe the spatial arrangement of the

clip art drawing is not centered. To remove the irrelevant drawing, where visual elements are represented as nodes and

background we build a bounding box around the clip art topological relationships of inclusion and adjacency are coded

drawing and a new image is created with all data inside it. through the specification of links between the nodes. While

Thus, only the clip art drawing remains and the irrelevant these relationships are weakly discriminating, they do not

background is removed. We decided to use 400 pixels (keeping change with rotation and translation.

image size ratio) as maximum height and width in our system, To avoid the comparison of graphs during the retrieval pro-

as it offered a good trade off between image quality and cess, authors use the graph spectrum [22] to convert graphs into

performance. To smooth the image and remove any remaining multidimensional vectors (descriptors). To obtain the spectrum

artifacts we apply a median blur filter after. The system used, of the topology graph, authors compute the eigenvalues from

interprets transparent backgrounds as black. Some images its adjacency matrix.

include transparent backgrounds and large number of clip arts

include monochromatic images, as in Fig. 3. To solve this, we Combined with the topological information, authors also

detect the transparent background and fill it with a single color. extract the geometry of each visual element in the drawing

After testing some colors we decided to use the background to describe its content. In summary, we describe the vectorial

color that is visualized in figures 2b and 2c. representation of the clip art using the geometry of its entities

and their topological organization (topogeo descriptor).

V. I MAGE D ISTANCE M EASURING

B. Color Features

To retrieve images similar to the query, the descriptors

Based on previous work [17], two color descriptors are extracted from query images are compared with the descriptors

selected: color moments [6] and color regions [17]. This color stored in our database. As in our database, queries are made

moments descriptor extracts information of the image color using a raster or vector drawing representation of the clip art,

distribution in a 4 × 4 grid. For the color regions features, depending on the descriptors used to compare the images. To

we segment the image by color, using a segmentation system reduce statistical errors caused by the range of values of the

proposed in [18]. Then, it is created a descriptor for each descriptor vectors, each descriptor is normalized [17].

detected region. Finally, it is assigned a Bag-of- Features (BoF)

To compare the image query with the clip arts in the

descriptor [17] based on the regions present in the image.

database, a distance function between the descriptors vectors is

To compare the effects on performance, we downsampled computed. The process of comparing the query image with the

the color set on the color-based feature descriptors using a clip art images in the database is similar for each descriptor.

modified version of the downsampling algorithm described in After the distance to each vector is calculated it is divided by

[19]. The color set consists of twelve colors different from the the maximum distance found. We normalize the distances in

chosen background color. The choice of the colors is based this way, as part of the process that is used to combine image

in studies [20], [21] that suggest there are approximately 12 descriptors in queries. Thus, the distance used for the color

color which almost every culture can distinguish as different moments and Gabor filter bank is,

or ’Just-not the same’(JNS).

dist(IQ ; I)

d(IQ , I) = (1)

maxDistance

C. Texture Features and Point of Interest

where IQ and I represent the feature vector of the query

We decided to use texture and point of interest features and of a clip art image of the database respectively. The

besides color to compare the different descriptors and their function dist is the Manhattan distance when using color

performance on clip art images. Based on previous works [17], moments descriptor and the Euclidean distance for the Gabor

we select the texture features based on the Gabor filter bank filter bank. Concerning the features that are organized in BoFs,

and David Lowe’s Scale Invariant Feature Transform (SIFT) the cosine distance is applied.

[7]. We also use a bag-of-features strategy when applying the To calculate the distance between images using a combi-

SIFT descriptor [17]. nation of descriptors, we calculate their distance using each

descriptor separately (equation 1) and compute the average

value of each distance. Then, we divide the average value

by the maximum distance found to normalize it. Equation 2,

summarizes the distance function used when calculating the

distance between the query image and images in the database

using multiple descriptors.

(a) (b) (c)

n

1

P

Fig. 2. Color Reducing: 2a) original image; 2b) full color set; 2c) n di (IQ , I)

JNS color set. i=0

d(IQ , I) = (2)

maxdmetric. This measure combines the concepts of precision,

relevance ranking and recall. It is computed using equation

3,

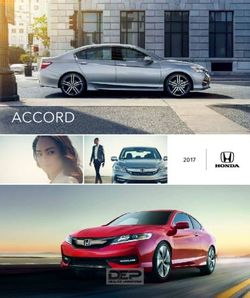

(a) Bulbs (b) Car (c) Fish (d) Flower n

P

[P @(r) × rel(Ir )]

r=1

AvgP = . (3)

total relevant images

(e) Hammer (f) Magn. Glass (g) Phone (h) Sword where n is the number of retrieved results, P @(r) symbol-

izes the ’precision at’ measure which refers to the precision of

the results until the rth position and rel(I) is a binary function

that returns 1 if the image is relevant and 0 otherwise. To

evaluate several queries we used the mean average precision

(i) Tree (j) TV (k) Misc (MAP), the average AvgP across all the queries. Table II shows

the MAP results obtained in this test by each descriptor: color

Fig. 3. Clip art categories in the database. moments (CM), Gabor filters features (Gbr), color regions

(CR) descriptor, SIFT descriptor, topology and geometry

TABLE I. C LIP ART TEST DATASETS .

features obtained from original images (TP) and from raster

Categories DataSet1 DataSet2 images (rTP).

Bulbs 10 41

Car 10 168

Fish 10 88 TABLE II. R ETRIEVAL MAP RESULTS .

Flower 10 309 Category CM Gbr CR SIFT TP rTP

Hammer 10 29 Bulbs 0,34 0,33 0,23 0,24 0,11 0,19

Magn. Glass 10 21 Car 0,37 0,57 0,32 0,23 0,28 0,16

Phone 10 50 Fish 0,20 0,24 0,25 0,21 0,17 0,20

Sword 10 53 Flower 0,31 0,26 0,22 0,23 0,12 0,13

Tree 10 73 Hammer 0,18 0,12 0,17 0,20 0,26 0,16

TV 10 165 Magn. Glass 0,38 0,18 0,18 0,19 0,17 0,16

Misc - 11888 Phone 0,49 0,48 0,52 0,21 0,22 0,18

Total 100 12885 Sword 0,62 0,41 0,41 0,31 0,21 0,20

NQueries 100 20 Tree 0,23 0,57 0,28 0,18 0,21 0,20

TV 0,52 0,24 0,26 0,37 0,16 0,14

Where n is the number of used descriptors and maxd is the Avg 0,36 0,34 0,28 0,24 0,19 0,17

maximum average distance of the combined descriptors. After

computing the distances, the results are ordered by ascending These individual results set our base of comparison. The

order of distance and returned to the user. original vectorial images (TP) showed similar results in almost

all the retrieval tests, when compared to the simplified vector

VI. E XPERIMENTS images created from the raster images (rTP). In comparison

with the raster descriptors, this descriptor had weaker results

To conduct our study we provide several test with the in almost every category except in the ”Hammer” category.

descriptors described in section IV, using two different col-

lections of clip arts. One with 100 images divided into 10 Among the raster descriptors the color moments obtained

categories (dataset1) and another with approximately 13000 the best results, achieving a MAP of 36%. This result was

images divided into 11 categories (dataset2). All the images obtained using the JNS color set. We decided to use it instead

were obtained from the OpenClipArt library1 . To find the better of the full color set based on a set of previous experiments. In

combination of descriptors, first, we tested each descriptor these experiments, the JNS color set increased the performance

separately and then with others. We evaluated every algorithm in approximately 2% in MAP.

globally and by category, the evaluation metrics and tests are

described in the next sections. The highest results we achieved were in the ”Sword”,

”Phone” and ”Tree” categories. This was due to two properties:

(1) most images in these categories have similar colors (a

A. Controlled dataset large number are monochromatic) and (2) the images had

Table I and Fig. 3 show the results of the manual label- lesser width than others allowing the descriptor to extract more

ing and examples of images in the data sets. The first test relevant information.

performed uses the dataset1 where each image was previously The BoF descriptor of color regions (CR) did not get such

classified into 10 different categories: Bulbs, Car, Fish, Flower, good results when compared with the CM descriptor. The

Hammer, Magnifying Glass, Phone, Sword, Tree and TV (see process of finding the correct number of codewords that make

figure 5). In this experiment, each image of this data set is up the codebook was difficult. Despite that, we still achieved

used as query-by example. our best results in the ”Fish” and ”Phone” categories, approx-

In order to include the position of the relevant clip arts in imately 25% and 52% MAP respectively, with this descriptor,

the results, we measured the results using the average precision using a codebook with 500 codewords. This measured a 4%

increase in MAP in both categories compared to the color

1 http://openclipart.org/ moments descriptor.We kept the default parameters in the texture descriptor

extraction algorithm using the Gabor filters [9]. We obtained

a MAP of 34%, very close to color moments descriptor,

the second best overall results in queries using only one

descriptor. It obtained the highest results in the ”Car” and

”Tree” categories with a MAP of approximately 57% in both

categories.

Finally, the SIFT BoF descriptors showed weaker results

than the texture filters extracted with the Gabor filters.

B. Large size dataset

To evaluate the performance of the algorithms with a larger

number of images, we built a more realistic dataset using a

collection with around 13000 images (dataset2). From each Fig. 4. Retrieval of 5 relevant images.

category of the dataset1, 2 images are removed to be used as

image query and the remaining ones are added to the dataset2 • Color moments and texture extracted with the Gabor

collection. These images were divided manually into the same filters.

categories as in the first test. However, the new collection is • Color moments and BoF from SIFT descriptors.

composed by a large number of random clip arts. Therefore,

we added a ”Misc” (Miscellaneous) category, to include all • Color moments and topogeo descriptor.

the images that do not belong to any of the former categories.

Table I shows the number of images that each category has These combinations categorize images using color, texture,

after each image in the collection was labeled. shape and topology. Then, we ran the two tests described in the

For the second test we used the R-precision metric to previous sections. In the first test, the first two combinations

evaluate our results. We chose 5 for the value R. To evaluate showed improved results, while adding the TP descriptors

across multiple queries we used the average R-precision of reduced the performance. Table IV shows the results obtained

each query. The results are shown in table III and figure 4. with larger database. The combination with the SIFT descrip-

tors decreased the performance while the combination with TP

TABLE III. AVERAGE R-P RECISION RESULTS FOR RETRIEVAL OF 5 improved the result obtained using only the CM descriptors.

RELEVANT IMAGES .

We tested three more combination, where we com-

Category CM Gbr SIFT TP rTP

Bulbs 0,027 0,003 0,002 0,005 0,004 bined three descriptors: CM/SIFT/TP, CM/Gbr/TP and

Car 0,061 0,337 0,049 0,009 0,031 CM/Gbr/rTP. In the first test, with the controlled database, the

Fish 0,007 0,012 0,008 0,015 0,008 CM/Gbr/TP combination had the second best result overall.

Flower 0,361 0,084 0,262 0,049 0,054

Hammer 0,007 0,003 0,006 0,003 0,014

When compared to the Color Moments/Gabor combination,

Magn. Glass 0,174 0,008 0,005 0,004 0,004 which was the best in the first test, this descriptor had almost

Phone 0,276 0,020 0,031 0,013 0,019 the same MAP, with 0,3 % less using the CM/Gbr/rTP

Sword 0,199 0,097 0,037 0,008 0,004

Tree 0,020 0,064 0,004 0,011 0,006

combination.

TV 0,193 0,073 0,066 0,015 0,015

Avg 0,13 0,07 0,05 0,02 0,01 In the second test (see table IV), the combination

CM/Gbr/TP scored our best results overall, with the highest

The results we obtained on this test were weaker but the average R-precision, as you can see in Fig. 4. We also tested

performance of the descriptors was analogous with the results this algorithm with the simplified vector images. This version

obtained in the previous test. The color moments descriptor of the algorithm had almost the same average R-precision of

achieved the best results overall once again. Also the second the combination that use the original vector drawings. It also

best result continued to be the Gabor filter bank descriptor. achieved better results in 5 categories, as we can se in table

The greatest decrease in performance was seen in the BoF IV. This combination has the advantage of to avoid the need of

descriptor made from SIFT descriptors. Compared to the other two copies of the query image, because it generates the vector

raster descriptors the SIFT descriptor obtained the weakest images from the raster versions.

results in most categories besides the ”Flower” category.

Our results showed that the combination of certain descrip-

The simplified vector images showed improved results. tors representing distinct characteristics improved the retrieval

Comparing to the descriptors extracted from the original vector results. The higher degree of information makes the approach

images, the difference between the average values obtained to be more robust in a database with a larger number of

in the second test is minimal, but we could see significant images. We could have used a shape descriptor to extract

improvements in the ”TV” and ”Sword” categories. information from raster images, like the ART but it does not

extract topology information. Besides, the results showed by

C. Descriptor Combination the topology and geometry extracted from the original vector

images and those created by our vectorization of raster images,

To compensate the disadvantages found in some algorithms had similar results. This means that it is possible to retrieve

we combined the following pairs of descriptors: clip art images using only the raster version.TABLE IV. AVERAGE R-P RECISION RESULTS WITH COMBINATION OF DESCRIPTORS FOR RETRIEVAL OF 5 RELEVANT IMAGES .

Category CM/Gbr CM/SIFT CM/TP CM/SIFT/TP CM/Gbr/TP CM/Gbr/rTP

Bulbs 0,008 0,009 0,039 0,015 0,012 0,009

Car 0,387 0,066 0,084 0,088 0,364 0,366

Fish 0,009 0,008 0,006 0,008 0,010 0,010

Flower 0,482 0,340 0,443 0,384 0,548 0,477

Hammer 0,005 0,004 0,008 0,007 0,005 0,006

Magn. Glass 0,265 0,042 0,279 0,047 0,323 0,335

Phone 0,127 0,099 0,290 0,106 0,209 0,275

Sword 0,356 0,099 0,264 0,135 0,342 0,414

Tree 0,048 0,015 0,024 0,017 0,052 0,040

TV 0,212 0,184 0,100 0,163 0,256 0,153

Avg 0,19 0,09 0,15 0,10 0,21 0,21

VII. C ONCLUSIONS [8] J. Huang, S. R. Kumar, M. Mitra, W.-J. Zhu, and R. Zabih,

“Image indexing using color correlograms,” in Proceedings of the

While other approaches have used only one image format 1997 Conference on Computer Vision and Pattern Recognition

to retrieve clip arts, we presented a study which shows that (CVPR ’97), ser. CVPR ’97. Washington, DC, USA: IEEE

Computer Society, 1997, pp. 762–. [Online]. Available: http:

a solution based on the combination of information extracted //dl.acm.org/citation.cfm?id=794189.794514

from raster and vector drawings has better results. We used

[9] B. S. Manjunath and W. Y. Ma, “Texture features for browsing

different algorithms to extract features from clip arts. We also and retrieval of image data,” IEEE Trans. Pattern Anal. Mach.

developed a vectorization algorithm to convert raster images Intell., vol. 18, no. 8, pp. 837–842, Aug. 1996. [Online]. Available:

into vector images. We evaluated the different algorithms using http://dx.doi.org/10.1109/34.531803

two collections of clip arts (100 and 13000 images). The [10] M. Bober, “Mpeg-7 visual shape descriptors,” IEEE Trans. Cir. and

study shows minimal diferences in terms of retrieval results Sys. for Video Technol., vol. 11, no. 6, pp. 716–719, Jun. 2001.

[Online]. Available: http://dx.doi.org/10.1109/76.927426

when using the vectorial images obtained from raster. This

means, we can apply this strategy to other databases (different [11] J. Park and B. Um, “A New Approach to Similarity Retrieval of

2D Graphic Objects Based on Dominant Shapes,” Pattern Recognition

from clip art). We achieved the highest results in our tests, by Letters, vol. 20, pp. 591–616, 1999.

combining color moments, texture extracted with the Gabor [12] M. J. Fonseca, A. Ferreira, and J. A. Jorge, “Content-Based Retrieval of

filter bank and the topology and geometry. This combination Technical Drawings,” International Journal of Computer Applications

uses more descriptors characterizing the images according in Technology (IJCAT), vol. 23, no. 2–4, pp. 86–100, 2005.

to different features at the same time. If we improve on [13] S. Liang, Z.-X. Sun, B. Li, and G.-H. Feng, “Effective sketch retrieval

the algorithms we used or use another descriptor for similar based on its contents,” in Proceedings of Machine Learning and

features we could probably improve our results even further. Cybernetics, vol. 9, 2005, pp. 5266–5272.

[14] J. Pu and K. Ramani, “On visual similarity based 2d drawing retrieval,”

Journal of Computer Aided Design, vol. 38, no. 3, pp. 249–259, 2006.

ACKNOWLEDGMENT [15] S. Suzuki and K. Be, “Topological structural analysis of digitized

binary images by border following,” Computer Vision, Graphics, and

This work was supported in part by national funds through Image Processing, vol. 30, no. 1, pp. 32–46, Apr. 1985. [Online].

FCT - Fundação para a Ciência e a Tecnologia, under project Available: http://dx.doi.org/10.1016/0734-189X(85)90016-7

PEst - OE/EEI/LA0021/2011 and project Crush PTDC/EIA- [16] D. Comaniciu and P. Meer, “Mean shift: A robust approach toward

feature space analysis,” IEEE Transactions on Pattern Analysis and

EIA/108077/2008. Machine Intelligence, vol. 24, pp. 603–619, 2002.

[17] R. Jesus, R. Dias, R. Frias, A. Abrantes, and N. Correia, “Sharing

personal experiences while navigating in physical spaces,” in 5th Work-

R EFERENCES shop on Multimedia Information Retrieval, ACM SIGIR Conference on

[1] R. Datta, D. Joshi, J. Li, and J. Z. Wang, “Image retrieval: Research and Development in information Retrieval (SIGIR07), 2007.

Ideas, influences, and trends of the new age,” ACM Comput. [18] C. M. Christoudias, “Synergism in low level vision,” in Proceedings of

Surv., vol. 40, no. 2, pp. 5:1–5:60, May 2008. [Online]. Available: the 16 th International Conference on Pattern Recognition (ICPR’02)

http://doi.acm.org/10.1145/1348246.1348248 Volume 4 - Volume 4, ser. ICPR ’02. Washington, DC, USA:

[2] P. Sousa and M. J. Fonseca, “Sketch-based retrieval of drawings using IEEE Computer Society, 2002, pp. 40 150–. [Online]. Available:

spatial proximity,” Journal of Visual Languages and Computing (JVLC), http://dl.acm.org/citation.cfm?id=846227.848599

vol. 21, no. 2, pp. 69–80, April 2010. [19] J. C. Amante and M. J. Fonseca, “Fuzzy color space segmentation

to identify the same dominant colors as users.” in 18th International

[3] J. R. Smith and S.-F. Chang, “Visualseek: a fully automated

Conference on Distributed Multimedia Systems (DMS’12). Miami

content-based image query system,” in Proceedings of the fourth

Beach, USA: Knowledge Systems Institute, 2012, pp. 48–53.

ACM international conference on Multimedia, ser. MULTIMEDIA ’96.

New York, NY, USA: ACM, 1996, pp. 87–98. [Online]. Available: [20] B. Berlin and P. Kay, Basic Color Terms: Their Universality and

http://doi.acm.org/10.1145/244130.244151 Evolution. University of California Press, 1969. [Online]. Available:

http://books.google.pt/books?id=xatoCJbhifkC

[4] F. Pereira and R. Koenen, “Mpeg-7: A standard for multimedia content

description,” Int. J. Image Graphics, vol. 1, no. 3, pp. 527–546, 2001. [21] E. Y. Chang, B. Li, and C. Li, “Toward perception-based image

retrieval,” in Proceedings of the IEEE Workshop on Content-based

[5] M. J. Swain and D. H. Ballard, “Color indexing,” Int. J. Comput.

Access of Image and Video Libraries (CBAIVL’00), ser. CBAIVL ’00.

Vision, vol. 7, no. 1, pp. 11–32, Nov. 1991. [Online]. Available:

Washington, DC, USA: IEEE Computer Society, 2000, pp. 101–.

http://dx.doi.org/10.1007/BF00130487

[Online]. Available: http://dl.acm.org/citation.cfm?id=791222.791948

[6] M. A. Stricker and M. Orengo, “Similarity of color images,” in Storage [22] D. Cvetković, P. Rowlinson, and S. S. c, Eigenspaces of Graphs.

and Retrieval for Image and Video Databases (SPIE)’95. SPIE, 1995, Cambridge University Press, United Kingdom, 1997.

pp. 381–392.

[7] D. G. Lowe, “Distinctive image features from scale-invariant keypoints,”

Int. J. Comput. Vision, vol. 60, no. 2, pp. 91–110, Nov. 2004. [Online].

Available: http://dx.doi.org/10.1023/B:VISI.0000029664.99615.94You can also read