Generalized Few-Shot Semantic Segmentation

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Generalized Few-Shot Semantic Segmentation Zhuotao Tian† Xin Lai† Li Jiang† Michelle Shu‡ Hengshuang Zhao§ Jiaya Jia† † ‡ § The Chinese University of Hong Kong Cornell University University of Oxford Abstract the situation where only a few labeled data of novel classes arXiv:2010.05210v1 [cs.CV] 11 Oct 2020 are available. Without fine-tuning, both support and query Training semantic segmentation models requires a large samples are sent to FS-Seg models to yield query samples amount of finely annotated data, making it hard to quickly predictions based on the information provided by support adapt to novel classes not satisfying this condition. Few-Shot samples. Segmentation (FS-Seg) tackles this problem with many con- There are several inherent limitations to apply FS-Seg straints. In this paper, we introduce a new benchmark, called in practice. First, FS-Seg requires support samples to con- Generalized Few-Shot Semantic Segmentation (GFS-Seg), to tain classes that exist in query samples. It may be overly analyze the generalization ability of segmentation models strong in many situations to have this prior knowledge. Also, to simultaneously recognize novel categories with very few providing support samples in the same classes requires man- examples as well as base categories with sufficient examples. ual selection. Second, FS-Seg models are only evaluated Previous state-of-the-art FS-Seg methods fall short in GFS- on novel classes, while test samples in normal semantic Seg and the performance discrepancy mainly comes from the segmentation may contain both the base and novel classes. constrained training setting of FS-Seg. To make GFS-Seg Experiments in Section 5 show that SOTA FS-Seg models tractable, we set up a GFS-Seg baseline that achieves de- can not well tackle the practical situation of evaluation on cent performance without structural change on the original both base and novel classes due to the constraints of FS-Seg. model. Then, as context is the key for boosting performance With these facts, in this paper, we set up a new benchmark, on semantic segmentation, we propose the Context-Aware called Generalized Few-Shot Semantic Segmentation (GFS- Prototype Learning (CAPL) that significantly improves per- Seg). The difference between GFS-Seg and FS-Seg is that, formance by leveraging the contextual information to update during evaluation, GFS-Seg does not require forwarding class prototypes with aligned features. Extensive experi- support samples that contain the same target classes in the ments on Pascal-VOC and COCO manifest the effectiveness test (query) samples to make the prediction. GFS-Seg can of CAPL, and CAPL also generalizes well to FS-Seg. also perform well on novel classes without sacrificing the accuracy on base classes when making predictions on them simultaneously, achieving the essential step towards practical use of semantic segmentation in more challenging situations. 1. Introduction Inspired by [13, 27], we design a baseline for GFS-Seg The development of deep learning has provided signifi- with decent performance. Considering that contextual re- cant performance gain to semantic segmentation tasks. Rep- lation is essential for semantic segmentation, we further resentative semantic segmentation methods [54, 4] have ben- propose the Context-Aware Prototype Learning (CAPL) that efited a wide range of applications for robotics, automatic provides significant performance gain to the baseline by up- driving, medical imaging, etc. However, once these frame- dating the weights of base prototypes with aligned features. works are trained, without sufficient fully-labeled data, they The baseline method and the proposed CAPL can be applied are unable to deal with unseen classes in new applications. to normal semantic segmentation models, e.g., PSPNet [54] Even if the required data of novel classes are ready, fine- and DeepLab [4]. Also, CAPL demonstrates its effectiveness tuning still costs additional time and resources. in the setting of FS-Seg by improving PANet[41] by a large In order to fast adapt to novel classes with only limited margin. Our overall contribution is threefold. labeled data, Few-Shot Segmentation (denoted as FS-Seg) • We extend the classic Few-Shot Segmentation (FS- [31] models are trained on well-labeled base classes and Seg) and propose a more practical setting – Gener- then tested on previously unseen novel classes. As shown alized Few-Shot Semantic Segmentation (GFS-Seg). in Figure 1, during training, FS-Seg divides data into the support and query sets. Samples of support set aim to pro- • We design the first baseline for GFS-Seg that yields vide FS-Seg models with target class information to identify decent performance without major structural change target regions in query samples, with the purpose to mimic on normal semantic segmentation models. We also 1

show that previous SOTA FS-Seg models can not well data augmentation helps models achieve better performance

tackle the task of GFS-Seg. by creating more training examples [51, 15, 42].

• We propose the Context-Aware Prototype Learning Few-Shot Segmentation (FS-Seg) places semantic seg-

(CAPL) that brings significant performance gains to mentation in the few-shot scenario [31, 33, 25, 28, 48, 17, 45,

baseline models in both settings of GFS-Seg and FS- 12, 9, 56, 2, 34, 46, 23, 37, 11, 24, 38, 43, 40, 20, 52], where

Seg, and it is general to be applied to various normal dense pixel labeling is performed on new classes with only

semantic segmentation models. a few support samples. OSLSM [31] first introduces this

setting in segmentation and provides a solution by yielding

2. Related Work weights of the final classifier for each query-support pair dur-

ing evaluation. The idea of the prototype was used in PL [7]

Semantic Segmentation Semantic segmentation is a fun- where predictions are based on the cosine similarity between

damental while challenging topic that asks models to accu- pixels and the prototypes. Additionally, prototype alignment

rately predict the label for each pixel. FCN [32] is the first regularization was introduced in PANet [41]. Predictions

framework designed for semantic segmentation by replac- can be also generated by convolutions. PFENet [38] uses

ing the last fully-connected layer in a classification network the prior knowledge from pre-trained backbone to help find

with convolution layers. To get per-pixel predictions, some the region of the interest, and the spatial inconsistency be-

encoder-decoder style approaches [26, 1, 29] are adopted to tween the query and support samples is alleviated by Feature

help refine the outputs step by step. The receptive field is vi- Enrichment Module (FEM). CANet [49] concatenates the

tal for semantic segmentation thus dilated convolution [4, 47] support and query feature and applies Iterative Optimization

is introduced to enlarge the receptive field. Context infor- Module (IOM) to refine prediction. PGNet [48] proposes

mation plays a important role for semantic segmentation Graph Attention Unit (GAU) that establishes the element-

and some context modeling architectures are introduced like to-element correspondence between the query and support

global pooling [22] and pyramid pooling [4, 54, 53, 44]. features in multiple scales.

Meanwhile, attention models [55, 50, 19, 18] are also shown Though FS-Seg models perform well on identifying novel

to be effective for capturing the long-range relationships classes given the corresponding support samples, as shown

inside scenes. Despite the success of these powerful segmen- in Section 5, even the state-of-the-art FS-Seg model can not

tation frameworks, they cannot be easily adapted to unseen well tackle the practical setting that contains both base and

classes without fine-tuning on sufficient annotated data. novel classes.

Few-Shot Learning Few-shot learning aims at making 3. Task Description

prediction on novel classes with only a few labeled exam-

ples. Popular solutions include meta-learning based methods We revisit previous few-shot segmentation followed by

[3, 10, 30] and metric-learning ones [39, 36, 35]. In addi- presenting our new benchmark – Generalized Few-Shot Se-

tion, data augmentation helps models achieve better perfor- mantic Segmentation.

mance by combating overfitting. Therefore, synthesizing

new training samples or features based on few labeled data 3.1. Classic Few-Shot Segmentation

is also a feasible solution for tackling the few-shot problem We call the task [31] classic Few-Shot Segmentation (FS-

[51, 15, 42]. Seg) where data is split into two sets for support S and

Generalized few-shot learning was proposed in [15]. In query Q. An FS-Seg model needs to make predictions on

the generalized few-shot setting, models are first trained on Q based on the class information provided by S, and it is

base classes (representation learning) and then a few labeled trained on base classes C b and tested on previously unseen

data of novel classes together with the base ones are used novel classes C n (C b ∩ C n = ∅). To help adapt to novel

to help models gather information from novel classes (low- classes, the episodic paradigm was proposed in [39] to train

shot learning). After these two training phases, models can and evaluate few-shot models. Each episode is formed by

predict labels from both base and novel classes on a set of a support set S and a query set Q of the same class c. In

previously unseen images (test set). The proposed GFS-Seg a K-shot task, the support set S contains K samples S =

is motivated by the generalized few-shot setting, but dense {S1 , S2 , ..., SK } of class c. Each support sample Si is a pair

pixel labeling in semantic segmentation is different with the of {ISi , MSi } where ISi and MSi are the support image and

single image classification that does not contain contextual label of c.

information. For the query set, Q = {IQ , MQ } where IQ is the input

query image and MQ is the ground truth mask of the class

Few-Shot Segmentation Few-shot learning aims at mak- c. The input data batch used for model training is the query-

ing prediction on novel classes with only a few labeled exam- support pair {IQ , S} = {IQ , IS1 , MS1 , ..., ISK , MSK }.

ples. Popular solutions include meta-learning based methods The ground truth mask MQ of the query image IQ is not ac-

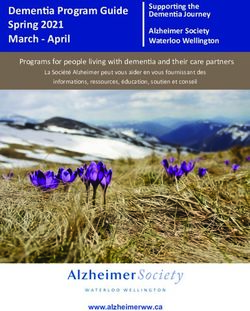

[3, 10, 30] and metric-learning ones [39, 36, 35, 13]. Besides, cessible for the model. It is to evaluate the prediction of theClassic 2-way K-shot Semantic Segmentation Masks Generalized K-shot Semantic Segmentation Construct one time for all test images.

Novel Class 1 Novel Class 2 (2 novel classes) Novel Class 1 Novel Class 2 Novel Class ! ( + , C)

Embedding

(2, C)

… Generation

Masks (all classes)

Embedding

Novel classes:

…

Generation

… Base classes:

1

Feature Extractor

Feature Extractor

K Classifier

1 Support Set ( , C) New Classifier

K Support Set

Dist

(H, W, 3) (H, W, 3)

Dist

(H, W, + )

(H, W, C) Query Image (H, W, C)

An Episode Query Image

(H, W, 2)

(a) (b)

Figure 1. Illustrations of (a) classic Few-Shot Segmentation (FS-Seg) and (b) Generalized Few-Shot Semantic Segmentation (GFS-Seg).

‘Dist’ can be any method measuring the distance/similarity between each feature and prototype and makes predictions based on that

distance/similarity. GFS-Seg models make predictions on base and novel classes simultaneously without being affected by redundant classes,

but FS-Seg models only predict novel classes provided by the support set.

query image in each episode. More details for the episodic To better distinguish between FS-Seg and GFS-Seg, we

training paradigm in FS-Seg are included in [31]. illustrate a 2-way K-shot task of FS-Seg and a case of GFS-

In summary, there are two key criteria in the classic Few- Seg with the same query image in Figure 1, where Cow and

Shot Segmentation task. (1) Samples of testing classes C n Motorbike are novel classes, and Person and Car are base

are not seen by the model during training. (2) The model classes.

requires its support samples to contain target classes existing FS-Seg model (Figure 1(a)) is limited to predicting binary

in query samples to make the predictions. segmentation masks only for the classes whose prototypes

are available in the episode. Person on the right and Car at

3.2. Generalized Few-Shot Semantic Segmentation the top are missing without providing prototypes, even if the

Criterion (1) of classic few-shot segmentation evaluates model is trained on these base classes for sufficient iterations.

model generalization ability to new classes with only a few In addition, if redundant novel classes that do not appear

provided samples. Criterion (2) makes this setting not prac- in the query image (e.g., Aeroplane) are provided by the

tical in many cases since users may not know exactly how support set of (a), they adversely affect performance because

many and what classes are contained in each test image. So FS-Seg has a prerequisite that the query images must contain

it is hard to feed the support samples that contain the same the classes provided by support samples.

classes as the query sample to the model. As shown in Section 5.2, FS-Seg models only learn to

In addition, even if users have already known that there predict the foreground masks for the given novel classes, the

are N classes contained in the test image, relation module performance is therefore much degraded in our proposed

[36] based FS-Seg models [49, 17] may need to process N K generalized setting where all possible base and novel classes

additional manually selected support samples to make pre- require prediction. Contrarily, GFS-Seg (Figure 1(b)) identi-

dictions over all possible classes for the test image. This is fies base and novel classes simultaneously without the prior

insufficient in real applications, where models are supposed knowledge of classes in each query image, and extra support

to output predictions of all classes in the test image directly classes (e.g., Aeroplane at the top-left of Figure 1(b)) do not

without processing N K additional images. affect the models.

In GFS-Seg, base classes C b have sufficient labeled train-

ing data. Each novel class cni ∈ C n only has limited K 4. Our Method

labeled data (e.g., K = 1, 5, 10). Similar to FS-Seg, mod-

els in GFS-Seg are first trained on base classes C b to learn In this section, we present the motivation and details

good representations. Then, when training is accomplished, of Context-Aware Prototype Learning (CAPL) that aims to

after acquiring the information of N novel classes from the tackle GFS-Seg. CAPL is built upon Prototype Learning

limited N K support samples, GFS-Seg models are evalu- (PL) [35, 7]. The idea of PL and the baseline for GFS-Seg

ated on images of the test set to predict labels from both are introduced first.

base and novel classes C b ∪ C n , rather than from only novel

classes C n in the setting of FS-Seg. Therefore the major 4.1. Prototype Learning in FS-Seg

evaluation metric of GFS-Seg is the mIoU of all classes. The In Few-Shot Segmentation (FS-Seg) frameworks [7, 41]

episodic paradigm is not feasible in GFS-Seg, since the input of a N -way K-shot FS-Seg task (N novel classes and each

data during evaluation is no longer the query-support pair novel class has K support samples), all support samples

{IQ , IS , MS } used in FS-Seg, and instead is only the query sij (i ∈ {1, 2, ..., N }, j ∈ {1, 2, ..., K}) are first processed

image IQ as testing common semantic segmentation models. by a feature extractor F and mask average pooling. Theyare then averaged over K shots to form N prototypes pi that can be utilized for further improvement. However, in

(i ∈ {1, 2, ..., N }). GFS-Seg, there is no such limitation on classes contained

i

in each test image – contextual cue always plays an impor-

K

◦ F (sij )]h,w

P

1 X h,w [mj tant role in semantic segmentation (e.g., PSPNet [54] and

pi = ∗ P i

, i ∈ {1, 2, ..., N }, (1)

K j=1 h,w [mj ]h,w Deeplab [6]), especially in the proposed setting (GFS-Seg).

For example, Dog and People are base classes. The

where mij ∈ Rh,w,1 is the class mask for class ci on learned base prototypes only capture the contextual rela-

F(sij ) ∈ Rh,w,c . sij represents the j-th support image of tion between Dog and People during training. If Sofa is a

class ci , and ◦ is the Hadamard Product. After acquiring N novel class and some instances of Sofa in support samples

prototypes, for query features of the feature map F(q), the appear with Dog (e.g., a dog is lying on the sofa), merely

predictions are the labels of the prototypes that they are clos- mask-pooling in each support sample of Sofa to form the

est to. The distance metric can be either Euclidean distance novel prototype may result in the base prototype of Dog

[35], cosine similarity[39], or relation module [36]. losing the contextual co-occurrence information with Sofa

and hence yield inferior results. Thus, for GFS-Seg, reason-

4.2. Baseline for GFS-Seg able utilization of contextual information is the key to better

FS-Seg only requires identifying targets from novel performance.

classes, but our generalized setting in GFS-Seg requires

predictions on both base and novel classes. However, it is Inference We propose the Context-Aware Prototype

hard and also inefficient for FS-Seg model to form proto- Learning (CAPL) to tackle GFS-Seg by effectively enriching

types for base classes via Eq. (1) by forwarding all samples the contextual information to the classifier. Let N b and N n

of base classes to the feature extractor, especially when the denote the total number of base C b and novel classes C n

training set is large. respectively. We use nb (nb 6 N b ) to denote the number of

The common semantic segmentation frameworks can be extra base classes cb,i ∈ C b (i ∈ {1, ..., nb }) contained in

decomposed into two parts of feature extractor and classifier. N n × K support samples of previously unseen novel classes

cn,u ∈ C n (u ∈ {1, ..., N n }). Before evaluation, the novel

Feature extractor projects input image into c-dimensional

space and then the classifier of size N b × c makes predic- prototypes are formed as Eq. (1). The final prototype pb,i for

tions on N b base classes. In other words, the classifier of base class cb,i is the weighted sum of the weights of original

b

size N b ×c can be seen as N b base prototypes (P b ∈ RN ,c ). classifier pb,i b,i

cls and the new base prototypes pf eat generated

Since forwarding all base samples to form the base proto- from support features, expressed as

types is impractical, inspired by low-shot learning methods PN n PK P i,u u

u=1 j=1 h,w [mj ◦ F (sj )]h,w

[13, 27], we learn the classifier via back-propagation during pb,i

f eat = PN n PK P i,u

,

training on base classes. u=1 j=1 h,w [mj ]h,w (3)

Specifically, our baseline for GFS-Seg is trained on base b n

i ∈ {1, ..., n }, u ∈ {1, ..., N }

classes as normal segmentation frameworks. After training,

n

the prototypes P n ∈ RN ,c for N n novel classes are formed pb,i = γ i ∗ pb,i i b,i

i ∈ {1, ..., nb },

cls + (1 − γ ) ∗ pf eat , (4)

as Eq. (1) with N × K support samples. P n and P b are

n

b n

then concatenated to form P all ∈ RN +N ,c to simulta- where mi,uj represents the binary mask for base class c

b,i

on

neously predict the base and novel classes. Since the dot F(sj ), and sj is the j-th support sample of novel class cn,u .

u u

product used by classifier of common semantic segmentation The dynamic weight γ balances the impact of the old and

frameworks produces different norm scales of F(sij ) that new base prototypes. To facilitate understanding, we provide

negatively impact the average operation in Eq. (1), like [41], a visual illustration of a 7-class 2-shot example in Figure 2.

we adopt cosine similarity as the distance metric d to yield We note that γ is data-dependent and its value is condi-

output O for pixels in query sample q ∈ Rh,w,3 as tioned on each pair of old and new prototypes as shown

in Figure 2(b). For base class cb,i , γ i is calculated as

exp(αd(F(qx,y ), pi ))

Ox,y = arg max P i

, (2) γ i = G(pb,i b,i

cls , pf eat ) where G is a two-layer Multilayer Per-

i pi ∈P all exp(αd(F(qx,y ), p ))

ceptron (MLP) (2c × c followed by c × 1, where c is the

where x ∈ {1, ..., h}, y ∈ {1, ..., w}, i ∈ {1, ..., N b + N n }, dimension number of pb,i b,i i

cls and pf eat ) that predicts γ based

and α is set to 10 in all experiments. on pb,i b,i

cls and pf eat with the sigmoid activation function. The

MLP is learned during training.

4.3. Context-Aware Prototype Learning (CAPL)

We further note that directly applying the weighted sum of

Motivation Prototype Learning (PL) is applicable to few- Pfbeat and Pcls

b

is difficult because the former is the averaged

shot classification and FS-Seg, but it works inferiorly for support features and the latter is by classifier’s weights, thus

GFS-Seg. In the setting of FS-Seg, target labels of query they are in different feature spaces. To make it tractable,

samples are only from novel classes. Thus there is no essen- we modify the normal training scheme by letting the feature

tial co-occurrence interaction between novel and base classes extractor F produce Pfbeat that is well-aligned with Pcls b

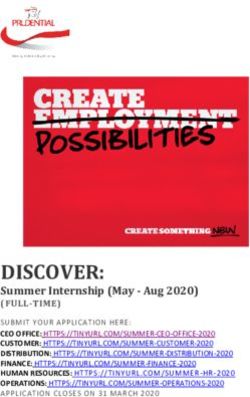

.cow motor New Classifier Generation of

Feature Extractor

(1~ ! )

Masked

Average

Pooling ( , C)

∗ ( − )

( + , C)

(Original Classifier)

Novel Class: ( , 2C)

motor cow ∗

Base Class:

person car

(1~ ! )

sheep bus

dog ( , 1) Adaptive

( ! + 1~ ! )

( , C) ( + , C)

Support Set

(a) New Classifier Formation (b) Generation

Figure 2. Visual illustration of the new classifier formation process before the inference phase of CAPL. As shown in (a), the weights

(prototypes) of Nn novel classes (e.g. motor and cow) are directly set by the averaged novel features. Also, the weights of nb base classes

(e.g. person, car, sheep and bus) that appear with novel classes in support samples are enriched by the weighted sum of averaged features

and the original weights. Finally, the weights of rest Nb − nb base classes (e.g. dog) are kept. The generation process of the adaptive

weighing factor γ is shown in (b).

Training Let B denote the training batch size and N b de- Because the updated classifier is based on both original

note the number of all base classes. We randomly select b B2 c learned classifier and features extracted from support sam-

training samples as the ‘Fake Support’ samples and the rest ples, the weights of base classes in the learned classifier are

samples as ‘Fake Query’ ones. Let Nfb denote the number of important. To help the model generate decent representation

base classes contained in ‘Fake Support’ samples. We ran- pb,i

f eat to form new classifiers without compromising the qual-

Nfb

domly select b 2 c as the ‘Fake Novel’ classes C

FN

and set ity of original classifier pb,i

cls , we use both Pcls and Pupdate to

b

the rest

N

Nfb − b 2f c as the ‘Fake Context’ classes C F C . The generate the training loss. Let Lcls denote the cross-entropy

FN FC (CE) loss of the output generated by the original classifier

selected C and C classes are both base classes, but Pcls , and let Lupdate represent the CE loss produced by the

they mimic the behavior of real novel and base classes during

updated weights Pupdate . The final training loss L is the

inference respectively. The formation of the updated pro-

average of Lcls and Lupdate .

totypes pb,i b b

update (i ∈ {1, 2, ..., Nf , ..., N }) during training

is

5. Experiments

γ ∗ pb,i b,i

i i

cls + (1 − γ ) ∗ pf eat ci ∈ C F C

5.1. Settings

pb,i

update = pb,i

f eat ci ∈ C F N

b,i

pcls Otherwise

The baseline model shown in this section is based on

(5) PSPNet [54] with ResNet-50 [16]. More experiments, e.g.,

Pb B2 c P i i DeepLab-V3[5], are shown in the supplementary file. Our

j=1 h,w [mj ◦ F (sj )]h,w experiments are conducted on Pascal-VOC [8] with aug-

pb,i

f eat = Pb B2 c P , ci ∈ C F C ∪ C F N

j=1

i

h,w [mj ]h,w

mented data from [14] and COCO [21]. Following Pascal-

(6) 5i [31], 20 classes in Pascal-VOC are evenly divided into

Specifically, ‘Fake Context’ classes C F C act as the base four splits and each split contains 5 classes to cross-validate

classes contained in support samples of novel classes during the performance of models. Specifically, for four splits:

b spliti = {5i − 4, ..., 5i}(i ∈ {1, 2, 3, 4}), when using one

testing. The features of ‘Fake Context’ classes update Pcls

with γ as shown in Eq. (5). Also, features of ‘Fake Novel’ split for providing novel classes, classes of the other three

classes C F N yield ‘Fake Novel’ prototypes via Eq. (6) splits and the background class {0} are treated as 16 base

that directly replace the learned weights of base classes in classes. Different from FS-Seg where evaluation is per-

b formed only on the novel classes of test images, GFS-Seg

Pcls . Finally, weights of the rest classes are kept as the

original ones. Following Eqs. (5) and (6), we get the updated models predict labels from both base and novel classes si-

classifier during training that helps the feature extractor learn multaneously on each image from the validation set.

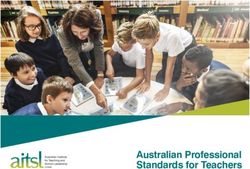

to provide the classifier-aligned features during inference. As the number of either base or novel classes increases,Novel Class: bus car chair cat cow

Figure 3. Visual comparison. Images from the top to bottom are input, ground truth, baseline and CAPL respectively. Novel classes are bus,

car, cat, chair and cow. The white color stands for the label ‘Do Not Care’.

the generalized few-shot task becomes more challenging. To – CANet is a relation-based model and PANet is a cosine-

further analyze the performance in the setting with more based model. Specifically, CANet uses convolutions as a

novel classes, we propose a variant of Pascal-5i : Pascal- relation module [36] to process the concatenated query and

10i that divides 20 classes into two splits and each has 10 support features to yield prediction on the query image, but

classes: spliti = {10i − 9, ..., 10i}(i ∈ {1, 2}). When PANet generates results by measuring the cosine similarity

using one split for providing 10 novel classes, classes of between every query feature and prototypes got from sup-

the other one split and the background class {0} are treated port samples. Though FS-Seg only requires evaluation of

as 11 base classes. Experiments on COCO-20i are also novel classes, if the prototypes (averaged features) of base

included because COCO is a rather challenging dataset with classes are available, the FS-Seg models should also be able

an overwhelming number of classes. Each split of COCO- to identify the regions of base classes in query images.

20i contains 60 base and 20 novel classes. We follow PANet We use the publicly available codes and follow the default

[41] to split COCO into four splits and report the averaged training configuration of these two frameworks. We modify

mIoU as the final performance. For stable results, we follow the inference code to feed all prototypes (base and novel)

[38] to evaluate 5,000 and 20,000 episodes on Pascal and for each query image. The base prototypes are formed by

COCO respectively. averaging the features belonging to base classes in all train-

In the following sections, we first apply FS-Seg models to ing samples. As shown in Table 1, CANet and PANet do

the proposed GFS-Seg setting and make comparisons with not perform well compared to our CAPL, especially on base

our model. Then, we manifest the effectiveness of CAPL classes. The performance gap between FS-Seg models and

by comparing the baseline with different training/inference our baseline in the setting of GFS-Seg is still obvious when

strategies. For each dataset, we take the average of all splits testing on Pascal-10i as shown in Table 1. The results of the

as the final performance. We note that the following ex- two representative FS-Seg models in Table 1 also indicate

perimental results are the averaged results of five different that the cosine-based models can be more effective than the

random seeds, i.e., {123, 321, 456, 654, 999}. Our code will relation-based ones in GFS-Seg.

be made publicly available. We note that the results of novel mIoU of PANet and

CANet in Table 1 are under the GFS-Seg setting and thus

5.2. Comparison with FS-Seg Models they are different from that in the setting of FS-Seg reported

in their papers. This discrepancy is caused by different

To show that models in the setting of FS-Seg are unable evaluation settings. In GFS-Seg, models are required to

to perform well when facing both base and novel classes, we identify all classes in a given testing image, including both

evaluate two representative FS-Seg frameworks (PANet [41] base and novel classes, while in FS-Seg, models only need

and CANet [49]) in the setting of GFS-Seg. to find the pixels belonging to one specific novel class with

The major difference between CANet and PANet is about a prior knowledge of what the target class is. Therefore,

‘Dist’ shown in Figure 1(a) relating to the method for pro- it is much harder to identify the novel classes under the

cessing query and support feature and making predictions interference of base classes in GFS-Seg.Table 1. Comparisons with FS-Seg models in GFS-Seg. Base: mIoU results of all base classes. Novel: mIoU results of all novel classes. Total: mIoU results of all (base + novel) classes. Pascal-5i Pascal-10i Methods Shot Base Novel Total Base Novel Total CANet 1 8.73 2.42 7.23 9.59 1.79 5.88 PANet 1 31.88 11.25 26.97 26.27 10.79 18.90 CAPL 1 63.98 16.44 52.66 52.10 15.04 34.45 CANet 5 9.05 1.52 7.26 9.55 1.66 5.79 PANet 5 32.95 15.25 28.74 28.75 15.40 22.39 CAPL 5 65.66 20.23 54.84 55.45 19.01 38.10 CANet 10 8.96 1.57 7.20 9.42 2.09 5.93 PANet 10 32.96 16.26 28.98 28.87 16.08 22.78 CAPL 10 65.62 23.32 55.55 55.90 19.07 38.37 More specifically, the main reason that FS-Seg models ing because models need to be more discriminative for both fall short is that the episodic training scheme of FS-Seg only base and novel classes to make accurate predictions – more focuses on adapting models to be discriminative between novel classes is detrimental to the predictions on base classes background and foreground, where the decision boundary due to the additional noise brought by the limited support for each episode only lies between one target class and the samples, and more base classes overwhelms the limited infor- background in each query sample. Also, FS-Seg requires mation of novel classes. Results in Table 2 and 3 still demon- query images to contain the classes provided by support sam- strate the robustness of the proposed method in these cases. ples, while GFS-Seg distinguishes between not only multiple Also, as the shot number increases, more co-occurrence in- novel classes but also all possible base classes simultane- formation can be captured and therefore CAPL can bring ously without the prior knowledge of what classes are con- more performance gain to the baseline. tained in query samples. The reproduced results of CANet and PANet in FS-Seg are placed in the supplementary. 5.4. Apply CAPL to FS-Seg 5.3. Effectiveness of CAPL FS-Seg is an extreme case of GFS-Seg. As PANet[41] To verify our proposed CAPL, we make comparisons is a cosine-based FS-Seg model, to validate the proposed with the baseline in the settings of different shots (i.e., 1, 5 CAPL in the setting of FS-Seg, in Table 4, we implement and 10) and different datasets. CAPL as an auxiliary loss to train the PANet with ResNet- Since the training of CAPL is modified to pick ‘Fake 50 and CAPL brings significant improvement (PANet-C) to Novel’ and ‘Fake Context’ samples to align the averaged fea- the PANet by serving as an inter-class regularizer that helps ture and learned weights for building prototypes, we modify model be more robust to different contexts. the training scheme of the baseline (denoted as ‘CAPL-Tr’) accordingly for a fair comparison. Because the baseline only replaces the novel prototypes during the evaluation, there- 5.5. Comparison with AMP fore for CAPL-Tr, only ‘Fake Novel’ is sampled and used to replace the base prototypes during training. ‘CAPL-Te’ in Adaptive Masked Proxies (AMP) [34] has achieved the table represents that only the inference strategy of CAPL promising performance on FS-Seg by fusing new proxies is performed on the baseline and there is no modification to with the previously learned class signatures. Both CAPL the training phase. We set γ to the converged mean values and AMP use weight imprinting and weighted sum for base of CAPL models for CAPL-Te models. class representation refinement. However, there are two in- The results in Table 2 show that the training and inference herent differences: 1) AMP involves an additional search strategies of CAPL complement each other – both are indis- for a fixed α shared by weighted summations of all classes, pensable. CAPL-Tr brings minor improvement to the base- while our weighting factor is adaptive to different classes line with only the training alignment is implemented, and because it is conditioned on the input prototypes without CAPL-Te proves that, without the proposed training strategy, additional searching epochs. 2) The weighted summation is the inference method of CAPL produces sub-optimal results only performed at the inference stage in AMP and the model due to misalignment between the original classifier and new is trained in a normal fashion, but our model meta-learns weights obtained by averaging support features. the behavior during training to better accomplish the context Either more novel classes (11 base and 10 novel classes) enrichment during inference. Therefore AMP is between in Pascal-10i or more base classes (60 base and 20 novel our baseline and CAPL-Te and is less competitive to CAPL. classes) in COCO-20i can make this setting more challeng- More experiments are shown in the supplementary.

Table 2. Ablation study of CAPL in GFS-Seg. Base: mIoU results of all base classes. Novel: mIoU results of all novel classes. Total: mIoU results of all (base + novel) classes. Pascal-5i Pascal-10i Methods Shot Base Novel Total Base Novel Total Baseline 1 62.57 14.02 50.99 51.05 13.68 33.25 CAPL-Tr 1 62.79 15.53 51.54 49.91 14.10 32.86 CAPL-Te 1 63.43 14.09 51.68 51.38 13.30 33.25 CAPL 1 63.98 16.44 52.66 52.10 15.04 34.45 Baseline 5 64.04 17.01 52.96 52.40 16.44 35.32 CAPL-Tr 5 64.29 19.60 53.65 52.17 17.17 35.5 CAPL-Te 5 64.80 17.01 53.42 55.18 16.80 36.90 CAPL 5 65.66 20.23 54.84 55.45 19.01 38.10 Baseline 10 63.76 18.63 53.01 52.28 17.60 35.77 CAPL-Tr 10 63.52 21.08 53.41 51.93 19.41 36.45 CAPL-Te 10 65.02 18.76 54.00 55.04 17.89 37.35 CAPL 10 65.62 23.32 55.55 55.90 19.07 38.37 Table 3. The effectiveness of CAPL on COCO-20i in GFS-Seg. Table 5. Comparison with baseline model on base classes. Splits PANet-C: PANet implemented with CAPL. Base: mIoU results of from Pascal-10i are marked with ? and the other splits without ? all base classes. Novel: mIoU results of all novel classes. Total: are from Pascal-5i . The mIoU results of base classes are shown in mIoU results of all (base + novel) classes. this table. Methods Shot Base Novel Total Methods Split-1 Split-2 Split-3 Split-4 Split-1? Split-2? PANet 1 11.39 9.69 10.97 Baseline 70.22 62.37 60.78 70.99 53.15 58.09 PANet-C 1 11.56 17.13 15.94 CAPL 70.57 62.04 60.86 71.39 53.37 57.03 Baseline 1 39.21 8.20 31.56 CAPL 1 43.51 10.56 35.38 PANet 5 11.68 12.62 11.91 PANet-C 5 16.00 19.04 16.75 5.7. Comparison on Base Classes Baseline 5 38.73 8.08 31.16 To demonstrate that our proposed CAPL does not affect CAPL 5 42.30 9.67 34.24 the base class training, we show comparison on base classes PANet 10 11.74 12.78 11.99 with the baseline model in Table 5. Both two models are PANet-C 10 16.07 19.37 16.88 evaluated without support images, therefore the mIoU results Baseline 10 37.46 5.62 29.60 for novel classes are zero and we only show the mIoU results CAPL 10 40.20 6.11 31.79 of base classes. Based on results shown in Table 5, we can conclude that CAPL can not only quickly adapt to previously unseen novel classes as shown in other experiments, but it Table 4. Class mIoU results in FS-Seg. also yields robust results on base classes. Methods Shot Pascal-5i Pascal-10i COCO-20i PANet 1 49.49 46.69 26.17 PANet-C 1 53.91 53.70 31.39 6. Conclusion PANet 5 56.56 53.24 33.51 PANet-C 5 60.30 61.14 36.34 We have presented the new benchmark of Generalized Few-Shot Semantic Segmentation (GFS-Seg) with a novel solution – Context-Aware Prototype Learning (CAPL). Dif- 5.6. Visual Illustration ferent from the classic Few-Shot Segmentation (FS-Seg), GFS-Seg aims at identifying both base and novel classes Visual examples in GFS-Seg are shown in Figure 3. The that recent state-of-the-art FS-Seg models fall short. Our major issue of the baseline is that the results are more likely proposed CAPL helps baseline gain significant performance to be negatively affected by other prototypes of classes that improvement by enriching the context information brought are not in the test image, especially for predictions near the by novel classes to the base classes with aligned features. boundary area. With enriched context information, CAPL CAPL has no structural constraints on the base model and yields less false predictions than the baseline. Both our base- thus it can be easily applied to normal semantic segmenta- line method and CAPL can be easily applied to any normal tion frameworks. CAPL also generalizes well to the classic semantic segmentation model without structural constraints. setting of FS-Seg.

References [19] Haijie Tian Yong Li Yongjun Bao Zhiwei Fang and Han- qing Lu Jun Fu, Jing Liu. Dual attention network for scene [1] Vijay Badrinarayanan, Alex Kendall, and Roberto Cipolla. segmentation. In CVPR, 2019. 2 Segnet: A deep convolutional encoder-decoder architecture [20] Xiang Li, Tianhan Wei, Yau Pun Chen, Yu-Wing Tai, and Chi- for image segmentation. TPAMI, 2017. 2 Keung Tang. FSS-1000: A 1000-class dataset for few-shot [2] Ayan Kumar Bhunia, Ankan Kumar Bhunia, Shuvozit Ghose, segmentation. In CVPR, 2020. 2 Abhirup Das, Partha Pratim Roy, and Umapada Pal. A deep [21] Tsung-Yi Lin, Michael Maire, Serge J. Belongie, James Hays, one-shot network for query-based logo retrieval. PR, 2019. 2 Pietro Perona, Deva Ramanan, Piotr Dollár, and C. Lawrence [3] Qi Cai, Yingwei Pan, Ting Yao, Chenggang Yan, and Tao Mei. Zitnick. Microsoft COCO: common objects in context. In Memory matching networks for one-shot image recognition. ECCV, 2014. 5 In CVPR, 2018. 2 [22] Wei Liu, Andrew Rabinovich, and Alexander C. Berg. [4] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Parsenet: Looking wider to see better. arXiv, 2015. 2 Kevin Murphy, and Alan L. Yuille. Deeplab: Semantic image [23] Weide Liu, Chi Zhang, Guosheng Lin, and Fayao Liu. Cr- segmentation with deep convolutional nets, atrous convolu- net: Cross-reference networks for few-shot segmentation. In tion, and fully connected crfs. TPAMI, 2018. 1, 2 CVPR, 2020. 2 [5] Liang-Chieh Chen, George Papandreou, Florian Schroff, and [24] Yongfei Liu, Xiangyi Zhang, Songyang Zhang, and Xuming Hartwig Adam. Rethinking atrous convolution for semantic He. Part-aware prototype network for few-shot semantic image segmentation. arXiv, 2017. 5 segmentation. In ECCV, 2020. 2 [6] Liang-Chieh Chen, Yukun Zhu, George Papandreou, Florian [25] Khoi Nguyen and Sinisa Todorovic. Feature weighting and Schroff, and Hartwig Adam. Encoder-decoder with atrous boosting for few-shot segmentation. In ICCV, 2019. 2 separable convolution for semantic image segmentation. In [26] Hyeonwoo Noh, Seunghoon Hong, and Bohyung Han. Learn- ECCV, 2018. 4 ing deconvolution network for semantic segmentation. In ICCV, 2015. 2 [7] Nanqing Dong and Eric P. Xing. Few-shot semantic segmen- [27] Hang Qi, Matthew Brown, and David G. Lowe. Low-shot tation with prototype learning. In BMVC, 2018. 2, 3 learning with imprinted weights. In CVPR, 2018. 1, 4 [8] Mark Everingham, Luc Van Gool, Christopher K. I. Williams, [28] Kate Rakelly, Evan Shelhamer, Trevor Darrell, Alexei A. John M. Winn, and Andrew Zisserman. The pascal visual Efros, and Sergey Levine. Few-shot segmentation propagation object classes (VOC) challenge. IJCV, 2010. 5 with guided networks. arXiv, 2018. 2 [9] Zhibo Fan, Jin-Gang Yu, Zhihao Liang, Jiarong Ou, Changxin [29] Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Gao, Gui-Song Xia, and Yuanqing Li. FGN: fully guided Convolutional networks for biomedical image segmentation. network for few-shot instance segmentation. In CVPR, 2020. In MICCAI, 2015. 2 2 [30] Andrei A. Rusu, Dushyant Rao, Jakub Sygnowski, Oriol [10] Chelsea Finn, Pieter Abbeel, and Sergey Levine. Model- Vinyals, Razvan Pascanu, Simon Osindero, and Raia Hadsell. agnostic meta-learning for fast adaptation of deep networks. Meta-learning with latent embedding optimization. In ICLR, In ICML, 2017. 2 2019. 2 [11] Siddhartha Gairola, Mayur Hemani, Ayush Chopra, and Balaji [31] Amirreza Shaban, Shray Bansal, Zhen Liu, Irfan Essa, and Krishnamurthy. Simpropnet: Improved similarity propagation Byron Boots. One-shot learning for semantic segmentation. for few-shot image segmentation. In IJCAI, 2020. 2 In BMVC, 2017. 1, 2, 3, 5 [12] Naiyu Gao, Yanhu Shan, Yupei Wang, Xin Zhao, Yinan Yu, [32] Evan Shelhamer, Jonathan Long, and Trevor Darrell. Fully Ming Yang, and Kaiqi Huang. SSAP: single-shot instance convolutional networks for semantic segmentation. TPAMI, segmentation with affinity pyramid. In ICCV, 2019. 2 2017. 2 [13] Spyros Gidaris and Nikos Komodakis. Dynamic few-shot [33] Mennatullah Siam and Boris N. Oreshkin. Adaptive masked visual learning without forgetting. In CVPR, 2018. 1, 2, 4 weight imprinting for few-shot segmentation. In ICCV, 2019. 2 [14] Bharath Hariharan, Pablo Arbelaez, Lubomir D. Bourdev, [34] Mennatullah Siam, Boris N. Oreshkin, and Martin Jägersand. Subhransu Maji, and Jitendra Malik. Semantic contours from AMP: adaptive masked proxies for few-shot segmentation. In inverse detectors. In ICCV, 2011. 5 ICCV, 2019. 2, 7 [15] Bharath Hariharan and Ross B. Girshick. Low-shot visual [35] Jake Snell, Kevin Swersky, and Richard S. Zemel. Prototypi- recognition by shrinking and hallucinating features. In Hallu- cal networks for few-shot learning. In NeurIPS, 2017. 2, 3, cinating, 2017. 2 4 [16] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. [36] Flood Sung, Yongxin Yang, Li Zhang, Tao Xiang, Philip H. S. Deep residual learning for image recognition. In CVPR, 2016. Torr, and Timothy M. Hospedales. Learning to compare: 5 Relation network for few-shot learning. In CVPR, 2018. 2, 3, [17] Tao Hu, Pengwan Yang, Chiliang Zhang, Gang Yu, Yadong 4, 6 Mu, and Cees G. M. Snoek. Attention-based multi-context [37] Pinzhuo Tian, Zhangkai Wu, Lei Qi, Lei Wang, Yinghuan guiding for few-shot semantic segmentation. In AAAI, 2019. Shi, and Yang Gao. Differentiable meta-learning model for 2, 3 few-shot semantic segmentation. In AAAI, 2020. 2 [18] Zilong Huang, Xinggang Wang, Lichao Huang, Chang Huang, [38] Zhuotao Tian, Hengshuang Zhao, Michelle Shu, Zhicheng Yunchao Wei, and Wenyu Liu. Ccnet: Criss-cross attention Yang, Ruiyu Li, and Jiaya Jia. Prior guided feature enrichment for semantic segmentation. In ICCV, 2019. 2 network for few-shot segmentation. TPAMI, 2020. 2, 6

[39] Oriol Vinyals, Charles Blundell, Tim Lillicrap, Koray Kavukcuoglu, and Daan Wierstra. Matching networks for one shot learning. In NeurIPS, 2016. 2, 4 [40] Haochen Wang, Xudong Zhang, Yutao Hu, Yandan Yang, Xianbin Cao, and Xiantong Zhen. Few-shot semantic seg- mentation with democratic attention networks. In ECCV, 2020. 2 [41] Kaixin Wang, JunHao Liew, Yingtian Zou, Daquan Zhou, and Jiashi Feng. Panet: Few-shot image semantic segmentation with prototype alignment. In ICCV, 2019. 1, 2, 3, 4, 6, 7 [42] Yu-Xiong Wang, Ross B. Girshick, Martial Hebert, and Bharath Hariharan. Low-shot learning from imaginary data. In CVPR, 2018. 2 [43] Boyu Yang, Chang Liu, Bohao Li, Jianbin Jiao, and Qixi- ang Ye. Prototype mixture models for few-shot semantic segmentation. In ECCV, 2020. 2 [44] Maoke Yang, Kun Yu, Chi Zhang, Zhiwei Li, and Kuiyuan Yang. Denseaspp for semantic segmentation in street scenes. In CVPR, 2018. 2 [45] Yuwei Yang, Fanman Meng, Hongliang Li, King N. Ngan, and Qingbo Wu. A new few-shot segmentation network based on class representation. In VCIP, 2019. 2 [46] Yuwei Yang, Fanman Meng, Hongliang Li, Qingbo Wu, Xiao- long Xu, and Shuai Chen. A new local transformation module for few-shot segmentation. In MMM, 2020. 2 [47] Fisher Yu and Vladlen Koltun. Multi-scale context aggrega- tion by dilated convolutions. In Yoshua Bengio and Yann LeCun, editors, ICLR, 2016. 2 [48] Chi Zhang, Guosheng Lin, Fayao Liu, Jiushuang Guo, Qingyao Wu, and Rui Yao. Pyramid graph networks with connection attentions for region-based one-shot semantic seg- mentation. In ICCV, 2019. 2 [49] Chi Zhang, Guosheng Lin, Fayao Liu, Rui Yao, and Chunhua Shen. Canet: Class-agnostic segmentation networks with iterative refinement and attentive few-shot learning. In CVPR, 2019. 2, 3, 6 [50] Hang Zhang, Kristin J. Dana, Jianping Shi, Zhongyue Zhang, Xiaogang Wang, Ambrish Tyagi, and Amit Agrawal. Context encoding for semantic segmentation. In CVPR, 2018. 2 [51] Hongguang Zhang, Jing Zhang, and Piotr Koniusz. Few-shot learning via saliency-guided hallucination of samples. In CVPR, 2019. 2 [52] Xiaolin Zhang, Yunchao Wei, Yi Yang, and Thomas Huang. Sg-one: Similarity guidance network for one-shot semantic segmentation. arXiv, 2018. 2 [53] Hengshuang Zhao, Xiaojuan Qi, Xiaoyong Shen, Jianping Shi, and Jiaya Jia. Icnet for real-time semantic segmentation on high-resolution images. In ECCV, 2018. 2 [54] Hengshuang Zhao, Jianping Shi, Xiaojuan Qi, Xiaogang Wang, and Jiaya Jia. Pyramid scene parsing network. In CVPR, 2017. 1, 2, 4, 5 [55] Hengshuang Zhao, Yi Zhang, Shu Liu, Jianping Shi, Chen Change Loy, Dahua Lin, and Jiaya Jia. Psanet: Point- wise spatial attention network for scene parsing. In ECCV, 2018. 2 [56] Kai Zhu, Wei Zhai, and Yang Cao. Self-supervised tuning for few-shot segmentation. In IJCAI, 2020. 2

You can also read