Correct Me If You Can: Learning from Error Corrections and Markings


Julia Kreutzer∗ and Nathaniel Berger∗ and Stefan Riezler†,∗

∗Computational Linguistics & †IWR

Heidelberg University, Germany

{kreutzer, berger, riezler}@cl.uni-heidelberg.de

arXiv:2004.11222v1 [cs.CL] 23 Apr 2020

Abstract

Sequence-to-sequence learning involves a trade-off between signal strength and annotation cost of training data. For example, machine translation data range from costly expert-generated translations that enable supervised learning, to weak quality-judgment feedback that facilitates reinforcement learning. We present the first user study on annotation cost and machine learnability for the less popular annotation mode of error markings. We show that error markings for translations of TED talks from English to German allow precise credit assignment while requiring significantly less human effort than correcting/post-editing, and that error-marked data can be used successfully to fine-tune neural machine translation models.

1 Introduction

Successful machine learning for structured output prediction requires the effort of annotating sufficient amounts of gold-standard outputs, a task that can be costly if structures are complex and expert knowledge is required, as for example in neural machine translation (NMT) (Bahdanau et al., 2015). Approaches that propose to train sequence-to-sequence prediction models by reinforcement learning from task-specific scores, for example BLEU in machine translation (MT), shift the problem by simulating such scores by evaluating machine translation output against expert-generated reference structures (Ranzato et al., 2016; Bahdanau et al., 2017; Kreutzer et al., 2017; Sokolov et al., 2017). An alternative approach that considerably reduces human annotation effort by allowing annotators to mark errors in machine outputs, for example erroneous words or phrases in a machine translation, has recently been proposed and investigated in simulation studies by Marie and Max (2015); Domingo et al. (2017); Petrushkov et al. (2018). This approach takes the middle ground between supervised learning from error corrections as in machine translation post-editing1 (or from translations created from scratch) and reinforcement learning from sequence-level bandit feedback (this includes self-supervised learning where all outputs are rewarded uniformly). Error markings are highly promising since they suggest an interaction mode with low annotation cost, yet they can enable precise token-level credit/blame assignment, and thus can lead to an effective fine-grained discriminative signal for machine learning and data filtering.

Our work is the first to investigate learning from error markings in a user study. Error corrections and error markings are collected from junior professional translators, analyzed, and used as training data for fine-tuning neural machine translation systems. The focus of our work is on the learnability from error corrections and error markings, and on the behavior of annotators as teachers to a machine translation system. We find that error markings require significantly less effort (in terms of key-stroke-mouse-ratio (KSMR) and time) and result in a lower correction rate (ratio of words marked as incorrect or corrected in a post-edit). Furthermore, they are less prone to over-editing than error corrections. Perhaps surprisingly, agreement between annotators on which words to mark or to correct was lower for markings than for post-edits. However, despite the low inter-annotator agreement, fine-tuning of neural machine translation could be conducted successfully from data annotated in either mode. Our data set of error corrections and markings is publicly available.2

1 In the following we will use the more general term error corrections and the MT-specific term post-edits interchangeably.

2 https://www.cl.uni-heidelberg.de/statnlpgroup/humanmt/

© 2020 The authors. This article is licensed under a Creative Commons 3.0 licence, no derivative works, attribution, CC-BY-ND.

2 Related Work

Prior work closest to ours is that of Marie and Max (2015); Domingo et al. (2017); Petrushkov et al. (2018); however, these works were conducted by simulating error markings by heuristic matching of machine translations against independently created human reference translations. Thus the question of the practical feasibility of machine learning from noisy human error markings is left open.

User studies on machine learnability from human post-edits, together with thorough performance analyses with mixed effects models, have been presented by Green et al. (2014); Bentivogli et al. (2016); Karimova et al. (2018). Albeit showcasing the potential of improving NMT through human corrections of machine-generated outputs, these works do not consider “weaker” annotation modes like error markings. User studies on the process and effort of machine translation post-editing are too numerous to list; a comprehensive overview is given in Koponen (2016). In contrast to works on interactive-predictive translation (Foster et al., 1997; Knowles and Koehn, 2016; Peris et al., 2017; Domingo et al., 2017; Lam et al., 2018), our approach does not require an online interaction with the human and allows us to investigate, filter, pre-process, or augment the human feedback signal before making a machine learning update.

Machine learning from human feedback beyond the scope of translations has considered learning from human pairwise preferences (Christiano et al., 2017), from human corrective feedback (Celemin et al., 2018), or from sentence-level reward signals on a Likert scale (Kreutzer et al., 2018). However, none of these studies has considered error markings on tokens of output sequences, despite their general applicability to a wide range of learning tasks.

3 User Study on Human Error Markings and Corrections

The goal of the annotation study is to compare the novel error marking mode to the widely adopted machine translation post-editing mode. We are interested in finding an interaction scenario that costs little time and effort, but still allows teaching the machine how to improve its translations. In this section we present the setup, measure and compare the observed amount of effort and time that went into these annotations, and discuss the reliability and adoption of the new marking mode. Machine learnability, i.e., training of an NMT system on human-annotated data, is discussed in Section 4.

3.1 Participants

We recruited 10 participants who described themselves as native German speakers and as having either a C1 or C2 level in English, as measured by the Common European Framework of Reference levels. 8 participants were students studying translation or interpretation and 2 participants were students studying computational linguistics. All participants were paid 100€ for their participation in the study, which was done online and limited to a maximum of 6 hours; it took them between 2 and 4.5 hours excluding breaks. They agreed to the usage of the recorded data for research purposes.

3.2 Interface

The annotation interface has three modes: (1) markings, (2) corrections, and (3) the user-choice mode, where annotators first choose between (1) and (2) before submitting their annotation. While the first two modes are used for collecting training data for the MT model, the third mode is used for evaluative purposes to investigate which mode is preferable when given the choice. In any case, annotators are presented the source sentence, the target sentence and an instruction to either mark or correct (aka post-edit) the translation or choose an editing mode. They also had the option to pause and resume the session. No document-level context was presented, i.e., translated sentences were judged in isolation, but in consecutive order like they appeared in the original documents to provide a reasonable amount of context. They received detailed instructions (see Appendix A) on how to proceed with the annotation. Each annotator worked on 300 sentences, 100 for each mode, and

statnlpgroup/humanmt/ an extra 15 sentences for intra-annotator agree-To investigate the sources of variance affecting

time and effort, we use Linear Mixed Effect Mod-

els (LMEM) (Barr et al., 2013) and build one with

KSMR as response variable, and another one for

the total edit duration (excluding breaks) as re-

sponse variable, and with the editing mode (cor-

recting vs. marking) as fixed effect. For both re-

sponse variables, we model users5 , talks and tar-

get lengths6 as random effects, e.g., the one for

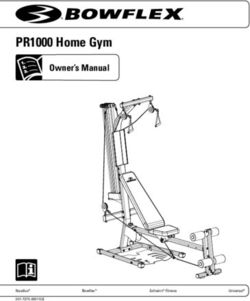

Figure 1: Interface for marking of translation outputs follow- KSMR:

ing user choice between markings and post-edits.

KSMR ∼ mode + (1 | user id) + (1 | talk id)

ment measures that were repeated after each mode. + (1 | trg length) (1)

After the completion of the annotation task they

answered a survey about the preferred mode, the We use the implementation in the R package

perceived editing/marking speed, user-choice poli- lmer4 (Bates et al., 2015) and fit the models

cies, and suggestions for improvement. A sreen- with restricted maximum likelihood. Inspecting

shot of the interface showing a marking operation the intercepts of the fitted models, we confirm that

is shown in Figure 1. The code for the interface is KSMR is significantly (p = 0.01) higher for post

publicly available3 . edits than for markings (+3.76 on average). The

variance due to the user (0.69) is larger than due to

3.3 Data the talk (0.54) and the length (0.05)7 . Longer sen-

We selected a subset of 30 TED talks to create the tences have a slightly higher KSMR than shorter

three data sets from the IWSLT17 machine trans- ones. When modeling the topics as random effects

lation corpus4 . The talks were filtered by the fol- (rather than the talks), the highest KSMR (judging

lowing criteria: single speakers, no music/singing, by individual intercepts) was obtained for physics

low intra-line final-sentence punctuation (indicat- and biodiversity and the lowest for language and

ing bad segmentation), length between 80 and 149 diseases. This might be explained by e.g. the MT

sentences. One additional short talk was selected training data or the raters expertise.

for testing the inter- and intra-annotator reliability. Analyzing the LMEM for editing duration, we

We filtered out those sentences where model hy- find that post-editing takes on average 42s longer

pothesis and references were equal, in order to save than marking, which is significant at p = 0.01.

annotation effort where it is clearly not needed, The variance due to the target length is the largest,

and also removed the last line from every talk (usu- followed by the one due to the talk and the one

ally “thank you”). For each talk, one topic of a set due to the user is smallest. Long sentences have

of keywords provided by TED was selected. See a six time higher editing duration on average than

Appendix B for a description of how data was split shorter ones. With respect to topics, the longest

across annotators. editing was done for topics like physics and evolu-

tion, shortest for diseases and health.

3.4 Effort and Time

3.4.1 Annotation Quality

Correcting one translated sentence took on aver-

age approximately 5 times longer than marking The corrections increased the quality, measured

errors, and required 42 more actions, i.e., clicks by comparison to reference translations, by 2.1

and keystrokes. That is 0.6 actions per character points in BLEU and decreased TER by 1 point.

for post-edits, while only 0.03 actions per charac- While this indicates a general improvement, it has

ter for markings. This measurement aligns with to be taken with a grain of salt, since the post-edits

the unanimous subjective impression of the partic- 5

Random effects are denoted, e.g., by (1|user id)).

6

ipants that they were faster in marking mode. Target lengths measured by number of characters were

binned into two groups at the limit of 176 characters.

3 7

https://github.com/StatNLP/ Note that KSMR is already normalized by reference length,

mt-correct-mark-interface hence the small effect of target length. In a LMER for the

4

https://sites.google.com/site/ raw action count (clicks+key strokes), this effect had a larger

are heavily biased by the structure, word choice etc. of the machine translation, which might not necessarily agree with the reference translations, while still being accurate.

src  I am a nomadic artist.
hyp  Ich bin ein nomadischer Künstler.
pe   Ich bin ein nomadischer Künstler.
ref  Ich wurde zu einer nomadischen Künstlerin.

src  I look at the chemistry of the ocean today.
hyp  Ich betrachte heute die Chemie des Ozeans.
pe   Ich erforsche täglich die Chemie der Meere.
ref  Ich untersuche die Chemie der Meere der Gegenwart.

src  There’s even a software called cadnano that allow . . .
hyp  Es gibt sogar eine Software namens Caboano, die . . .
pe   Es gibt sogar eine Software namens Caboano, die . . .
ref  Es gibt sogar eine Software namens ”cadnano”, . . .

src  It was a thick forest.
hyp  Es war ein dicker Wald.
pe   Es handelte sich um einen dichten Wald.
ref  Auf der Insel war dichter Wald.

Table 1: Examples of post-editing to illustrate differences between reference translations (ref) and post-edits (pe). Example 1: The gender in the German translation could not be inferred from the context, since speaker information is unavailable to the post-editor. Example 2: “today” is interpreted as adverb by the NMT, this interpretation is kept in the post-edit (“telephone game” effect). Example 3: Another case of the “telephone game” effect: the name of the software is changed by the NMT, and not corrected by post-editors. Example 4: Over-editing by post-editor, and more information in the reference translation than in the source.

How good are the corrections? We therefore manually inspect the post-edits to get insights into the differences between post-edits and references. Table 1 provides a set of examples8 with their analysis in the caption. Besides the effect of “literalness” (Koponen, 2016), we observe three major problems:

1. Over-editing: Editors edited translations even though they are adequate and fluent.

2. “Telephone game” effect: Semantic mistakes (that do not influence fluency) introduced by the MT system flow into the post-edit and remain uncorrected, when more obvious corrections are needed elsewhere in the sentence.

3. Missing information: Since editors only observe a portion of the complete context, i.e., they do not see the video recording of the speaker or the full transcript of the talk, they are not able to convey as much information as the reference translations.

8 Selected because of their differences to references.

How good are the markings? Markings, in contrast, are less prone to over-editing, since they have fewer degrees of freedom. They are equally exposed to problem (3) of missing context, and another limitation is added: Word omissions and word order problems cannot be annotated. Table 2 gives a set of examples that illustrate these problems. While annotators were most likely not aware of problems (1) and (2), they might have sensed that information was missing, as well as the additional limitations of markings. The simulation of markings from references as used in previous work (Petrushkov et al., 2018; Marie and Max, 2015) seems overly harsh for the generated target translations, e.g., marking “Hazara-Bevölkerung” as incorrect, even though it is a valid translation of “Hazara population”.

src  Each year, it sends up a new generation of shoots.
ann  Jedes Jahr sendet es eine neue Generation von Shoots.
sim  Jedes Jahr sendet es eine neue Generation von Shoots.
ref  Jedes Jahr wachsen neue Triebe.

src  He killed 63 percent of the Hazara population.
ann  Er starb 63 Prozent der Bevölkerung Hazara.
sim  Er starb 63 Prozent der Bevölkerung Hazara.
ref  Er tötete 63% der Hazara-Bevölkerung.

src  They would ordinarily support fish and other wildlife.
ann  Sie würden Fisch und andere wild lebende Tiere unterstützen.
sim  Sie würden Fisch und andere wild lebende Tiere unterstützen.
ref  Normalerweise würden sie Fisch und andere Wildtiere ernähren.

Table 2: Examples of markings to illustrate differences between human markings (ann) and simulated markings (sim). Marked parts are underlined. Example 1: “es” not clear from context, less literal reference translation. Example 2: Word omission (preposition after “Bevölkerung”) or incorrect word order is not possible to mark. Example 3: Word order differs between MT and references, word omission (“ordinarily”) not marked.

Mode          Intra-Rater α (Mean / Std.)   Inter-Rater α
Marking       0.522 / 0.284                 0.201
Correction    0.820 / 0.171                 0.542
User-Chosen   0.775 / 0.179                 0.473

Table 3: Intra- and Inter-rater agreement calculated by Krippendorff’s α.

How reliable are corrections and markings? In addition to the absolute quality of the annotations, we are interested in measuring their reliability: Do annotators agree on which parts of a translation to mark or edit? While there are many possible valid translations, and hence many ways to annotate one given translation, it has been shown that learnability profits from annotations with less conflicting information (Kreutzer et al., 2018). In order to quantify agreement for both

modes on the same scale, we reduce both annotations to sentence-level quality judgments, which for markings is the ratio of words that were marked as incorrect in a sentence, and for corrections the ratio of words that were actually edited. If the hypothesis was perfect, no markings or edits would be required, and if it was completely wrong, all of it had to be marked or edited. After this reduction, we measure agreement with Krippendorff’s α (Krippendorff, 2013), see Table 3.
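As a rough illustration of the reduction described above, the following Python sketch computes a sentence-level rate from token-level markings and from a post-edit, and then Krippendorff’s α with the interval metric over several raters. The function names, the use of `difflib` to decide which hypothesis tokens count as edited, whitespace tokenization, and the toy inputs are assumptions made for illustration; the text does not specify how these quantities were computed in the study.

```python
from difflib import SequenceMatcher
from itertools import permutations


def marking_rate(marks):
    """Ratio of hypothesis tokens marked as incorrect (marks: list of 0/1)."""
    return sum(marks) / len(marks) if marks else 0.0


def correction_rate(hyp_tokens, pe_tokens):
    """Ratio of hypothesis tokens that do not survive unchanged in the post-edit.

    Assumption: a token counts as edited if difflib does not align it to an
    identical token in the post-edit.
    """
    if not hyp_tokens:
        return 0.0
    matcher = SequenceMatcher(a=hyp_tokens, b=pe_tokens, autojunk=False)
    kept = sum(block.size for block in matcher.get_matching_blocks())
    return (len(hyp_tokens) - kept) / len(hyp_tokens)


def krippendorff_alpha_interval(units):
    """Krippendorff's alpha with the interval metric (squared difference).

    units: list of lists; each inner list holds the ratings that different
    raters gave to the same sentence. Units with fewer than two ratings
    are ignored.
    """
    units = [u for u in units if len(u) >= 2]
    values = [v for u in units for v in u]
    n = len(values)
    if n < 2:
        raise ValueError("need at least two pairable ratings")
    # Observed disagreement: ordered pairs within a unit, weighted by 1 / (m_u - 1).
    d_obs = sum(
        sum((a - b) ** 2 for a, b in permutations(u, 2)) / (len(u) - 1)
        for u in units
    ) / n
    # Expected disagreement: ordered pairs over all pairable ratings.
    d_exp = sum((a - b) ** 2 for a, b in permutations(values, 2)) / (n * (n - 1))
    if d_exp == 0:
        raise ValueError("alpha is undefined when all ratings are identical")
    return 1.0 - d_obs / d_exp


# Toy usage with the fourth example of Table 1 (whitespace tokenization).
hyp = "Es war ein dicker Wald.".split()
pe = "Es handelte sich um einen dichten Wald.".split()
rate_pe = correction_rate(hyp, pe)
rate_mark = marking_rate([0, 0, 0, 1, 0])
```

With sentence-level rates from several raters collected per sentence, `krippendorff_alpha_interval` can be called on the resulting list of lists. Treating the rates as interval data is itself a modeling choice; other distance metrics would give different α values.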

Which mode do annotators prefer? In the user-choice mode, where annotators can choose for each sentence whether they would like to mark or correct it, markings were chosen much more frequently than post-edits (61.9%). Annotators did not agree on the preferred choice of mode for the repeated sentences (α = −0.008), which indicates that there is no obvious policy when one of the modes would be advantageous over the other. In the post-annotation questionnaire, however, 60% of the participants said they generally preferred post-edits over markings, despite markings being faster, and hence resulting in a higher hourly pay.

To better understand the differences in modes, we asked them about their policies in the user-choice mode where for each sentence they would have to decide individually if they want to mark or post-edit it. The most commonly described policy is to decide based on error types and frequency: choose post-edits when insertions or re-ordering is needed, and markings preferably for translations with word errors (less effort than doing a lookup or replacement). One person preferred post-edits for short translations and markings for longer ones, another three generally preferred markings, and one person preferred post-edits. Annotators found the interface to need improvements in (1) the presentation of inter-sentential context, (2) the display of overall progress and (3) an option to edit previously edited sentences. For the marking mode they requested an option to mark missing parts or areas for re-ordering.

Do markings and corrections express the same translation quality judgment? We observe that annotators find more than twice as many token corrections in post-edit mode than in marking mode.9 This is partially caused by the reduced degrees of freedom in marking mode, but also underlines the general trend towards over-editing when in post-edit mode. If markings and post-edits were used to compute a quality metric based on the correction rate, translations are judged as much worse in post-editing mode than in marking mode (Figure 2). This also holds for whole sentences, where 273 (26.20%) were left un-edited in marking mode, and only 3 (0.29%) in post-editing mode.

9 The automatically assessed translation quality for the baseline model does not differ drastically between the portions selected per mode.

Figure 2: Correction rate by annotation mode. The correction rate describes the ratio of words in the translation that were marked as incorrect (in marking mode) or edited (in post-editing mode). Means are indicated with diamonds. (Axes: Editing Mode with values marking and post_edit; Correction Rate.)

4 Machine Learnability of NMT from Human Markings and Corrections

The hypotheses presented to the annotators were generated by an NMT model. The goal is to use the supervision signal provided by the human annotation to improve the underlying model by machine learning. Learnability is concerned with the question of how strong a signal is necessary in order to see improvements in NMT fine-tuning on the respective data.

Definition. Let $x = x_1 \dots x_S$ be a sequence of indices over a source vocabulary $V_{SRC}$, and $y = y_1 \dots y_T$ a sequence of indices over a target vocabulary $V_{TRG}$. The goal of sequence-to-sequence learning is to learn a function for mapping an input sequence x into an output sequence y. For the example of machine translation, y is a translation of x, and a model parameterized by a set of weights θ is optimized to maximize $p_\theta(y \mid x)$. This quantity is further factorized into conditional probabilities over single tokens:

$$p_\theta(y \mid x) = \prod_{t=1}^{T} p_\theta(y_t \mid x; y_{<t})$$

[...]

Domain train dev test
WMT17 5,919,142 2,169 3,004
IWSLT17 206,112 2,385 1,138
Selection 1035 corr / 1042 mark 1,043
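The token-level factorization in the Definition above can be made concrete with a few lines of Python. This is only a sketch: the per-token probabilities are made-up numbers standing in for the outputs of an NMT model, and the function name is hypothetical.

```python
import math


def sequence_log_prob(token_probs):
    """Sum of token-level log-probabilities, i.e. log p(y | x).

    token_probs[t] stands for p(y_t | x; y_<t), the probability the model
    assigns to the t-th target token given the source and the target prefix.
    """
    if any(p <= 0.0 or p > 1.0 for p in token_probs):
        raise ValueError("token probabilities must lie in (0, 1]")
    return sum(math.log(p) for p in token_probs)


# Hypothetical per-token probabilities for a three-token translation.
probs = [0.5, 0.8, 0.25]
log_p = sequence_log_prob(probs)
sequence_prob = math.exp(log_p)  # equals the product 0.5 * 0.8 * 0.25
```

Working in log space turns the product over tokens into a sum, which is why token-level feedback such as error markings can be attached to individual terms of the sequence score.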

   System             TER ↓   BLEU ↑   METEOR ↑
1  WMT baseline       58.6    23.9     42.7
   Error Corrections
2  Full               57.4*   24.6*    44.7*
3  Small              57.9*   24.1     44.2*
   Error Markings
4  0/1                57.5*   24.4*    44.0*
5  -0.5/0.5           57.4*   24.6*    44.2*
6  random             58.1*   24.1     43.5*
   Quality Judgments
7  from corrections   57.4*   24.6*    44.7*
8  from markings      57.6*   24.5*    43.8*

Table 5: Results on the test set with feedback collected from humans. Decoding with beam search of width 5 and length penalty of 1. Significant (p [...] out-of-domain model.

The “small” model trained with error corrections is trained on one fifth of the data, which is comparable to the effort it takes to collect the error markings. Both error corrections and markings can be reduced to sentence-level quality judgments, where all tokens receive the same weight in Eq. [...]: $\delta = \frac{\#\text{marked}}{\text{hyp tokens}}$ or $\delta = \frac{\#\text{corrected}}{\text{hyp tokens}}$. In addition, we compare the markings against a random choice of marked tokens per sentence.12 We see that both models trained on corrections and markings improve significantly over the baseline (rows 2 and 3). Tuning the weights for (in)correct tokens makes a small but significant difference for learning from markings (rows 4 and 5). These human markings lead to significantly better models than random markings (row 6). When reducing both types of human feedback to sentence-level quality judgments, no loss in comparison to error corrections and a small loss for markings (rows 7 and 8) is observed. We suspect that the small margin between results for learning from corrections and markings is due to evaluating against references. Effects like over-editing (see Section 3.4.1) produce training data that lead the model to generate outputs that diverge more from independent references and therefore score lower than deserved under all metrics except for METEOR.

12 Each token is marked with probability $p_{mark} = 0.5$.

Human Evaluation. It is infeasible to collect markings or corrections for all our systems for a more appropriate comparison than to references, but for that purpose we conduct a small human evaluation study. Three bilingual raters receive 120 translations of the test set (∼10%) and the corresponding source sentences for each mode and judge whether the translation is better, as good as, or worse than the baseline: 64% of the translations obtained from learning from error markings are judged at least as good as the baseline, compared to 65.2% for the translations obtained from learning from error corrections. Table 6 shows the detailed proportions excluding identical translations.

System   > BL   = BL   < BL

Table 6: [...] >: better than the baseline, <: worse than the baseline.

Effort vs. Translation Quality. Figure 3 illustrates the relation between the total time spent on annotations and the resulting translation quality for corrections and markings trained on a selection of subsets of the full annotated data: The overall trend shows that both modes benefit from more training data, with more variance for the marking mode, but also a steeper descent. From a total annotation amount of approximately 20,000s (≈ 5.5h) on, markings are the more efficient choice.

4.2.1 LMEM Analysis

We fit a LMEM for sentence-level quality scores of the baseline, and three runs each for the NMT systems fine-tuned on markings and post-edits respectively, and inspect the influence of the system as a fixed effect, and sentence id, topic and source length as random effects.

TER ∼ system + (1 | talk_id/sent_id) + (1 | topic) + (1 | src_length)

The fixed effect is significant at p = 0.05, i.e., the quality scores of the three systems differ significantly under this model. The global intercept lies at 64.73, the one for marking 1.23 below, and the one for post-editing 0.96 below. The variance in TER is for the largest part explained by the sentence, then the talk, the source length, and the least by the topic.
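The weighting schemes compared in Table 5 can be sketched in Python as follows. Several points are assumptions rather than statements from the text: that “0/1” and “-0.5/0.5” denote the weights of (incorrect, correct) tokens, that the training objective is a weighted sum of token log-probabilities (the objective itself is not shown in this section), and all function names. The sentence-level variant follows the ratio δ = #marked / hyp tokens given above, and the random baseline marks each token with probability 0.5 as in footnote 12.

```python
import math
import random


def token_weights(marks, w_incorrect=0.0, w_correct=1.0):
    """Map 0/1 error markings (1 = marked as incorrect) to per-token weights."""
    return [w_incorrect if m else w_correct for m in marks]


def sentence_level_weights(marks):
    """Give every token the same weight: the ratio of marked tokens."""
    delta = sum(marks) / len(marks)
    return [delta] * len(marks)


def random_marks(n_tokens, p_mark=0.5, seed=0):
    """Random baseline: mark each token independently with probability p_mark."""
    rng = random.Random(seed)
    return [1 if rng.random() < p_mark else 0 for _ in range(n_tokens)]


def weighted_log_likelihood(token_probs, weights):
    """Assumed objective: sum_t weight_t * log p(y_t | x; y_<t)."""
    return sum(w * math.log(p) for w, p in zip(weights, token_probs))


# Toy usage: one hypothesis of four tokens, the second one marked as incorrect.
marks = [0, 1, 0, 0]
probs = [0.9, 0.2, 0.8, 0.7]  # hypothetical model probabilities
obj_01 = weighted_log_likelihood(probs, token_weights(marks))
obj_pm = weighted_log_likelihood(probs, token_weights(marks, -0.5, 0.5))
obj_sent = weighted_log_likelihood(probs, sentence_level_weights(marks))
```

Under the 0/1 scheme a marked token simply drops out of the objective, while a negative weight actively pushes probability mass away from it; this is one possible reading of why tuning the weights makes a difference for learning from markings.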

● Bahdanau, D., Brakel, P., Xu, K., Goyal, A.,

●

● Lowe, R., Pineau, J., Courville, A., and Ben-

● ●

59.0

● mode gio, Y. (2017). An actor-critic algorithm for

● ● ●

mark sequence prediction. In Proceedings of the In-

pe

● ternational Conference on Learning Represen-

tations (ICLR), Toulon, France.

TER

● size

●

58.5 ●

●

●

0 Bahdanau, D., Cho, K., and Bengio, Y. (2015).

250

● ● ● 500 Neural machine translation by jointly learning

● 750

● 1000 to align and translate. In Proceedings of the In-

ternational Conference on Learning Represen-

● tations (ICLR), San Diego, CA.

58.0

● Barr, D. J., Roger, L., Scheepers, C., and Tily, H. J.

0 20000 40000 60000

(2013). Random effects structure for confirma-

Annotation Duration [s] tory hypothesis testing: Keep it maximal. J.

Mem. Lang, 68(3):255–278.

Figure 3: Improvement in TER for training data of

varying size: lower is better. Scores are collected Bates, D., Mächler, M., Bolker, B., and Walker,

across two runs with a random selection of k ∈ S. (2015). Fitting linear mixed-effects mod-

[125, 250, 375, 500, 625, 750, 875] training data points.

els using lme4. Journal of Statistical Software,

67(1):1–48.

Bentivogli, L., Bisazza, A., Cettolo, M., and Fed-

5 Conclusion

erico, M. (2016). Neural versus phrase-based

machine translation quality: a case study. In

We presented the first user study on the annotation Proceedings of the 2016 Conference on Empir-

process and the machine learnability of human er- ical Methods in Natural Language Processing

ror markings of translation outputs. This annota- (EMNLP), Austin, TX.

tion mode has so far been given less attention than Bottou, L., Curtis, F. E., and Nocedal, J. (2018).

error corrections or quality judgments, and has un- Optimization methods for large-scale machine

til now only been investigated in simulation stud- learning. SIAM Review, 60(2):223–311.

ies. We found that both according to automatic

evaluation metrics and by human evaluation, fine- Celemin, C., del Solar, J. R., and Kober, J. (2018).

tuning of NMT models achieved comparable gains A fast hybrid reinforcement learning framework

by learning from error corrections and markings. with human corrective feedback. Autonomous

However, error markings required several orders of Robots.

magnitude less human annotation effort. Chen, M. X., Firat, O., Bapna, A., Johnson, M.,

In future work we will investigate the integration Macherey, W., Foster, G., Jones, L., Schus-

of automatic markings into the learning process, ter, M., Shazeer, N., Parmar, N., Vaswani, A.,

and we will explore online adaptation possibilities. Uszkoreit, J., Kaiser, L., Chen, Z., Wu, Y., and

Hughes, M. (2018). The best of both worlds:

Combining recent advances in neural machine

Acknowledgments translation. In Proceedings of the 56th Annual

Meeting of the Association for Computational

Linguistics (ACL), Melbourne, Australia.

We would like to thank the anonymous reviewers

for their feedback, Michael Staniek and Michael Christiano, P. F., Leike, J., Brown, T., Martic, M.,

Hagmann for the help with data processing and Legg, S., and Amodei, D. (2017). Deep re-

analysis, and Sariya Karimova and Tsz Kin Lam inforcement learning from human preferences.

for their contribution to a preliminary study. The In Advances in Neural Information Processing

research reported in this paper was supported in Systems (NIPS), Long Beach, CA.

part by the German research foundation (DFG) un- Clark, J. H., Dyer, C., Lavie, A., and Smith, N. A.

der grant RI-2221/4-1.

(2011). Better hypothesis testing for statistical machine translation: Controlling for optimizer instability. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies (ACL-HLT), Portland, OR.

Domingo, M., Peris, Á., and Casacuberta, F. (2017). Segment-based interactive-predictive machine translation. Machine Translation, 31(4):163–185.

Foster, G., Isabelle, P., and Plamondon, P. (1997). Target-text mediated interactive machine translation. Machine Translation, 12(1-2):175–194.

Gehring, J., Auli, M., Grangier, D., Yarats, D., and Dauphin, Y. (2017). Convolutional sequence to sequence learning. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (ACL), Vancouver, Canada.

Green, S., Wang, S. I., Chuang, J., Heer, J., Schuster, S., and Manning, C. D. (2014). Human effort and machine learnability in computer aided translation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar.

Karimova, S., Simianer, P., and Riezler, S. (2018). A user-study on online adaptation of neural machine translation to human post-edits. Machine Translation, 32(4):309–324.

Knowles, R. and Koehn, P. (2016). Neural interactive translation prediction. In Proceedings of the Conference of the Association for Machine Translation in the Americas (AMTA), Austin, TX.

Koponen, M. (2016). Machine Translation Post-Editing and Effort. Empirical Studies on the Post-Editing Process. PhD thesis, University of Helsinki.

Kreutzer, J., Bastings, J., and Riezler, S. (2019). Joey NMT: A minimalist NMT toolkit for novices. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China.

Kreutzer, J., Sokolov, A., and Riezler, S. (2017). Bandit structured prediction for neural sequence-to-sequence learning. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (ACL), Vancouver, Canada.

Kreutzer, J., Uyheng, J., and Riezler, S. (2018). Reliability and learnability of human bandit feedback for sequence-to-sequence reinforcement learning. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL), Melbourne, Australia.

Krippendorff, K. (2013). Content Analysis. An Introduction to Its Methodology. Sage, third edition.

Lam, T. K., Kreutzer, J., and Riezler, S. (2018). A reinforcement learning approach to interactive-predictive neural machine translation. In Proceedings of the 21st Annual Conference of the European Association for Machine Translation (EAMT), Alicante, Spain.

Lam, T. K., Schamoni, S., and Riezler, S. (2019). Interactive-predictive neural machine translation through reinforcement and imitation. In Proceedings of Machine Translation Summit XVII (MTSUMMIT), Dublin, Ireland.

Lavie, A. and Denkowski, M. J. (2009). The meteor metric for automatic evaluation of machine translation. Machine Translation, 23(2-3):105–115.

Marie, B. and Max, A. (2015). Touch-based pre-post-editing of machine translation output. In Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP), Lisbon, Portugal.

Papineni, K., Roukos, S., Ward, T., and Zhu, W.-J. (2002). Bleu: a method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics (ACL), Philadelphia, PA.

Peris, Á., Domingo, M., and Casacuberta, F. (2017). Interactive neural machine translation. Computer Speech & Language, 45:201–220.

Petrushkov, P., Khadivi, S., and Matusov, E. (2018). Learning from chunk-based feedback in neural machine translation. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (ACL), Melbourne, Australia.

Ranzato, M., Chopra, S., Auli, M., and Zaremba, W. (2016). Sequence level training with recurrent neural networks. In Proceedings of the International Conference on Learning Representation (ICLR), San Juan, Puerto Rico.

Sennrich, R., Haddow, B., and Birch, A. (2016). Neural machine translation of rare words with subword units. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (ACL), Berlin, Germany.

Snover, M., Dorr, B., Schwartz, R., Micciulla, L., and Makhoul, J. (2006). A study of translation edit rate with targeted human annotation. In Proceedings of the Conference of the Association for Machine Translation in the Americas (AMTA), Cambridge, MA.

Sokolov, A., Kreutzer, J., Sunderland, K., Danchenko, P., Szymaniak, W., Fürstenau, H., and Riezler, S. (2017). A shared task on bandit learning for machine translation. In Proceedings of the Second Conference on Machine Translation, Copenhagen, Denmark.

Turchi, M., Negri, M., Farajian, M. A., and Federico, M. (2017). Continuous learning from human post-edits for neural machine translation. The Prague Bulletin of Mathematical Linguistics (PBML), 1(108):233–244.

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, L., and Polosukhin, I. (2017). Attention is all you need. In Advances in Neural Information Processing Systems (NIPS), Long Beach, CA.

Appendix

A Annotator Instructions

The annotators received the following instructions:

• You will be shown a source sentence, its translation and an instruction.

• Read the source sentence and the translation.

• Follow the instruction by either marking the incorrect words of the translation by clicking on them or highlighting them, correcting the translation by deleting, inserting and replacing words or parts of words, or choosing between modes (i) and (ii), and then click “submit”.

  – In (ii), if you make a mistake and want to start over, you can click on the button “reset”.

  – In (i), to highlight, click on the word you would like to start highlighting from, keep the mouse button pushed down, drag the pointer to the word you would like to stop highlighting on, and release the mouse button while over that word.

• If you want to take a short break (get a coffee, etc.), click on “pause” to pause the session. We’re measuring time it takes to work on each sentence, so please do not overuse this button (e.g. do not press pause while you’re making your decisions), but also do not feel rushed if you feel uncertain about a sentence.

• Instead, if you want to take a longer break, just log out. The website will return you to the latest unannotated sentence when you log back in. If you log out in the middle of an annotation, your markings or post-edits will not be saved.

• After completing all sentences (ca. 300), you’ll be asked to fill a survey about your experience.

• Important:

  – Please do not use any external dictionaries or translation tools.

  – You might notice that some sentences reappear, which is desired. Please try to be consistent with repeated sentences.

  – There is no way to return and re-edit previous sentences, so please make sure you’re confident with the edits/markings you provided before you click “submit”.

B Creating Data Splits

In order to have users see a wider range of talks, each talk was split into three parts (beginning, middle, and end). Each talk part was assigned an annotation mode. Parts were then assigned to users using the following constraints:

• Each user should see nine document parts.

• No user should see the same document twice.

• Each user should see three sections in post-editing, marking, and user-choice mode.

• Each user should see three beginning, three middle, and three ending sections.

• Each document should be assigned each of the three annotation modes.

To avoid assigning post-editing to every beginning section, marking to every middle section, and user-choice to every ending section, assignment was done with an integer linear program with the above constraints. Data was presented to users in the order [Post-edit, Marking, User Chosen, Agreement].
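The data-split constraints of Appendix B can be illustrated with a small sketch. This is not the integer linear program used in the study: for a toy setting it swaps in a hand-built rotation (a Latin-square style construction) and pairs it with a checker that encodes the five listed constraints. The numbers of users and talks (`NUM_USERS`, `NUM_DOCS`) and the helper names `mode_of`, `assign`, and `violations` are assumptions made for illustration only.

```python
from collections import Counter

MODES = ("post-edit", "marking", "user-choice")
POSITIONS = ("beginning", "middle", "end")
NUM_USERS = 3  # assumed toy value, not taken from the study
NUM_DOCS = 9   # assumed toy value: one part of each talk per user


def mode_of(doc, pos):
    """Annotation mode of a talk part (hypothetical rotation scheme).

    Rotating by doc // 3 gives every talk all three modes and keeps
    a mode from always landing on the same position.
    """
    return MODES[(pos + doc // 3) % 3]


def assign():
    """Map each user to nine (doc, position) pairs, one part per talk."""
    return {
        user: [(doc, (doc + user) % 3) for doc in range(NUM_DOCS)]
        for user in range(NUM_USERS)
    }


def violations(assignment):
    """List every violated constraint; an empty list means the split is valid."""
    problems = []
    for user, parts in assignment.items():
        docs = [doc for doc, _ in parts]
        if len(parts) != 9:
            problems.append(f"user {user}: {len(parts)} parts instead of 9")
        if len(set(docs)) != len(docs):
            problems.append(f"user {user}: sees a document twice")
        mode_counts = Counter(mode_of(doc, pos) for doc, pos in parts)
        if any(mode_counts[mode] != 3 for mode in MODES):
            problems.append(f"user {user}: modes are not 3/3/3")
        pos_counts = Counter(pos for _, pos in parts)
        if any(pos_counts[pos] != 3 for pos in range(len(POSITIONS))):
            problems.append(f"user {user}: positions are not 3/3/3")
    for doc in range(NUM_DOCS):
        if {mode_of(doc, pos) for pos in range(3)} != set(MODES):
            problems.append(f"doc {doc}: not every mode is used")
    return problems


splits = assign()
print(violations(splits))
for doc, pos in splits[0][:3]:
    print(doc, POSITIONS[pos], mode_of(doc, pos))
```

The checker is independent of how the split was produced, so it could equally be run on the output of an ILP solver. A solver becomes the more natural choice once the numbers of users and talks no longer divide as evenly as in this toy setting.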