Multi-Robot Motion Planning with Dynamics Guided by Multi-Agent Search - IJCAI

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18)

Multi-Robot Motion Planning with Dynamics Guided by Multi-Agent Search∗

Duong Le and Erion Plaku

Department of Electrical Engineering and Computer Science, Catholic University of America

Washington DC, USA

{05le, plaku}@cua.edu

Abstract conflicts with the plans of agents 1, . . . , i − 1 [Silver, 2005;

Sturtevant and Buro, 2006; Barer et al., 2014]. Decoupled ap-

This paper presents an effective multi-robot motion proaches are fast but suboptimal. To provide optimality, cen-

planner that enables each robot to reach its desired tralized approaches based on A* [Standley and Korf, 2011;

location while avoiding collisions with the other Goldenberg et al., 2014; Svancara and Surynek, 2017],

robots and the obstacles. The approach takes into M* [Wagner and Choset, 2015], increasing-cost tree search

account the differential constraints imposed by the [Sharon et al., 2013], and conflict-based search [Sharon et

underlying dynamics of each robot and generates al., 2015] operate over the composite space of all the agents

dynamically-feasible motions that can be executed [Felner et al., 2017]. Solvers have also used auctions [Amir

in the physical world. The crux of the approach is et al., 2015], answer-set programming [Erdem et al., 2013],

the sampling-based expansion of a motion tree in or rules [Khorshid et al., 2011; Botea and Surynek, 2015].

the continuous state space of all the robots guided

In a continuous setting, sampling-based motion planning

by multi-agent search over a discrete abstraction.

has often been used. To account for dynamics, RRT [LaValle

Experiments using vehicle models with nonlinear

and Kuffner, 2001], KPIECE [Şucan and Kavraki, 2012],

dynamics operating in complex environments show

GUST [Plaku, 2015], and others expand a motion tree by

significant speedups over related work.

adding collision-free and dynamically-feasible trajectories as

branches. For multi-robot problems, sampling-based motion

1 Introduction planners can be used in a decoupled or centralized frame-

work. Decoupled approaches plan the motions one robot

Robotic teams provide a viable venue to enhance automation, at a time, treating robots 1, . . . , i − 1 as moving obstacles

increase productivity, and reduce operational costs in trans- when planning the motions of robot i. Decoupled approaches

portation, exploration, inspection, surveillance, search-and- work well in open environments but have difficulty find-

rescue, and many other applications. In these settings, robots ing solutions in cluttered environments where coordination

are often required to move to different locations while avoid- among robots becomes necessary since the motions planned

ing collisions with obstacles and other robots. for robots 1, . . . , i − 1 could make it impossible for robot i to

Multi-robot motion planning is challenging since it is no reach its goal. Centralized approaches treat the robots as one

longer sufficient to plan the motions of the robots indepen- system and operate over the resulting composite state space.

dently as the plans of one robot can interfere with the plans of They are probabilistically complete, but do not scale well due

another robot or even prevent it from reaching the goal. The to the high dimensionality of the composite state space.

planned motions should also obey the underlying dynamics

To develop an effective multi-robot motion planner, we

of each robot, which impose differential constraints on the

present a framework that couples the ability of sampling-

velocity, acceleration, direction, and turning radius. This is

based motion planning to handle the complexity arising from

essential to ensure that the planned motions can be executed

geometric and differential constraints imposed by the obsta-

by the robots in the physical world.

cles and underlying robot dynamics with the ability of multi-

Related work has considered various settings for the multi-

agent pathfinding to find non-conflicting routes over discrete

robot motion-planning problem. In a discrete setting, i.e.,

abstractions. The underlying idea is to use multi-agent search

multi-agent pathfinding, each robot is a point with no dynam-

as a heuristic to guide sampling-based motion planning as it

ics that in one step can move to an adjacent vertex in a graph

expands a motion tree in the composite state space of all the

abstraction of the world. To solve this NP-hard problem

[Yu and LaValle, 2013], decoupled approaches often plan the robots. The expansion in the composite state space allows the

approach to obtain probabilistic completeness, which ensures

paths for each agent one at a time, ensuring that agent i avoids

that a solution will be found, when it exists, with probability

∗

This paper is an abridged version of the paper “Cooperative approaching one. Scalability and efficiency are obtained by

Multi-Robot Sampling-Based Motion Planning with Dynamics” that using multi-agent search as a heuristic to guide the motion-

won the Best Robotics Paper award at ICAPS, 2017. tree expansion. As with any heuristic, a critical aspect is the

5314Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18)

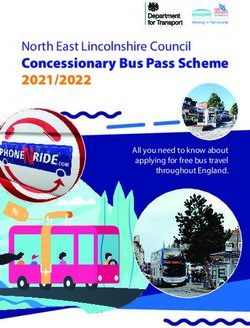

design of an appropriate relaxed problem setting. We obtain Environment Robot Models

bounding box: W M = {M1 , . . . , Mn }

the discrete abstraction by ignoring the vehicle dynamics and obstacles: O = {O1 , . . . , Om } Mi = hPi , Si , Ai , fi , sinit

i , Gi i

using probabilistic roadmaps to create a network of roads that

captures the connectivity of the environment, making it easy Discrete Abstraction Multi-Agent

roadmap

to reach each goal from any location. R1 × . . . × Rn , Ri = (VRi , ERi , costRi ) Pathfinding

routes

hc1 , . . . , cn i

Multi-agent search and sampling-based motion planning hσ1 , . . . , σn i

Equivalence Classes

work in tandem. At each iteration of a core loop, multi- Motion Tree

Γ = {Γkey1 , . . . , Γkeyk }

agent search is first invoked to find non-conflicting routes T = (VT , ET )

key = hc1 , . . . , cn i ∈ VR1 × . . . × VRn

over S1 × . . . × Sn

over the discrete abstraction. At a second step, sampling- routes(Γkey ) = hσ1 , . . . , σn i

based motion planning seeks to expand the motion tree along Expand Equivalence Class Select Equivalence Class

the routes. When little or no progress is made, the routes follow routes σ1 , . . . , σn argmaxΓhc ,...,cn i ∈Γ w(Γhc1 ,...,cn i )

1

are penalized and the multi-agent search is invoked again to

find alternative routes. This synergistic combination makes

Figure 1: Schematic illustration of the approach.

it possible to effectively plan collision-free and dynamically-

feasible motions that enable each robot to reach its goal. Ex-

periments using vehicle models with nonlinear dynamics op-

erating in unstructured, obstacle-rich, environments demon-

strate the scalability and efficiency of the approach, yielding

significant speedups over related work.

2 Problem Formulation

Each robot model Mi = hPi , Si , Ai , fi i is defined by its ge-

ometric shape Pi , state space Si , action space Ai , and under-

lying dynamics fi expressed as a set of differential equations

ṡ = fi (s, a), s ∈ Si , a ∈ Ai . (1)

As an example, a vehicle model defines the state in terms of

the position (x, y), orientation θ, steering angle ψ, and veloc- Figure 2: An example of a roadmap.

ity v. The vehicle is controlled by setting the acceleration acc

and steering rate ω. The motion equations are defined as

ẋ = v cos(θ) cos(ψ), ẏ = v sin(θ) cos(ψ), (2) motion planning; (iii) expansion of a motion tree in the com-

posite state space of all the robots; (iv) using the discrete ab-

θ̇ = v sin(ψ)/L, v̇ = acc, ψ̇ = ω, (3) straction to partition the motion tree into equivalence classes;

where L is the distance from the back to the front wheels. and (v) interplay of sampling-based motion planning and

The new state snew ∈ S obtained by applying a control multi-agent search to effectively expand the motion tree along

action a ∈ Ai to a state s ∈ Si is computed by a function routes obtained by the multi-agent search. A schematic rep-

resentation is shown in Fig. 1.

snew ← SIMULATE (s, a, fi , dt), (4)

which numerically integrates fi for one time step dt. Start-

ing from s ∈ S and applying actions ha1 , . . . , a`−1 i in suc- 3.1 Discrete Abstraction via Roadmaps

cession gives rise to a dynamically-feasible trajectory ζ :

{1, . . . , `} → Si , where ζ(1) ← s and ∀j ∈ {2, . . . , `}: The discrete abstraction provides a relaxed problem repre-

sentation, obtained by ignoring the robot dynamics. Specif-

ζ(j) ← SIMULATE (ζ(j − 1), aj−1 , fi , dt). (5) ically, the robot state is reduced to just the position and ori-

The multi-robot motion-planning problem is defined by entation (referred to as configuration) and each robot is al-

lowed to translate and rotate in any direction. To solve the

hW, O, G1 , . . . , Gn , M1 , . . . , Mn , sinit init

1 , . . . , sn i. (6) multi-robot motion-planning problem in this relaxed setting,

a roadmap Ri = (VRi , ERi ) is constructed for each robot

The world W contains obstacles O = {O1 , . . . , Om } and

Mi , seeking to make it easy to reach each goal from any

goals G = {G1 , . . . , Gn }. The objective is to compute trajec-

location in the environment. The roadmap is initially popu-

tories ζ1 , . . . , ζn , one for each robot, so that ζi : {1, . . . , `} →

lated by adding collision-free configurations from each goal

Si starts at the initial state siniti ∈ Si , reaches the goal Gi , and G1 , . . . , Gn . Leveraging PRM [Kavraki et al., 1996], the

avoids collisions with the obstacles and the other robots.

roadmap is further populated by sampling collision-free con-

figurations and connecting neighboring configurations with

3 Method collision-free paths whenever possible, as shown in Fig. 2.

The approach has several components: (i) a discrete ab- Dense roadmaps are preferred in order to provide alternative

straction based on a relaxed problem setting; (ii) multi-agent routes along which the robots can move to reach their goals.

search over the discrete abstraction to guide sampling-based Robots that have the same shape can use the same roadmap.

5315Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18)

3.2 Multi-Agent Search over the Discrete a robot state si ∈ Si is mapped to the nearest configu-

Abstraction ration in the roadmap Ri . In this way, a composite state

M ULTI AGENT S EARCH(R1 , . . . , Rn , c1 , . . . , cn , G1 , . . . , Gn ) hs1 , . . . , sn i ∈ S1 × . . . × Sn is mapped component-wise

searches over the roadmaps R1 , . . . , Rn to compute non- to a composite configuration hc1 , . . . , cn i, i.e.,

conflicting routes σ1 , . . . , σn for each robot such that σi is MAP (hs1 , . . . , sn i) = hc1 , . . . , cn i, (7)

over Ri , starts at ci , and ends at a roadmap vertex associated

with the goal Gi . Since in multi-agent search, each robot where ci = NEAREST(Ri , si ).

moves from one vertex to the next in one step, each roadmap The approach leverages this mapping to partition the mo-

edge is divided into several parts to ensure that the distance tion tree T into equivalence classes. The premise is that

from one vertex to the next is no more than a user-specified vertices in T that provide the same discrete information

step size. The edge division is done only once after each should belong to the same equivalence class. In other words,

roadmap is constructed. Note that the roadmap construction vertices v, v 0 ∈ T belong to the same equivalence class

guarantees that robots will not collide with the obstacles as Γhc1 ,...,cn i if and only if MAP(STATE(v)) = MAP(STATE(v 0 )) =

they move from one roadmap vertex to the next. Thus, the hc1 , . . . , cn i. So, an equivalence class Γhc1 ,...,cn i contains all

multi-agent search has to avoid only robot-robot conflicts. the vertices in T that map to hc1 , . . . , cn i.

The overall approach is agnostic to the inner workings of the The significance of an equivalence class Γhc1 ,...,cn i is that

multi-agent search, so it can be used in conjunction with any it makes it possible to leverage multi-agent search to compute

available method that operates over graphs. non-conflicting routes σ1 , . . . , σn , where each σi starts at ci

and reaches the goal Gi . These routes then serve to guide the

3.3 Motion Tree in the Composite State Space motion planner as it seeks to expand the motion tree from ver-

Note that solutions over the relaxed problem setting do not tices associated with the equivalance class Γhc1 ,...,cn i along

necessarily correspond to dynamically-feasible trajectories σ1 , . . . , σ n .

since the discrete abstraction ignores the robot dynamics. As

a result, geometric and differential constraints imposed by the 3.5 Putting it all Together: Using Multi-Agent

obstacles and the underlying dynamics may make it difficult Search to Guide the Motion-Tree Expansion

or impossible for a robot to follow its route σi . The overall approach starts by creating the discrete abstrac-

To account for the dynamics, a motion tree T = (VT , ET ) tion, rooting the motion tree T at the initial state, and creat-

is rooted at the initial state hsinit init

1 , . . . , sn i and is incremen- ing the first equivalence class ΓSTATE(vinit ) , which contains the

tally expanded in the composite state space S1 × . . . × Sn . root vertex vinit . Afterwards, the approach enters a core loop,

Thus, each vertex v ∈ VT corresponds to a composite where each iteration consists of the following:

state, denoted by STATE(v), where STATEi (v) represents the • select an equivalence class Γhc1 ,...,cn i from Γ;

state associated with the i-th robot. By construction, v is

added to VT only if STATE(v) is collision-free, i.e., when the • use multi-agent search over the discrete abstraction to

robots are placed according to STATE(v), there is no robot- obtain non-conflicting routes σ1 , . . . , σn that reach the

robot or robot-obstacle collision. To generate a successor goals starting from c1 , . . . , cn ; and

v 0 from v, some control actions ha1 , . . . , an i are applied • expand the motion tree T from vertices in Γhc1 ,...,cn i

component-wise to STATE(v) and the motion equations of along the routes σ1 , . . . , σn .

each robot are integrated for one time step dt, i.e., ∀i ∈ M ULTI AGENT S EARCH(R1 , . . . , Rn , c1 , . . . , cn , G1 , . . . , Gn )

{1, . . . , n} : STATEi (v 0 ) ← SIMULATE(STATEi (v), ai , fi , dt). searches the discrete abstraction to obtain non-conflicting

When STATE(v 0 ) is not in collision, v 0 and the edge (v, v 0 ) are routes hσ1 , . . . , σn i, where σi starts at ci and reaches the

added to the motion tree T . goal Gi . To save computation time, the routes are stored

The motion tree T is expanded until a vertex vnew is added with Γhc1 ,...,cn i and retrieved when the multi-agent search

where each robot has reached its goal, i.e., ∀i ∈ {1, . . . , n} : is invoked again for Γhc1 ,...,cn i . During the selection of an

STATE i (vnew ) ∈ Gi . The path hv1 , . . . , v` i, with v1 = vinit

equivalence class, priority is given to equivalence classes

and v` = vnew , from the root of T to vnew is retrieved and associated with short routes to promote rapid expansions

the solution trajectory for each robot i is constructed as ζi = toward the goals. The expansion adds new vertices to T ,

hSTATEi (v1 ), . . . , STATEi (v` )i. which could result in creating new equivalence classes.

3.4 Partitioning the Motion Tree into Equivalence When the expansion from Γhc1 ,...,cn i fails or has difficulty

Classes Based on the Discrete Abstraction following the routes σ1 , . . . , σn , which could happen due

to the geometric and differential constraints imposed by the

The motion tree T could be expanded in an uninformed way obstacles and the underlying robot dynamics, the approach

by selecting at each iteration a vertex v ∈ VT at random and penalizes Γhc1 ,...,cn i in order to promote expansions from

applying controls to generate a successor v 0 from v. Such an other equivalence classes. This process of selecting and

expansion, however, would have difficulty finding solutions. expanding from an equivalence class is repeated until a

In contrast, we introduce multi-agent search to guide the solution is found or a runtime limit is reached.

motion-tree expansion. Since multi-agent search operates

over the discrete abstraction, a mapping from the compos- Selecting an Equivalence Class

ite state space to the discrete abstraction must be first de- A weight is defined for each equivalence class and the equiv-

fined. As the discrete abstraction is obtained via roadmaps, alence class with the maximum weight is selected for expan-

5316Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18)

sion. Specifically, the weight for Γhc1 ,...,cn i is defined as

αN R S EL(Γhc1 ,...,cn i )

w(Γhc1 ,...,cn i ) = Pn 2

, (8)

i=1 ( COST (σi ))

where σ1 , . . . , σn denote the routes associated with

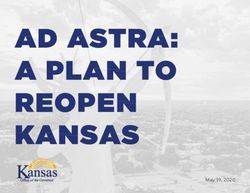

Γhc1 ,...,cn i , 0 < α < 1, and N R S EL(Γhc1 ,...,cn i ) denotes the scene 4

number of times Γhc1 ,...,cn i has been previously selected.

In this way, to promote expansions towards the goals, pref- scene 2

erence is given to equivalence classes associated with short

routes. This constitutes the greedy aspect of the selection

process. To mitigate the potential pitfalls of a greedy selec-

tion, a penalty factor α (0 < α < 1) is introduced, which scene 5

reduces the weight of an equivalence class each time it is se-

lected. In fact, repeatedly selecting Γhc1 ,...,cn i will continue

to reduce its weight so that eventually some other equivalence

class will end up having a greater weight and thus be selected scene 3 scene 6

for expansion. This is essential to ensure probabilistic com-

pleteness and avoid becoming stuck when the motion-tree ex- Figure 3: Scenes used in the experiments (scene 1 shown in Fig. 2).

Videos of solutions can be found at https://goo.gl/sQ6irb. Figure

pansion from Γhc1 ,...,cn i repeatedly fails due to the constraints

best viewed in color and on screen.

imposed by the obstacles and the underlying robot dynamics.

Expanding the Motion Tree from the Equivalence Class 64

After selecting an equivalence class Γhc1 ,...,cn i , the objec- 32

16

runtime [s]

tive becomes to expand the motion tree T from a vertex 8

v ∈ Γhc1 ,...,cn i along the routes σ1 , . . . , σn associated with 4 a

Γhc1 ,...,cn i . The vertex v could be selected at random to pro- 2 b

1

mote expansions from different vertices. Attempts are then 1/2 c

made to expand T from STATE(v) so that each robot i moves 1/4 scene 1 scene 2 scene 3 d s4 s5 s6

from STATEi (v) toward the next configuration in σi . We 1/8 1 2 4 6 8 10 1 2 4 6 8 10 1 2 4 6 8 10 3 3 3

can use proportional-integrative-derivative (PID) controllers nr. of robots

[Spong et al., 2005] to select controls that would steer each

robot toward its target, e.g., by turning the wheels and then Figure 4: Runtime results when comparing (a) our approach to (b)

moving toward the target. The expansion from v continues pGUST, (c) pRRT, and (d) cRRT. Runtime measures everything

from reading the input file to reporting that a solution is found (in-

for several steps until the target is reached or a robot collides cluding for our approach the time to build the roadmaps). Missing

with an obstacle or another robot. If a new state is in col- entries indicate failure by the planner to solve the problem instances

lision, the trajectory generation stops and the approach goes within the runtime limit (set to 90s per run). Note in particular that

back to selecting an equivalence class. Otherwise, the new only our approach could solve scenes 4, 5, 6 (denoted as s4, s5, s6).

state is added to tree T and the partition Γ is updated accord-

ingly. If in the new state each robot has reached its goal, then

a solution is found and the search terminates successfully. faster than our approach since the robot trajectories do not

interfere with each other. In scenes where cooperation is im-

4 Experiments and Results portant, pGUST, as any prioritized approach, has difficulty

finding solutions, even timing out in scenes 4, 5, 6. In con-

Experiments are conducted using vehicle models with nonlin- trast, due to the guidance by multi-agent search, our approach

ear dynamics operating in complex environments, as shown is able to find solutions in a matter of 1-3s.

in Fig. 2 and 3. In many of these scenarios, the robots are

required to cooperate in order to reach their goals.

The approach is compared to a centralized and a priori- 5 Discussion

tized version of RRT [LaValle and Kuffner, 2001], denoted This paper presented an effective multi-robot motion plan-

by cRRT and pRRT, as RRT is one of the most popular ner that enables each robot to reach its desired location while

sampling-based motion planners. The approach is also com- avoiding collisions. The approach also took into account the

pared to a prioritized version of GUST [Plaku, 2015], denoted dynamics of each robot and generated dynamically-feasible

by pGUST, as GUST has been shown to be significantly faster trajectories that can be executed in the physical world.

than RRT and other sampling-based motion planners. The crux of the approach was coupling the ability of

Results in Fig. 4 show significant speedups over cRRT, sampling-based motion planning to handle the complexity

pRRT, and pGUST. As RRT-based planners lack guidance, arising from obstacles and robot dynamics with the ability

they have difficulty finding solutions. pGUST is faster than of multi-agent pathfinding to find non-conflicting routes over

cRRT and pRRT since it uses discrete search to guide the discrete abstractions. The underlying idea was to use multi-

motion-tree expansion. In open scenes (scene 2), where co- agent search over the discrete abstraction as a heuristic to

operation among the robots is not essential, pGUST is even guide sampling-based motion planning as it expands a mo-

5317Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence (IJCAI-18)

tion tree in the composite state space of all the robots. [Khorshid et al., 2011] Mokhtar M Khorshid, Robert C

This work opens up several research directions. Advances Holte, and Nathan R Sturtevant. A polynomial-time al-

in multi-agent search can significantly improve the efficiency gorithm for non-optimal multi-agent pathfinding. In Sym-

and scalability of the framework by reducing the runtime posium on Combinatorial Search, pages 76–83, 2011.

to compute the routes. Moreover, the quality of the routes [LaValle and Kuffner, 2001] Steven M. LaValle and James J.

can also be improved, for example, by requiring multi-agent Kuffner. Randomized kinodynamic planning. Interna-

search to compute non-conflicting routes that maximize the tional Journal of Robotics Research, 20(5):378–400, 2001.

separation among the robots and the clearance from the ob-

stacles. Such routes would be easier to follow, which would [Plaku, 2015] Erion Plaku. Region-guided and sampling-

reduce the runtime for the motion-tree expansion. Another based tree search for motion planning with dynamics.

direction is to enable the team of robots to perform complex IEEE Transactions on Robotics, 31:723–735, 2015.

tasks given by PDDL or some other high-level formalism. [Sharon et al., 2013] Guni Sharon, Roni Stern, Meir Gold-

enberg, and Ariel Felner. The increasing cost tree search

Acknowledgments for optimal multi-agent pathfinding. Artificial Intelligence,

195:470–495, 2013.

This work is supported by NSF IIS1548406.

[Sharon et al., 2015] Guni Sharon, Roni Stern, Ariel Fel-

ner, and Nathan R Sturtevant. Conflict-based search for

References optimal multi-agent pathfinding. Artificial Intelligence,

[Amir et al., 2015] Ofra Amir, Guni Sharon, and Roni Stern. 219:40–66, 2015.

Multi-agent pathfinding as a combinatorial auction. In [Silver, 2005] David Silver. Cooperative pathfinding. In

AAAI Conference on Artificial Intelligence, pages 2003– AAAI Conference on Artificial Intelligence and Interactive

2009, 2015. Digital Entertainment, volume 1, pages 117–122, 2005.

[Barer et al., 2014] Max Barer, Guni Sharon, Roni Stern, [Spong et al., 2005] Mark W. Spong, Seth Hutchinson, and

and Ariel Felner. Suboptimal variants of the conflict-based Mathukumalli Vidyasagar. Robot Modeling and Control.

search algorithm for the multi-agent pathfinding problem. John Wiley and Sons, 2005.

In Symposium on Combinatorial Search, pages 19–27,

2014. [Standley and Korf, 2011] Trevor Standley and Richard

Korf. Complete algorithms for cooperative pathfinding

[Botea and Surynek, 2015] Adi Botea and Pavel Surynek. problems. In International Joint Conference on Artificial

Multi-agent path finding on strongly biconnected digraphs. Intelligence, pages 668–673, 2011.

In AAAI Conference on Artificial Intelligence, pages 2024–

2030, 2015. [Sturtevant and Buro, 2006] Nathan R Sturtevant and

Michael Buro. Improving collaborative pathfinding

[Şucan and Kavraki, 2012] Ioan A. Şucan and Lydia E. using map abstraction. In AAAI Conference on Artificial

Kavraki. A sampling-based tree planner for systems Intelligence and Interactive Digital Entertainment, pages

with complex dynamics. IEEE Transactions on Robotics, 80–85, 2006.

28(1):116–131, 2012.

[Svancara and Surynek, 2017] Jiri Svancara and Pavel

[Erdem et al., 2013] Esra Erdem, Doga Gizem Kisa, Umut Surynek. New flow-based heuristic for search algorithms

Öztok, and Peter Schueller. A general formal frame- solving multi-agent path finding. In International Confer-

work for pathfinding problems with multiple agents. In ence on Agents and Artificial Intelligence, pages 451–458,

AAAI Conference on Artificial Intelligence, pages 290– 2017.

296, 2013.

[Wagner and Choset, 2015] Glenn Wagner and Howie

[Felner et al., 2017] Ariel Felner, Roni Stern, E Shimony, Eli Choset. Subdimensional expansion for multirobot path

Boyarski, M Goldenerg, Guni Sharon, Nathan R Sturte- planning. Artificial Intelligence, 219:1–24, 2015.

vant, Glenn Wagner, and Pavel Surynek. Search-based

[Yu and LaValle, 2013] Jingjin Yu and Steven M LaValle.

optimal solvers for the multi-agent pathfinding problem:

Summary and challenges. In Symposium on Combinato- Structure and intractability of optimal multi-robot path

rial Search, pages 29–37, 2017. planning on graphs. In AAAI Conference on Artificial In-

telligence, pages 1443–1449, 2013.

[Goldenberg et al., 2014] Meir Goldenberg, Ariel Felner,

Roni Stern, Guni Sharon, Nathan R Sturtevant, Robert C

Holte, and Jonathan Schaeffer. Enhanced partial expansion

a. Journal of Artificial Intelligence Research, 50:141–187,

2014.

[Kavraki et al., 1996] Lydia E. Kavraki, Petr Švestka, Jean-

Claude Latombe, and Mark H. Overmars. Probabilistic

roadmaps for path planning in high-dimensional config-

uration spaces. IEEE Transactions on Robotics and Au-

tomation, 12(4):566–580, 1996.

5318You can also read