Improving Distinction between ASR Errors and Speech Disfluencies with Feature Space Interpolation


Seongmin Park, Dongchan Shin, Sangyoun Paik, Subong Choi, Alena Kazakova, Jihwa Lee

ActionPower, Seoul, Republic of Korea

{seongmin.park, dongchan.shin, sangyoun.paik, subong.choi, alena.kazakova,

jihwa.lee}@actionpower.kr

Abstract

Fine-tuning pretrained language models (LMs) is a popular ap-

proach to automatic speech recognition (ASR) error detection

during post-processing. While error detection systems often

take advantage of statistical language archetypes captured by

arXiv:2108.01812v1 [cs.CL] 4 Aug 2021

LMs, at times the pretrained knowledge can hinder error de-

tection performance. For instance, presence of speech disflu-

encies might confuse the post-processing system into tagging

disfluent but accurate transcriptions as ASR errors. Such con-

Figure 1: Demonstration of speech disfluency and ASR error.

fusion occurs because both error detection and disfluency de-

tection tasks attempt to identify tokens at statistically unlikely

positions. This paper proposes a scheme to improve existing

LM-based ASR error detection systems, both in terms of de- will utilizing a pretrained LM benefit a single task? Such con-

tection scores and resilience to such distracting auxiliary tasks. cerns arise especially in production settings, where customer

Our approach adopts the popular mixup method in text feature orations tend to contain significantly more disfluencies than in

space and can be utilized with any black-box ASR output. To audio found in academic benchmarks. This situation is illus-

demonstrate the effectiveness of our method, we conduct post- trated in Figure 1. In the figure, ASR output of disfluent speech

processing experiments with both traditional and end-to-end (ASR output) is compared with its reference transcript (Ground

ASR systems (both for English and Korean languages) with 5 truth). Words like and um in the reference are annotated as dis-

different speech corpora. We find that our method improves fluent. The post-processing system must only detect ASR errors

both ASR error detection F1 scores and reduces the number (red and blue), while refraining from detecting merely disfluent

of correctly transcribed disfluencies wrongly detected as ASR speech (green) as ASR errors.

errors. Finally, we suggest methods to utilize resulting LMs This paper proposes a regularization scheme that im-

directly in semi-supervised ASR training. proves ASR error detection performance by weakening an LM’s

Index Terms: disfluency detection, error detection, post- propensity to tag accurate transcriptions of disfluent speech as

processing ASR errors. Our method is applied during the LM fine-tuning

stage. Resulting LMs have been applied as drop-in replace-

1. Introduction ments in existing ASR error correction systems in production.

Furthermore, LM artifacts from our method can be uti-

Post-processing is the final auditor of an automatic speech lized beyond post-prcessing. Recent advancements in semi-

recognition (ASR) system before its output is presented to con- supervised ASR training take advantage of deep LMs to cre-

sumers or downstream tasks. Two notable tasks often entrusted ate pseudo-labels for unlabeled audio [14, 15]. To ensure the

to the post-processing step are ASR error detection [1, 2, 3] and quality of synthetic labels, [14] uses an LM during beam-search

speech disfluency detection [4, 5, 6]. decoding; [15] filters created labels by length-normalized lan-

A recent trend in post-processing is to fine-tune pretrained guage model scores. In both cases, an LM robust to speech

transformer-based language models (LMs) such as BERT [7] disfluencies is essential in improving transcription accuracy.

or GPT-2 [8] for designated tasks. Utilizing language patterns

captured by huge neural networks during their unsupervised

pretraining yields considerable advantages in downstream lan- 2. Related work

guage tasks [9, 10, 11]. When using pretrained LMs, both ASR 2.1. ASR post-processing

error correction and speech disfluency detection tasks employ

a similar setup, where the LM is further fine-tuned with task- Post-processing is a task that complements ASR systems by an-

specific data and label, often in a sequence-labeling scheme alyzing their output. Compared to tasks involved in directly ma-

[12, 13]. Disfluency in speech, by definition, consists of se- nipulating components within the ASR system, the black-box

quence of tokens unlikely to appear together. ASR errors are approach of ASR post-processing yields several advantages. It

statistically likely to introduce tokens heavily out of context. is often intractable for the LM inside an ASR system to account

Both descriptions resemble the pretraining objective of LMs, for and rank all hypothesis presented by the acoustic model [?].

which is to learn a language's statistical landscape. A post-processing system is free from such constraints within

From such observation, a natural question follows: in a sit- an ASR system [1]. ASR post-processing also helps improve

uation where objectives of two post-processing tasks are mutu- performance in downstream tasks [16] as well as in domain

ally exclusive (but both attune to the LM pretraining objective), adaptation flexibility [17].2.1.1. ASR error detection found success in applying the method to text data. [27] con-

structs a new token sequence by randomly selecting a token

One common approach to ASR error detection is through ma-

from two different sequences at each index. [28] suggests ap-

chine translation. Machine translation approaches in error de-

plying mixup in feature space, after an intermediary layer of a

tection commonly interpret error detection as a sequence-to-

pretrained LM. New input data is then generated by reversing

sequence task, and construct a system to translate erroneous

the synthetic feature to find the most similar token in the vocab-

transcriptions to correct sentences [3, 11, 18]. [3] suggests a

ulary. While these two works involve interpolating text inputs,

method applicable to off-the-shelf ASR systems. [11] and [18]

our method differs significantly in that we do not directly gener-

translates acoustic model outputs directly to grammatical text.

ate augmented training samples; instead, we utilize mixup as a

In some domains, however, necessity of huge parallel train- regularizing layer during the training process. Our method also

ing data can render translation-based error detection infeasible does not require reversing word-embeddings or discriminative

[19]. Another popular approach models ASR error detection filtering using GPT-2 introduced in [28].

as a sequence labeling problem. This approach requires much [29], a work most similar to ours, applies manifold mixup

less training samples compared to sequence-to-sequence sys- on text data to calibrate a model to work best on out-of-

tems. Both [9] and [?] use a large, pretrained deep LM to gauge distribution data. However, the study focuses on sentence-level

the likeliness of each output token from an ASR system. classification, which requires a substantially different feature

extraction strategy from the work in this paper. Our paper sug-

2.1.2. Speech disfluency detection gests a token-level mixup method, and aims to gauge the impact

The aim of a disfluency detection task is to separate well- of single task (ASR error detection) regularization on an auxil-

formed sentences from its unnatural counterparts. Disfluencies iary task (speech disfluency detection).

in speech include deviant language, such as hesitation, repeti-

tion, correction, and false starts [5]. Speech disfluencies dis- 3. Methodology

play regularities that can be detected with statistical systems

[4]. While various suggestions such as semi-supervised [6] and 3.1. BERT for sequence tagging

self-supervised [20, 21] approaches were proposed, supervised To utilize BERT for a sequence labeling problem, a token clas-

training on pretrained LM remains state-of-the-art [10, 12]. sification head (TCH) must be added after BERT’s final layer.

Both [10] and [12] fine-tunes deep LMs for sequence tagging. There is no restriction on what constitutes a TCH. In [12], for

example, a combination of conditional random field and a fully

2.2. Data interpolation for regularization connected network was attached to BERT’s output as a TCH. In

our study, a simple fully-connected layer with the same number

Interpolating existing data for data augmentation is a popu-

of inputs and outputs as the original input token sequence suf-

lar method for regularization. The mixup method suggested

fices as a TCH because our aim is to measure the effectiveness

in [22] has been particularly effective, especially in the com-

of regularization that takes place between BERT and the TCH.

puter vision domain [23, 24, 25]. Given dataset D =

We modify a reference BERT implementation [30] with a TCH.

{(x0 , y0 ), (x1 , y1 ) . . . , (xn , yn )} consisting of (data, label)

A single data sample during the supervised training stage

pairs, mixup “mixes” two random training samples (xi , yi ) and

consists of input token sequence x = x0 , x1 , . . . , xn , and a

(xj , yj ) to generate a new training sample (xm , ym ):

sequence of labels for each token, y = y0 , y1 . . . , yn . Each

corresponding label has a value of 1 if the token contains a

xm = xi ∗ λ + xj ∗ (1 − λ) (1)

character with erroneous ASR output, and 0 if the token con-

tains no wrong character. All input sequences are tokenized

ym = yi ∗ λ + yj ∗ (1 − λ) (2)

into subword tokens [31] before entering BERT. For the rest of

where this paper, boldfaced letters denote vectors.

λ ∼ Beta(α, α)

3.2. Feature space interpolation

α is a hyperparameter for the mixup operation that charac-

terizes the Beta distribution to randomly sample λ from. Our proposed method adds a regularizing layer between

[26] suggests manifold mixup to utilize mixup in the feature BERT’s output and the token classification head. BERT pro-

space after the data has been passed through a vectorizing layer, duces a hidden state sequence h, with length equal to that of

such as a deep neural network (DNN). Whereas mixup explicitly input token sequence x:

generates new data by interpolating existing samples in the data

space, manifold mixup adds a regularization layer to an existing h = h0 , h1 , . . . , hn = BERT (x) (3)

architecture that mixes data in its vectorized form. In essence, From h we obtain hshuf f led , a randomly permuted ver-

mixup and its variants discourage a model from making extreme sion of h. Each corresponding label in y is also rearranged

decisions. A hidden space visualization in [26] shows that cor- accordingly, creating a new label vector yshuf f led . The same

rect targets and non-correct targets are better separated within index-wise shuffle applied to h applies to y, such that each la-

the mixup model because it learns to handle intermediate values bel yi originally assigned to an input token xi follows the new

instead of making wild guesses in face of uncertainty. index for that value during the shuffling process:

Because text is both ordered and discrete, mixup with text

data is best applied at feature space. Magnitudes of each to- hshuf f led = hs0 , hs1 , . . . , hsn

ken id in a tokenized text sequence does not convey any relative

meaning; whereas in an image, varying the degrees of continu- yshuf f led = ys0 , ys1 . . . , ysn

ous pixel values does gradually manipulate its properties. where

While mixup is yet to be widely studied in speech-to-text

or natural language processing domains, several studies have s0 . . . sn = RandomP ermutation(0...n)4. Experiments

4.1. Experiment setup

To obtain ASR outputs on which to perform error detection, we

process multiple speech corpora through black box ASR sys-

tems. Detailed descriptions of datasets used is provided in the

next section. Within the decoded transcript, we tag subword to-

ken indices that contain ASR errors to be used as labels during

the post-processing fine-tuning. We also tag indices in the tran-

scription with speech disfluencies. To demonstrate the effec-

tiveness of our method across various off-the-shelf ASR envi-

ronments, we transcribe all available speech corpora with both

a traditional ASR system and an end-to-end (E2E) ASR system.

For English corpora, we utilize a pretrained LibriSpeech Kaldi

[32] model (factorized time-delay neural networks-based chain

model) for the traditional system, and a reference wav2letter++

(w2l++) [33] system for the E2E system. For Korean corpora,

we use the same respective architectures trained from scratch

on internal data.

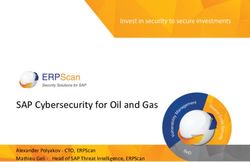

Figure 2: Overview of feature-space regularization for text. To create error-labeled decoded transcripts, each decoded

transcript is compared with corresponding ground truth refer-

ence transcription. We use the Wagner-Fischer algorithm [34]

to build a shortest-distance edit matrix from the decoded tran-

script to its reference transcription. Traversing the edit matrix

from the lowest, rightmost column to the highest, leftmost col-

umn yields a sequence of delete, replace, and insert instructions

to convert the decoded transcript into the reference transcript.

Each character instructed to be deleted and replaced in the de-

coded transcript is marked as ASR errors.

Disfluency labels in reference transcripts must be trans-

ferred over to corresponding positions within the decoded tran-

scripts. Since the introduction of ASR errors causes the de-

coder transcripts to be mere approximations of its ground truth

transcripts, mapping each character index from the reference

transcript to the decoded transcript is not rigorously a defined

process. Here we again utilize the Wagner-Fischer algorithm,

mapping only the character indices in the decoded transcript

without any edit instructions to their corresponding indices in

Figure 3: Effect of α and normalized counts at which the system the reference transcript.

wrongly detects disfluent tokens as ASR errors. Regions within We judge the effectiveness of our regularization method

1 variance of average is shaded. by two criteria: how much it helps in error detection (Error

detection F1 ), and how much it reduces the number of accu-

rately transcribed disfluent speech tagged as errors (Disfluencies

wrongly tagged).

Next, we apply the mixup process element-wise to h and

hshuf f led , according to equation (1) and (2):

4.2. Desciption of datasets

hnew = (h λ) + (hshuf f led (1 − λ)) (4) We tested our proposed methodology on five different speech

datasets (4 English, 1 Korean). All speech corpora were pro-

Similarly, we also mix the original label vector y with its per- vided with reference transcripts hand-annotated with speech

muted counterpart yshuf f led : disfluency positions.

ynew = (y λ) + (yshuf f led (1 − λ)) (5) 4.2.1. SCOTUS

The SCOTUS corpus [35] consists of seven oral arguments be-

Where λ ∼ Beta(α, α). This process “softens” the originally

tween justices and advocates. Nested disfluency tags suggested

binary labels into range [0, 1].

in [4] were flattened and hand-annotated.

For the mixup hyperparameter α, we empirically chose

value a of 0.2. Figure 3 shows the experimentally observed rela-

4.2.2. CallHome

tionship between α and the normalized counts of the fine-tuned

system erroneously tagging disfluent speech as ASR errors. The CallHome corpus [36] is a collection of telephone calls.

hnew is propagated to the token classification head, whose Each participant in the recording was asked to call a person of

prediction loss is calculated using binary cross entropy against their choice. Similar to SCOTUS, nested disfluency tags were

ynew , instead of the original ground truth label y. flattened and hand-annotated [35].4.2.3. AMI Meeting Corpus (AMI) The proposed regularization in this paper shows greater in-

fluence as the number of training data increases (AMI and ICSI).

The AMI Meeting Corpus [37] contains recordings of multiple-

One surprising outcome is the unchanging scores on the Call-

speaker meetings on a wide range of subjects. Multiple types

Home corpus. Analysis of the corpus and high F1 scores in

of crowd-sourced annotations are available, including speech

error detection indicate the uniform scores are a result of dis-

disfluencies, body gestures, and named entities.

tinct patterns in ASR errors during transcription; the fine-tuned

LM in every setting learns to confidently and consistently pick

4.2.4. ICSI Meeting Corpus (ICSI)

out only certain tokens as ASR errors.

The ICSI Meeting Corpus [38] is a collection of multi-person

meeting recordings. The ICSI Meeting corpus provides the Table 2: All results are subword token level scores, averaged

highest count of disfluency-annotated transcripts out of the five over 6 repeated experiments with different random seeds.

corpora used in this paper.

Corpus Error detection F1 Disf. wrongly tagged

4.2.5. KsponSpeech (Kspon)

Mixup No mixup Mixup No mixup

KsponSpeech [39] is a collection of spontaneous Korean

speech. The corpora is a recording of sub-10 seconds of spon- SCOTUS (w2l++) 0.467 0.462 254 312

taneous Korean speech. Each recording is labeled with disflu- SCOTUS (Kaldi) 0.651 0.662 392.5 345.75

encies and mispronunciations. CallHome (w2l++) 0.910 0.910 164 164

CallHome (Kaldi) 0.950 0.950 324 344

Table 1: Statistics for each corpus AMI (w2l++) 0.760 0.746 253.25 291.25

AMI (Kaldi) 0.920 0.915 140 141.25

Corpus Training samples Token level error rate ICSI (w2l++) 0.347 0.340 1556 1559.75

ICSI (Kaldi) 0.777 0.771 1085 1124.25

E2E Traditional E2E Traditional Kspon (w2l++) 0.332 0.308 3 2.3

SCOTUS 1063 2163 0.12 0.12 Kspon (Kaldi) 0.465 0.464 8 5

CallHome 1420 3058 0.43 0.48

AMI 4062 6963 0.35 0.45

ICSI 16389 24165 0.10 0.22

Kspon 2406 2406 0.06 0.15 5. Conclusion

The discrete nature of textual data has stifled adaptation of

ASR output for each corpus was randomly split into 8:1:1 mixup in natural language processing and speech-to-text do-

train:validation:test splits. Table 1 describes the characteris- mains. In this study we propose a way to effectively apply

tics of ASR outputs with which we fine-tune BERT. Training mixup to improve post-processing error detection.

samples denote the number of sub-word tokenized sequences We extensively demonstrate the effect of our proposed ap-

obtained from each ASR output. Token level error rate shows proach on various speech corpora. Impact of feature-space

the rate at which subword tokens contain errors in transcrip- mixup is especially pronounced in high resource settings. The

tion. This latter statistic is purely concerned with processing proposed regularization layer is also computationally inexpen-

ASR outputs, and thus conveys a slightly different meaning than sive and can be easily retrofitted to existing systems as a simple

conventional ASR error metrics, such as character error rates. module. While we chose the popular BERT LM for our experi-

Most importantly, words missing in ASR outputs (compared to ments, our method can be applied to fine-tuning any deep LM in

ground-truth transcriptions) is not tallied in Table 1’s token level a sequence tagging setting. We also highlighted potential uses

error rate. This omission was introduced on purpose, because at of resulting LMs in semi-supervised ASR training as pseudo-

inference time our model is not expected to introduce new edits label filters. Testing the effectiveness of this approach in other

but merely tag each subword token as correct or incorrect. sequence-level tasks is left to further research.

Number of training examples obtained from a single cor-

pus transcribed with English E2E ASR differs from the num-

ber of training examples obtained from the same corpus tran- 6. References

scribed with English traditional ASR. This discrepancy stems [1] M. Feld, S. Momtazi, F. Freigang, D. Klakow, and C. Müller,

from the utilized E2E system’s tendency to refrain from tran- “Mobile texting: can post-asr correction solve the issues? an

scribing words with low confidence. Since the purpose of this experimental study on gain vs. costs,” in Proceedings of the

study is to demonstrate the effectiveness of our proposed reg- 2012 ACM international conference on Intelligent User Inter-

ularization method in various ASR post-processing setups, the faces, 2012, pp. 37–40.

discrepancy in the number of training examples from different [2] M. Ogrodniczuk, Ł. Kobyliński, and P. A. N. I. P. Infor-

ASR systems was generated on purpose. matyki, Proceedings of the PolEval 2020 Workshop. Institute

of Computer Science. Polish Academy of Sciences, 2020.

[Online]. Available: https://books.google.co.kkjkkr/books?id=

4.3. Results

grRSzgEACAAJ

Results of applying our proposed method is presented Table 2. [3] A. Mani, S. Palaskar, N. V. Meripo, S. Konam, and F. Metze,

Corpus names in the first column are each marked with the “ASR Error Correction and Domain Adaptation Using Machine

name of ASR system used for transcription. The second and Translation,” in ICASSP 2020-2020 IEEE International Con-

third columns of table 3 show error detection F1 scores with ference on Acoustics, Speech and Signal Processing (ICASSP).

and without applying proposed regularization, respectively. The IEEE, 2020, pp. 6344–6348.

fourth and fifth columns report the number of correctly tran- [4] E. E. Shriberg, “Preliminaries to a theory of speech disfluencies,”

scribed disfluencies wrongly tagged as ASR errors. PhD Thesis, Citeseer, 1994.[5] E. Salesky, S. Burger, J. Niehues, and A. Waibel, “Towards fluent [23] T. He, Z. Zhang, H. Zhang, Z. Zhang, J. Xie, and M. Li, “Bag

translations from disfluent speech,” in 2018 IEEE Spoken Lan- of tricks for image classification with convolutional neural net-

guage Technology Workshop (SLT). IEEE, 2018, pp. 921–926. works,” in Proceedings of the IEEE/CVF Conference on Com-

puter Vision and Pattern Recognition, 2019, pp. 558–567.

[6] F. Wang, W. Chen, Z. Yang, Q. Dong, S. Xu, and B. Xu, “Semi-

supervised disfluency detection,” in Proceedings of the 27th In- [24] D. Berthelot, N. Carlini, I. Goodfellow, N. Papernot, A. Oliver,

ternational Conference on Computational Linguistics, 2018, pp. and C. Raffel, “Mixmatch: A holistic approach to semi-supervised

3529–3538. learning,” arXiv preprint arXiv:1905.02249, 2019.

[7] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “BERT: Pre- [25] A. Bochkovskiy, C.-Y. Wang, and H.-Y. M. Liao, “Yolov4: Op-

training of Deep Bidirectional Transformers for Language Under- timal speed and accuracy of object detection,” arXiv preprint

standing,” in Proceedings of the 2019 Conference of the North arXiv:2004.10934, 2020.

American Chapter of the Association for Computational Linguis-

[26] V. Verma, A. Lamb, C. Beckham, A. Najafi, I. Mitliagkas,

tics: Human Language Technologies, Volume 1 (Long and Short

D. Lopez-Paz, and Y. Bengio, “Manifold mixup: Better repre-

Papers), 2019, pp. 4171–4186.

sentations by interpolating hidden states,” in International Con-

[8] A. Radford, J. Wu, R. Child, D. Luan, D. Amodei, I. Sutskever ference on Machine Learning. PMLR, 2019, pp. 6438–6447.

et al., “Language models are unsupervised multitask learners,”

[27] D. Guo, Y. Kim, and A. M. Rush, “Sequence-level Mixed Sample

OpenAI blog, vol. 1, no. 8, p. 9, 2019.

Data Augmentation,” in Proceedings of the 2020 Conference on

[9] Y. Weng, S. S. Miryala, C. Khatri, R. Wang, H. Zheng, P. Molino, Empirical Methods in Natural Language Processing (EMNLP),

M. Namazifar, A. Papangelis, H. Williams, F. Bell, and others, 2020, pp. 5547–5552.

“Joint Contextual Modeling for ASR Correction and Language

[28] R. Zhang, Y. Yu, and C. Zhang, “SeqMix: Augmenting Active

Understanding,” in ICASSP 2020-2020 IEEE International Con-

Sequence Labeling via Sequence Mixup,” in Proceedings of the

ference on Acoustics, Speech and Signal Processing (ICASSP).

2020 Conference on Empirical Methods in Natural Language

IEEE, 2020, pp. 6349–6353.

Processing (EMNLP), 2020, pp. 8566–8579.

[10] N. Bach and F. Huang, “Noisy BiLSTM-Based Models for Dis-

[29] L. Kong, H. Jiang, Y. Zhuang, J. Lyu, T. Zhao, and

fluency Detection.” in INTERSPEECH, 2019, pp. 4230–4234.

C. Zhang, “Calibrated Language Model Fine-Tuning for

[11] O. Hrinchuk, M. Popova, and B. Ginsburg, “Correction of In- and Out-of-Distribution Data,” in Proceedings of the

Automatic Speech Recognition with Transformer Sequence- 2020 Conference on Empirical Methods in Natural Language

To-Sequence Model,” in ICASSP 2020-2020 IEEE Interna- Processing (EMNLP). Online: Association for Computational

tional Conference on Acoustics, Speech and Signal Processing Linguistics, Nov. 2020, pp. 1326–1340. [Online]. Available:

(ICASSP). IEEE, 2020, pp. 7074–7078. https://www.aclweb.org/anthology/2020.emnlp-main.102

[12] D. Lee, B. Ko, M. C. Shin, T. Whang, D. Lee, E. H. Kim, E. Kim, [30] T. Wolf, L. Debut, V. Sanh, J. Chaumond, C. Delangue, A. Moi,

and J. Jo, “Auxiliary Sequence Labeling Tasks for Disfluency De- P. Cistac, T. Rault, R. Louf, M. Funtowicz, and others, “Hugging-

tection,” arXiv preprint arXiv:2011.04512, 2020. Face’s Transformers: State-of-the-art Natural Language Process-

ing,” ArXiv, pp. arXiv–1910, 2019.

[13] T. Tanaka, R. Masumura, H. Masataki, and Y. Aono, “Neural error

corrective language models for automatic speech recognition,” in [31] R. Sennrich, B. Haddow, and A. Birch, “Neural machine transla-

INTERSPEECH, 2018. tion of rare words with subword units,” in Proceedings of the 54th

Annual Meeting of the Association for Computational Linguistics

[14] D. S. Park, Y. Zhang, Y. Jia, W. Han, C.-C. Chiu, B. Li, Y. Wu, and

(Volume 1: Long Papers), 2016, pp. 1715–1725.

Q. V. Le, “Improved noisy student training for automatic speech

recognition,” arXiv preprint arXiv:2005.09629, 2020. [32] D. Povey, A. Ghoshal, G. Boulianne, L. Burget, O. Glembek,

N. Goel, M. Hannemann, P. Motlicek, Y. Qian, P. Schwarz,

[15] W.-N. Hsu, A. Lee, G. Synnaeve, and A. Hannun, “Semi-

J. Silovsky, G. Stemmer, and K. Vesely, “The Kaldi Speech

supervised speech recognition via local prior matching,” arXiv

Recognition Toolkit,” in IEEE 2011 Workshop on Automatic

preprint arXiv:2002.10336, 2020.

Speech Recognition and Understanding. IEEE Signal Process-

[16] L. Wang, M. Fazel-Zarandi, A. Tiwari, S. Matsoukas, and ing Society, Dec. 2011, event-place: Hilton Waikoloa Village, Big

L. Polymenakos, “Data Augmentation for Training Dialog Island, Hawaii, US.

Models Robust to Speech Recognition Errors,” arXiv preprint

[33] V. Pratap, A. Hannun, Q. Xu, J. Cai, J. Kahn, G. Syn-

arXiv:2006.05635, 2020.

naeve, V. Liptchinsky, and R. Collobert, “wav2letter++: The

[17] C. Anantaram and S. K. Kopparapu, “Adapting general-purpose fastest open-source speech recognition system. arxiv 2018,” arXiv

speech recognition engine output for domain-specific natural lan- preprint arXiv:1812.07625.

guage question answering,” arXiv preprint arXiv:1710.06923,

[34] G. Navarro, “A guided tour to approximate string matching,” ACM

2017.

computing surveys (CSUR), vol. 33, no. 1, pp. 31–88, 2001, pub-

[18] A. Mani, S. Palaskar, and S. Konam, “Towards Understanding lisher: ACM New York, NY, USA.

ASR Error Correction for Medical Conversations,” ACL 2020,

[35] V. Zayats, M. Ostendorf, and H. Hajishirzi, “Multi-domain disflu-

p. 7, 2020.

ency and repair detection,” in Fifteenth Annual Conference of the

[19] M. Popel and O. Bojar, “Training tips for the transformer model,” International Speech Communication Association, 2014.

The Prague Bulletin of Mathematical Linguistics, vol. 110, no. 1,

[36] Linguistic Data Consortium, CABank CallHome English Corpus.

pp. 43–70, 2018, publisher: Sciendo.

TalkBank, 2013. [Online]. Available: http://ca.talkbank.org/

[20] S. Wang, W. Che, Q. Liu, P. Qin, T. Liu, and W. Y. Wang, access/CallHome/eng.html

“Multi-task self-supervised learning for disfluency detection,” in

[37] I. McCowan, J. Carletta, W. Kraaij, S. Ashby, S. Bourban,

Proceedings of the AAAI Conference on Artificial Intelligence,

M. Flynn, M. Guillemot, T. Hain, J. Kadlec, V. Karaiskos et al.,

vol. 34, 2020, pp. 9193–9200, issue: 05.

“The ami meeting corpus,” in Proceedings of Measuring Behavior

[21] S. Wang, Z. Wang, W. Che, and T. Liu, “Combining Self-Training 2005, 5th International Conference on Methods and Techniques in

and Self-Supervised Learning for Unsupervised Disfluency De- Behavioral Research. Noldus Information Technology, 2005, pp.

tection,” arXiv preprint arXiv:2010.15360, 2020. 137–140.

[22] H. Zhang, M. Cisse, Y. N. Dauphin, and D. Lopez-Paz, “mixup: [38] A. Janin, D. Baron, J. Edwards, D. Ellis, D. Gelbart, N. Morgan,

Beyond Empirical Risk Minimization,” in International Confer- B. Peskin, T. Pfau, E. Shriberg, A. Stolcke, and C. Wooters, “The

ence on Learning Representations, 2018. ICSI meeting corpus,” 2003, pp. I–364.[39] J.-U. Bang, S. Yun, S.-H. Kim, M.-Y. Choi, M.-K. Lee, Y.-J. Kim,

D.-H. Kim, J. Park, Y.-J. Lee, and S.-H. Kim, “KsponSpeech:

Korean Spontaneous Speech Corpus for Automatic Speech

Recognition,” Applied Sciences, vol. 10, no. 19, 2020. [Online].

Available: https://www.mdpi.com/2076-3417/10/19/6936You can also read
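As an illustration of the feature space interpolation in Section 3.2 (equations 3 to 5), the following is a minimal sketch in plain Python. It assumes that the hidden states are given as a list of per-token float vectors and that a single λ is drawn per sequence; the function name `token_level_mixup`, the toy hidden states, and the use of a seeded `random.Random` are illustrative assumptions, not the authors' implementation.

```python
import random


def token_level_mixup(hidden, labels, alpha=0.2, rng=None):
    """Mix each token's hidden state and label with those of a shuffled token.

    hidden: list of per-token feature vectors (lists of floats), standing in
            for the BERT output h. labels: list of 0/1 ASR error labels y.
    Returns (h_new, y_new, lam, perm). All names here are hypothetical.
    """
    rng = rng or random.Random()
    n = len(hidden)
    perm = list(range(n))
    rng.shuffle(perm)                      # s_0 ... s_n = RandomPermutation(0 ... n)
    lam = rng.betavariate(alpha, alpha)    # lambda ~ Beta(alpha, alpha)
    h_new = [
        [lam * a + (1.0 - lam) * b for a, b in zip(hidden[i], hidden[perm[i]])]
        for i in range(n)
    ]
    # Labels follow the same permutation, which softens them into [0, 1].
    y_new = [lam * labels[i] + (1.0 - lam) * labels[perm[i]] for i in range(n)]
    return h_new, y_new, lam, perm


# Toy example: four tokens with 2-dimensional "hidden states".
hidden = [[0.1, 0.9], [0.8, 0.2], [0.5, 0.5], [0.3, 0.7]]
labels = [0, 1, 0, 0]
h_new, y_new, lam, perm = token_level_mixup(hidden, labels, rng=random.Random(0))
```

Because the same permutation is applied to features and labels, the total label mass of the sequence is preserved; only its distribution over token positions is smoothed.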
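Section 3.2 states that the token classification head is trained with binary cross entropy against the softened labels rather than the original binary ones. A small sketch of that loss with soft targets is given below; the function name `soft_bce`, the clamping constant `eps`, and the assumption that the head outputs per-token probabilities are illustrative choices rather than details from the paper.

```python
import math


def soft_bce(probs, soft_labels, eps=1e-12):
    """Mean binary cross entropy between predicted probabilities and soft targets.

    probs: per-token predicted probability of "ASR error" (assumed to come
           from a sigmoid output). soft_labels: mixed labels in [0, 1].
    """
    total = 0.0
    for p, y in zip(probs, soft_labels):
        p = min(max(p, eps), 1.0 - eps)    # clamp to avoid log(0)
        total += -(y * math.log(p) + (1.0 - y) * math.log(1.0 - p))
    return total / len(probs)


# With a soft target of 0.3, predicting 0.3 is penalized less than predicting 0.9.
loss_matched = soft_bce([0.3], [0.3])
loss_extreme = soft_bce([0.9], [0.3])
```

With soft targets the loss is minimized by predicting the target value itself, which is one way to see why mixup discourages extreme predictions.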
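The error-labeling procedure of Section 4.1 (a Wagner-Fischer edit matrix followed by a backward traversal) can be sketched as follows. This is a character-level sketch under stated assumptions: unit costs for every edit, a fixed tie-breaking order during the traversal, and hypothetical function names `edit_matrix` and `align`. The paper does not specify these details.

```python
def edit_matrix(src, tgt):
    """Wagner-Fischer dynamic programming table of edit distances."""
    rows, cols = len(src) + 1, len(tgt) + 1
    d = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        d[i][0] = i
    for j in range(cols):
        d[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if src[i - 1] == tgt[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # delete from decoded
                          d[i][j - 1] + 1,         # insert into decoded
                          d[i - 1][j - 1] + cost)  # keep or replace
    return d


def align(decoded, reference):
    """Return (error_flags, match) for the decoded transcript.

    error_flags[i] is 1 if decoded[i] must be deleted or replaced.
    match maps unedited decoded indices to reference indices, which is the
    mapping used to carry disfluency labels over from the reference.
    """
    d = edit_matrix(decoded, reference)
    i, j = len(decoded), len(reference)
    error_flags, match = [0] * len(decoded), {}
    while i > 0 or j > 0:
        same = i > 0 and j > 0 and decoded[i - 1] == reference[j - 1]
        if same and d[i][j] == d[i - 1][j - 1]:
            match[i - 1] = j - 1                   # no edit instruction
            i, j = i - 1, j - 1
        elif i > 0 and j > 0 and not same and d[i][j] == d[i - 1][j - 1] + 1:
            error_flags[i - 1] = 1                 # replace
            i, j = i - 1, j - 1
        elif i > 0 and d[i][j] == d[i - 1][j] + 1:
            error_flags[i - 1] = 1                 # delete
            i -= 1
        else:
            j -= 1                                 # insert: nothing to tag
    return error_flags, match


flags, match = align("the cot sat", "the cat sat")
```

Insert instructions leave no flag on the decoded side, which is consistent with the statement in Section 4.2 that words missing from the ASR output are not tallied in the token level error rate.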
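Section 3.1 defines a subword token's label as 1 if the token contains any character with erroneous ASR output. A minimal sketch of that projection from character-level flags to token-level labels is shown below. It assumes each subword token is described by a half-open character span `(start, end)` into the decoded transcript; the spans and the function name `token_labels` are hypothetical and would in practice come from whatever tokenizer is used.

```python
def token_labels(char_errors, spans):
    """Label a token 1 if any character inside its span is flagged as an error.

    char_errors: list of 0/1 flags, one per character of the decoded transcript.
    spans: list of (start, end) half-open character offsets, one per token.
    """
    return [1 if any(char_errors[start:end]) else 0 for start, end in spans]


# "the cot sat" with the character 'o' (index 5) flagged as an ASR error.
char_errors = [0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0]
spans = [(0, 3), (4, 7), (8, 11)]     # "the", "cot", "sat"
labels = token_labels(char_errors, spans)
```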
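The two evaluation criteria of Section 4.1, error detection F1 and the number of correctly transcribed disfluencies wrongly tagged as ASR errors, can be computed at token level roughly as follows. This sketch assumes three aligned 0/1 lists per sequence (predictions, gold error labels, and disfluency flags); the function names and the toy data are illustrative and not taken from the paper.

```python
def error_detection_f1(pred, gold):
    """Token-level F1 for the positive class (token tagged as ASR error)."""
    tp = sum(1 for p, g in zip(pred, gold) if p == 1 and g == 1)
    fp = sum(1 for p, g in zip(pred, gold) if p == 1 and g == 0)
    fn = sum(1 for p, g in zip(pred, gold) if p == 0 and g == 1)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def disfluencies_wrongly_tagged(pred, gold, disfluent):
    """Count disfluent tokens that were transcribed correctly but tagged as errors."""
    return sum(
        1 for p, g, d in zip(pred, gold, disfluent)
        if p == 1 and g == 0 and d == 1
    )


pred      = [1, 0, 1, 1, 0]
gold      = [1, 0, 0, 1, 1]
disfluent = [0, 0, 1, 0, 0]
f1 = error_detection_f1(pred, gold)
wrong = disfluencies_wrongly_tagged(pred, gold, disfluent)
```

In this toy case the only false positive falls on a disfluent token, which is exactly the kind of mistake the proposed regularization is meant to reduce.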