Preservation of Digital Data with Self-Validating, Self-Instantiating

Knowledge-Based Archives

Bertram Ludäscher Richard Marciano Reagan Moore

San Diego Supercomputer Center, U.C. San Diego

{ludaesch,marciano,moore}@sdsc.edu

Abstract

Digital archives are dedicated to the long-term preservation of electronic information and have the mandate to enable sustained access despite rapid technology changes. Persistent archives are confronted with heterogeneous data formats, helper applications, and platforms being used over the lifetime of the archive. This is not unlike the interoperability challenges for which mediators are devised. To prevent technological obsolescence over time and across platforms, a migration approach for persistent archives is proposed based on an XML infrastructure.

We extend current archival approaches that build upon standardized data formats and simple metadata mechanisms for collection management, by involving high-level conceptual models and knowledge representations as an integral part of the archive and the ingestion/migration processes. Infrastructure independence is maximized by archiving generic, executable specifications of (i) archival constraints (i.e., “model validators”), and (ii) archival transformations that are part of the ingestion process. The proposed architecture facilitates construction of self-validating and self-instantiating knowledge-based archives. We illustrate our overall approach and report on first experiences using a sample collection from a collaboration with the National Archives and Records Administration (NARA).

1 Background and Overview

Digital archives have the mandate to capture and preserve information in such a way that the information can be (re-)discovered, accessed, and presented at any time in the future. An obvious challenge for archives of digital information is the limited storage lifetimes due to physical media decay. However, since hardware and software technologies evolve rapidly and much faster than the media decay, the real challenge lies in the technological obsolescence of the infrastructure that is used to access and present the archived information. Among other findings, the Task Force on Archiving Digital Information concluded that an infrastructure is needed that supports distributed systems of digital archives, and identified data migration as a crucial means for the sustained access to digital information [5].

In a collaboration with the National Archives and Records Administration (NARA), the San Diego Supercomputer Center (SDSC) developed an information management architecture and prototype for digital archives, based on scalable archival storage systems (HPSS), data handling middleware (SRB/MCAT), and XML-based mediation techniques (MIX) [9, 12, 1].^1

To achieve the goal of reinstantiating archived information on a future platform, it is not sufficient to merely copy data at the bit level from obsolete to current media; one must create “recoverable” archival representations that are infrastructure independent (or generic) to the largest extent possible. Indeed the challenge is the forward-migration in time of information and knowledge about the archived data, i.e., of the various kinds of meta-information that will allow recreation and interpretation of structure and content of archived data.

In this paper, we describe an architecture for infrastructure independent, knowledge-based archival and collection management. Our approach is knowledge-based in the sense that the ingestion process can employ both structural and semantic models of the collection, including a “flattened” relational representation, a “reassembled” semistructured representation, and a higher-level “semantic” representation. The architecture is modular since the ingestion process consists of transformations that are put together and executed
in a pipelined fashion. Another novel feature of our approach is that we allow archiving of the entire ingestion pipeline, i.e., the different representations of the collection together with the transformation rules that were used to create those representations.

The organization is as follows: In Section 2 we introduce the basic approaches for managing technology evolution, in particular via migratable formats. Section 3 presents the elements of a fully XML-based archival infrastructure. In Section 4, we show how this architecture can be extended to further include conceptual-level information and knowledge about the archived information. A unified perspective on XML-based “semantic extensions” is provided by viewing them as constraint languages. The notions of self-validating and self-instantiating archives are given precise meanings based on a formalization of the ingestion process. We report on first experiences using a real-world collection in Section 5 and conclude in Section 6.

This research has been sponsored by a NARA supplement to the National Science Foundation project ASC 96-19020.

^1 www.clearlake.ibm.com/hpss/, www.npaci.edu/DICE/SRB, and www.npaci.edu/DICE/MIX/

2 Managing Technology Evolution via Migratable Formats

As long as digital objects “live” in their original (non-archival) runtime environment, they are permanently threatened by technological obsolescence. Here, by digital object we mean a machine readable representation of some data, an image of reality, or otherwise relevant piece of information in some recognizable data format (e.g., an electronic record of a Senator’s legislative activities in the form of an RTF^2 text file, a row of data in a scientific data set^3, or a MIME-encoded email containing text and MPEG-7 encoded multimedia objects). The data format in which the digital object is encoded can contain metadata that either explicitly or implicitly (e.g., via reference to standards) describe further information about the data such as structure, semantics, context, provenance, and display properties. Metadata can be embedded via inlined markup tags and attributes or may be packaged separately. Since metadata is also data, its meaning, context, etc. could be described via meta-metadata. In practice, however, this loop is terminated by assuming the use of agreed-upon metadata standards. In Section 4.2 a more self-contained approach is presented that includes executable specifications of semantic constraints as part of archival packages.

Digital objects are handled by helper applications (e.g., word processors, simulation tools, databases, multimedia players, records management software, etc.) that interpret the objects’ metadata to determine the appropriate processing steps and display actions. Helper applications run on an underlying operating system (OS) that ultimately executes presentation and interaction instructions. As time goes by, new versions of data formats, their associated helper applications, or the underlying OS increase the risk of technological obsolescence and can cause the digital information to perish. There are several approaches to avoid such loss of accessibility to stored information:

(1) If a new version of the OS is not backward compatible, patch it in such a way that the old helper applications still run. This is impossible for proprietary operating systems, but (at least in principle) feasible for open, non-proprietary ones such as Linux.

(2) Put a wrapper around the new OS, effectively emulating (parts of) the old OS such that the unchanged helper application can still run.

(3) Migrate the helper application to a new OS; also ensure backward compatibility of new versions of the helper application.

(4) Migrate the digital objects to a new format that is understood by the new versions of helper applications and OS.

One can refine these basic approaches, e.g., by decoupling helper applications from the OS via an intermediary virtual machine VM. Then helper applications can run on different platforms simply by migrating the VM to those platforms. For example, the Java VM is available for all major platforms, so helper applications implemented on this VM can be run anywhere (and anytime) where this VM is implemented.^4

Note that (4) assumes that the target format is understood by the new helper application. By requiring the migration target in (4) to be a standard format (such as XML), this will indeed often be the case. Moreover, standards change at a lower rate than arbitrary formats, so conversion frequency and thus migration cost is minimized.

The different ways to manage technology evolution can be evaluated by comparing the tension
4 VMs are not new – just check your archives! Examples

2 Microsoft’s

Rich Text Format include the P-Code machine of UCSD Pascal and the Warren

3 www.faqs.org/faqs/sci-data-formats/ Abstract Machine (WAM), the target of Prolog compilers.induced by retaining the original presentation tech-

nology and the cost of performing conversion (Ta-

ble 1): information discovery and presentation ca-

pabilities are much more sophisticated in newer

technologies. When access and presentation of in-

formation is limited to old technologies, the more

efficient manipulation provided by new tools can-

not be used.5

Technology Tension Conversion Frequency

(1) old display format OS update

(2) old display format OS update

(3) old display format helper app. update

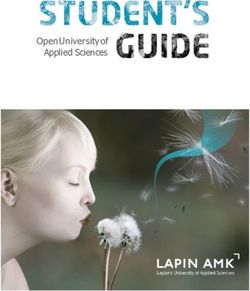

(4) new display tools new standard Figure 1. Digital archive architecture

Table 1. Tracking technology evolution

to agree on the submission or accessioning poli-

In the sequel, we focus on (4), a migration through cies (e.g., acceptable submission formats, specifi-

self-describing standards approach which aims at cations on what is to be preserved, access func-

minimizing the dependency on specific hardware, tions, and other legal requirements). Subsequently,

software (OS, helper apps.), data formats, and ref- the producer can transfer submission information

erences to external, non-persistently archived re- packages (SIPs) to the archive, where they enter –

sources (relevant contextual or meta-information in our proposed system – an ingestion network (see

that cannot be inlined should be indirectly in- Definition 3). An initial quality assurance check

cluded via references to persistently archived in- is performed on SIPs and corresponding feedback

formation). Hence infrastructure independence returned to the producer. As the SIPs are trans-

is maximized by relying on interoperability tech- formed within the ingestion network, archival in-

nologies. It is not coincidental that XML not only formation packages (AIPs) are produced and put

provides a “good archival format” (minimizing mi- into archival storage.

gration cost), but also a good data exchange and in- Migration of AIPs is a normal “refreshing” op-

teroperability framework: a persistent archive has eration of archives to prevent the obsolescence of

to support evolving platforms and formats over AIPs in the presence of changes in technology or

time. This is similar to supporting different plat- the contextual information that is necessary to in-

forms at one time. In other words, a persistent terpret the AIPs. Indeed, failure to migrate AIPs

archive can be seen as an interoperability system. in a timely manner jeopardizes the creation of dis-

semination information packages (DIPs) at a later

3 XML-Based Digital Archives time. Migration may be seen as a feedback of

the AIPs into an updated ingestion network that

produces AIPs for new technology from the pre-

In this section, we describe the basic processes and

vious AIP formats (in this sense, the initial inges-

tion can be viewed as the 0th migration round).

functions of digital archives (using concepts and

terminology from the Open Archival Information

Creation of DIPs from AIPs is sometimes called

System (OAIS) reference model [11]), and show

(re)-instantiation of the archived collection. Ob-

how an infrastructure for digital archives can be

serve that “good” AIP formats aim to be maxi-

built around XML standards and technologies.

mally self-contained, self-describing, and easy to

process in order to minimize migration and dis-

Functional Architecture. The primary goal of semination cost.

digital archives is long-term preservation of infor- The ingestion and migration transformations

mation in a form that guarantees sustained access can be of different nature and involve data refor-

and dissemination in the future (Fig. 1): Initially, matting (e.g., physical: from 6250 to 3480 tape, or

the information producer and the archive need bit-level: from EBCDIC to ASCII), and data con-

5 Restricting the presentation capabilities to the original

version (e.g.“.rtf” to “.html”). Conversions have

technology is analogous to reading a 16th century book only to be content-preserving and should also preserve

in flickering candlelight. as much structure as possible. However, some-times content is “buried” in the structure and can call this the data- or instance-level. Such object-

be lost accidentally during an apparently “content- level information is packaged into the CI. At

preserving” conversion (Section 5). For dissemi- the schema- or class-level, structural and type

nation and high-level “intelligent access”, further information is handled: this metadata describes

transformations can be applied, e.g., to derive a types of object attributes, aggregation informa-

topic map view [14] that allows the user to navi- tion (collections/subcollections), and further de-

gate data based on high-level concepts and their re- scriptive collection-level metadata (e.g., SDSC’s

lationships. Documentation about the sequence of Storage Resource Broker6 provides a state-of-

transformations that have been applied to an AIP the-art “collection-aware” archival infrastructure).

is added to the provenance metadata. Collection-level metadata can be put into the PI

Digital archives implementing the above func- and the DI. Finally, information at the conceptual-

tions and processes can be built upon the follow- level captures knowledge about the archive and in-

ing system components: (i) archival storage sys- cludes, e.g., associations between concepts and ob-

tems (e.g., HPSS), (ii) data management systems ject classes, relationships between concepts, and

(e.g., XML-generating wrappers for data inges- derived knowledge (expressed via logic rules).

tion; databases and XML processors for querying While some of this knowledge fits into the CON

and transforming collections during migration), package, we suggest to provide a distinct knowl-

and (iii) data presentation systems (e.g., based on edge package KP as part of the PDI. Possible rep-

web services with style sheets, search interfaces resentations for expressing such knowledge range

and navigational access for dissemination). from database-related formalisms like (E)ER dia-

grams, XML Schema, and UML class diagrams,

OAIS Information Packages. In order to un- to AI/KR-related ones like logic programs, se-

derstand the disseminated information, the con- mantic networks, formal ontologies, description

sumer (Fig. 1) needs some initial, internal knowl- logics, to recent web-centric variants like RDF(-

edge (e.g., of the English language). Beforehand, Schema), DAML(+OIL) taken in tow by the Se-

the presentation system must be able to interpret mantic Web boat [2]. Some of these formalisms

the AIP, i.e., it needs representation information have been standardized already or will become in

which is packed together with the actual content. the near future, hence are candidate archival for-

Intuitively, the more representation information is mats (e.g., XMI which includes UML model ex-

added to a package, the more self-contained it be- change and OMG’s Meta Object Facility MOF

comes. According to the OAIS framework [11], [15], RDF [13], the Conceptual Graph Standard

an information package IP contains packaging in- [3] and the Knowledge-Interchange Format [6]).

formation PI (e.g., the ISO-9660 directory infor- While the number of possible formalisms (and the

mation of a CD) that encapsulates the actual con- complexity of some of them) makes this a daunt-

tent information CI and additional preservation ing task for any “archival engineer”, there is hope:

description information PDI. The latter holds in- most of them are based on predicate logic or “clas-

formation about the associated CI’s provenance sic” extensions (e.g., rule-based extensions of first-

PR (origin and processing history), context CON order predicate logic have been extensively studied

(relation to information external to the IP), refer- by the logic programming and deductive databases

ence REF (for identifying the CI, say via ISBN or communities). Based on a less volatile “standard”

URI), and fixity information FIX (e.g., a checksum logic framework, one can devise generic, universal

over CI). Finally, similar to a real tag on a physi- formalisms that can express the features of other

cal object, the IP has descriptive information DI on formalisms in a more robust way using executable

the “outside” that is used to discover which IP has specifications (see below).

the CI of interest. Put together, the encapsulation

structure of an IP is as follows [11]: XML-Based Archival Infrastructure. It is de-

sirable that archival formats do not require special

IP = [DI [PI [CI PDI[ PR CON REF FIX ] ] ] ] (*) access software and be standardized, open, and as

simple as possible. Ideally, they should be self-

Information Hierarchy. Information contained contained, self-describing “time-capsules”. The

in an archive or IP can be classified as follows: specifications of proprietary formats7 like Word,

Above the bit-stream, character, and word lev- 6 www.npaci.edu/DICE/SRB

els, we can identify individual digital objects as 7 Proprietary formats like “.doc” tend to be complex, un-

tuples, records, or similar object structures. We documented, and “married” to a hardware or software envi-Wordperfect, etc. may vary from version to ver- ensure modularity of the architecture, complex

sion, may not be available at all, or – even if they XML transformations should be broken up into

are available (e.g., RTF) – may still require special- smaller ones that can be expressed directly with

ized helper applications (“viewers”) to interpret the available tools. For supporting huge data vol-

and display the information contained in a docu- umes and continuous streams of IPs, the architec-

ment. Similarly, formats that use data compression ture needs to be scalable. This can be achieved

like PDF require that the specification describes with a pipelining execution model using stream-

the compression method and that this method is based XML transformation languages (i.e., whose

executable in the future. XML, on the other hand, memory requirements do not depend on the size of

satisfies many desiderata of archival: the language the XML “sent over the wire”). As the XML IPs

is standardized [16], and easy to understand (by are being transformed in the ingestion net, prove-

humans) and parse (by programs). Document nance information PR is added. This includes the

structure and semantics can be encoded via user- usual identification of the organizational unit and

definable tags (markup), sometimes called seman- individuals who performed the migration, as well

tic tags: unlike HTML, which contains a fixed set as identification of the sequence of XML mappings

of structural and presentational tags, XML is a lan- that was applied to the IP. By storing executable

guage for defining new languages, i.e., a metalan- specifications of these mappings, self-instantiating

guage. Because of user-defined tags, and the fact archives can be built (Section 4.3).

that documents explicitly contain some schema in-

formation in the structure of their parse tree (even

if a DTD or XML Schema is not given), XML can

4 Knowledge-Based Archives

be seen as a generic, self-describing data format.

Viewed as a data model, XML corresponds to In this section, we propose to extend the purely

labeled, ordered trees, i.e., a semistructured data structural approach of plain XML to include more

model. Consequently, XML can easily express semantic information. By employing “executable”

the whole range from highly structured informa- knowledge representation formalisms, one can not

tion (records, database tables, object structures) only capture more semantics of the archived col-

to very loosely structured information (HTML, lection, but this additional information can also be

free text with some markup). In particular, the used to automatically validate archives at a higher,

structure of an information package IP as indi- conceptual level than before where it was limited

cated in (*) above can be directly represented with to low-level fixity information or simple structural

XML elements: IPs (and contained sub-IPs) are checks.

encapsulated via delimiting opening and closing Intuitively, we speak of a knowledge-based (or

tags; descriptive (meta)-information DI about a model-based) archival approach, if IPs can contain

package can be attached in XML attributes, etc. conceptual-level information in knowledge pack-

XML elements can be nested and, since order of ages (KPs). The most important reason to include

subelements is preserved, ordered and unordered KPs is that they capture meta-information that may

collection types (list, bag, set) can be easily otherwise be lost: For example, at ingestion time it

encoded, thereby directly supporting collection- may be known that digital objects of one class in-

based archives. herit certain properties from a superclass, or that

The core of our archival architecture is the in- functional or other dependencies exists between

gestion network. Some distinguished nodes (or attributes, etc.Unfortunately, more often than not,

stages) of the ingestion net produce AIPs, others such valuable information is not archived explic-

yield different “external views” (DIPs). As IPs itly.

pass from one stage to the next, they are queried During the overall archival process, KPs also

and restructured like database instances. At the provide additional opportunities and means for

syntactic level, one can maximize infrastructure quality assurance: At ingestion time, KPs can

independence by representing the databases in be used to check that SIPs indeed conform to

XML and employing standard tools for parsing, the given accessioning policies and correspond-

querying, transforming, and presenting XML. 8 To ing feedback can be given to the producer. Dur-

ing archival management, i.e., at migration or dis-

ronment. Data formats whose specifications can be “grasped

easily” (both physically and intellectually) and for which tools-

semination time, KPs can be used to verify that

support is available, are good candidate archival formats. the CI satisfies the pre-specified integrity con-

8 e.g., SAX, XPath, XQuery, XSLT, ... straints implied by the KPs. Such value-addedfunctions are traditionally not considered part of notion of constraint language provides a unifying

an archival organization’s responsibilities. On the perspective and a basis for comparing formalisms

other hand, the detection of “higher-level inconsis- like DTD, XML - SCHEMA, RELAX, RDF - SCHEMA,

tencies” clearly yields valuable meta-information wrt. their expressiveness and complexity.

for the producers and consumers of the archived

information and could become an integral service Definition 2 (Subsumption)

of future archives. We say that C 0 subsumes C wrt. A, denoted C 0 C ,

The current approach for “fixing the meaning” if for all ' 2 C there is a enc(') 2 C 0 s.t. for all

of a data exchange/archival format is to provide a 2 A: a j= ' iff a j= enc('). 2

an XML DTD (e.g., many communities and or-

ganizations define their own standard “commu- As a constraint language, DTD can express

nity language” via DTDs). However, the fact that only certain structural constraints over XML, all

a document has been validated say wrt. the En- of which have equivalent encodings in XML -

coded Archival Description DTD [4] does not im- SCHEMA . Hence XML - SCHEMA subsumes DTD .

ply that it satisfies all constraints that are part of On the other hand, XML - SCHEMA is a much more

the EAD specification. Indeed, only structural complex formalism than DTD, so a more com-

constraints can be automatically checked using plex validator is needed when reinstantiating the

a (DTD-based) validating parser – all other con- archive, thereby actually increasing the infrastruc-

straints are not checked at all or require specialized ture dependence (at least for archives where DTD

software. constraints are sufficient). To overcome this prob-

These and other shortcomings of DTDs for data lem, we propose to use a generic, universal formal-

modeling and validation have been widely recog- ism that allows one to specify and execute other

nized and have led to a flood of extensions, rang- constraint languages:

ing from the W3C-supported XML Schema pro-

posal [17],9 to more grassroots efforts like RELAX 4.2 Self-Validating Archives

(which may become a standard) [10], 10 and many

others (RDF, RDF-Schema, SOX, DSD, Schema- Example 1 (Logic DTD Validator) Consider the

tron, XML-Data, DCD, XSchema/DDML, ...). A following F - LOGIC rules [7]:

unifying perspective on these languages can be

achieved by viewing them as constraint languages %%% Rules for h!ELEMENT X (Y,Z) i

that distinguish “good documents” (those that are (1) false P : X, not (P.1) : Y.

(2) false P : X, not (P.2) : Z.

valid wrt. the constraints) from “bad” (invalid) (3) false P : X, not P[ ! ].

ones. (4) false !

P : X[N ], not N=1, not N=2.

%%% Rules for h!ELEMENT X (Y j Z) i

4.1 XML Extensions as Constraint Lan- (5) false P : X[1!A], not A : Y, not A : Z

(6) false P : X, not P[ ! ].

guages (7) false P : X[N! ], not N=1.

%%% Rule for h!ELEMENT X (Y)* i

Assume IPs are expressed in some archival lan- (8) false P : X[ !C], not C : Y.

guage A. In the sequel, let A XML. A concrete

archive instance (short: archive) is a “word” a of The rule templates show how to generate for each

the archival language A, e.g., an XML document. ' 2 DTD a logic program enc(') in F - LOGIC,

which derives false iff a given document a 2 XML

Definition 1 (Archival Constraint Languages) is not valid wrt. ': e.g., if the first child is not Y

We say that C is a constraint language for A, if (1), or if there are more than two children (4). 2

for all ' 2 C the set V' = fa 2 A j a j= 'g of

valid archives (wrt. ') is decidable. 2 The previous logical DTD specification does not

For example, if C = DTD, a constraint ' is a con-

involve recursion and can be expressed in classical

crete DTD: for any document a 2 XML, validity

first-order logic FO. However, for expressing tran-

sitive constraints (e.g., subclassing, value inheri-

of a wrt. the DTD ' is decidable (so-called “vali-

dating XML parsers” check whether a j= '). The

tance, etc.) fixpoint extensions to FO (like DATA -

LOG or F - LOGIC) are necessary.
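The three rule templates of Example 1 can be made concrete outside F-LOGIC. The following Python sketch is not the authors' validator. It is a minimal analogue that assumes documents are parsed with the standard `xml.etree.ElementTree` module and that text content and attributes can be ignored. All function names (`child_tags`, `seq_violations`, `choice_violations`, `star_violations`, `is_valid`) are hypothetical. Each check returns the elements for which the corresponding rules would derive false.

```python
import xml.etree.ElementTree as ET


def child_tags(parent):
    """Tags of the element children of `parent`, in document order."""
    return [child.tag for child in parent]


def seq_violations(doc, x, y, z):
    """Analogue of rules (1)-(4) for <!ELEMENT x (y,z)>:
    every x element must have exactly the children y, z, in that order."""
    return [p for p in doc.iter(x) if child_tags(p) != [y, z]]


def choice_violations(doc, x, y, z):
    """Analogue of rules (5)-(7) for <!ELEMENT x (y|z)>:
    every x element must have exactly one child, tagged y or z."""
    return [p for p in doc.iter(x) if child_tags(p) not in ([y], [z])]


def star_violations(doc, x, y):
    """Analogue of rule (8) for <!ELEMENT x (y)*>:
    every child of an x element must be tagged y."""
    return [p for p in doc.iter(x) if any(t != y for t in child_tags(p))]


def is_valid(doc, checks):
    """A document is valid iff no check reports a violation,
    i.e., `false` is not derivable."""
    return not any(check(doc) for check in checks)


if __name__ == "__main__":
    good = ET.fromstring("<root><X><Y/><Z/></X></root>")
    bad = ET.fromstring("<root><X><Y/><Z/><Z/></X></root>")  # three children
    checks = [lambda d: seq_violations(d, "X", "Y", "Z")]
    print(is_valid(good, checks))
    print(is_valid(bad, checks))
```

As in the logic encoding, validity is defined negatively: a document is accepted when no violation can be derived. The sketch only illustrates the rule semantics; it does not give the infrastructure independence that the declarative, archived specification is meant to provide.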

Proposition 1 (i) XML-SCHEMA $\succeq$ DTD, (ii) F-LOGIC $\succeq$ DTD. □

Note that there is a subtle but important difference between the two subsumptions: In order to “recover” the original DTD constraint via (i), one needs to understand the specific XML-SCHEMA standard, and in order to execute (i.e., check) the constraint, one needs a specific XML-SCHEMA validator. In contrast, the subsumption of (ii) as sketched above contains its own declarative, executable specification, hence is self-contained and infrastructure independent. In this case, i.e., if an AIP contains (in KP) an executable specification of the constraint $\varphi$, we speak of a self-validating archive. This means that at dissemination time we only need a single logic virtual machine (e.g., to execute PROLOG or F-LOGIC) on which we can run all logically defined constraints. The generic engine for executing “foreign constraints” does not have to be a logic one though: e.g., a RELAX validator has been written in XSLT [18]. Then, at reinstantiation time, one only needs a generic XSLT engine for checking RELAX constraints.^11

^9 DTDs + datatypes + type extensions/restrictions + ...

^10 DTDs + (datatypes, ancestor-sensitive content models, local scoping, ...) – (entities, notations)

^11 However, in the archival context, instead of employing the latest, rapidly changing formalisms, a “timeless” logical approach may be preferable.

4.3 Self-Instantiating Archives

A self-validating archive captures one or more snapshots of the archived collection at certain stages during the ingestion process, together with constraints $\varphi$ for each snapshot. The notion of self-instantiating archive goes a step further and aims at archiving also the transformations of the ingestion network themselves. Thus, instead of adding only descriptive metadata about a transformation which is external to the archive, we include the “transformation knowledge” thereby internalizing complete parts of the ingestion process.

As before, we can maximize infrastructure independence by employing a universal formalism whose specifications can be executed on a virtual (logic or XML-based) engine – ideally the same one as used for checking constraints. To do so, we model an ingestion network as a graph of database transformations. This is a natural assumption for most real transformations (apart from very low level reformatting and conversion steps).

Definition 3 (Ingestion Network) Let $T$ be a set of transformations $t : A \to A$, and $S$ a set of stages. An ingestion network $IN$ is a finite set of labeled edges $s \xrightarrow{t} s'$, having associated preconditions $\varphi(s)$ and postconditions $\varphi(s')$, for $s, s' \in S$; $t \in T$; $\varphi(s), \varphi(s') \in C$. □

We call the edges of $IN$ pipes and say that an archive $a \in A$ is acceptable for (“may pass through”) the pipe $s \xrightarrow{t} s'$, if $a \models \varphi(s)$ and $t(a) \models \varphi(s')$. Since $IN$ can have loops, fixpoint or closure operations can be handled. If there are multiple $t$-edges $s \xrightarrow{t} s'_i$ outgoing from $s$, then one $s'_0$ is distinguished to identify the main pipe $s \xrightarrow{t} s'_0$; the remaining $s \xrightarrow{t} s'_i$ are called contingency pipes. The idea is that the postcondition $\varphi(s'_0)$ captures the normal, desired case for applying $t$ at $s$, whereas the other $\varphi(s'_i)$ handle exceptions and errors. In particular, for $\varphi(s'_1) = \neg\varphi(s'_0)$ we catch all archives that fail the main pipe at $s$, so $s'_1$ can be used to abort the ingestion and report the integrity violation $\neg\varphi(s'_0)$. Alternatively, $s'_1$ may have further outgoing contingency pipes aimed at rectifying the problem.

When an archive $a$ successfully passes through the ingestion net, one or more of the transformed versions $a'$ are archived. One benefit of archiving the transformations of the pipeline ($\mathrm{SIP} \xrightarrow{t_1} \cdots \xrightarrow{t_n} \mathrm{AIP}$) in an infrastructure independent way is that knowledge that was available at ingestion time, and is possibly hidden within the transformation, is preserved. Moreover, some of the transformations yield user-views ($\mathrm{AIP} \xrightarrow{t_1} \cdots \xrightarrow{t_m} \mathrm{DIP}$), e.g., topic maps or HTML pages. By archiving self-contained, executable specifications of these mappings, the archival reinstantiation process can be automated to a large extent using infrastructure independent representations.

Properties of Transformations. By modeling the ingestion net as a graph of database mappings, we can formally study properties of the ingestion process, e.g., the data complexity of a transformation. Based on the time complexity and resource costs of transformations, we can decide whether a collection should be recreated on demand via the ingestion net, or whether it is preferable to materialize and later retrieve snapshots of the collection. This choice is common in computer science even outside databases, e.g., for scientific computations in virtual data grids, applications can choose to retrieve previously stored results from disk or recompute the data product, possibly using stored partial results [8]. Note that invertible transformations of an ingestion net are content preserving. For transformations $t$ that are not specific to a collection, it can be worthwhile to derive and implement the inverse mapping $t^{-1}$ thereby guaranteeing that $t$ is content preserving.

Example 2 (Inverse Wrapper) Consider a document collection $\{a_1, a_2, \ldots\} \subseteq \mathrm{XHTML}$ for which a common wrapper $t$ has been provided s.t. $t(a_i) = a'_i \in \mathrm{XML}$. The exact inverse mapping may be impracticable to construct, but a “reasonably equivalent” $t^{-1}$ (i.e., modulo irrelevant formatting details) may be easy to define as an XSLT stylesheet. Thus, the output of the pipe $a_i \xrightarrow{t} a'_i \xrightarrow{t^{-1}} a''_i \in \mathrm{XHTML}$ can be seen as a normalized (X)HTML version of the input $a_i$. By restricting to normalized input, $t$ becomes invertible, and the XSLT script acts as an “inverse wrapper” for presenting the collection. □

as Sections I and II, but grouped by committee referral (e.g., “Senate Armed Services” and “House Judiciary”), and that Section VII contains a list of subjects with references to corresponding BAR identifiers: “Zoning and zoning law → S.9, S.Con.Res.10, S.Res.41, S.J.Res.39”. Measures are bills and resolutions; the latter have three subtypes: simple, joint, and concurrent.

Finally, CM0 identified 14 initial data fields DF0 (=attributes) that needed to be extracted.^12

Ingestion Process. Figure 2 depicts the ingestion network as it eventually evolved: The (pre-

sumed) conversion from (MS Word) DOC to RTF

5 Case Study: The Senate Collection happened outside of the ingestion net, since the ac-

cessioning policy prescribed SIPs in RTF format.

S1 ! S2 :13 A first, supposedly content

In a research collaboration with the National preserving, conversion to HTML using MS Word

Archives and Records Administration (NARA), turned out to be lossy when checked against CM 0 :

SDSC developed an information management ar- the groupings in Sections III and IV were no longer

chitecture and prototype for digital archives. Be- part of the HTML files,14 so it was impossible to

low, we illustrate some of the aspects of our associate a measure with a committee!

archival architecture, using the Senate Legislative S1 ! S3 : the conversion from RTF to an in-

Activities collection (SLA), one of the reference formation preserving XML representation was ac-

collections that NARA provided for research pur- complished using an rtf2xml module 15 for Omni-

poses. Mark, a stream-oriented rule-based data extraction

and programming language.

Collection Submission and Initial Model. The

S3 ! S4 : this main wrapping step was

used to extract data according to the initial data

SLA collection contains an extract of the 106th

fields DF0 . In order to simplify the (Perl) wrap-

Congress database bills, amendments, and reso-

per module and make it more generic, we used a

lutions (short: BARs). SLA was physically sub-

flat, occurrence-based representation for data ex-

mitted on CD-ROM as 99 files in Microsoft’s Rich

traction: each data field (attribute) was recorded in

Text Format (RTF), one per active senator, and or-

OAV form, i.e.,

ganized to reflect a particular senator’s legislative

contribution over the course of the 106th Congress.

(occurrence, attribute, value)

The occurrence has to be fine-grained enough for

Based on a visual inspection of the files, an initial

the transformation to be information preserving (in

conceptual model CM0 with the following struc-

our case occurrence = (filename, line-number)).

ture was assumed:

The scope of an occurrence is that part of the lin-

Header section: includes the senator name (e.g.,

“Paul S. Sarbanes”), state (“Maryland”), reporting

earized document which defines the extent of the

period (“January 06, 1999 to March 31, 2000”), and occurrence. For example, in case of an occur-

reporting entity (“Senate Computer Center Office of rence based on line numbers, the scope is from

the Sergeant at Arms and Committee on Rules and the first character of the line to the last charac-

Administration”) ter of the line. In case of XML, the scope of

Section I: Sponsored Measures, Section II: an occurrence may often be associated with el-

Cosponsored Measures, Section III: Sponsored Mea- ement boundaries (but finer occurrence granules

sures Grouped by Committee Referral, Section IV: 12 abstract, bar id, committee, congressional record,

Cosponsored Measures Organized by Committee Refer-

cosponsors, date introduced, digest, latest status, official title,

ral, Section V: Sponsored Amendments, Section VI: sponsor, statement of purpose, status actions, submitted by,

Cosponsored Amendments, submitted for

Section VII: Subject Index to Sponsored and 13 this dead end is only an example for existing pitfalls;

Cosponsored Measures and Amendments. !

S1 S2 is not archived.

14 this crucial information was part of the RTF page header

CM0 also modeled the fact that Sections III but left no trace whatsoever in the HTML

and IV contain the same bills and amendments 15 from Rick Geimer at xmeta.comcould have been derived from S 5 .) This step can

be seen as a reverse-engineering of the original

database content, of which SLA is only a view

(group BARs by senator, for each senator group

by measures, committee, etc.)

As part of the consolidation transformation,

it is natural to perform conceptual-level integrity

checks: e.g., at this level it is easy to define a

constraint ' that checks for completeness of the

collection (i.e., if each senator occurring some-

where in the collection also has a corresponding

senator file – a simple declarative query reveals

the answer: no!). Note that a consolidated ver-

Figure 2. Ingestion Network: Senate Collection sion provides an additional archival service; but it

is mandatory to also preserve a non-consolidated

“raw version” (e.g., as derived from the OAV

may be defined for XML as well). By employ- model).

ing the “deconstructing” OAV model, the wrapper S4 ; S6 ! S7 : these transformations create

program could be designed simpler, more modu- a topic map version and thus provide additional

lar and thus easier to reuse: e.g., date introduced conceptual-level “hooks” into the consolidated and

could show up in the file of Senator Paul Sarbanes OAV version.

(senator id=106) at line 25 with value 01/19/1999

and also in line 106 at line 55 with value

03/15/2000. This information is recorded with two 6 Conclusions

tuples: ((106,25), ’date introduced’, ’01/19/1999’)

and ((106,55), ’date introduced’, ’03/15/2000’). We have presented a framework for the preser-

S4 ! S4 : some candidate attributes from vation of digital data, based on a forward migra-

DF0 had to be decomposed further, which is mod- tion approach using XML as the common archival

eled by a recursive closure step S4 ! S4 , corre- format and generic XML tools (XML wrappers,

sponding to a sequence DF1 , : : :, DFn of refine- query and transformation engines). The ap-

ments of the data-fields, e.g., DF1 : list of sponsors proach has been extended towards self-validating

! [sponsor], and DF2 : sponsor! (name, date). knowledge-based archives: self-validating means

S4 ! S5 : this “reconstructing” step builds that declarative constraints about the collection

the desired archival information packages AIP in are included in executable form (as logic rules).

XML. Content and structure of the original SIPs Most parts of the ingestion network (apart from

is preserved by reassembling larger objects from S7 which is under development) have been im-

subobjects using their occurrence values. From the plemented for a concrete collection. Note that

created XML AIPs, DTDs like the following can all transformations following the OAV format can

be inferred (and included as a constraint ' in KP): be very naturally expressed in a high-level object-

oriented logic language (e.g., F - LOGIC). By in-

References

... [1] C. Baru, V. Chu, A. Gupta, B. Ludäscher, R. Mar-

ciano, Y. Papakonstantinou, and P. Velikhov.

S4 ! S6 : this conceptual-level transforma- XML-Based Information Mediation for Digital Li-

tion creates a consolidated version from the col- braries. In ACM Conf. on Digital Libraries (DL),

lection. For example, SLA contains 44,145 oc- pages 214–215, Berkeley, CA, 1999.

currences of BARs, however there are only 5,632 [2] T. Berners-Lee, J. Hendler, and O. Lassila. The

distinct BAR objects. (Alternatively, this version Semantic Web. Scientific American, May 2001.[3] Conceptual Graph Standard. dpANS,

NCITS.T2/98-003, http://www.bestweb.net/~sowa/cg/cgdpans.htm, Aug. 1999.
[4] Encoded Archival Description (EAD). http://lcweb.loc.gov/ead/, June 1998.
[5] J. Garrett and D. Waters, editors. Preserving Digital Information – Report of the Task Force on Archiving of Digital Information, May 1996. http://www.rlg.org/ArchTF/.
[6] Knowledge Interchange Format (KIF). dpANS, NCITS.T2/98-004, http://logic.stanford.edu/kif/, 1999.
[7] M. Kifer, G. Lausen, and J. Wu. Logical Foundations of Object-Oriented and Frame-Based Languages. Journal of the ACM, 42(4):741–843, July 1995.
[8] R. Moore. Knowledge-Based Grids. In 18th IEEE Symp. on Mass Storage Systems, San Diego, 2001.
[9] R. Moore, C. Baru, A. Rajasekar, B. Ludäscher, R. Marciano, M. Wan, W. Schroeder, and A. Gupta. Collection-Based Persistent Digital Archives. D-Lib Magazine, 6(3,4), 2000.
[10] M. Murata. RELAX (REgular LAnguage description for XML). http://www.xml.gr.jp/relax/, Oct. 2000.
[11] Reference Model for an Open Archival Information System (OAIS). submitted as ISO draft, http://www.ccsds.org/documents/pdf/CCSDS-650.0-R-1.pdf, 1999.
[12] A. Paepcke, R. Brandriff, G. Janee, R. Larson, B. Ludäscher, S. Melnik, and S. Raghavan. Search Middleware and the Simple Digital Library Interoperability Protocol. D-Lib Magazine, 6(3), 2000.
[13] Resource Description Framework (RDF). W3C Recommendation www.w3.org/TR/REC-rdf-syntax, Feb. 1999.
[14] ISO/IEC FCD 13250 – Topic Maps, 1999. http://www.ornl.gov/sgml/sc34/document/0058.htm.
[15] OMG XML Metadata Interchange (XMI). www.omg.org/cgi-bin/doc?ad/99-10-02, 1999.
[16] Extensible Markup Language (XML). www.w3.org/XML/, 1998.
[17] XML Schema, Working Draft. www.w3.org/TR/xmlschema-{1,2}, Sept. 2000.
[18] K. Yonekura. RELAX Verifier for XSLT. http://www.geocities.co.jp/SiliconValley-Bay/4639/, Oct. 2000.
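
As a supplementary illustration of the occurrence-based OAV representation used in the wrapping step of the case study (S3 to S4), the reassembly by occurrence values (S4 to S5), and the counting of distinct BARs during consolidation (S4 to S6), the following is a minimal Python sketch. It is not the Perl wrapper used for the SLA collection. The `attribute: value` line format, the sample records, and the function names `wrap`, `reconstruct`, and `consolidate` are assumptions made purely for illustration; the real input files are RTF and far richer.

```python
from collections import defaultdict

# Hypothetical sample input: file id -> lines of "attribute: value" text.
# The real SLA files are RTF; this line format is an assumption.
FILES = {
    106: ["bar id: S.9", "date introduced: 01/19/1999", "bar id: S.Res.41"],
    107: ["bar id: S.9", "date introduced: 01/19/1999"],
}


def wrap(files):
    """Deconstruct files into (occurrence, attribute, value) tuples,
    with occurrence = (file id, line number), line numbers starting at 1."""
    oav = []
    for file_id, lines in files.items():
        for line_no, line in enumerate(lines, start=1):
            attribute, value = line.split(": ", 1)
            oav.append(((file_id, line_no), attribute, value))
    return oav


def reconstruct(oav):
    """Reassemble the original lines from OAV tuples, ordered by occurrence."""
    files = defaultdict(list)
    for (file_id, _line_no), attribute, value in sorted(oav):
        files[file_id].append(f"{attribute}: {value}")
    return dict(files)


def consolidate(oav, key_attribute="bar id"):
    """Return the distinct values of one attribute across all occurrences."""
    return {value for _occ, attribute, value in oav if attribute == key_attribute}


oav = wrap(FILES)
# Round trip: if reconstruction yields the input, the wrapping lost nothing.
assert reconstruct(oav) == FILES
occurrences = [t for t in oav if t[1] == "bar id"]
print(len(occurrences), "occurrences,", len(consolidate(oav)), "distinct")
```

On this toy input the sketch prints `3 occurrences, 2 distinct`, mirroring on a small scale the gap between occurrences of BARs and distinct BAR objects reported for SLA. The round-trip assertion is one simple way to check that the chosen occurrence granularity is fine enough for the transformation to be information preserving.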