Paraphrase to Explicate: Revealing Implicit Noun-Compound Relations


Vered Shwartz Ido Dagan

Computer Science Department, Bar-Ilan University, Ramat-Gan, Israel

vered1986@gmail.com dagan@cs.biu.ac.il

Abstract Methods dedicated to paraphrasing noun-

compounds usually rely on corpus co-occurrences

of the compound’s constituents as a source of ex-

arXiv:1805.02442v1 [cs.CL] 7 May 2018

Revealing the implicit semantic rela- plicit relation paraphrases (e.g. Wubben, 2010;

tion between the constituents of a noun- Versley, 2013). Such methods are unable to gen-

compound is important for many NLP ap- eralize for unseen noun-compounds. Yet, most

plications. It has been addressed in the noun-compounds are very infrequent in text (Kim

literature either as a classification task to and Baldwin, 2007), and humans easily interpret

a set of pre-defined relations or by pro- the meaning of a new noun-compound by general-

ducing free text paraphrases explicating izing existing knowledge. For example, consider

the relations. Most existing paraphras- interpreting parsley cake as a cake made of pars-

ing methods lack the ability to generalize, ley vs. resignation cake as a cake eaten to cele-

and have a hard time interpreting infre- brate quitting an unpleasant job.

quent or new noun-compounds. We pro- We follow the paraphrasing approach and pro-

pose a neural model that generalizes better pose a semi-supervised model for paraphras-

by representing paraphrases in a contin- ing noun-compounds. Differently from previ-

uous space, generalizing for both unseen ous methods, we train the model to predict ei-

noun-compounds and rare paraphrases. ther a paraphrase expressing the semantic rela-

Our model helps improving performance tion of a noun-compound (predicting ‘[w2 ] made

on both the noun-compound paraphrasing of [w1 ]’ given ‘apple cake’), or a missing con-

and classification tasks. stituent given a combination of paraphrase and

noun-compound (predicting ‘apple’ given ‘cake

made of [w1 ]’). Constituents and paraphrase tem-

1 Introduction plates are represented as continuous vectors, and

semantically-similar paraphrase templates are em-

Noun-compounds hold an implicit semantic rela- bedded in proximity, enabling better generaliza-

tion between their constituents. For example, a tion. Interpreting ‘parsley cake’ effectively re-

‘birthday cake’ is a cake eaten on a birthday, while duces to identifying paraphrase templates whose

‘apple cake’ is a cake made of apples. Interpreting “selectional preferences” (Pantel et al., 2007) on

noun-compounds by explicating the relationship is each constituent fit ‘parsley’ and ‘cake’.

beneficial for many natural language understand- A qualitative analysis of the model shows that

ing tasks, especially given the prevalence of noun- the top ranked paraphrases retrieved for each

compounds in English (Nakov, 2013). noun-compound are plausible even when the con-

The interpretation of noun-compounds has been stituents never co-occur (Section 4). We evalu-

addressed in the literature either by classifying ate our model on both the paraphrasing and the

them to a fixed inventory of ontological relation- classification tasks (Section 5). On both tasks,

ships (e.g. Nastase and Szpakowicz, 2003) or by the model’s ability to generalize leads to improved

generating various free text paraphrases that de- performance in challenging evaluation settings.1

scribe the relation in a more expressive manner

1

(e.g. Hendrickx et al., 2013). The code is available at github.com/vered1986/panic2 Background from a corpus to generate a list of candidate para-

phrases. Unsupervised methods apply information

2.1 Noun-compound Classification

extraction techniques to find and rank the most

Noun-compound classification is the task con- meaningful paraphrases (Kim and Nakov, 2011;

cerned with automatically determining the seman- Xavier and Lima, 2014; Pasca, 2015; Pavlick

tic relation that holds between the constituents of and Pasca, 2017), while supervised approaches

a noun-compound, taken from a set of pre-defined learn to rank paraphrases using various features

relations. such as co-occurrence counts (Wubben, 2010; Li

Early work on the task leveraged information et al., 2010; Surtani et al., 2013; Versley, 2013)

derived from lexical resources and corpora (e.g. or the distributional representations of the noun-

Girju, 2007; Ó Séaghdha and Copestake, 2009; compounds (Van de Cruys et al., 2013).

Tratz and Hovy, 2010). More recent work broke One of the challenges of this approach is the

the task into two steps: in the first step, a noun- ability to generalize. If one assumes that suffi-

compound representation is learned from the dis- cient paraphrases for all noun-compounds appear

tributional representation of the constituent words in the corpus, the problem reduces to ranking the

(e.g. Mitchell and Lapata, 2010; Zanzotto et al., existing paraphrases. It is more likely, however,

2010; Socher et al., 2012). In the second step, the that some noun-compounds do not have any para-

noun-compound representations are used as fea- phrases in the corpus or have just a few. The ap-

ture vectors for classification (e.g. Dima and Hin- proach of Van de Cruys et al. (2013) somewhat

richs, 2015; Dima, 2016). generalizes for unseen noun-compounds. They

The datasets for this task differ in size, num- represented each noun-compound using a compo-

ber of relations and granularity level (e.g. Nastase sitional distributional vector (Mitchell and Lap-

and Szpakowicz, 2003; Kim and Baldwin, 2007; ata, 2010) and used it to predict paraphrases from

Tratz and Hovy, 2010). The decision on the re- the corpus. Similar noun-compounds are expected

lation inventory is somewhat arbitrary, and sub- to have similar distributional representations and

sequently, the inter-annotator agreement is rela- therefore yield the same paraphrases. For exam-

tively low (Kim and Baldwin, 2007). Specifi- ple, if the corpus does not contain paraphrases for

cally, a noun-compound may fit into more than plastic spoon, the model may predict the para-

one relation: for instance, in Tratz (2011), busi- phrases of a similar compound such as steel knife.

ness zone is labeled as CONTAINED (zone con- In terms of sharing information between

tains business), although it could also be labeled semantically-similar paraphrases, Nulty and

as PURPOSE (zone whose purpose is business). Costello (2010) and Surtani et al. (2013) learned

2.2 Noun-compound Paraphrasing “is-a” relations between paraphrases from the

co-occurrences of various paraphrases with each

As an alternative to the strict classification to pre- other. For example, the specific ‘[w2 ] extracted

defined relation classes, Nakov and Hearst (2006) from [w1 ]’ template (e.g. in the context of olive

suggested that the semantics of a noun-compound oil) generalizes to ‘[w2 ] made from [w1 ]’. One of

could be expressed with multiple prepositional the drawbacks of these systems is that they favor

and verbal paraphrases. For example, apple cake more frequent paraphrases, which may co-occur

is a cake from, made of, or which contains apples. with a wide variety of more specific paraphrases.

The suggestion was embraced and resulted

in two SemEval tasks. SemEval 2010 task 9

2.3 Noun-compounds in other Tasks

(Butnariu et al., 2009) provided a list of plau-

sible human-written paraphrases for each noun- Noun-compound paraphrasing may be considered

compound, and systems had to rank them with the as a subtask of the general paraphrasing task,

goal of high correlation with human judgments. whose goal is to generate, given a text fragment,

In SemEval 2013 task 4 (Hendrickx et al., 2013), additional texts with the same meaning. How-

systems were expected to provide a ranked list of ever, general paraphrasing methods do not guar-

paraphrases extracted from free text. antee to explicate implicit information conveyed

Various approaches were proposed for this task. in the original text. Moreover, the most notable

Most approaches start with a pre-processing step source for extracting paraphrases is multiple trans-

of extracting joint occurrences of the constituents lations of the same text (Barzilay and McKeown,wˆ1i = 28 p̂i = 78

M LPw M LPp

cake made of [w1 ] cake [p] apple

(23) made (1) [w1 ] (23) made

(28) apple (2) [w2 ] (28) apple (78) [w2 ] containing [w1 ]

(4145) cake (3) [p] (4145) cake (1) [w1 ] ...

... ... (2) [w2 ] (131) [w2 ] made of [w1 ]

(7891) of (7891) of (3) [p] ...

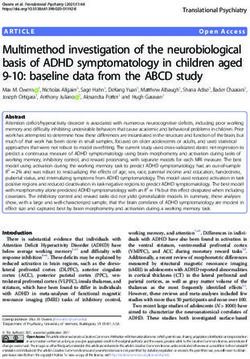

Figure 1: An illustration of the model predictions for w1 and p given the triplet (cake, made of, apple).

The model predicts each component given the encoding of the other two components, successfully pre-

dicting ‘apple’ given ‘cake made of [w1 ]’, while predicting ‘[w2 ] containing [w1 ]’ for ‘cake [p] apple’.

2001; Ganitkevitch et al., 2013; Mallinson et al., 2017). If a certain concept can be described by an English noun-compound, it is unlikely that a translator chose to translate its foreign language equivalent to an explicit paraphrase instead.

Another related task is Open Information Extraction (Etzioni et al., 2008), whose goal is to extract relational tuples from text. Most systems focus on extracting verb-mediated relations, and the few exceptions that addressed noun-compounds provided partial solutions. Pal and Mausam (2016) focused on segmenting multi-word noun-compounds and assumed an is-a relation between the parts, for example extracting (Francis Collins, is, NIH director) from “NIH director Francis Collins”. Xavier and Lima (2014) enriched the corpus with compound definitions from online dictionaries, for example, interpreting oil industry as (industry, produces and delivers, oil) based on the WordNet definition “industry that produces and delivers oil”. This method is very limited as it can only interpret noun-compounds with dictionary entries, while the majority of English noun-compounds don’t have them (Nakov, 2013).

3 Paraphrasing Model

As opposed to previous approaches, which focus on predicting a paraphrase template for a given noun-compound, we reformulate the task as a multi-task learning problem (Section 3.1), and train the model to also predict a missing constituent given the paraphrase template and the other constituent. Our model is semi-supervised, and it expects as input a set of noun-compounds and a set of constrained part-of-speech tag-based templates that make valid prepositional and verbal paraphrases. Section 3.2 details the creation of training data, and Section 3.3 describes the model.

3.1 Multi-task Reformulation

Each training example consists of two constituents and a paraphrase (w2, p, w1), and we train the model on 3 subtasks: (1) predict p given w1 and w2, (2) predict w1 given p and w2, and (3) predict w2 given p and w1. Figure 1 demonstrates the predictions for subtasks (1) (right) and (2) (left) for the training example (cake, made of, apple). Effectively, the model is trained to answer questions such as “what can cake be made of?”, “what can be made of apple?”, and “what are the possible relationships between cake and apple?”.

The multi-task reformulation helps learn better representations for paraphrase templates, by embedding semantically-similar paraphrases in proximity. Similarity between paraphrases stems either from lexical similarity and overlap between the paraphrases (e.g. ‘is made of’ and ‘made of’), or from shared constituents, e.g. ‘[w2] involved in [w1]’ and ‘[w2] in [w1] industry’ can share [w1] = insurance and [w2] = company. This allows the model to predict a correct paraphrase for a given noun-compound, even when the constituents do not occur with that paraphrase in the corpus.

3.2 Training Data

We collect a training set of (w2, p, w1, s) examples, where w1 and w2 are constituents of a noun-compound w1 w2, p is a templated paraphrase, and s is the score assigned to the training instance.[2]

[2] We refer to “paraphrases” and “paraphrase templates” interchangeably. In the extracted templates, [w2] always precedes [w1], probably because w2 is normally the head noun.

We use the 19,491 noun-compounds found in the SemEval tasks datasets (Butnariu et al., 2009; Hendrickx et al., 2013) and in Tratz (2011). To extract patterns of part-of-speech tags that can form noun-compound paraphrases, such as ‘[w2] VERB PREP [w1]’, we use the SemEval task training data, but we do not use the lexical information in the gold paraphrases.

Corpus. Similarly to previous noun-compound paraphrasing approaches, we use the Google N-gram corpus (Brants and Franz, 2006) as a source of paraphrases (Wubben, 2010; Li et al., 2010; Surtani et al., 2013; Versley, 2013). The corpus consists of sequences of n terms (for n ∈ {3, 4, 5}) that occur more than 40 times on the web. We search for n-grams following the extracted patterns and containing w1 and w2’s lemmas for some noun-compound in the set. We remove punctuation, adjectives, adverbs and some determiners to unite similar paraphrases. For example, from the 5-gram ‘cake made of sweet apples’ we extract the training example (cake, made of, apple). We keep only paraphrases that occurred at least 5 times, resulting in 136,609 instances.

Weighting. Each n-gram in the corpus is accompanied by its frequency, which we use to assign scores to the different paraphrases. For instance, ‘cake of apples’ may also appear in the corpus, although with lower frequency than ‘cake from apples’. As also noted by Surtani et al. (2013), the shortcoming of such a weighting mechanism is that it prefers shorter paraphrases, which are much more common in the corpus (e.g. count(‘cake made of apples’) ≪ count(‘cake of apples’)). We overcome this by normalizing the frequencies for each paraphrase length, creating a distribution of paraphrases in a given length.

Negative Samples. We add 1% of negative samples by selecting random corpus words w1 and w2 that do not co-occur, and adding an example (w2, [w2] is unrelated to [w1], w1, s_n), for some predefined negative samples score s_n. Similarly, for a word w_i that did not occur in a paraphrase p we add (w_i, p, UNK, s_n) or (UNK, p, w_i, s_n), where UNK is the unknown word. This may help the model deal with non-compositional noun-compounds, where w1 and w2 are unrelated, rather than forcibly predicting some relation between them.

3.3 Model

For a training instance (w2, p, w1, s), we predict each item given the encoding of the other two.

Encoding. We use the 100-dimensional pre-trained GloVe embeddings (Pennington et al., 2014), which are fixed during training. In addition, we learn embeddings for the special words [w1], [w2], and [p], which are used to represent a missing component, as in “cake made of [w1]”, “[w2] made of apple”, and “cake [p] apple”.

For a missing component $x \in \{[p], [w_1], [w_2]\}$ surrounded by the sequences of words $v_{1:i-1}$ and $v_{i+1:n}$, we encode the sequence using a bidirectional long-short term memory (bi-LSTM) network (Graves and Schmidhuber, 2005), and take the $i$th output vector as representing the missing component: $\mathrm{bLS}(v_{1:i-1}, x, v_{i+1:n})_i$.

In bi-LSTMs, each output vector is a concatenation of the outputs of the forward and backward LSTMs, so the output vector is expected to contain information on valid substitutions both with respect to the previous words $v_{1:i-1}$ and the subsequent words $v_{i+1:n}$.

Prediction. We predict a distribution over the vocabulary of the missing component, i.e. to predict w1 correctly we need to predict its index in the word vocabulary $V_w$, while the prediction of p is from the vocabulary of paraphrases in the training set, $V_p$. We predict the following distributions:

$$
\begin{aligned}
\hat{p} &= \mathrm{softmax}(W_p \cdot \mathrm{bLS}(\vec{w_2}, [p], \vec{w_1})_2) \\
\hat{w_1} &= \mathrm{softmax}(W_w \cdot \mathrm{bLS}(\vec{w_2}, \vec{p}_{1:n}, [w_1])_{n+1}) \\
\hat{w_2} &= \mathrm{softmax}(W_w \cdot \mathrm{bLS}([w_2], \vec{p}_{1:n}, \vec{w_1})_1)
\end{aligned}
\tag{1}
$$

where $W_w \in \mathbb{R}^{|V_w| \times 2d}$, $W_p \in \mathbb{R}^{|V_p| \times 2d}$, and $d$ is the embeddings dimension.

During training, we compute cross-entropy loss for each subtask using the gold item and the prediction, sum up the losses, and weight them by the instance score. During inference, we predict the missing components by picking the best scoring index in each distribution:[3]

$$
\begin{aligned}
\hat{p}_i &= \mathrm{argmax}(\hat{p}) \\
\hat{w_1}_i &= \mathrm{argmax}(\hat{w_1}) \\
\hat{w_2}_i &= \mathrm{argmax}(\hat{w_2})
\end{aligned}
\tag{2}
$$

The subtasks share the pre-trained word embeddings, the special embeddings, and the biLSTM parameters. Subtasks (2) and (3) also share $W_w$, the MLP that predicts the index of a word.

[3] In practice, we pick the k best scoring indices in each distribution for some predefined k, as we discuss in Section 5.

| [w1] | [w2] | Predicted Paraphrases | [w2] | Paraphrase | Predicted [w1] | Paraphrase | [w1] | Predicted [w2] |
|---|---|---|---|---|---|---|---|---|
| cataract | surgery | [w2] of [w1] | surgery | [w2] to treat [w1] | heart | [w2] to treat [w1] | cataract | surgery |
| | | [w2] on [w1] | | | brain | | | drug |
| | | [w2] to remove [w1] | | | back | | | patient |
| | | [w2] in patients with [w1] | | | knee | | | transplant |
| software | company | [w2] of [w1] | company | [w2] engaged in [w1] | management | [w2] engaged in [w1] | software | company |
| | | [w2] to develop [w1] | | | production | | | firm |
| | | [w2] in [w1] industry | | | computer | | | engineer |
| | | [w2] involved in [w1] | | | business | | | industry |
| stone | wall | [w2] is of [w1] | meeting | [w2] held in [w1] | spring | [w2] held in [w1] | morning | party |
| | | [w2] of [w1] | | | afternoon | | | meeting |
| | | [w2] is made of [w1] | | | hour | | | rally |
| | | [w2] made of [w1] | | | day | | | session |

Table 1: Examples of top ranked predicted components using the model: predicting the paraphrase given w1 and w2 (left), w1 given w2 and the paraphrase (middle), and w2 given w1 and the paraphrase (right).
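The prediction and training objective of Section 3.3 (Equations 1 and 2) can be sketched with plain Python. This is a minimal illustration under stated assumptions, not the authors' DyNet implementation: the raw score lists stand in for the products $W \cdot \mathrm{bLS}(\cdot)$, and the function names `softmax`, `top_k` and `weighted_loss`, the toy scores, and the instance score 0.5 are all hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into a probability distribution (numerically stable)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def top_k(dist, k):
    """Indices of the k most probable entries; k = 1 is the argmax of Equation 2."""
    return sorted(range(len(dist)), key=lambda i: dist[i], reverse=True)[:k]

def weighted_loss(dists, gold, score):
    """Sum the cross-entropy losses of the subtasks and weight by the instance score."""
    return score * sum(-math.log(dists[task][gold[task]]) for task in dists)

# Hypothetical raw scores standing in for W_p . bLS(...) and W_w . bLS(...).
dists = {
    "p": softmax([0.1, 2.0, 1.0]),        # distribution over a 3-paraphrase vocabulary
    "w1": softmax([3.0, 0.5]),            # distribution over a 2-word vocabulary
    "w2": softmax([0.2, 0.2, 1.5, 0.1]),  # distribution over a 4-word vocabulary
}
gold = {"p": 1, "w1": 0, "w2": 2}         # hypothetical gold indices

loss = weighted_loss(dists, gold, score=0.5)
predictions = {task: top_k(dist, 1)[0] for task, dist in dists.items()}
```

In practice the paper keeps the k best indices rather than a single argmax (footnote 3), which corresponds to calling `top_k` with a larger k.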

[Figure 2 image: a scatter plot whose points are labeled with paraphrase templates, for example ‘[w2] composed of [w1]’, ‘[w2] is made of [w1]’, ‘[w2] provided by [w1]’, ‘[w2] to produce [w1]’, ‘[w2] issued by [w1]’ and ‘[w2] held by [w1]’. Many labels overlap in the original plot.]

Figure 2: A t-SNE map of a sample of paraphrases, using the paraphrase vectors encoded by the biLSTM,

for example bLS([w2 ] made of [w1 ]).
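Proximity between paraphrase vectors, which Figure 2 shows visually, can also be probed numerically with cosine similarity. The sketch below is only an illustration: the three-dimensional vectors are made up for the example and are not taken from the trained model, and the helper names `cosine` and `nearest` are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Made-up toy vectors; real paraphrase vectors would come from the biLSTM encoder.
toy_vectors = {
    "[w2] made of [w1]": [0.9, 0.1, 0.0],
    "[w2] composed of [w1]": [0.8, 0.2, 0.1],
    "[w2] held by [w1]": [0.0, 0.9, 0.4],
}

def nearest(template, vectors):
    """Return the other template whose vector is most similar to the given one."""
    others = (t for t in vectors if t != template)
    return max(others, key=lambda t: cosine(vectors[template], vectors[t]))

closest = nearest("[w2] made of [w1]", toy_vectors)
```

With these toy values, the template closest to ‘[w2] made of [w1]’ is ‘[w2] composed of [w1]’, mirroring the kind of neighborhood the figure depicts.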

Implementation Details. The model is implemented in DyNet (Neubig et al., 2017). We dedicate a small number of noun-compounds from the corpus for validation. We train for up to 10 epochs, stopping early if the validation loss has not improved in 3 epochs. We use Momentum SGD (Nesterov, 1983), and set the batch size to 10 and the other hyper-parameters to their default values.

4 Qualitative Analysis

To estimate the quality of the proposed model, we first provide a qualitative analysis of the model outputs. Table 1 displays examples of the model outputs for each possible usage: predicting the paraphrase given the constituent words, and predicting each constituent word given the paraphrase and the other word.

The examples in the table are from among the top 10 ranked predictions for each component-pair. We note that most of the (w2, paraphrase, w1) triplets in the table do not occur in the training data, but are rather generalized from similar examples. For example, there is no training instance for “company in the software industry” but there is a “firm in the software industry” and a company in many other industries.

While the frequent prepositional paraphrases are often ranked at the top of the list, the model also retrieves more specific verbal paraphrases. The list often contains multiple semantically-similar paraphrases, such as ‘[w2] involved in [w1]’ and ‘[w2] in [w1] industry’. This is a result of the model training objective (Section 3) which positions the vectors of semantically-similar paraphrases close to each other in the embedding space, based on similar constituents.

To illustrate paraphrase similarity we compute a t-SNE projection (Van Der Maaten, 2014) of the embeddings of all the paraphrases, and draw a sample of 50 paraphrases in Figure 2. The projection positions semantically-similar but lexically-divergent paraphrases in proximity, likely due to many shared constituents. For instance, ‘with’, ‘from’, and ‘out of’ can all describe the relation between food words and their ingredients.

5 Evaluation: Noun-Compound Interpretation Tasks

For quantitative evaluation we employ our model for two noun-compound interpretation tasks. The main evaluation is on retrieving and ranking paraphrases (§5.1). For the sake of completeness, we also evaluate the model on classification to a fixed inventory of relations (§5.2), although it wasn’t designed for this task.

5.1 Paraphrasing

Task Definition. The general goal of this task is to interpret each noun-compound to multiple prepositional and verbal paraphrases. In SemEval 2013 Task 4,[4] the participating systems were asked to retrieve a ranked list of paraphrases for each noun-compound, which was automatically evaluated against a similarly ranked list of paraphrases proposed by human annotators.

[4] https://www.cs.york.ac.uk/semeval-2013/task4

Model. For a given noun-compound w1 w2, we first predict the k = 250 most likely paraphrases: $\hat{p}_1, \ldots, \hat{p}_k = \mathrm{argmax}_k\, \hat{p}$, where $\hat{p}$ is the distribution of paraphrases defined in Equation 1.

While the model also provides a score for each paraphrase (Equation 1), the scores have not been optimized to correlate with human judgments. We therefore developed a re-ranking model that receives a list of paraphrases and re-ranks the list to better fit the human judgments.

We follow Herbrich (2000) and learn a pairwise ranking model. The model determines which of two paraphrases of the same noun-compound should be ranked higher, and it is implemented as an SVM classifier using scikit-learn (Pedregosa et al., 2011). For training, we use the available training data with gold paraphrases and ranks provided by the SemEval task organizers. We extract the following features for a paraphrase p:

1. The part-of-speech tags contained in p
2. The prepositions contained in p
3. The number of words in p
4. Whether p ends with the special [w1] symbol
5. $\mathrm{cosine}(\mathrm{bLS}([w_2], p, [w_1])_2, \vec{V_p}^{\hat{p}_i}) \cdot \hat{p}^{\hat{p}_i}$

where $\vec{V_p}^{\hat{p}_i}$ is the biLSTM encoding of the predicted paraphrase computed in Equation 1 and $\hat{p}^{\hat{p}_i}$ is its confidence score. The last feature incorporates the original model score into the decision, so as not to let other considerations such as preposition frequency in the training set take over.

During inference, the model sorts the list of paraphrases retrieved for each noun-compound according to the pairwise ranking. It then scores each paraphrase by multiplying its rank with its original model score, and prunes paraphrases with final score < 0.025. The values for k and the threshold were tuned on the training set.

Evaluation Settings. The SemEval 2013 task provided a scorer that compares words and n-grams from the gold paraphrases against those in the predicted paraphrases, where agreement on a prefix of a word (e.g. in derivations) yields a partial scoring. The overall score assigned to each system is calculated in two different ways. The ‘isomorphic’ setting rewards both precision and recall, and performing well on it requires accurately reproducing as many of the gold paraphrases as possible, and in much the same order. The ‘non-isomorphic’ setting rewards only precision, and performing well on it requires accurately reproducing the top-ranked gold paraphrases, with no importance to order.

Baselines. We compare our method with the published results from the SemEval task. The SemEval 2013 baseline generates for each noun-compound a list of prepositional paraphrases in an arbitrary fixed order. It achieves a moderately good score in the non-isomorphic setting by generating a fixed set of paraphrases which are both common and generic. The MELODI system performs similarly: it represents each noun-compound using a compositional distributional vector (Mitchell and Lapata, 2010) which is then used to predict paraphrases from the corpus. The performance of MELODI indicates that the system was rather conservative, yielding a few common paraphrases rather than many specific ones. SFS and IIITH, on the other hand, show a more balanced trade-off between recall and precision.

As a sanity check, we also report the results of a baseline that retrieves ranked paraphrases from the training data collected in Section 3.2. This baseline has no generalization abilities, therefore it is expected to score poorly on the recall-aware isomorphic setting.

| | Method | isomorphic | non-isomorphic |
|---|---|---|---|
| Baselines | SFS (Versley, 2013) | 23.1 | 17.9 |
| | IIITH (Surtani et al., 2013) | 23.1 | 25.8 |
| | MELODI (Van de Cruys et al., 2013) | 13.0 | 54.8 |
| | SemEval 2013 Baseline (Hendrickx et al., 2013) | 13.8 | 40.6 |
| This paper | Baseline | 3.8 | 16.1 |
| | Our method | 28.2 | 28.4 |

Table 2: Results of the proposed method and the baselines on the SemEval 2013 task.
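The pairwise re-ranking step described in the Model paragraph of Section 5.1 can be sketched as follows. This is a hedged illustration rather than the authors' code: it uses the common pairwise transform in which each pair of paraphrases becomes a feature-difference vector with a ±1 label, and it assumes a linear scoring function so that sorting by score agrees with the pairwise decisions. The two toy features (number of words, model score), the weight vector, and the names `pairwise_examples` and `rerank` are hypothetical; in the paper the classifier is an SVM trained with scikit-learn.

```python
from itertools import combinations

def pairwise_examples(features, gold_ranks):
    """Build (difference vector, label) training pairs; a lower gold rank is better."""
    examples = []
    for i, j in combinations(range(len(features)), 2):
        if gold_ranks[i] == gold_ranks[j]:
            continue  # ties carry no ordering information
        diff = [a - b for a, b in zip(features[i], features[j])]
        label = 1 if gold_ranks[i] < gold_ranks[j] else -1
        examples.append((diff, label))
        examples.append(([-d for d in diff], -label))  # mirrored pair
    return examples

def linear_score(weights, vector):
    """Dot product of a weight vector and a feature (or difference) vector."""
    return sum(w * x for w, x in zip(weights, vector))

def rerank(paraphrases, features, weights):
    """Sort paraphrases by linear score, best first."""
    order = sorted(range(len(paraphrases)),
                   key=lambda i: linear_score(weights, features[i]),
                   reverse=True)
    return [paraphrases[i] for i in order]

# Toy data: features are [number of words, model score]; all values are made up.
paraphrases = ["cake of apples", "cake made of apples", "cake from apples"]
features = [[3, 0.2], [4, 0.9], [3, 0.5]]
gold_ranks = [3, 1, 2]

examples = pairwise_examples(features, gold_ranks)
weights = [-0.1, 1.0]  # hypothetical weights a trained linear classifier might learn
reranked = rerank(paraphrases, features, weights)
```

Because the scorer is linear, `linear_score(weights, diff) > 0` holds exactly when the first paraphrase of the pair scores higher than the second, which is why a single sort reproduces the pairwise comparisons.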

Category % ally annotated categories for each error type.

False Positive Many false positive errors are actually valid

(1) Valid paraphrase missing from gold 44%

(2) Valid paraphrase, slightly too specific 15% paraphrases that were not suggested by the hu-

(3) Incorrect, common prepositional paraphrase 14% man annotators (error 1, “discussion by group”).

(4) Incorrect, other errors 14% Some are borderline valid with minor grammati-

(5) Syntactic error in paraphrase 8%

(6) Valid paraphrase, but borderline grammatical 5% cal changes (error 6, “force of coalition forces”)

False Negative

or too specific (error 2, “life of women in commu-

(1) Long paraphrase (more than 5 words) 30% nity” instead of “life in community”). Common

(2) Prepositional paraphrase with determiners 25% prepositional paraphrases were often retrieved al-

(3) Inflected constituents in gold 10%

(4) Other errors 35%

though they are incorrect (error 3). We conjec-

ture that this error often stem from an n-gram that

Table 3: Categories of false positive and false neg- does not respect the syntactic structure of the sen-

ative predictions along with their percentage. tence, e.g. a sentence such as “rinse away the oil

from baby ’s head” produces the n-gram “oil from

Results. Table 2 displays the performance of the baby”.

proposed method and the baselines in the two eval-

With respect to false negative examples, they

uation settings. Our method outperforms all the

consisted of many long paraphrases, while our

methods in the isomorphic setting. In the non-

model was restricted to 5 words due to the source

isomorphic setting, it outperforms the other two

of the training data (error 1, “holding done in the

systems that score reasonably on the isomorphic

case of a share”). Many prepositional paraphrases

setting (SFS and IIITH) but cannot compete with

consisted of determiners, which we conflated with

the systems that focus on achieving high precision.

the same paraphrases without determiners (error

The main advantage of our proposed model 2, “mutation of a gene”). Finally, in some para-

is in its ability to generalize, and that is also phrases, the constituents in the gold paraphrase

demonstrated in comparison to our baseline per- appear in inflectional forms (error 3, “holding of

formance. The baseline retrieved paraphrases only shares” instead of “holding of share”).

for a third of the noun-compounds (61/181), ex-

pectedly yielding poor performance on the isomor-

phic setting. Our model, which was trained on the 5.2 Classification

very same data, retrieved paraphrases for all noun-

compounds. For example, welfare system was not Noun-compound classification is defined as a mul-

present in the training data, yet the model pre- ticlass classification problem: given a pre-defined

dicted the correct paraphrases “system of welfare set of relations, classify w1 w2 to the relation that

benefits”, “system to provide welfare” and others. holds between w1 and w2 . Potentially, the cor-

pus co-occurrences of w1 and w2 may contribute

Error Analysis. We analyze the causes of the to the classification, e.g. ‘[w2 ] held at [w1 ]’ in-

false positive and false negative errors made by the dicates a TIME relation. Tratz and Hovy (2010) in-

model. For each error type we sample 10 noun- cluded such features in their classifier, but ablation

compounds. For each noun-compound, false pos- tests showed that these features had a relatively

itive errors are the top 10 predicted paraphrases small contribution, probably due to the sparseness

which are not included in the gold paraphrases, of the paraphrases. Recently, Shwartz and Wa-

while false negative errors are the top 10 gold terson (2018) showed that paraphrases may con-

paraphrases not found in the top k predictions tribute to the classification when represented in a

made by the model. Table 3 displays the manu- continuous space.Model. We generate a paraphrase vector repre- Dataset & Split Method F1

sentation par(w

~ 1 w2 ) for a given noun-compound Tratz and Hovy (2010) 0.739

Dima (2016) 0.725

w1 w2 as follows. We predict the indices of the k Tratz

Shwartz and Waterson (2018) 0.714

most likely paraphrases: p̂1 , ..., p̂k = argmaxk p̂, fine

distributional 0.677

Random

where p̂ is the distribution on the paraphrase vo- paraphrase 0.505

integrated 0.673

cabulary Vp , as defined in Equation 1. We then Tratz and Hovy (2010) 0.340

encode each paraphrase using the biLSTM, and Dima (2016) 0.334

Tratz

average the paraphrase vectors, weighted by their Shwartz and Waterson (2018) 0.429

fine

distributional 0.356

confidence scores in p̂: Lexical

paraphrase 0.333

Pk p̂i ~ p̂i integrated 0.370

i=1 p̂ · Vp Tratz and Hovy (2010) 0.760

par(w

~ 1 w2 ) = Pk (3)

p̂i Dima (2016) 0.775

i=1 p̂ Tratz

Shwartz and Waterson (2018) 0.736

coarse

We train a linear classifier, and represent w1 w2 distributional 0.689

Random

paraphrase 0.557

in a feature vector f (w1 w2 ) in two variants: para- integrated 0.700

phrase: f (w1 w2 ) = par(w ~ 1 w2 ), or integrated: Tratz and Hovy (2010) 0.391

Dima (2016) 0.372

concatenated to the constituent word embeddings Tratz

Shwartz and Waterson (2018) 0.478

f (w1 w2 ) = [par(w

~ coarse

1 w2 ), w

~1 , w~2 ]. The classifier distributional 0.370

Lexical

type (logistic regression/SVM), k, and the penalty paraphrase 0.345

integrated 0.393

are tuned on the validation set. We also pro-

vide a baseline in which we ablate the paraphrase Table 4: Classification results. For each dataset

component from our model, representing a noun- split, the top part consists of baseline methods and

compound by the concatenation of its constituent the bottom part of methods from this paper. The

embeddings f (w1 w2 ) = [w~1 , w~2 ] (distributional). best performance in each part appears in bold.

Datasets. We evaluate on the Tratz (2011) dataset, which consists of 19,158 instances, labeled with 37 fine-grained relations (Tratz-fine) or 12 coarse-grained relations (Tratz-coarse).

We report the performance on two different splits of the dataset into train, test, and validation sets: a random split in a 75:20:5 ratio, and, following concerns raised by Dima (2016) about lexical memorization (Levy et al., 2015), a lexical split in which the sets consist of distinct vocabularies. The lexical split better demonstrates the scenario in which a noun-compound whose constituents have not been observed needs to be interpreted based on similar observed noun-compounds, e.g. inferring the relation in pear tart based on apple cake and other similar compounds. We follow the random and full-lexical splits from Shwartz and Waterson (2018).

Baselines. We report the results of 3 baselines representative of different approaches:

1) Feature-based (Tratz and Hovy, 2010): we re-implement a version of the classifier with features from WordNet and Roget's Thesaurus.

2) Compositional (Dima, 2016): a neural architecture that operates on the distributional representations of the noun-compound and its constituents. Noun-compound representations are learned with the Full-Additive (Zanzotto et al., 2010) and Matrix (Socher et al., 2012) models. We report the results from Shwartz and Waterson (2018).

3) Paraphrase-based (Shwartz and Waterson, 2018): a neural classification model that learns an LSTM-based representation of the joint occurrences of w1 and w2 in a corpus (i.e. observed paraphrases), and integrates distributional information using the constituent embeddings.

Results. Table 4 displays the methods' performance on the two versions of the Tratz (2011) dataset and the two dataset splits. The paraphrase model on its own is inferior to the distributional model; however, the integrated version improves upon the distributional model in 3 out of 4 settings, demonstrating the complementary nature of the distributional and paraphrase-based methods. The contribution of the paraphrase component is especially noticeable in the lexical splits.

As expected, the integrated method in Shwartz and Waterson (2018), in which the paraphrase representation was trained with the objective of classification, performs better than our integrated model. The superiority of both integrated models in the lexical splits confirms that paraphrases are beneficial for classification.

Example Noun-compounds   Gold                   Distributional   Example Paraphrases
printing plant           PURPOSE                OBJECTIVE        [w2] engaged in [w1]
marketing expert         TOPICAL                OBJECTIVE        [w2] in [w1]
development expert       TOPICAL                OBJECTIVE        [w2] knowledge of [w1]
weight/job loss          OBJECTIVE              CAUSAL           [w2] of [w1]
rubber band              CONTAINMENT            PURPOSE          [w2] made of [w1]
rice cake                CONTAINMENT            PURPOSE          [w2] is made of [w1]
laboratory animal        LOCATION/PART-WHOLE    ATTRIBUTE        [w2] in [w1], [w2] used in [w1]

Table 5: Examples of noun-compounds that were correctly classified by the integrated model while being incorrectly classified by distributional, along with top ranked indicative paraphrases.
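The comparison stated in the Results paragraph can be checked directly against the Table 4 scores of the three methods from this paper. The short sketch below copies those scores into a dictionary (the variable and function names are illustrative assumptions) and computes the gain of the integrated model over the distributional one in each setting.

```python
# Scores copied from Table 4: (dataset, split) -> method -> score.
table4 = {
    ("Tratz-fine", "random"): {"distributional": 0.677, "paraphrase": 0.505, "integrated": 0.673},
    ("Tratz-fine", "lexical"): {"distributional": 0.356, "paraphrase": 0.333, "integrated": 0.370},
    ("Tratz-coarse", "random"): {"distributional": 0.689, "paraphrase": 0.557, "integrated": 0.700},
    ("Tratz-coarse", "lexical"): {"distributional": 0.370, "paraphrase": 0.345, "integrated": 0.393},
}


def integrated_gains(scores):
    """Difference between the integrated and distributional scores per setting."""
    return {
        setting: round(methods["integrated"] - methods["distributional"], 3)
        for setting, methods in scores.items()
    }


gains = integrated_gains(table4)

# Settings in which the integrated model beats the distributional baseline.
improved = [setting for setting, gain in gains.items() if gain > 0]

# Settings in which the paraphrase-only model is below the distributional one.
paraphrase_inferior = [
    setting
    for setting, methods in table4.items()
    if methods["paraphrase"] < methods["distributional"]
]

for setting, gain in gains.items():
    print(setting, f"{gain:+.3f}")
```

The only setting without a gain is the Tratz-fine random split, and for each dataset the gain on the lexical split is larger than on the random split, which matches the observation that the paraphrase component matters most in the lexical splits.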

Analysis. To analyze the contribution of the paraphrase component to the classification, we focused on the differences between the distributional and integrated models on the Tratz-coarse lexical split. Examination of the per-relation F1 scores revealed that the relations for which performance improved the most in the integrated model were TOPICAL (+11.1 F1 points), OBJECTIVE (+5.5), ATTRIBUTE (+3.8) and LOCATION/PART-WHOLE (+3.5). Table 5 provides examples of noun-compounds that were correctly classified by the integrated model while being incorrectly classified by the distributional model. For each noun-compound, we provide examples of top ranked paraphrases which are indicative of the gold label relation.

6 Compositionality Analysis

Our paraphrasing approach at its core assumes compositionality: only a noun-compound whose meaning is derived from the meanings of its constituent words can be rephrased using them. In §3.2 we added negative samples to the training data to simulate non-compositional noun-compounds, which are included in the classification dataset (§5.2). We assumed that these compounds, more often than compositional ones, would consist of unrelated constituents (spelling bee, sacred cow), and added instances of random unrelated nouns with '[w2] is unrelated to [w1]'. Here, we assess whether our model succeeds in recognizing non-compositional noun-compounds.

We used the compositionality dataset of Reddy et al. (2011), which consists of 90 noun-compounds along with human judgments about their compositionality on a scale of 0-5, 0 being non-compositional and 5 being compositional. For each noun-compound in the dataset, we predicted the 15 best paraphrases and analyzed the errors. The most common error was predicting paraphrases for idiomatic compounds which may have a plausible concrete interpretation or which originated from one. For example, it predicted that silver spoon is simply a spoon made of silver and that monkey business is a business that buys or raises monkeys. In other cases, it seems that the strong prior on one constituent leads to ignoring the other, unrelated constituent, as in predicting "wedding made of diamond". Finally, the "unrelated" paraphrase was predicted for a few compounds, but those are not necessarily non-compositional (application form, head teacher). We conclude that the model does not address compositionality and suggest applying it only to compositional compounds, which may be recognized using compositionality prediction methods as in Reddy et al. (2011).

7 Conclusion

We presented a new semi-supervised model for noun-compound paraphrasing. The model differs from previous models by being trained to predict both a paraphrase given a noun-compound, and a missing constituent given the paraphrase and the other constituent. This results in better generalization abilities, leading to improved performance in two noun-compound interpretation tasks. In the future, we plan to take generalization one step further, and explore the possibility of using the biLSTM for generating completely new paraphrase templates unseen during training.

Acknowledgments

This work was supported in part by an Intel ICRI-CI grant, the Israel Science Foundation grant 1951/17, the German Research Foundation through the German-Israeli Project Cooperation (DIP, grant DA 1600/1-1), and Theo Hoffenberg. Vered is also supported by the Clore Scholars Programme (2017), and the AI2 Key Scientific Challenges Program (2017).

References

Regina Barzilay and Kathleen R. McKeown. 2001. Extracting paraphrases from a parallel corpus. In Proceedings of the 39th Annual Meeting of the Association for Computational Linguistics. http://aclweb.org/anthology/P01-1008.

Thorsten Brants and Alex Franz. 2006. Web 1T 5-gram version 1.

Cristina Butnariu, Su Nam Kim, Preslav Nakov, Diarmuid Ó Séaghdha, Stan Szpakowicz, and Tony Veale. 2009. Semeval-2010 task 9: The interpretation of noun compounds using paraphrasing verbs and prepositions. In Proceedings of the Workshop on Semantic Evaluations: Recent Achievements and Future Directions (SEW-2009). Association for Computational Linguistics, Boulder, Colorado, pages 100–105. http://www.aclweb.org/anthology/W09-2416.

Corina Dima. 2016. On the compositionality and semantic interpretation of English noun compounds. In Proceedings of the 1st Workshop on Representation Learning for NLP. Association for Computational Linguistics, pages 27–39. https://doi.org/10.18653/v1/W16-1604.

Corina Dima and Erhard Hinrichs. 2015. Automatic noun compound interpretation using deep neural networks and word embeddings. IWCS 2015 page 173.

Oren Etzioni, Michele Banko, Stephen Soderland, and Daniel S Weld. 2008. Open information extraction from the web. Communications of the ACM 51(12):68–74.

Juri Ganitkevitch, Benjamin Van Durme, and Chris Callison-Burch. 2013. PPDB: The paraphrase database. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Association for Computational Linguistics, pages 758–764. http://aclweb.org/anthology/N13-1092.

Roxana Girju. 2007. Improving the interpretation of noun phrases with cross-linguistic information. In Proceedings of the 45th Annual Meeting of the Association of Computational Linguistics. Association for Computational Linguistics, Prague, Czech Republic, pages 568–575. http://www.aclweb.org/anthology/P07-1072.

Alex Graves and Jürgen Schmidhuber. 2005. Framewise phoneme classification with bidirectional LSTM and other neural network architectures. Neural Networks 18(5-6):602–610.

Iris Hendrickx, Zornitsa Kozareva, Preslav Nakov, Diarmuid Ó Séaghdha, Stan Szpakowicz, and Tony Veale. 2013. Semeval-2013 task 4: Free paraphrases of noun compounds. In Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 2: Proceedings of the Seventh International Workshop on Semantic Evaluation (SemEval 2013). Association for Computational Linguistics, pages 138–143. http://aclweb.org/anthology/S13-2025.

Ralf Herbrich. 2000. Large margin rank boundaries for ordinal regression. Advances in large margin classifiers pages 115–132.

Su Nam Kim and Timothy Baldwin. 2007. Interpreting noun compounds using bootstrapping and sense collocation. In Proceedings of Conference of the Pacific Association for Computational Linguistics. pages 129–136.

Su Nam Kim and Preslav Nakov. 2011. Large-scale noun compound interpretation using bootstrapping and the web as a corpus. In Proceedings of the 2011 Conference on Empirical Methods in Natural Language Processing. Association for Computational Linguistics, pages 648–658. http://aclweb.org/anthology/D11-1060.

Omer Levy, Steffen Remus, Chris Biemann, and Ido Dagan. 2015. Do supervised distributional methods really learn lexical inference relations? In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Association for Computational Linguistics, Denver, Colorado, pages 970–976. http://www.aclweb.org/anthology/N15-1098.

Guofu Li, Alejandra Lopez-Fernandez, and Tony Veale. 2010. Ucd-goggle: A hybrid system for noun compound paraphrasing. In Proceedings of the 5th International Workshop on Semantic Evaluation. Association for Computational Linguistics, pages 230–233.

Jonathan Mallinson, Rico Sennrich, and Mirella Lapata. 2017. Paraphrasing revisited with neural machine translation. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers. Association for Computational Linguistics, Valencia, Spain, pages 881–893.

Jeff Mitchell and Mirella Lapata. 2010. Composition in distributional models of semantics. Cognitive science 34(8):1388–1429.

Preslav Nakov. 2013. On the interpretation of noun compounds: Syntax, semantics, and entailment. Natural Language Engineering 19(03):291–330.

Preslav Nakov and Marti Hearst. 2006. Using verbs to characterize noun-noun relations. In International Conference on Artificial Intelligence: Methodology, Systems, and Applications. Springer, pages 233–244.

Vivi Nastase and Stan Szpakowicz. 2003. Exploring noun-modifier semantic relations. In Fifth international workshop on computational semantics (IWCS-5). pages 285–301.

Yurii Nesterov. 1983. A method of solving a convex programming problem with convergence rate O(1/k^2). In Soviet Mathematics Doklady. volume 27, pages 372–376.

Graham Neubig, Chris Dyer, Yoav Goldberg, Austin Matthews, Waleed Ammar, Antonios Anastasopoulos, Miguel Ballesteros, David Chiang, Daniel Clothiaux, Trevor Cohn, et al. 2017. Dynet: The dynamic neural network toolkit. arXiv preprint arXiv:1701.03980.

Paul Nulty and Fintan Costello. 2010. Ucd-pn: Selecting general paraphrases using conditional probability. In Proceedings of the 5th International Workshop on Semantic Evaluation. Association for Computational Linguistics, pages 234–237.

Diarmuid Ó Séaghdha and Ann Copestake. 2009. Using lexical and relational similarity to classify semantic relations. In Proceedings of the 12th Conference of the European Chapter of the ACL (EACL 2009). Association for Computational Linguistics, Athens, Greece, pages 621–629. http://www.aclweb.org/anthology/E09-1071.

Harinder Pal and Mausam. 2016. Demonyms and compound relational nouns in nominal open ie. In Proceedings of the 5th Workshop on Automated Knowledge Base Construction. Association for Computational Linguistics, San Diego, CA, pages 35–39. http://www.aclweb.org/anthology/W16-1307.

Patrick Pantel, Rahul Bhagat, Bonaventura Coppola, Timothy Chklovski, and Eduard Hovy. 2007. ISP: Learning inferential selectional preferences. In Human Language Technologies 2007: The Conference of the North American Chapter of the Association for Computational Linguistics; Proceedings of the Main Conference. Association for Computational Linguistics, Rochester, New York, pages 564–571. http://www.aclweb.org/anthology/N/N07/N07-1071.

Marius Pasca. 2015. Interpreting compound noun phrases using web search queries. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. Association for Computational Linguistics, pages 335–344. https://doi.org/10.3115/v1/N15-1037.

Ellie Pavlick and Marius Pasca. 2017. Identifying 1950s american jazz musicians: Fine-grained isa extraction via modifier composition. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers). Association for Computational Linguistics, Vancouver, Canada, pages 2099–2109. http://aclweb.org/anthology/P17-1192.

F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. 2011. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research 12:2825–2830.

Jeffrey Pennington, Richard Socher, and Christopher Manning. 2014. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP). Association for Computational Linguistics, Doha, Qatar, pages 1532–1543. http://www.aclweb.org/anthology/D14-1162.

Siva Reddy, Diana McCarthy, and Suresh Manandhar. 2011. An empirical study on compositionality in compound nouns. In Proceedings of 5th International Joint Conference on Natural Language Processing. Asian Federation of Natural Language Processing, Chiang Mai, Thailand, pages 210–218. http://www.aclweb.org/anthology/I11-1024.

Vered Shwartz and Chris Waterson. 2018. Olive oil is made of olives, baby oil is made for babies: Interpreting noun compounds using paraphrases in a neural model. In The 16th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT). New Orleans, Louisiana.

Richard Socher, Brody Huval, Christopher D. Manning, and Andrew Y. Ng. 2012. Semantic compositionality through recursive matrix-vector spaces. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning. Association for Computational Linguistics, pages 1201–1211. http://aclweb.org/anthology/D12-1110.

Nitesh Surtani, Arpita Batra, Urmi Ghosh, and Soma Paul. 2013. Iiit-h: A corpus-driven co-occurrence based probabilistic model for noun compound paraphrasing. In Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 2: Proceedings of the Seventh International Workshop on Semantic Evaluation (SemEval 2013). volume 2, pages 153–157.

Stephen Tratz. 2011. Semantically-enriched parsing for natural language understanding. University of Southern California.

Stephen Tratz and Eduard Hovy. 2010. A taxonomy, dataset, and classifier for automatic noun compound interpretation. In Proceedings of the 48th Annual Meeting of the Association for Computational Linguistics. Association for Computational Linguistics, Uppsala, Sweden, pages 678–687. http://www.aclweb.org/anthology/P10-1070.

Tim Van de Cruys, Stergos Afantenos, and Philippe Muller. 2013. Melodi: A supervised distributional approach for free paraphrasing of noun compounds. In Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 2: Proceedings of the Seventh International Workshop on Semantic Evaluation (SemEval 2013). Association for Computational Linguistics, Atlanta, Georgia, USA, pages 144–147. http://www.aclweb.org/anthology/S13-2026.

Laurens Van Der Maaten. 2014. Accelerating t-sne using tree-based algorithms. Journal of machine learning research 15(1):3221–3245.

Yannick Versley. 2013. Sfs-tue: Compound paraphrasing with a language model and discriminative reranking. In Second Joint Conference on Lexical and Computational Semantics (*SEM), Volume 2: Proceedings of the Seventh International Workshop on Semantic Evaluation (SemEval 2013). volume 2, pages 148–152.

Sander Wubben. 2010. Uvt: Memory-based pairwise ranking of paraphrasing verbs. In Proceedings of the 5th International Workshop on Semantic Evaluation. Association for Computational Linguistics, pages 260–263.

Clarissa Xavier and Vera Lima. 2014. Boosting open information extraction with noun-based relations. In Nicoletta Calzolari (Conference Chair), Khalid Choukri, Thierry Declerck, Hrafn Loftsson, Bente Maegaard, Joseph Mariani, Asuncion Moreno, Jan Odijk, and Stelios Piperidis, editors, Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC’14). European Language Resources Association (ELRA), Reykjavik, Iceland.

Fabio Massimo Zanzotto, Ioannis Korkontzelos, Francesca Fallucchi, and Suresh Manandhar. 2010. Estimating linear models for compositional distributional semantics. In Proceedings of the 23rd International Conference on Computational Linguistics. Association for Computational Linguistics, pages 1263–1271.
