Cognitive Errors As Canary Traps - Alex Chinco September 2, 2021


Cognitive Errors As Canary Traps∗

Alex Chinco†

September 2, 2021

Abstract

When otherwise intelligent investors fail to correct an error, a researcher learns

something about what these investors did not know. The investors must not have

known about anything which would have allowed them to spot their mistake. If

they had, they would have stopped making it.

I show how a researcher can use this insight to identify how investors price

assets. If X predicts returns, then any correlated predictor that investors know

about but researchers have yet to discover represents a potential confound. I define

a special kind of error, called a “cognitive error”, which otherwise intelligent

investors will only fail to correct if they are not aware of any such omitted variables.

So when investors fail to correct a priced cognitive error about X, then a researcher

can be sure that investors are pricing assets based on X. Cognitive errors are

instruments for identifying how mostly rational investors price assets.

Keywords: Model Identification, Cognitive Errors, Market Efficiency

∗

I would like to thank Justin Birru, Brian Boyer, Svetlana Bryzgalova, Andrew Chen, James Dow,

Arpit Gupta, Sam Hartzmark, Christian Heyerdahl-Larsen, Jiacui Li, Andy Neuhierl, Cameron Peng,

Marco Sammon, Shri Santosh, Eric So, Michael Weber, and Jeff Wurgler as well as participants at the

European Winter Finance Conference for excellent comments and suggestions.

†

Zicklin School of Business, Baruch College, CUNY. alexchinco@gmail.com

Current Version: http://www.alexchinco.com/canary-traps.pdf

1 Introduction

Imagine you are a PhD student interested in the cross-section of returns.

A “value stock” has a price that seems low compared to some measure of

fundamentals, such as its earnings or book value. The opposite is true for a “growth

stock”. We have long known that value stocks tend to have higher returns than growth

stocks (Graham and Dodd, 1934). This is the “value premium”.

Your job market paper documents that, in addition to having higher earnings-to-price

and book-to-market ratios than growth stocks, value stocks also tend to have lower levels

of another variable, X. You argue that the value premium exists because investors have

been pricing assets based on X all along. Ratios of price to fundamentals are just a

sideshow. Investors do not consider these things when computing prices.

You are not the first person to write a job market paper on the value premium. But

you are hoping to be the last. Your goal is to definitively prove that X is the real reason

for this well-known empirical regularity. You already have results showing that X

subsumes all previous explanations. And your job market paper also verifies several

novel predictions which, as far as you know, are only consistent with an X-based story.

But if outperforming previous explanations and making novel predictions were

enough, the value premium would have been solved long ago. Every previous paper also

did these things. That is how they got published. Now you are arguing that investors have

been pricing assets based on X all along even though no previous researcher ever thought

of the variable. So you have to consider the possibility that your X will be explained

away by some future PhD student using a variable you yourself have never thought of.

How can you identify that investors are pricing assets based on X when you know

that there will be more discoveries coming down the research pipeline? Is it even

possible? This is a classic omitted-variables problem. The existing explanations for the

value premium represent observed confounds. The explanations that future researchers

will propose are omitted variables. While the size of the factor zoo gets a lot of attention

(Cochrane, 2011), you cannot solve this omitted-variables problem by controlling for

observables (Heckman, 1979).

I show how a researcher can use a special kind of error, called a “cognitive error”, to

identify whether investors are pricing assets based on a particular variable, X. Cognitive

errors are instruments for identifying how mostly rational investors price assets.

Identification Framework

I start in Section 3 by writing down a framework for thinking about researchers’

omitted-variables problem. A researcher wants to know whether investors are using

a particular predictor, X, to price assets. He tests this hypothesis by regressing the

cross-section of returns on the realized levels of X in some empirical setting:

$$ r_n = \hat{a} + \hat{b} \cdot x_n + \hat{\varepsilon}_n \qquad \text{for assets } n = 1, \ldots, N \tag{1} $$

All else equal, a higher current price implies a lower future return. So if investors prefer

low X assets, a researcher should estimate b̂ > 0 in a well-identified setting.

Unfortunately, even if investors are not using X to price assets, the variable might

still predict the cross-section of returns in a poorly identified empirical setting. This will

occur whenever X happens to be correlated with some other variable, X′, that investors

do care about. If the researcher regresses the cross-section of returns on X in such a

setting, he may spuriously estimate b̂ > 0.

The researcher wants to identify X as part of investors’ true model because he wants

to ensure that his results are robust to future discoveries. That is why he cares about

identification. If he estimates b̂ > 0 in a well-identified setting, then he can be sure that

investors will prefer low-X assets in every other setting too since investors always use

the same model to price assets. By contrast, if the researcher estimates b̂ > 0 in a poorly

identified setting, then he is liable to have his finding explained away by some future

researcher in a new empirical setting where X and X′ are no longer correlated.
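The danger described above can be sketched numerically. The following simulation is an illustration, not the paper's own model: investors price assets using only X′, and the mean payout of 10, the loadings of 0.5 and 1.0, and the noise scale are all assumed values chosen so that prices stay positive. The function name `estimate_slope` is hypothetical.

```python
import numpy as np

def estimate_slope(rho, n_assets=200_000, seed=0):
    """Regress returns on X when investors price assets using only X'.

    rho is the cross-sectional correlation between X and X'.
    Every numeric constant below is an illustrative assumption.
    """
    rng = np.random.default_rng(seed)
    x_prime = rng.standard_normal(n_assets)
    # X has unit variance and correlation rho with X'
    x = rho * x_prime + np.sqrt(1.0 - rho**2) * rng.standard_normal(n_assets)
    noise = 0.5 * rng.standard_normal(n_assets)

    payout = 10.0 - 0.5 * x_prime + noise  # X plays no role in payouts
    price = 9.0 - 1.0 * x_prime            # X plays no role in prices
    ret = (payout - price) / price

    # OLS slope from a univariate regression of returns on X
    return np.cov(ret, x)[0, 1] / np.var(x, ddof=1)

b_confounded = estimate_slope(rho=0.6)  # X correlated with X'
b_clean = estimate_slope(rho=0.0)       # X uncorrelated with X'
print(f"slope with correlation: {b_confounded:.4f}")
print(f"slope without correlation: {b_clean:.4f}")
```

Under these assumptions, the slope on X is positive only in the environment where X and X′ are correlated, even though X never enters the pricing rule.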

Any confounding explanation for the return predictability associated with X must

take the form of a correlated variable. So, to identify whether investors are using X to

price assets, the researcher must find an empirical setting where X is uncorrelated with

every other variable that investors know about. If he were to estimate b̂ > 0 in such a

setting, then the researcher could be sure that investors were using X to price assets.

Investors would not know about any other omitted variables in that setting.

One way to do this would be to construct test assets that only differed in X. This

approach is analogous to flipping a coin to see which patients get a new drug, X = 1, and

which get a sugar pill, X = 0. Another way to do this would be to look for a pre-existing

subset of market data in which there are good reasons to believe that assets only differ in

X. This approach is like finding an instrumental variable. Due to the Vietnam draft

lottery (Angrist, 1990), there are good reasons to believe that date of birth was the only

difference between a particular subset of Army veterans, X = 1, and an otherwise similar

group of young men born one day earlier who did not enlist, X = 0. Both approaches are

ways to ensure that Cov[X, X′] = 0 for all other predictors X′.

Whichever approach the researcher takes, he needs to make sure that X is uncorrelated

with all other predictors that investors know about, not just the ones he is currently aware

of. The researcher is worried about his finding being explained away by some future

researcher using an as-yet-undiscovered predictor. So he must do more than consider the

predictors he already knows about.

Cognitive Errors

But how can a researcher say anything useful about predictors which he himself has

yet to discover? The key insight in this paper is that, when otherwise intelligent investors

fail to correct an error, the researcher can learn something about what these investors did

not know. The investors must not have known anything which would have allowed them

to spot their mistake. If they had, they would have stopped making it. Crucially, this

logic reveals what investors do not know regardless of what researchers do know.

In Section 4, I define a special kind of error, called a “cognitive error”, which

investors can only correct if they are aware of at least one such omitted variable. Let

X̃ denote the version of X that investors perceive when making a cognitive error. If

investors fail to correct a cognitive error about X in some empirical setting, a researcher

can conclude that these investors must not know about any omitted variables. And I show

that, if X and X̃ both predict returns in the same way in that setting, b̂ > 0, then the

researcher can be absolutely sure that investors are using X to price assets. Cognitive

errors are instruments for identifying how mostly rational investors price assets.

Section 5 gives examples showing how researchers can use cognitive errors as

instruments for identifying how mostly rational investors price assets. I show how a

quirk in how S&P/Barra classified stocks as “value” or “growth” results in a cognitive

error that can be used to identify whether the value premium exists because investors

price value and growth stocks differently (Boyer, 2011). I also show how peer effects

(Bailey, Cao, Kuchler, and Stroebel, 2018) and investor inattention (Birru, 2015) can

produce cognitive errors that are useful for identification purposes.

Efficient Markets

In efficient markets, “if there’s a signal not now incorporated in prices that future

values will be high, traders buy on that signal. . . bidding up the price until it fully reflects

available information” (Cochrane, 2013). The Efficient Market Hypothesis (Fama, 1970)

claims that investors are able to correct every error that they initially make before a

researcher sees the effects in his data.

The conventional wisdom is that it would be easiest for a researcher to identify

investors’ true model if this claim were true. In Section 6, I build on the above logic to

show that the conventional wisdom has it exactly backwards: the assumption of market

efficiency makes it impossible for a researcher to identify investors’ model.

For markets to be efficient, investors must be able to correct every kind of error,

cognitive errors included. But, to correct a cognitive error about X, investors must know

about at least one correlated predictor. And, because correlated predictors are potential

confounds, when a researcher assumes that markets are efficient, he is assuming that

investors must know about a potential confound for any b̂ > 0 he estimates.

Suppose researchers are not aware of a potential confound. No matter. When they

assume markets are efficient, they assume that investors must be. The assumption of

market efficiency guarantees that investors always know about a problematic omitted

variable. The only remaining question is how long before a future researcher discovers it.

1.1 Related Literature

The factor zoo literature (e.g., Harvey, Liu, and Zhu, 2016; Bryzgalova, 2017; Kozak,

Nagel, and Santosh, 2018; Feng, Giglio, and Xiu, 2020; Freyberger, Neuhierl, and

Weber, 2020) analyzes the implications of having lots of observed confounds. I point out

that, when trying to identify investors’ model, a researcher must also consider the

omitted variables coming down the research pipeline.

Lucas (1976) shows how the results of a policy experiment may be distorted if

investors strategically change their behavior in anticipation of the shock. Chemla and

Hennessy (2019) and Hennessy and Strebulaev (2019) study different ways this can bias

parameter estimates. Martin and Nagel (2019) describes how tests of market efficiency

must change when investors face the same high-dimensional problem as researchers.

These papers are all about estimation. The focus in this paper is identification.

Chemla and Hennessy (2021a,b) study how equilibrium interferes with identification.

These papers take the underlying economic model as given. Then, the authors study

the identification problem that emerges when a researcher interprets his empirical

results when conditioning on this model being at equilibrium. I am studying a

different identification problem. The problem in this paper stems, not from a researcher

conditioning on a specific economic model being in equilibrium, but from the researcher

not knowing which economic model to use. Asset-pricing researchers in particular have

substantial leeway along this dimension (Chen, Dou, and Kogan, 2021).

There are many different frameworks for thinking about identification: potential

outcomes (Rubin, 1974; Imbens and Angrist, 1994), structural equations (Haavelmo,

1943; Heckman and Vytlacil, 2005), causal diagrams (Wright, 1934; Pearl, 2009), and

distributional robustness (Shimodaira, 2000; Peters, Bühlmann, and Meinshausen, 2016).

This paper does not offer yet another one. Instead, it shows how a researcher can exploit

a special kind of investor error to satisfy the key assumptions in all of them.

Papers like Altonji, Elder, and Taber (2005) and Oster (2019) show how, under

certain conditions, a researcher can check for omitted variables by examining parameter

stability given different sets of control variables. When an asset-pricing researcher

assumes markets are efficient, these conditions will never be met.

Finally, several recent papers have explored optimal experimental design. Kasy

(2016) and Banerjee, Chassang, Montero, and Snowberg (2020) both examine how

a researcher should set up his experiment to get results with robust out-of-sample

performance. There is also an active literature looking at the costs and benefits of

randomized controlled trials (Deaton, 2010; Imbens, 2010; Olken, 2015). In the context

of asset-pricing theory, any random variation in investor beliefs must represent an error. I

point out that such errors are the cost of learning investors’ model.

2 Canary Traps

Why does it have to be an error? Investors are often more intelligent and better

informed than the researchers who study them. These are core assumptions that every

asset-pricing researcher holds dear. So it might at first seem a bit odd to rely on investor

errors for identification.

But identifying the effect of a strategic choice requires observing some bad choices.1

To identify which model investors are using, a researcher needs these investors to be

making a few errors precisely because he believes them to be intelligent and well

informed. If investors were uninformed simpletons, the researcher would not need any

errors to identify how they were pricing assets. The pattern would be patently obvious

given enough observational data.

To see why, imagine you are running the CIA, and you are concerned that one of

your spies is leaking information. Each of your spies is intelligent; that is why you hired

them. Each of your spies also knows things you do not; they are out in the field while

you are sitting at a desk in Langley, VA.

In spite of these disadvantages, one way you could identify the mole would be to set

a “canary trap” (Clancy, 1988). The next time you pass on a top-secret document, give a

slightly different version to each spy. If you plant unique incorrect details in each version,

then when your enemy acts on the unique brand of bad information he has received, you

will immediately be able to identify which spy gave it to him.

This metaphor highlights two key points. First, the canary trap is necessary precisely

because you want to identify an intelligent better-informed agent. If the mole in your

network always followed rote rules of thumb and you knew everything he did and more,

then setting a canary trap would be overkill. Why go through all the trouble? In the same

way that you do not need canary traps to catch ham-fisted spies, a researcher does not

need cognitive errors to identify how dumb investors are pricing assets.

Second, error plays a critical role. Even if this mole knows things you do not, when

he fails to notice the incorrect details in his document, you can be sure that he does not

know any corroborating details which would have allowed him to recognize the mistake.

As a result, you can be confident that the suspected traitor’s behavior does not merely

look incriminating to you because you lack context. Likewise, when investors fail to

correct a cognitive error about X, a researcher can be sure that he does not merely think

that X is priced because he lacks context. He can be sure that investors are not aware of

some as-yet-undiscovered omitted variable which could also explain the result.

1

Flipping a coin to see who gets a new drug ensures that half the patients in a clinical trial receive

suboptimal care. The efficacy of the new drug merely determines which half. When studying the effect of

army service on future income, Angrist (1990) compared pairs of otherwise identical people, only one of

whom enlisted. It cannot be optimal for two truly identical people to make different enlistment decisions.

3 Identification Framework

This section introduces a framework for thinking about an asset-pricing researcher’s

omitted-variables problem. There are two stages. First, a group of investors prices

N ≫ 1 risky assets using some asset-pricing model. Then, a researcher tries to identify

whether the model these investors were using included a particular predictor, X. The

omitted-variables problem stems from the fact that investors know about predictors

which the researcher has yet to discover.

3.1 Empirical Setting

Investors pay pn dollars to buy a share of the nth risky asset, which entitles them to a

payout of vn dollars at the end of the first stage. The realized return is rn = (vn − pn )/pn .

There are K ≫ 1 variables that might forecast future payouts. Let 𝒦 denote the set

of all such predictors with K = |𝒦|. Let Xk denote one of these predictors, such as

book-to-market ratio, exposure to labor-income risk, sales growth, returns over the past 6

months, etc. I use xn,k to denote the realized level of this predictor for the nth risky asset.

I use Z to denote a nuisance variable which never affects future payouts.

Investors are aware of all K candidate predictors, K I = K. At the start of the first

stage, they calculate the level of every predictor for each asset, xn,k . Investors are

unaware of the nuisance variable, Z, and do not calculate its realized values.

Investors are aware of how each asset’s future payout will be determined, v ←

V(X1 = x1 , . . . , XK = xK , Y = y), as a function of the predictor levels that they observe

and an unobserved random shock, y. They also have a particular asset-pricing model in

mind, p ← P(X1 = x1 , . . . , XK = xK ). This model specifies how an asset will be priced

at the start of the first stage as a function of its predictor levels. This payout rule and

asset-pricing model together with distributional assumptions concerning predictors, the

nuisance variable, and the unobserved payout shock define a structural model.

Definition 1 (Entailed Distribution). Let π denote the probability distribution function

entailed by this structural model in a particular empirical setting. This PDF is defined

over payouts, prices, returns, all predictors K, and the nuisance variable.

Let πI denote the marginal distribution entailed by the same structural model given

investors’ information set after assets are priced but before payouts are realized. This

PDF is defined over prices and predictors.

In the second stage, the researcher observes the price pn , payout vn , and return rn of

each risky asset. The researcher is only aware of a subset of the predictors that investors

know about, K R ⊂ K I . He sees the realized level of each of these predictors for every

risky asset. The researcher is aware of the nuisance variable and observes zn . He knows

that there is some noise in each asset’s payout. But he does not see the realized value, yn .

In some ways, the researcher is less informed than investors. For example, investors

know about more predictors than the researcher does. Investors also know which model

they are using; whereas, the researcher is trying to learn this model from the data.

But the researcher also knows some things that investors do not at the time when

they are pricing assets. For example, since the researcher is looking at past data, the

researcher can see each asset’s realized payout. The researcher is also aware of the

nuisance variable, Z, which sits below investors’ waterline of cognition.

Definition 2 (Empirical Density). Let π̂ denote the empirical density of payouts, prices,

returns, all predictors K, and the nuisance variable in a particular empirical setting.

Let π̂R denote the marginal density in that setting given the researcher’s information

set in the second stage. This density is defined over payouts, prices, returns, the subset of

predictors the researcher knows about K R , and the nuisance variable.

Let π̂I denote the marginal density in the same setting given investors’ information

set after assets are priced but before payouts are realized. This density is defined over

prices and all predictors investors know about K I = K.

As Manski (2009, p2) explains: “Studies of identification seek to characterize the

conclusions that could be drawn if one could use the sampling process to obtain an

unlimited number of observations. . . Statistical inference problems may be severe in

small samples but diminish in importance as the sampling process generates more

observations. Identification problems cannot be solved by gathering more of the same

kind of data.” To emphasize this point, I assume that N is large enough that sampling

error can be ignored. There will be no meaningful difference between the results of

calculations such as Ê[xk] = (1/N) · Σ_{n=1}^{N} xn,k and E[Xk] = ∫ Xk · dπ.

Assumption A (N Is Arbitrarily Large). The number of risky assets, N, is large enough

that you can ignore the difference that results from using the empirical density, π̂, rather

than the entailed distribution, π, to calculate any mean, variance, or covariance.

The researcher wants to know whether the investors in his empirical setting were

using some predictor to price assets. I will use k = 1 to denote this predictor of interest.

The researcher tests his hypothesis by regressing the cross-section of returns on X1 :

$$ r_n = \hat{a} + \hat{b} \cdot x_{n,1} + \hat{\varepsilon}_n \qquad \text{for assets } n = 1, \ldots, N \tag{2} $$

All else equal, a higher current price implies a lower future return. So if investors prefer

assets with lower X1 values, then the researcher should estimate b̂ > 0 in an empirical

setting where assets only systematically differ in their values of X1 .

3.2 Market Environment

A market environment corresponds to a specific country, time period, asset class, etc.

Let E denote the set of all possible market environments. The defining feature of market

environment e is the cross-sectional distribution of predictors in that environment:

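$$
\mathrm{Cov}[X_1, X_2, \ldots, X_K] =
\begin{pmatrix}
1 & \sigma_{1,2}(e) & \cdots & \sigma_{1,K}(e) \\
\sigma_{2,1}(e) & 1 & \cdots & \sigma_{2,K}(e) \\
\vdots & \vdots & \ddots & \vdots \\
\sigma_{K,1}(e) & \sigma_{K,2}(e) & \cdots & 1
\end{pmatrix}
\tag{3}
$$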

For example, value stocks might tend to have lots of exposure to labor-income risk in US

data but not in Japanese data.

Without loss of generality, all predictors are normalized to have E[Xk ] = 0 and

Var[Xk ] = 1 in every market environment. The nuisance variable is such that E[Z] = 0,

Var[Z] = 1, and Cov[Xk , Z] = 0 for all k ∈ K in every market environment.

The omitted-variables problem at the heart of this paper stems from the existence of

predictors that are known to investors but not to researchers, K R ⊂ K I . These predictors

are the eponymous omitted variables. You cannot solve an omitted-variables problem by

controlling for observables (Heckman, 1979). So I assume that the researcher has already

controlled for all observed confounds in his empirical setting.

Assumption B (No Observed Confounds). X1 is uncorrelated with all other predictors

that the researcher currently knows about, Cov[X1 , Xk ] = 0 for all k ∈ K R \ 1.

Since N ≫ 1 is sufficiently large, it is always possible to orthogonalize the observed

values of x1 = {xn,1} for n = 1, . . . , N relative to every other predictor the researcher knows about.

Assumption B basically says that the researcher has already taken this step.
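One common way to take this step is to residualize X1 on the other known predictors with least squares. The sketch below is an assumption about how that could be done, not a procedure stated in the paper; the function name `orthogonalize` and the simulated data are hypothetical.

```python
import numpy as np

def orthogonalize(x1, known):
    """Residualize x1 on a constant and the researcher's other known predictors.

    x1:    shape (N,)   observed levels of the predictor of interest
    known: shape (N, J) observed levels of the other known predictors
    """
    design = np.column_stack([np.ones(len(x1)), known])
    coef, *_ = np.linalg.lstsq(design, x1, rcond=None)
    resid = x1 - design @ coef
    # Rescale so the result keeps the zero-mean, unit-variance normalization
    return resid / resid.std()

# Hypothetical data: x1 starts out correlated with two of three known predictors
rng = np.random.default_rng(1)
known = rng.standard_normal((50_000, 3))
x1_raw = 0.4 * known[:, 0] - 0.3 * known[:, 2] + rng.standard_normal(50_000)

x1 = orthogonalize(x1_raw, known)
sample_covs = [np.cov(x1, known[:, j])[0, 1] for j in range(known.shape[1])]
print(sample_covs)  # each entry is zero up to floating-point error
```

This removes correlation only with predictors the researcher can observe. It does nothing about predictors that investors know and the researcher does not.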

3.3 Asset-Pricing Model

Each risky asset’s future payout is determined as follows:

$$ v \leftarrow V(X_1 = x_1, \ldots, X_K = x_K, Y = y) = \mu_V - \sum_{k \in \mathcal{K}} \phi_k \cdot x_k + y \tag{4} $$

µV = E[V] is the average risky-asset payout.2 Each slope coefficient, φk ≥ 0,

captures the effect of an increase in the kth predictor on an asset’s future payout. I use

the convention that investors dislike higher predictor values, hence the negative sign in

Equation (4). For instance, this would be the case if the kth predictor represented a

risk-factor exposure.

Y ∼ Normal[0, σ_Y²] represents payout noise that is uncorrelated with every predictor:

Cov[Z, Y] = 0 and Cov[Xk , Y] = 0 for all k ∈ K. I will be writing σ_Y² rather than

σ_Y(e)² for clarity. However, the variance of Y must differ across market environments to

ensure a constant payout variance, σ_V² = 1.
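To see why the noise variance has to depend on the environment, one can take the variance of the payout rule in Equation (4). The worked step below is a sketch that relies only on the stated normalizations, Var[X_k] = 1 and Cov[X_k, Y] = 0, with σ_{k,k'}(e) the predictor covariances in environment e. The second sum runs over ordered pairs of distinct predictors.

```latex
\begin{aligned}
\mathrm{Var}[V]
  &= \sum_{k \in \mathcal{K}} \phi_k^2
   + \sum_{k \neq k'} \phi_k \, \phi_{k'} \, \sigma_{k,k'}(e)
   + \sigma_Y(e)^2 = 1 \\
\Longrightarrow \quad
\sigma_Y(e)^2
  &= 1 - \sum_{k \in \mathcal{K}} \phi_k^2
       - \sum_{k \neq k'} \phi_k \, \phi_{k'} \, \sigma_{k,k'}(e)
\end{aligned}
```

Because the payout coefficients are held fixed while the covariances σ_{k,k'}(e) change from one environment to the next, σ_Y(e)² must adjust to keep the total at one. This presumes the right-hand side is non-negative in every environment considered.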

To ensure researchers face a meaningful identification problem, some of the

predictors must be irrelevant for forecasting future payouts. If this were not the case,

then it would not be possible for a researcher to choose the wrong predictors.

Assumption C (Sparse Payout Rule). φk = 0 for all but A ≪ K active predictors. Let

A = { k ∈ K : φk > 0 } ⊂ K denote the active subset of predictors.

In addition, something about the payout rule must remain constant across market

environments. Otherwise a researcher would not be able to apply insights from one

market environment when making a prediction in another. Extrapolation is the whole

point of identification (Manski, 1995). So I assume that the same payout coefficients are

at work in all market environments.

2

To avoid having to worry about risky-asset prices that are negative or zero, which would make returns

ill-defined, I assume that the average risky-asset payout satisfies µV > √(2 · log N). This choice comes

from the scaling relationship E{max_{n=1,...,N} |ξn|} ∝ √(2 · log N) when ξn ∼ IID Normal[0, 1].

Assumption D (Fixed Payout Rule). The future payouts of the N risky assets in every

market environment are governed by the same coefficients, {µV , φ1 , . . . , φK }.

The investors in every market environment use the same linear rule to price the risky

assets:

$$ P(X_1, \ldots, X_K) = \lambda_0 - \sum_{k \in \mathcal{K}} \lambda_k \cdot X_k \tag{5} $$

Most asset-pricing models generate linear pricing rules of this sort. See Appendix B for

one example. The negative sign in Equation (5) stems from the convention that investors

dislike higher predictor values. I associate investors’ asset-pricing model with the correct

choice of pricing-rule coefficients implied by this model.

Definition 3 (Asset-Pricing Model). Each asset-pricing model m is defined by a

collection of pricing-rule coefficients, {λ0 , λ1 , . . . , λK }. Let M denote all such models.

3.4 Model Implementation

Investors may fail to implement their model correctly for two different reasons. First,

investors could apply the wrong coefficients. For example, suppose that investors

under-react to negative earnings surprises, Xk . When these investors see a high realization

of Xk , they do not reduce an asset’s price enough. In this scenario, investors would be

using λ̃k < λk . Let ∆[λk ] = λ̃k − λk denote the difference between the pricing-rule

coefficients that investors are using and the correct choice of coefficient implied by their

asset-pricing model.

Second, investors could plug in the wrong inputs. The nth asset realizes Xk = xn,k for

the kth predictor; however, suppose that investors calculate a value of x̃n,k for that asset.

If x̃n,k = xn,k , then investors use the correct level for that predictor. However, if investors

misread their data or make a coding error, then they may calculate the wrong level of

some predictor, x̃n,k ≠ xn,k . Let ∆[xn,k ] = x̃n,k − xn,k denote the difference between the

level of the kth predictor for the nth asset in investors’ eyes and its actual level.

For example, financial news sites typically report the S&P 500’s price growth,

d log pS&P500 , not its realized return, rS&P500 . And Hartzmark and Solomon (2020)

document that investors often fail to recognize the difference. Investors who made

this error would price the S&P 500 incorrectly because they applied the pricing-rule

coefficient for past returns to past price growth, x̃ = d log pS&P500 ≠ x = rS&P500 .

Or, consider Kansas City Value Line (KCVL) futures contracts. Prior to 1988, their

payout was a function of the average price growth across stocks. Unfortunately, many

investors mistakenly priced KCVL futures using the wrong kind of average (Ritter, 1996;

Thomas, 2002; Mehrling, 2011). They calculated x̃ = arithmetic average price growth

instead of x = geometric average price growth.
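A small numerical example shows the size and direction of this kind of input error. The four gross price relatives below are made-up values, not data from the KCVL episode, and the helper names are hypothetical.

```python
import math

def arithmetic_mean(ratios):
    """Simple average of gross price relatives."""
    return sum(ratios) / len(ratios)

def geometric_mean(ratios):
    """Geometric average, computed via logs for numerical stability."""
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))

# Hypothetical gross price relatives (p_t / p_{t-1}) for four stocks
ratios = [1.10, 0.95, 1.02, 0.90]

x_tilde = arithmetic_mean(ratios) - 1.0  # the input mistaken investors used
x = geometric_mean(ratios) - 1.0         # the input the payout depended on
gap = x_tilde - x                        # the input error, Delta[x]

print(f"arithmetic: {x_tilde:.5f}, geometric: {x:.5f}, gap: {gap:.5f}")
```

By the AM-GM inequality the arithmetic average is never below the geometric average, so the gap is non-negative whenever prices move by different amounts. An investor making this error therefore overstates the index's price growth.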

Definition 4 (Model Implementation). An implementation, i, is defined by an error

operator, ∆i , which describes how the pricing-rule coefficients used by investors and the

realized predictor levels that investors calculate differ from the correct values. I use

i = ⋆ to denote the rational benchmark:

$$ \Delta_\star[\lambda_k] = \Delta_\star[x_{n,k}] = 0 \tag{6} $$

Any other implementation requires investors to make at least one error.

Investors are worse off whenever they deviate from this rational benchmark. Using

the wrong coefficients and/or applying the right coefficients to the wrong predictor levels

will distort their portfolio holdings. And there is no way to improve investors’ welfare by

adjusting their portfolio holdings away from the rational-expectations equilibrium. This

is why investors would prefer to implement their asset-pricing model correctly.

3.5 Omitted-Variables Problem

The researcher sees the data produced by investors who priced assets in a particular

empirical setting. The researcher does not know which model the investors used.

Unfortunately, the researcher also does not know about all of the predictors in investors’

information set. Thus, the researcher can only assess whether the empirical setting he

observes could, in principle, be consistent with different kinds of models.

Definition 5 (Consistent Models). The researcher’s empirical setting is consistent with

model m under implementation i if the empirical density π̂R is covariance equivalent to

the PDF defined over the same variables that is entailed by implementing model m

in some empirical environment, e ∈ E. Let MR (i) ⊆ M denote the set of all models

consistent with the researcher’s data under implementation i ∈ I.

M_R^1(i) = { m ∈ M_R(i) : λ1 > 0 } is the subset of all consistent models under

implementation i that include the predictor that the researcher is interested in, X1 .

The researcher’s empirical setting identifies whether X1 is part of investors’ true model if X1

is either active in every consistent model or inactive in every consistent model.

Definition 6 (Identified Setting). The researcher’s empirical setting identifies whether

X1 is part of investors’ true model under implementation i if every consistent model

contains predictor X1 or no consistent model contains X1 :

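$$
\mathcal{M}_R^1(i) =
\begin{cases}
\mathcal{M}_R(i) & \text{if } \lambda_1 > 0 \\
\emptyset & \text{if } \lambda_1 = 0
\end{cases}
\tag{7}
$$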

Lemma 1 (Identified Setting ⇔ No Correlated Omitted Variables). A setting identifies

whether X1 is part of investors’ model under implementation i if and only if investors do

not know about any correlated omitted variables: Cov[X1 , Xk ] = 0 for all k ∈ K I \ K R .

If there exists at least one identified setting under implementation i, then it is

possible (at least in principle) for a researcher to identify whether investors are using X1

to price assets. But it may be possible that no identified setting exists depending on what

the researcher assumes about how investors implement their chosen model.

Below I give a formal definition of the omitted-variables problem that the researcher

is trying to solve. Here is the idea. Suppose that investors use some model m ∈ M

to price risky assets in some market environment e ∈ E under implementation i.

This will entail a probability distribution. A researcher observes the corresponding

empirical density for a subset of variables, π̂R . Based on this information and the correct

assumption about how investors implemented their model, the researcher must decide

whether the setting identifies whether X1 is part of investors’ model.

Definition 7 (Omitted-Variables Problem).

(Setting) An empirical density, π̂R , that was generated by investors using a

particular model m ∈ M to price the risky assets in a specific market environment

e ∈ E under implementation i.

(Query) Does the setting identify whether X1 is part of investors’ model?

An asset-pricing researcher can answer with “yes”, “no”, or “I do not know”. His

answer is correct if he says “yes” when the empirical setting is identified or “no” when it

is not. His answer is incorrect if he says “yes” when the setting is unidentified or “no”

when it is identified. An identification strategy is a rule that tells the researcher which

answer to give for a particular setting.

Definition 8 (Valid Identification Strategy). A valid identification strategy never gives

an incorrect answer in any setting and correctly answers “yes” in at least one setting.

There are many different kinds of identification strategies. None of them works in all

settings. However, each of them gives conditions such that, if a particular empirical

setting satisfies those conditions, the researcher can conclude that the setting is identified.

For example, instrumental variables is a valid identification strategy. Not every market

setting contains an instrument, but some do. And the potential-outcomes framework lays

out criteria that need to be satisfied by any such instrument. If those criteria are satisfied,

then the researcher will never falsely think that his empirical setting is identified.
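The two requirements in Definition 8 can be written as a small check. The sketch below is only an illustration: the `Setting` class, the `has_instrument` flag, and the three toy strategies are hypothetical names that do not appear in the paper, and the example assumes that a setting containing an instrument is in fact identified.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Setting:
    name: str
    identified: bool      # ground truth; the researcher never observes this directly
    has_instrument: bool  # hypothetical observable condition that a strategy can check


# A strategy maps a setting to "yes", "no", or "I do not know".
Strategy = Callable[[Setting], str]


def is_valid(strategy: Strategy, settings: Sequence[Setting]) -> bool:
    """Definition 8: never incorrect, and correctly says "yes" at least once."""
    answers = [(strategy(s), s.identified) for s in settings]
    never_wrong = all(
        not (a == "yes" and not truth) and not (a == "no" and truth)
        for a, truth in answers
    )
    some_correct_yes = any(a == "yes" and truth for a, truth in answers)
    return never_wrong and some_correct_yes


def cautious(s: Setting) -> str:
    # Says "yes" only when its sufficient condition holds; otherwise abstains.
    return "yes" if s.has_instrument else "I do not know"


def always_yes(s: Setting) -> str:
    return "yes"


def always_unsure(s: Setting) -> str:
    return "I do not know"


# Toy universe of settings (assumed for illustration only).
SETTINGS = [
    Setting("A", identified=True, has_instrument=True),
    Setting("B", identified=True, has_instrument=False),
    Setting("C", identified=False, has_instrument=False),
]

print(is_valid(cautious, SETTINGS))       # abstains in B and C, correct "yes" in A
print(is_valid(always_yes, SETTINGS))     # wrong in C
print(is_valid(always_unsure, SETTINGS))  # never wrong, but never says "yes"
```

Under these assumptions only the cautious strategy is valid. A strategy that always abstains is never wrong but fails the second requirement, which is why a valid strategy must work in at least one setting even though none works in all of them.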

Small stocks tend to be more illiquid in this world, Cov[LIQ, SIZE] > 0. Suppose

that investors are pricing assets based on liquidity (Amihud, 2002) but not size (Banz,

1981), and consider an asset-pricing researcher living in 1994. This researcher exists

at a time after the size effect has been widely publicized but before liquidity has

gained traction in the academic research. So, even though liquidity and size are highly

correlated, the researcher has never thought to use liquidity as a predictor.

This researcher regresses the cross-section of returns on SIZE. Since small stocks

tend to be more illiquid, the researcher finds evidence of a size effect, b̂ > 0. But the

researcher also knows that there will be future discoveries coming down the pipeline. So

he recognizes that his results might be due to an omitted variable. He does not yet know

about LIQ as a predictor in 1994, but he does recognize that future researchers might

discover a variable, such as liquidity, which seems to account for much of the size effect.
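A short simulation may make the 1994 researcher's problem concrete. This is a sketch under assumed numbers, not anything estimated in the paper: the correlation `rho`, the price of liquidity `lam_liq`, and the linear return equation are all illustrative assumptions. Investors price LIQ only, yet a regression on SIZE alone still produces a positive slope.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000
rho = 0.6       # assumed Cov[LIQ, SIZE] with unit-variance predictors
lam_liq = 0.5   # assumed loading on liquidity; the loading on SIZE is zero

size = rng.standard_normal(n)
liq = rho * size + np.sqrt(1 - rho**2) * rng.standard_normal(n)
ret = lam_liq * liq + rng.standard_normal(n)  # investors price LIQ only


def ols_slopes(y, *xs):
    """Slope coefficients from an OLS regression of y on a constant and xs."""
    X = np.column_stack([np.ones_like(y), *xs])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1:]


# What the 1994 researcher can run: returns on SIZE alone.
b_hat = ols_slopes(ret, size)[0]

# What a later researcher can run once LIQ has been discovered.
b_ctrl, l_hat = ols_slopes(ret, size, liq)

print(f"SIZE slope without LIQ: {b_hat:.3f}")   # close to lam_liq * rho
print(f"SIZE slope with LIQ:    {b_ctrl:.3f}")  # close to zero
print(f"LIQ slope:              {l_hat:.3f}")   # close to lam_liq
```

The first slope is close to `lam_liq * rho` rather than zero. Nothing in the 1994 data distinguishes this world from one where SIZE itself is priced, which is the omitted-variables problem stated above.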

To solve this researcher’s omitted-variables problem, you must come up with a valid

identification strategy that the researcher could use in 1994 to determine whether or not

investors were pricing assets based on SIZE. Since we are assuming that the researcher

has already orthogonalized SIZE with respect to every observable predictor in each

empirical setting, this amounts to giving conditions under which the researcher can be

sure that SIZE is uncorrelated with every other as-yet-undiscovered predictor that

investors know about. If the researcher encounters such a setting, he will be able to

identify that SIZE is not part of investors’ model.

4 Main Result

This section describes how to use a special kind of investor error, called a “cognitive

error”, to solve this omitted-variables problem. The trick is to define a cognitive error

about X1 in such a way that otherwise intelligent investors will only fail to recognize

their error in empirical settings where they do not know about any correlated omitted

variables. That way, when a researcher encounters an empirical setting where investors

fail to correct a cognitive error about X1 , he can be absolutely sure that these investors

are not aware of any potential confounds—i.e., that the setting is identified.

4.1 Key Assumption

The logic outlined above hinges on one key assumption: while investors sometimes

make mistakes, they are not completely foolish. Investors are aware that they could be

implementing their model incorrectly. They are aware that they may be using some

∆i ≠ ∆⋆ . However, they do not initially know the nature of their mistake.

After assets are priced but before payouts are realized, investors get a chance to

correct their initial error. Investors know how prices and predictors should be distributed

under the rational benchmark, πI (⋆). Their economic intuition tells them that they

should observe this entailed distribution. Investors can also inspect the empirical density

of prices and predictors for the N risky assets in their setting, π̂I (i).

Investors look for evidence that they are using some implementation ∆i ≠ ∆⋆ by

comparing this entailed distribution, πI (⋆), to the observed empirical density, π̂I (i).

Since N is arbitrarily large, there will be no mismatch between these two distributions

under the rational benchmark, i = ⋆. In essence, investors ask themselves: “If I take my

model seriously, then I should see such and such. When I look at the way I just priced

assets, do I see that this prediction actually holds up?”

Assumption ♠ (Investors Are Otherwise Intelligent). Investors recognize that ∆i ≠ ∆⋆

in every empirical setting where the entailed distribution, πI (⋆), and the empirical

density, π̂I (i), are not covariance equivalent given investors’ information set.

Whenever the investors in a particular empirical setting recognize that they were

using ∆i ≠ ∆⋆ , they implement their model using i = ⋆, and the researcher sees

market data that were produced under the rational benchmark in the second stage.

Like in Tirole (2009), if the entailed distribution, πI (⋆), and the empirical density,

π̂I (i), are not covariance equivalent given investors’ information set, it is an “eye

opener”. Investors immediately recognize their error and adjust prices to reflect the

rational benchmark. A researcher studying an empirical setting in which investors have

recognized and corrected an initial implementation error will never see any of its effects.

Otherwise intelligent investors may not always be able to spot an implementation

error, though. This will happen whenever investors’ information set is not fine enough to

distinguish between πI (⋆) and π̂I (i). The key insight in this paper is that, when this

happens, it reveals something about what investors did not know. They must not have

known about anything which would have allowed them to tell that πI (⋆) and π̂I (i) were

not covariance equivalent. Importantly, this constraint on what investors must not have

known is independent of what researchers currently do know.

4.2 Cognitive Errors

I operationalize this insight by defining a special kind of error about X1 , called a

“cognitive error”, that investors only fail to recognize when they are not aware of any

correlated omitted variables. In other words, a cognitive error is defined in such a way

that investors could use any omitted variable that a researcher would classify as a

confounding explanation for error correction purposes.

Definition 9 (Cognitive Error). When investors make a cognitive error about X1 , they

implement their model using an error operator defined as ∆[λk ] = 0 for all k ≥ 0,

$$
\Delta[x_{n,1}] = \left(\sqrt{1-\theta} - 1\right) \cdot x_{n,1} + \sqrt{\theta} \cdot z_n \quad \text{for some } \theta \in [0, 1) \tag{8}
$$

and ∆[xn,k ] = 0 for all k > 1. When investors recognize a cognitive error, they set θ = 0.

When investors make a cognitive error about X1 , it is as if they are unknowingly

anchoring on the nuisance variable Z à la Tversky and Kahneman (1974). Investors are

not directly aware of this variable, but it still seeps into their decision-making process.

Larger values of θ correspond to more severe anchoring. In the limit as θ → 1,

investors incorrectly calculate x̃n,1 = zn even though X1 and Z are entirely unrelated,

Cov[X1 , Z] = 0. By contrast, if θ = 0, investors are not making an error at all or they

have recognized and corrected their mistake.

For example, when asked, home buyers claim that their beliefs about future

house-price growth are not affected by their out-of-state friends’ past experiences in the

housing market. Nevertheless, their distant friends’ housing-market experiences do

distort their beliefs (Bailey, Cao, Kuchler, and Stroebel, 2018). When their distant

friends’ home prices have risen more, they unwittingly anchor their beliefs about future

house-price growth in their own city on this good reference point.

Suppose that investors know about some other predictor, Xk , that is correlated with

X1 in their empirical setting, σ1,k (e) ≠ 0. If investors are making a cognitive error about

X1 , then this error will create a mismatch between the covariance entailed by the rational

benchmark, σ1,k (e), and the covariance they observe in their data:

$$
\widehat{\mathrm{Cov}}[\tilde{x}_1, x_k] = \sqrt{1-\theta} \cdot \sigma_{1,k}(e) < \sigma_{1,k}(e) \tag{9}
$$

A cognitive error about X1 will deflate its covariance with any other correlated predictor.

Thus, otherwise intelligent investors will only ever fail to correct a cognitive error about

X1 in empirical settings where they do not know about any correlated predictors.
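The two properties of the error operator in Equation (8), an unchanged variance and a deflated covariance, can be checked numerically. The sketch below assumes the distorted predictor is x̃ = x + ∆[x], standard-normal draws, and a nuisance variable Z that is independent of both X1 and Xk. The values of `theta` and `sigma_1k` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 200_000
theta = 0.36     # assumed severity of the cognitive error
sigma_1k = 0.5   # assumed covariance between X1 and Xk under the rational benchmark

x1 = rng.standard_normal(n)
xk = sigma_1k * x1 + np.sqrt(1 - sigma_1k**2) * rng.standard_normal(n)
z = rng.standard_normal(n)  # nuisance variable, unrelated to X1 and Xk


def apply_cognitive_error(x1, z, theta):
    """Return x1 + Delta[x1], with Delta[x1] as in Equation (8)."""
    delta = (np.sqrt(1 - theta) - 1) * x1 + np.sqrt(theta) * z
    return x1 + delta


x1_tilde = apply_cognitive_error(x1, z, theta)

var_tilde = x1_tilde.var()
cov_tilde = np.cov(x1_tilde, xk)[0, 1]

print(f"Var[x1_tilde]     = {var_tilde:.3f}")  # stays close to 1
print(f"Cov[x1_tilde, xk] = {cov_tilde:.3f}")  # close to sqrt(1 - theta) * sigma_1k
print(f"sqrt(1-theta)*sigma_1k = {np.sqrt(1 - theta) * sigma_1k:.3f}")
```

The variance gives investors no signal that anything is wrong. Only the covariance with a correlated predictor shrinks, so an investor who knows about Xk can compare the observed covariance with σ1,k (e) and spot the error.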

Cognitive errors do not affect predictor variances, Var[Xk ] = 1 for all k. Cognitive

errors also do not affect any covariances that do not involve X1 . However, these two

additional assumptions are not essential to the results in this paper. The key thing is that

investors must be able to spot the error in any empirical setting where they are aware of

at least one correlated predictor.

Suppose that a researcher knows that investors have failed to correct a cognitive error

about X1 in his empirical setting. The following proposition details how he can exploit

this knowledge for identification purposes.

Proposition 1 (Valid Identification Strategy). If investors have failed to correct a

cognitive error about X1 in an empirical setting, then the setting is identified.

Moreover, the following protocol represents a valid identification strategy. Regress

the cross-section of returns on the Z associated with the cognitive error:

rn = ĉ + d̂ · zn + ε̂n (10)

X1 is part of investors’ model if d̂ > 0. It is not part of investors’ model if d̂ = 0.
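The protocol in Proposition 1 can be sketched in a simulation. The return equation below is an assumption made for illustration: returns load linearly on the distorted predictor x̃1 with coefficient `lam_1` and on a second predictor that is uncorrelated with X1 and unknown to the researcher. The function name and all parameter values are hypothetical.

```python
import numpy as np


def estimate_d_hat(lam_1, theta=0.25, lam_2=0.3, n=200_000, seed=2):
    """Slope from regressing simulated returns on the nuisance variable Z."""
    rng = np.random.default_rng(seed)
    x1 = rng.standard_normal(n)
    x2 = rng.standard_normal(n)  # uncorrelated predictor the researcher does not observe
    z = rng.standard_normal(n)   # nuisance variable behind the cognitive error

    # Uncorrected cognitive error about X1, following Equation (8).
    x1_tilde = np.sqrt(1 - theta) * x1 + np.sqrt(theta) * z

    # Assumed linear pricing rule; lam_1 = 0 means X1 is not in investors' model.
    r = lam_1 * x1_tilde + lam_2 * x2 + rng.standard_normal(n)

    d_hat, _c_hat = np.polyfit(z, r, deg=1)  # coefficients come back slope first
    return float(d_hat)


d_priced = estimate_d_hat(lam_1=0.5)
d_unpriced = estimate_d_hat(lam_1=0.0)

print(f"d_hat when X1 is priced:     {d_priced:.3f}")    # close to lam_1 * sqrt(theta)
print(f"d_hat when X1 is not priced: {d_unpriced:.3f}")  # close to zero
```

Z can only reach returns through investors' distorted view of X1, so in this sketch the slope is close to `lam_1 * sqrt(theta)` when X1 is priced and close to zero when it is not. In a finite sample, the condition d̂ = 0 has to be read as a statistical test and not as an exact equality.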

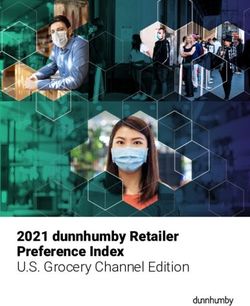

[Plot: Cumulative HML Returns]

Figure 1. Cumulative returns to Fama and French (1993)’s high-minus-low (HML)

book-to-market factor, FHML , using monthly data from July 1926 to November 2020.

y-axis is on a logarithmic scale. Percentages in white are average monthly returns to the

HML factor during different time windows.

Notice that the logic behind this identification strategy converts a portion of the

existing behavioral-finance literature into a cache of instruments. Researchers should not

view the behavioral literature as an attack on rational models. Instead, this literature is a

collection of tools for identifying how mostly rational investors price assets.

Cognitive errors offer a way to halt the explanation cycling that has slowed progress in the field: size matters (Banz, 1981); no, the size effect is dead (Berk, 1995); actually, size still matters when controlling for asset quality (Asness, Frazzini, Israel, Moskowitz, and Pedersen, 2018); etc. Once a researcher uses a cognitive error to identify X1 , he no longer has to worry about his result being explained away by an omitted variable that will be discovered by some future researcher.3

3

This does not mean that the researcher’s results would be infallible. In practice, the researcher would still have to worry about estimation error, mis-specification, etc. N is not arbitrarily large in the real world.

5 Some Applications

Do investors price value stocks differently than growth stocks because they care about the value-vs-growth distinction (Subsection 5.1)? Do home buyer beliefs about future house-price growth affect how these investors price homes (Subsection 5.2)? Are momentum profits the result of investors making pricing decisions based on realized gains (Subsection 5.3)? This section shows how to answer these questions using cognitive errors taken from the existing behavioral-finance literature.

Along the way, I point out promising places to look for more cognitive errors. But

for the same reasons that there is no mechanical recipe for finding instrumental variables

(Angrist and Krueger, 2001), I can offer no such recipe for finding cognitive errors.

Finding instruments involves creativity. So does finding cognitive errors.

5.1 Label Changes

Does the value premium exist because investors prefer growth stocks? This

subsection shows how to answer this question using a cognitive error taken from Boyer

(2011). The cognitive error is based on a quirk in how S&P/Barra constructed its value-

and growth-stock indexes.

Identification Problem

A portfolio that is long high book-to-market (B/M) stocks and short low B/M stocks

(Fama and French, 1993) has an average excess return of b̂ = 0.44 ± 0.8% per month

during the period from July 1926 to November 2020 as shown in Figure 1.4 This finding

is consistent with the hypothesis that investors are willing to pay more for growth stocks.

But growth stocks also tend to be younger, less-profitable firms. Both of these

variables represent potential confounds. Either of them could be the true explanation for

the 44bps per month value premium in Figure 1.

You cannot solve this identification problem by simply controlling for firm age,

profitability, etc. using more sophisticated econometric techniques. These methods allow

you to control for the observed predictors in the existing academic literature. But you

cannot solve an omitted-variables problem by better controlling for observables.

You also cannot solve this identification problem by writing down a fancy theory.

The challenge is to identify what investors are actually doing, not what they theoretically

could be doing (Chinco, Hartzmark, and Sussman, 2020). If you tested an additional

theoretical prediction and found that it was satisfied in the data, the result would be

consistent with your new story. But it would not represent unique identifying evidence

that could only be explained this way.

4

The data come from Ken French’s website. https://mba.tuck.dartmouth.edu/pages/faculty/ken.french/Data_Library/f-f_factors.html.

Cognitive Error

To identify whether investors care about the value-vs-growth distinction when

pricing assets, a researcher must find an empirical setting in which stocks sometimes

change from being labeled as value to being labeled as growth in investors’ minds for

reasons unrelated to any other variables that investors know about.

Boyer (2011) documents a cognitive error that can be used for this purpose.

S&P/Barra calls any S&P 500 stock with a book-to-market (B/M) above a certain

threshold a “value stock”. They label every other S&P 500 stock as a “growth stock”.

When S&P/Barra increases this threshold, a value stock with an unchanged B/M can

get reclassified as a growth stock. The same thing in reverse can happen to growth stocks.

From 1992 to 2004, there were 54 instances where S&P/Barra reclassified an

S&P 500 stock from value to growth or vice versa when its B/M ratio had actually

moved in the opposite direction. Boyer (2011) shows that investors respond to these

reclassifications in the same way as every other reclassification, Sign[b̂] = Sign[d̂]. It is

as if investors’ mental calculation of a stock’s valueness or growthiness is anchored on

S&P/Barra’s uninformative label.

To construct an identified setting, collect two observations about each of the 54 firms

that were reclassified due to a change in S&P/Barra’s value-vs-growth threshold: one

before the reclassification and one immediately after. The only difference between these

two observations would be how investors perceive the firm’s B/M in each instance.

Let Z denote an indicator variable such that:

$$
Z = \sqrt{2} \times
\begin{cases}
+1 & \text{if post-reclassification from growth to value} \\
0 & \text{if pre-reclassification} \\
-1 & \text{if post-reclassification from value to growth}
\end{cases}
\tag{11}
$$

The factor of √2 ensures that Var[Z] = 0.25 · (√2)² + 0.50 · 0² + 0.25 · (−√2)² = 1, and the parameter θ ∈ [0, 1) captures the salience of S&P/Barra’s labeling to investors’ mental calculations. Table 2 in Boyer (2011) documents that √θ · Z ∈ {−30, 0, +30}bps, which would imply a θ = 0.30²/2 ≈ 0.05.
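The arithmetic behind the normalization and the implied θ can be reproduced in a few lines. This sketch mirrors the calculation in the text: it uses the probabilities 0.25, 0.50, and 0.25 from the variance formula and, like the text, treats the 30bps effect as 0.30. The variable names are illustrative.

```python
import math

scale = math.sqrt(2)

# Probabilities of each indicator value, taken from the variance formula in the text.
outcomes = {+1: 0.25, 0: 0.50, -1: 0.25}

mean_z = sum(p * scale * v for v, p in outcomes.items())
var_z = sum(p * (scale * v - mean_z) ** 2 for v, p in outcomes.items())

# sqrt(theta) * sqrt(2) = 0.30 implies theta = 0.30**2 / 2.
effect = 0.30
theta = effect**2 / 2

print(f"E[Z]   = {mean_z:.3f}")
print(f"Var[Z] = {var_z:.3f}")
print(f"theta  = {theta:.3f}")
```

The implied θ of 0.045 rounds to the 0.05 reported above, so the S&P/Barra label accounts for a small share of investors' perceived valueness in this setting.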

Results/Implications

Proposition 1 says to regress the cross-section of 108 return observations in this

empirical setting on Z:

rn = ĉ + d̂ · zn + ε̂n observations n = 1, . . . , 108 (12)

If the estimated slope coefficient from Equation (12) is non-zero, d̂ > 0, then this would

identify that the value premium was due to investors’ preference for growth stocks. If

investors were aware of any confounding explanations, then they would have stopped

anchoring their perceptions of valueness and growthiness on S&P/Barra’s labels. Given

such an identifying result, you would not have to worry about a future PhD student

proposing an alternative explanation for your result.

Classification mistakes and arbitrary label changes are an important cache of

cognitive errors for future researchers to mine. For example, there are numerous papers

looking at how changes in mutual-fund style labels often do not reflect changes in their

actual holdings (Shleifer, 1986; Barberis, Shleifer, and Wurgler, 2005; Cooper, Gulen,

and Rau, 2005; Sensoy, 2009). Companies can also be mislabeled in uninformative ways

(Cooper, Dimitrov, and Rau, 2001; Rashes, 2001; Hartzmark and Sussman, 2019). And

analysts sometimes make important labeling errors (Chen, Cohen, and Gurun, 2019;

Ben-David, Li, Rossi, and Song, 2020). In the past, researchers have viewed situations

where this sort of uninformative labeling affects asset prices as demonstrations of market

inefficiency. This paper shows they are also more than that; they represent opportunities

to better understand how investors price assets.

5.2 Social Connections

Do home buyer beliefs about future house-price growth affect how these investors

price homes today? This subsection shows how to answer this question using a cognitive

error taken from Bailey, Cao, Kuchler, and Stroebel (2018) involving peer effects.

Identification Problem

Cities with higher current house prices also tend to have higher future house-price

growth. For example, in November 2000, the price of a typical house in Detroit was

$150k; whereas, the typical price in Los Angeles was $253k. Over the subsequent two

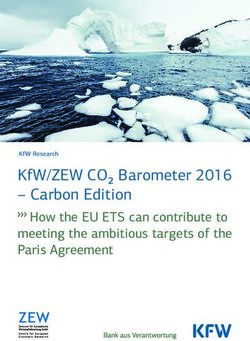

22Price vs. Future Appreciation Rate

$400k San Francisco

$300k

York SanSeattle

Boston

New DiegoLos Angeles

Denver

Washington

$200k Sacramento

Chicago Minneapolis Portland

Detroit Atlanta

CharlotteBaltimore

Phoenix Riverside

Philadelphia

Houston Dallas Miami

St Louis Tampa

$100k Pittsburgh

2% 3% 4% 5%

Figure 2. Relationship between the November 2000 house-price level in the largest 25

US cities (y-axis; log scale; dependent variable in Equation 13) and the house-price

appreciation rate from December 2000 to November 2020 in these cities (x-axis;

annualized; RHS variable in Equation 13).

decades, while house prices only grew at a rate of 1.32% per year in Detroit, they grew

at a rate of 5.45% per year in Los Angeles.5

This pattern generalizes to other cities as illustrated by Figure 2. When I regress the

log price level in each of the 25 largest US cities as of November 2000, log pn , on the

annualized house-price growth rate over the next 20 years, xn , I find that:

$$
\log p_n = \hat{a} + \underset{[0.06]}{0.14} \cdot x_n + \hat{\varepsilon}_n \tag{13}
$$

This regression says cities with 1% per year higher growth rates in the future tended to

have current price levels that were b̂ = 14 ± 6% higher on average.

This point estimate is consistent with the hypothesis that investors consider future

house-price growth when deciding how much to pay for a home today. This makes

intuitive sense. If the price of a house will rise in the future, whoever buys the house will

pocket these gains. Knowing this, every potential buyer should be willing to pay a bit

more for the house right now. Home buyers in standard models à la Poterba (1984) all

think this way (e.g., see Case and Shiller, 2003; Himmelberg, Mayer, and Sinai, 2005).

However, home buyers in the real world do not have to think this way. The same sort of reasoning says that a homeowner who is currently underwater on his mortgage should default when the expected gains from future house-price growth are less than the present value of his future mortgage payments. And we know most homeowners do not exercise this default option correctly (Deng, Quigley, and Van Order, 2000), which suggests real-world investors are not guaranteed to think about the present value of future house-price changes like a textbook investor would.

5

These data come from Zillow Research. See https://www.zillow.com/research/data/.

Moreover, in the same way that risk-factor betas are not exogenous in the stock

market (Campbell and Mei, 1993), home buyers’ beliefs about future house-price growth

are not exogenous either. We can all think of lots of reasons besides higher future

house-price growth why you might want to pay more for a house in Los Angeles than in

Detroit. In short, this does not represent an identified setting.

Cognitive Error

Solving this omitted-variables problem requires finding an empirical setting with

variation in perceived future house-price growth rates that is unrelated to any other

variable that home buyers might know about. Bailey, Cao, Kuchler, and Stroebel (2018)

suggests a cognitive error based on social connections.

Suppose Alice and Bob are home buyers living in Phoenix. The only difference

between these two otherwise identical people is that Alice has a bunch of Facebook

friends in Los Angeles (high appreciation) while most of Bob’s friends live in Detroit

(low appreciation). Bailey, Cao, Kuchler, and Stroebel (2018, Table 10) shows that, due

to the influence of her Facebook connections, Alice’s beliefs about house-price growth in

Phoenix will be higher than Bob’s. This belief distortion represents a cognitive error.

Differences in where Alice and Bob’s Facebook friends live can have nothing to do with

underlying housing-market conditions in Phoenix.

Let X1 denote the correct belief about the future house-price growth rate in a

particular city. Alice and Bob should both have beliefs of x1 if they were not making any

errors. Let x̃n,1 denote the distorted beliefs about future house-price growth rates held by

a particular home buyer, n ∈ {Alice, Bob, Charles, Diane, . . .}.

Define Z to be the average house-price growth experienced by a home buyer’s

Facebook friends who live in some other city. For Alice and Bob, this is the house-price

growth experienced by their Facebook friends who live anywhere but Phoenix. Assume

this anchor has been normalized to have mean zero and unit variance across buyers,

E[Z] = 0 and Var[Z] = 1. The parameter θ ∈ (0, 1) captures the strength of home

buyers’ Facebook connections. Higher θ means they check Facebook more often.

This cognitive error makes it possible to create an identified setting for testing

whether home buyer beliefs about future house-price growth affect current prices. This

empirical setting contains home buyers, like Alice and Bob, who live in the same place

and only differ in the past house-price growth enjoyed by their Facebook friends living in

other cities. Bailey, Cao, Kuchler, and Stroebel (2018) use this empirical setting to

document that these social connections influence a home buyer’s willingness to pay.

However, by extension, the authors’ results also imply something important about the

model that home buyers use to price houses.

Results/Implications

Bailey, Cao, Kuchler, and Stroebel (2018, Table 9) reports the results of the following

specification. The authors regress the log price paid by the nth home buyer on the

house-price growth experienced by this home buyer’s Facebook friends living in some

other city, zn . This is the exact specification in Proposition 1 written in terms of prices

rather than realized returns. The estimated slope coefficient implies that, if Alice’s

Facebook friends in other cities had experienced 10% higher house-price growth, Alice

would have paid 4.5 ± 1.5% more than Bob.

The authors interpret this as evidence that social connections affect housing-market

outcomes. And it is. But it is also more than that. By showing that Facebook connections

distort house prices via their effect on beliefs about future house-price growth rates,

Bailey, Cao, Kuchler, and Stroebel (2018) also identify home buyers’ beliefs about future

house-price growth rates as an input to these investors’ model. They confirm that home

buyers’ beliefs about future house-price growth directly affect home prices today.

The literature on peer effects and social connections represents a natural place

to look for cognitive errors going forward (Shiller, 1984; Shiller and Pound, 1989;

Kuchler and Stroebel, 2020; Hirshleifer, 2020). This literature is concerned about

whether a result can be explained by homophily rather than social influence (McPherson,

Smith-Lovin, and Cook, 2001; Jackson, 2010). To show that an effect is not just a case of

birds of a feather flocking together, an asset-pricing researcher must show that there is no

unobserved fundamental shock around which to organize a flock. In short, identifying

that a change in beliefs was due to peer effects requires a cognitive error.
