SemEval-2021 Task 6: Detection of Persuasion Techniques in Texts and Images


Dimitar Dimitrov,¹ Bishr Bin Ali,² Shaden Shaar,³ Firoj Alam,³ Fabrizio Silvestri,⁴ Hamed Firooz,⁵ Preslav Nakov,³ and Giovanni Da San Martino⁶

¹Sofia University “St. Kliment Ohridski”, Bulgaria, ²King’s College London, UK, ³Qatar Computing Research Institute, HBKU, Qatar, ⁴Sapienza University of Rome, Italy, ⁵Facebook AI, USA, ⁶University of Padova, Italy

mitko.bg.ss@gmail.com, bishrkc@gmail.com

{sshaar, fialam, pnakov}@hbku.edu.qa, mhfirooz@fb.com

fsilvestri@diag.uniroma1.it, dasan@math.unipd.it

Abstract

We describe SemEval-2021 task 6 on Detection of Persuasion Techniques in Texts and Images: the data, the annotation guidelines, the evaluation setup, the results, and the participating systems. The task focused on memes and had three subtasks: (i) detecting the techniques in the text, (ii) detecting the text spans where the techniques are used, and (iii) detecting techniques in the entire meme, i.e., both in the text and in the image. It was a popular task, attracting 71 registrations, and 22 teams eventually made an official submission on the test set. The evaluation results for the third subtask confirmed the importance of both modalities, the text and the image. Moreover, some teams reported benefits when they did not just combine the two modalities, e.g., by using early or late fusion, but instead modeled the interaction between them in a joint model.

WARNING: This paper contains meme examples and wording that might be offensive to some readers.

Figure 1: A meme with a civil war threat during President Trump’s impeachment trial. Two persuasion techniques are used: (i) Appeal to Fear in the image, and (ii) Exaggeration in the text. Source(s): Image; License

1 Introduction

The Internet and social media have amplified the impact of disinformation campaigns. Such campaigns were traditionally a monopoly of states and large organizations. They are now within the reach of even small organisations and individuals (Da San Martino et al., 2020b).

Such propaganda campaigns are often carried out using posts spread on social media, with the aim of reaching a very large audience. The rhetorical and psychological devices that constitute the basic building blocks of persuasive messages have been thoroughly studied (Miller, 1939; Weston, 2008; Torok, 2015). However, only a few isolated efforts have been made to devise automatic systems to detect them (Habernal et al., 2017, 2018; Da San Martino et al., 2019b).

Thus, in 2020, we proposed SemEval-2020 task 11 on Detection of Persuasion Techniques in News Articles, with the aim of helping to bridge this gap (Da San Martino et al., 2020a). That task focused on text only. Yet, some of the most influential posts in social media use memes, as shown in Figure 1.¹ In such memes, visual cues are used along with text as a persuasive vehicle to spread disinformation (Shu et al., 2017). During the 2016 US Presidential campaign, malicious users in social media (bots, cyborgs, trolls) used such memes to provoke emotional responses (Guo et al., 2020).

In 2021, we introduced a new SemEval shared task. For it, we prepared a multimodal corpus of memes annotated with a set of techniques that extends the one used in SemEval-2020 task 11. This time, we annotated the text of the memes, highlighting the spans in which each technique has been used. We also annotated the techniques appearing in the visual content of the memes.

¹ In order to avoid potential copyright issues, all memes we show in this paper are our own recreations of existing memes, using images with clear copyright.


Proceedings of the 15th International Workshop on Semantic Evaluation (SemEval-2021), pages 70–98, Bangkok, Thailand (online), August 5–6, 2021. ©2021 Association for Computational Linguistics

Based on our annotations, we offered the following three subtasks:

Subtask 1 (ST1) Given the textual content of a meme, identify which techniques (out of 20 possible ones) are used in it. This is a multilabel classification problem.

Subtask 2 (ST2) Given the textual content of a meme, identify which techniques (out of 20 possible ones) are used in it, together with the span(s) of text covered by each technique. This is a multilabel sequence tagging task.

Subtask 3 (ST3) Given a meme, identify which techniques (out of 22 possible ones) are used in the meme, considering both the text and the image. This is a multilabel classification problem.

A total of 71 teams registered for the task. Of these, 22 made an official submission on the test set, and 15 of the participating teams submitted a system description paper.

2 Related Work

Propaganda Detection Previous work on propaganda detection has focused on analyzing textual content (Barrón-Cedeño et al., 2019; Da San Martino et al., 2019b; Rashkin et al., 2017). See (Martino et al., 2020) for a recent survey on computational propaganda detection.

Rashkin et al. (2017) developed the TSHP-17 corpus, which had document-level annotations with four classes: trusted, satire, hoax, and propaganda. Note that TSHP-17 was labeled using distant supervision, i.e., all articles from a given news outlet were assigned the label of that news outlet. The news articles were collected from the English Gigaword corpus (which covers reliable news sources), as well as from seven unreliable news sources, including two propagandistic ones. They trained a model using word n-grams. They reported that it performed well only on articles from sources that the system was trained on, and that the performance degraded quite substantially on articles from unseen news sources.

Barrón-Cedeño et al. (2019) developed a corpus, QProp, with two labels (propaganda vs. non-propaganda), and experimented with two corpora: TSHP-17 and QProp. They binarized the labels of TSHP-17 as propaganda vs. the other three categories. They performed extensive experiments, investigated writing style and readability level, and trained models using logistic regression and SVMs. Their findings confirmed that using distant supervision in conjunction with rich representations might encourage the model to predict the source of the article, rather than to discriminate propaganda from non-propaganda. The study by Habernal et al. (2017, 2018) also proposed a corpus with 1.3k arguments annotated with five fallacies, including ad hominem, red herring, and irrelevant authority, which directly relate to propaganda techniques.

A more fine-grained propaganda analysis was done by Da San Martino et al. (2019b). They developed a corpus of news articles annotated with the spans of use of 18 propaganda techniques, drawn from an inventory they put together. They targeted two tasks. The first is binary classification: given a sentence, predict whether any of the techniques was used in it. The second is a multi-label multi-class classification and span detection task: given a raw text, identify both the specific text fragments where a propaganda technique is being used and the type of technique. They further proposed a multi-granular gated deep neural network that captures signals from the sentence-level task to improve the performance of the fragment-level classifier and vice versa. Subsequently, an automatic system, Prta, was developed and made publicly available (Da San Martino et al., 2020c). It performs fine-grained propaganda analysis of text using these 18 propaganda techniques.

Multimodal Content Another line of related research analyzes multimodal content. Examples include predicting misleading information (Volkova et al., 2019), detecting deception (Glenski et al., 2019), emotions and propaganda (Abd Kadir et al., 2016), hateful memes (Kiela et al., 2020), and propaganda in images (Seo, 2014). Volkova et al. (2019) developed a corpus of 500K Twitter posts consisting of images, labeled with six classes: disinformation, propaganda, hoaxes, conspiracies, clickbait, and satire. Glenski et al. (2019) explored multilingual multimodal content for deception detection. Multimodal hateful memes were the target of the Hateful Memes Challenge (Kiela et al., 2020). Participants addressed it by fine-tuning state-of-the-art methods to classify hateful vs. not-hateful memes. These methods include ViLBERT (Lu et al., 2019), Multimodal Bitransformers (Kiela et al., 2019), and VisualBERT (Li et al., 2019).

Related Shared Tasks The present shared task is closely related to SemEval-2020 task 11 on Detection of Persuasion Techniques in News Articles (Da San Martino et al., 2020a). That task focused on news articles and had two parts: (i) detect the spans where propaganda techniques are used, and (ii) predict which propaganda technique (from an inventory of 14 techniques) is used in a given text span. Another closely related shared task is the NLP4IF-2019 task on Fine-Grained Propaganda Detection (Da San Martino et al., 2019a). It asked participants to detect, in news articles, the spans of use of each of 18 propaganda techniques. While these tasks focused on the text of news articles, here we target memes and multimodality, and we further use an extended inventory of 22 propaganda techniques.

Other related shared tasks include the following:
- the FEVER 2018 and 2019 tasks on Fact Extraction and VERification (Thorne et al., 2018);
- the SemEval 2017 and 2019 tasks on predicting the veracity of rumors in Twitter (Derczynski et al., 2017; Gorrell et al., 2019);
- the SemEval-2019 task on Fact-Checking in Community Question Answering Forums (Mihaylova et al., 2019);
- the NLP4IF-2021 shared task on Fighting the COVID-19 Infodemic (Shaar et al., 2021).

We should also mention the CLEF 2018–2021 CheckThat! lab (Nakov et al., 2018; Elsayed et al., 2019a,b; Barrón-Cedeño et al., 2020; Barrón-Cedeño et al., 2020). It featured tasks on the automatic identification (Atanasova et al., 2018, 2019) and verification (Barrón-Cedeño et al., 2018; Hasanain et al., 2019, 2020; Shaar et al., 2020; Nakov et al., 2021) of claims in political debates and social media. These tasks focused on factuality, check-worthiness, and stance detection. Here, we target propaganda instead. Moreover, we focus on memes and multimodality rather than on analyzing the text of tweets, political debates, or community question answering forums.

3 Persuasion Techniques

Scholars have proposed a number of inventories of persuasion techniques of various sizes (Miller, 1939; Torok, 2015; Abd Kadir and Sauffiyan, 2014). Here, we use an inventory of 22 techniques, borrowing from the lists of techniques described in (Da San Martino et al., 2019b), (Shah, 2005), and (Abd Kadir and Sauffiyan, 2014). Among these 22 techniques, the first 20 are applicable to both text and images. The last two, Appeal to (Strong) Emotions and Transfer, are reserved for images.

Below, we provide a definition for each of these 22 techniques. More detailed instructions on the annotation process, along with examples, are provided in Appendix A.

1. Loaded Language: Using specific words and phrases with strong emotional implications (either positive or negative) to influence an audience.

2. Name Calling or Labeling: Labeling the object of the propaganda campaign as something the target audience either fears, hates, or finds undesirable, or loves and praises.

3. Doubt: Questioning the credibility of someone or something.

4. Exaggeration or Minimisation: Either representing something in an excessive manner, e.g., making things larger, better, or worse (“the best of the best”, “quality guaranteed”), or making something seem less important or smaller than it really is, e.g., saying that an insult was just a joke.

5. Appeal to Fear or Prejudices: Seeking to build support for an idea by instilling anxiety and/or panic in the population towards an alternative. In some cases, the support is built based on preconceived judgments.

6. Slogans: A brief and striking phrase that may include labeling and stereotyping. Slogans tend to act as emotional appeals.

7. Whataboutism: A technique that attempts to discredit an opponent’s position by charging them with hypocrisy without directly disproving their argument.

8. Flag-Waving: Playing on strong national feeling (or positive feelings toward any group, e.g., based on race, gender, or political preference) to justify or promote an action or idea.

9. Misrepresentation of Someone’s Position (Straw Man): Substituting an opponent’s proposition with a similar one, which is then refuted in place of the original proposition.

10. Causal Oversimplification: Assuming a single cause or reason when there are actually multiple causes for an issue. It includes transferring blame to one person or group of people without investigating the actual complexities of the issue.

11. Appeal to Authority: Stating that a claim is true because a valid authority or expert on the issue said so, without offering any other supporting evidence. We also include in this technique the special case in which the reference is not an authority or an expert, even though the literature calls that case Testimonial.

12. Thought-Terminating Cliché: Words or phrases that discourage critical thought and meaningful discussion about a given topic. They are typically short, generic sentences that offer seemingly simple answers to complex questions or that distract attention away from other lines of thought.

13. Black-and-White Fallacy or Dictatorship: Presenting two alternative options as the only possibilities, when in fact more possibilities exist. In the extreme case, it tells the audience exactly what actions to take, eliminating any other possible choices (Dictatorship).

14. Reductio ad Hitlerum: Persuading an audience to disapprove of an action or an idea by suggesting that the idea is popular with groups that the target audience hates or holds in contempt. It can refer to any person or concept with a negative connotation.

15. Repetition: Repeating the same message over and over again, so that the audience will eventually accept it.

16. Obfuscation, Intentional Vagueness, Confusion: Using words that are deliberately unclear, so that the audience can have their own interpretations.

17. Presenting Irrelevant Data (Red Herring): Introducing material that is irrelevant to the issue being discussed, so that everyone’s attention is diverted away from the points made.

18. Bandwagon: Attempting to persuade the target audience to join in and take the course of action because “everyone else is taking the same action.”

19. Smears: A smear is an effort to damage or call into question someone’s reputation by propounding negative propaganda. It can be applied to individuals or groups.

20. Glittering Generalities (Virtue): These are words or symbols in the value system of the target audience that produce a positive image when attached to a person or an issue.

21. Appeal to (Strong) Emotions: Using images with strong positive/negative emotional implications to influence an audience.

22. Transfer: Also known as Association, this technique evokes an emotional response by projecting positive or negative qualities (praise or blame) of a person, entity, object, or value onto another one, in order to make the latter more acceptable or to discredit it.

4 Dataset

The annotation process is explained in detail in Appendix A. In this section, we give just a brief summary.

We collected English memes from our personal Facebook accounts over several months in 2020 by following 26 public Facebook groups that focus on politics, vaccines, COVID-19, and gender equality. We considered a meme to be a “photograph style image with a short text on top of it”. We removed examples that did not fit this definition, e.g., cartoon-style memes, memes whose textual content was strongly dominant or non-existent, and memes with a single-color background image. Then, we annotated the memes using our 22 persuasion techniques. For each meme, we first annotated its textual content, and then the entire meme. Each of these two annotations was done in two phases. In the first phase, the annotators independently annotated the memes. Afterwards, all annotators met together with a consolidator to discuss and select the final gold label(s).

The final annotated dataset consists of 950 memes: 687 memes for training, 63 for development, and 200 for testing. The maximum number of sentences in a meme is 13, but the average number of sentences per meme is just 1.68, as most memes contain very little text.

Table 1 shows the number of instances of each technique for each of the subtasks. Note that Transfer and Appeal to (Strong) Emotions are not applicable to text, i.e., to Subtasks 1 and 2. In Subtasks 1 and 3, each technique can be present at most once per example, while in Subtask 2, a technique can appear multiple times in the same example. This explains the sizeable differences in the number of instances of some persuasion techniques between Subtasks 1 and 2. Some techniques are over-used in memes with the aim of making the message more persuasive, and thus they contribute higher counts to Subtask 2.
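The sketch below makes the relation between the label spaces of the three subtasks concrete. It lists the technique inventory of Section 3 and collapses Subtask 2 span annotations into a Subtask 1 label set, where each technique counts at most once per meme. The record layout (dictionaries with `start`, `end`, and `technique` keys) and the helper names are hypothetical illustrations, not the official data format.

```python
from typing import Dict, List, Set

# The 20 techniques applicable to text (Subtasks 1 and 2), in the order of Section 3.
TEXT_TECHNIQUES: List[str] = [
    "Loaded Language",
    "Name Calling or Labeling",
    "Doubt",
    "Exaggeration or Minimisation",
    "Appeal to Fear or Prejudices",
    "Slogans",
    "Whataboutism",
    "Flag-Waving",
    "Misrepresentation of Someone's Position (Straw Man)",
    "Causal Oversimplification",
    "Appeal to Authority",
    "Thought-Terminating Cliché",
    "Black-and-White Fallacy or Dictatorship",
    "Reductio ad Hitlerum",
    "Repetition",
    "Obfuscation, Intentional Vagueness, Confusion",
    "Presenting Irrelevant Data (Red Herring)",
    "Bandwagon",
    "Smears",
    "Glittering Generalities (Virtue)",
]

# The two techniques reserved for images: they only occur in Subtask 3.
IMAGE_ONLY_TECHNIQUES: List[str] = ["Appeal to (Strong) Emotions", "Transfer"]

# The full 22-technique inventory used in Subtask 3.
ALL_TECHNIQUES: List[str] = TEXT_TECHNIQUES + IMAGE_ONLY_TECHNIQUES


def spans_to_label_set(spans: List[Dict]) -> Set[str]:
    """Collapse (hypothetical) Subtask 2 span records into a Subtask 1 label set.

    A technique may be annotated on several spans of one meme in Subtask 2,
    but in Subtask 1 it is counted at most once per meme.
    """
    labels = {span["technique"] for span in spans}
    unknown = labels - set(TEXT_TECHNIQUES)
    if unknown:
        raise ValueError(f"not a text technique: {sorted(unknown)}")
    return labels


def to_multi_hot(labels: Set[str], inventory: List[str]) -> List[int]:
    """Encode a label set as a 0/1 vector over a fixed technique inventory."""
    return [1 if name in labels else 0 for name in inventory]


# Three Subtask 2 spans, two of which use the same technique.
example_spans = [
    {"start": 4, "end": 10, "technique": "Loaded Language"},
    {"start": 15, "end": 20, "technique": "Loaded Language"},
    {"start": 21, "end": 27, "technique": "Smears"},
]
example_labels = spans_to_label_set(example_spans)
print(sorted(example_labels))  # ['Loaded Language', 'Smears']
```

Applying the same collapsing step to every meme explains why Table 1 reports more Subtask 2 instances than Subtask 1 instances for techniques such as Loaded Language and Repetition.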

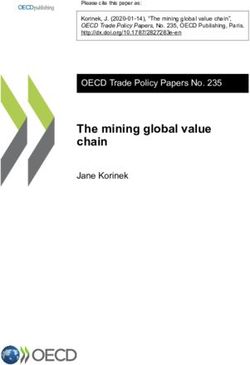

| Persuasion technique | Subtask 1: # | Subtask 2: Len. | Subtask 2: # | Subtask 3: # |
|---|---:|---:|---:|---:|
| Loaded Language | 489 | 2.41 | 761 | 492 |
| Name Calling/Labeling | 300 | 2.62 | 408 | 347 |
| Smears | 263 | 17.11 | 266 | 602 |
| Doubt | 84 | 13.71 | 86 | 111 |
| Exaggeration/Minimisation | 78 | 6.69 | 85 | 100 |
| Slogans | 66 | 4.70 | 72 | 70 |
| Appeal to Fear/Prejudice | 57 | 10.12 | 60 | 91 |
| Whataboutism | 54 | 22.83 | 54 | 67 |
| Glittering Generalities (Virtue) | 44 | 14.07 | 45 | 112 |
| Flag-Waving | 38 | 5.18 | 44 | 55 |
| Repetition | 12 | 1.95 | 42 | 14 |
| Causal Oversimplification | 31 | 14.48 | 33 | 36 |
| Thought-Terminating Cliché | 27 | 4.07 | 28 | 27 |
| Black-and-White Fallacy/Dictatorship | 25 | 11.92 | 25 | 26 |
| Straw Man | 24 | 15.96 | 24 | 40 |
| Appeal to Authority | 22 | 20.05 | 22 | 35 |
| Reductio ad Hitlerum | 13 | 12.69 | 13 | 23 |
| Obfuscation, Intentional Vagueness, Confusion | 5 | 9.8 | 5 | 7 |
| Presenting Irrelevant Data | 5 | 15.4 | 5 | 7 |
| Bandwagon | 5 | 8.4 | 5 | 5 |
| Transfer | n/a | n/a | n/a | 95 |
| Appeal to (Strong) Emotions | n/a | n/a | n/a | 90 |
| Total | 1,642 | | 2,119 | 2,488 |

Table 1: Statistics about the persuasion techniques. For each technique, we show the average length of its spans (in number of words) and the number of its instances as annotated in the text only vs. in the entire meme.

Note that the number of instances for Subtasks 1 and 3 differs, in some cases by quite a bit, e.g., for Smears, Doubt, and Appeal to Fear/Prejudice. This shows that many techniques cannot be found in the text and require the visual content, which motivates the need for multimodal approaches for Subtask 3. Note also that different techniques have different span lengths. For example, Loaded Language and Name Calling spans are about 2–3 words long, e.g., “violence”, “mass shooter”, and “coward”. However, for techniques such as Whataboutism, the average span length is 22 words.

Figure 2 shows statistics about the distribution of the number of persuasion techniques per meme. Note the difference for memes without persuasion techniques between Figures 2a and 2c: the number of memes without any persuasion technique drops drastically for Subtask 3. This is because the visual modality introduces additional context that was not available during the text-only annotation, which further supports the need for multimodal analysis. The visual modality also has an impact on memes that already had persuasion techniques in the text-only phase.

We observe that the number of memes with only one persuasion technique in Subtask 3 is considerably lower compared to Subtask 1. In contrast, the number of memes with three or more persuasion techniques has greatly increased for Subtask 3.

[Figure 2: three bar charts of the number of memes (y-axis). (a) Subtask 1 and (c) Subtask 3: x-axis is the number of distinct persuasion techniques in a meme, from 0 to 8. (b) Subtask 2: x-axis is the number of instances of persuasion techniques in a meme, from 0 to 20.]

Figure 2: Distribution of the number of persuasion techniques per meme. Subfigure (b) reports the number of instances of persuasion techniques for a meme. Note that a meme could have multiple instances of the same technique for this subtask. Subfigures (a) and (c) show the number of distinct persuasion techniques in a meme.
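Figure 2 uses two kinds of counts: distinct techniques per meme for Subtasks 1 and 3, and all instances per meme for Subtask 2. The sketch below shows how both could be computed. It assumes, hypothetically, that each meme is given as a list of technique labels with one entry per annotated span.

```python
from collections import Counter
from typing import Dict, List, Tuple


def per_meme_histograms(memes: List[List[str]]) -> Tuple[Dict[int, int], Dict[int, int]]:
    """Return (distinct_hist, instance_hist), each mapping a count to a number of memes.

    distinct_hist counts each technique once per meme, as in Figures 2a and 2c;
    instance_hist counts every annotated instance, as in Figure 2b.
    """
    distinct_hist = Counter(len(set(labels)) for labels in memes)
    instance_hist = Counter(len(labels) for labels in memes)
    return dict(sorted(distinct_hist.items())), dict(sorted(instance_hist.items()))


memes = [
    [],                                                # no technique
    ["Loaded Language", "Loaded Language", "Smears"],  # 2 distinct, 3 instances
    ["Doubt"],                                         # 1 distinct, 1 instance
]
distinct, instances = per_meme_histograms(memes)
print(distinct)   # {0: 1, 1: 1, 2: 1}
print(instances)  # {0: 1, 1: 1, 3: 1}
```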

5 Evaluation Framework

5.1 Evaluation Measures

Subtasks 1 and 3 For Subtasks 1 and 3, we measure the performance of the systems using Micro and Macro F1, as these are multi-class multi-label tasks with imbalanced labels. The official measure for the task is Micro F1.

Subtask 2 For Subtask 2, the evaluation requires matching the text spans. Hence, we use an evaluation function that gives credit to partial matches between gold and predicted spans.

Let document d be represented as a sequence of characters. The i-th propagandistic text fragment is then represented as a sequence of contiguous characters t ⊆ d. A document includes a set T of (possibly overlapping) fragments. Similarly, a learning algorithm produces a set S of fragments s ⊆ d, predicted on d. A labeling function l(x) ∈ {1, ..., 20} associates each t ∈ T and s ∈ S with one of the techniques. An example of (gold) annotation is shown in Figure 3, where an annotation t1 marks the span “stupid and petty” with the technique Loaded Language.

[Figure 3: the character sequence “how stupid and petty things”, shown three times. Top: gold annotation t1 (loaded language). Below: predicted fragments s1 (loaded language) and s2 (name calling) on one copy, and s3, s4, and s5 (all loaded language) on another.]

Figure 3: Example of gold annotation (top) and the predictions of a supervised model (bottom) in a document represented as a sequence of characters.

We define the following function to handle partial overlaps of fragments with the same labels:

$$C(s, t, h) = \frac{|s \cap t|}{h}\,\delta\big(l(s), l(t)\big), \tag{1}$$

where h is a normalizing factor and δ(a, b) = 1 if a = b, and 0 otherwise. For example, still with reference to Figure 3, C(t1, s1, |t1|) = 6/16 and C(t1, s2, |t1|) = 0.

Given Eq. (1), we now define variants of precision and recall that can account for the imbalance in the corpus:

$$P(S, T) = \frac{1}{|S|} \sum_{s \in S,\; t \in T} C(s, t, |s|), \tag{2}$$

$$R(S, T) = \frac{1}{|T|} \sum_{s \in S,\; t \in T} C(s, t, |t|). \tag{3}$$

We define Eq. (2) to be zero if |S| = 0, and Eq. (3) to be zero if |T| = 0. Following Potthast et al. (2010), in Eqs. (2) and (3) we penalize systems predicting too many or too few instances by dividing by |S| and |T|, respectively. Finally, we combine Eqs. (2) and (3) into an F1-measure, the harmonic mean of precision and recall:

$$F_1(S, T) = \frac{2\,P(S, T)\,R(S, T)}{P(S, T) + R(S, T)}.$$

5.2 Task Organization

We ran the shared task in two phases:

Development Phase In the first phase, only training and development data were made available, and no gold labels were provided for the latter. The participants competed against each other to achieve the best performance on the development set. A live leaderboard was made available to keep track of all submissions.

Test Phase In the second phase, the test set was released and the participants were given just a few days to submit their final predictions.

In the Development Phase, the participants could make an unlimited number of submissions and see the outcome in their private space. The best score for each team, regardless of the submission time, was also shown in a public leaderboard. As a result, the participants could observe the impact of various modifications in their systems, and they could also compare against the results of other participating teams. In the Test Phase, the participants could again submit multiple runs, but they would not get any feedback on their performance. Only the latest submission of each team was considered official and was used for the final team ranking. The final leaderboard on the test set was made public after the end of the shared task.

In the Development Phase, a total of 15, 10, and 13 teams made at least one submission for ST1, ST2, and ST3, respectively. In the Test Phase, the number of teams who made official submissions was 16, 8, and 15 for ST1, ST2, and ST3, respectively. After the competition was over, we left the submission system open for the development set, and we plan to reopen it on the test set as well. The up-to-date leaderboards can be found on the website of the competition.²

² http://propaganda.math.unipd.it/semeval2021task6/
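The partial-matching measure of Eqs. (1) to (3) can be sketched in Python as shown below. The sketch represents each fragment by character offsets (start inclusive, end exclusive) plus a technique label, and it follows the equations literally. The official scorer may differ in details, such as how it treats overlapping gold fragments. The example reuses the string from Figure 3, with t1 covering “stupid and petty” and s1 covering “stupid”. The exact offsets and the span chosen for s2 are our own assumptions.

```python
from typing import List, NamedTuple, Tuple


class Fragment(NamedTuple):
    start: int  # first character offset (inclusive)
    end: int    # last character offset (exclusive)
    label: str  # persuasion technique


def length(f: Fragment) -> int:
    return f.end - f.start


def partial_credit(s: Fragment, t: Fragment, h: int) -> float:
    """C(s, t, h) from Eq. (1): character overlap divided by h if labels match, else 0."""
    if s.label != t.label:
        return 0.0
    overlap = max(0, min(s.end, t.end) - max(s.start, t.start))
    return overlap / h


def span_prf(S: List[Fragment], T: List[Fragment]) -> Tuple[float, float, float]:
    """Precision (Eq. 2), recall (Eq. 3), and their harmonic mean F1."""
    p = sum(partial_credit(s, t, length(s)) for s in S for t in T) / len(S) if S else 0.0
    r = sum(partial_credit(s, t, length(t)) for s in S for t in T) / len(T) if T else 0.0
    f1 = 2 * p * r / (p + r) if p + r > 0 else 0.0
    return p, r, f1


doc = "how stupid and petty things"
t1 = Fragment(4, 20, "Loaded Language")  # gold: "stupid and petty"
s1 = Fragment(4, 10, "Loaded Language")  # prediction: "stupid"
s2 = Fragment(15, 27, "Name Calling")    # hypothetical prediction: "petty things"

print(doc[t1.start:t1.end])                # stupid and petty
print(partial_credit(t1, s1, length(t1)))  # 0.375, i.e., 6/16
print(span_prf([s1, s2], [t1]))            # precision 0.5, recall 0.375, F1 equal to 3/7
```

Because precision divides by |s| and recall by |t|, the short but correct prediction s1 earns full precision credit, yet only 6/16 of the recall credit for t1.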

[Columns: Transformers (BERT, RoBERTa, XLNet, ALBERT, DistilBERT, DeBERTa); Models (LSTM, CNN, CRF, SVM, Naive Bayes, Random Forest); Repres. (Embeddings, Char n-grams, PoS); Misc (Ensemble, Data augmentation, Postprocessing). Per-team marks not recoverable.]

| Rank | Team | System description paper |
|---:|---|---|
| 1 | MinD | Tian et al., 2021 |
| 2 | Alpha | Feng et al., 2021 |
| 3 | Volta | Gupta et al., 2021 |
| 5 | AIMH | Messina et al., 2021 |
| 6 | LeCun | Dia et al., 2021 |
| 7 | WVOQ | Roele, 2021 |
| 9 | NLyticsFKIE | Pritzkau, 2021 |
| 12 | YNU-HPCC | Zhu et al., 2021 |
| 13 | CSECUDSG | Hossain et al., 2021 |
| 15 | NLP-IITR | Gupta and Sharma, 2021 |

Table 2: ST1: Overview of the approaches used by the participating systems. Marks distinguish approaches that were part of the official submission from those considered only in internal experiments. Repres. stands for Representations. References to system description papers are given in the last column.

6 Participants and Results

Below, we give a general description of the systems that participated in the three subtasks and of their results, focusing on those ranked among the top-3. Appendix C gives a description of every system.

6.1 Subtask 1 (Unimodal: Text)

Table 2 gives an overview of the systems that took part in Subtask 1. We can see that transformers were quite popular. Among them, the most commonly used one was RoBERTa, followed by BERT. Some participants used learning models such as LSTM, CNN, and CRF in their final systems, and Naïve Bayes and Random Forest were also tried in internal experiments. In terms of representation, embeddings clearly dominated. Moreover, some systems also used techniques such as ensembles, data augmentation, and post-processing.

The evaluation results are shown in Table 3, which also includes two baselines: (i) random, and (ii) majority class. The latter always predicts Loaded Language, as it is the most frequent technique for Subtask 1 (see Table 1).

| Rank | Team | F1-Micro | F1-Macro |
|---:|---|---:|---:|
| 1 | MinD | .593 | .290 (2) |
| 2 | Alpha | .572 | .262 (5) |
| 3 | Volta | .570 | .266 (3) |
| 4 | mmm | .548 | .303 (1) |
| 5 | AIMH | .539 | .245 (6) |
| 6 | LeCun | .512 | .227 (8) |
| 7 | WVOQ | .511 | .227 (8) |
| 8 | TeamUNCC | .510 | .236 (7) |
| 9 | NLyticsFKIE | .498 | .140 (13) |
| 10 | TeiAS | .497 | .214 (10) |
| 11 | DAJUST | .497 | .187 (11) |
| 12 | YNUHPCC | .493 | .263 (4) |
| 13 | CSECUDSG | .489 | .185 (12) |
| 14 | TeamFPAI | .406 | .115 (15) |
| 15 | NLPIITR | .379 | .126 (14) |
| | Majority baseline | .374 | .033 |
| 16 | TriHeadAttention | .184 | .024 (18) |
| | Random baseline | .064 | .044 |

Table 3: Results for Subtask 1. The systems are ordered by the official score: F1-micro. The number in parentheses after each F1-Macro score is the rank by F1-Macro.
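The gap between the F1-Micro and F1-Macro columns of Table 3 follows from how the two averages treat rare techniques, and it is widest for the majority baseline. The Python sketch below computes both averages for multilabel predictions. It also builds a majority-class baseline that always predicts the most frequent training technique. Two choices here are our own assumptions: a technique that is neither in the gold labels nor predicted scores F1 = 0 in the macro average, and the toy example builds the baseline from the same labels it is scored on. The official scorer may handle the first case differently.

```python
from collections import Counter
from typing import Dict, List, Set


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 = 2TP / (2TP + FP + FN); returns 0.0 when the label never occurs (assumption)."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def multilabel_f1(gold: List[Set[str]], pred: List[Set[str]], labels: List[str]) -> Dict[str, float]:
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        for label in labels:
            if label in g and label in p:
                tp[label] += 1
            elif label in p:
                fp[label] += 1
            elif label in g:
                fn[label] += 1
    # Micro: pool the counts over all labels, so frequent labels dominate.
    micro = f1_from_counts(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: average the per-label F1 scores, so every label weighs the same.
    macro = sum(f1_from_counts(tp[x], fp[x], fn[x]) for x in labels) / len(labels)
    return {"micro": micro, "macro": macro}


def majority_baseline(train_gold: List[Set[str]], n_examples: int) -> List[Set[str]]:
    """Predict the single most frequent training technique for every example."""
    counts = Counter(label for labels in train_gold for label in labels)
    most_common = counts.most_common(1)[0][0]
    return [{most_common} for _ in range(n_examples)]


labels = ["Loaded Language", "Smears", "Doubt"]
gold = [{"Loaded Language"}, {"Loaded Language", "Smears"}, {"Doubt"}]
pred = majority_baseline(gold, len(gold))  # always {"Loaded Language"}
scores = multilabel_f1(gold, pred, labels)
print(scores)  # micro = 4/7, macro = 0.8 / 3
```

Only one of the three techniques ever receives credit, so the macro average falls well below the micro average. The same effect produces the .374 vs. .033 gap for the majority baseline in Table 3.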

The best system, MinD (Tian et al., 2021), used five transformers: BERT, RoBERTa, XLNet, DeBERTa, and ALBERT. It was fine-tuned on the PTC corpus (Da San Martino et al., 2020a) and then on the training data for Subtask 1. The final prediction for MinD averages the probabilities of these models. It further uses post-processing rules, e.g., each bigram appearing more than three times is flagged as Repetition.
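MinD's final step averages the probabilities of several fine-tuned models and then applies rules such as flagging any bigram that occurs more than three times as Repetition. The Python sketch below shows roughly what that could look like. The 0.5 decision threshold, the lowercase whitespace tokenization, and the input format (one dictionary of per-technique probabilities per model) are our own assumptions, not details reported for the system.

```python
from collections import Counter
from typing import Dict, List, Set


def average_probabilities(model_probs: List[Dict[str, float]]) -> Dict[str, float]:
    """Average per-technique probabilities over an ensemble of models."""
    techniques = model_probs[0].keys()
    return {t: sum(p[t] for p in model_probs) / len(model_probs) for t in techniques}


def has_repeated_bigram(text: str, more_than: int = 3) -> bool:
    """True if some word bigram occurs more than `more_than` times."""
    tokens = text.lower().split()
    bigram_counts = Counter(zip(tokens, tokens[1:]))
    return any(count > more_than for count in bigram_counts.values())


def predict(model_probs: List[Dict[str, float]], text: str, threshold: float = 0.5) -> Set[str]:
    averaged = average_probabilities(model_probs)
    labels = {t for t, p in averaged.items() if p >= threshold}
    if has_repeated_bigram(text):  # rule-based post-processing
        labels.add("Repetition")
    return labels


probs = [
    {"Loaded Language": 0.9, "Doubt": 0.2},
    {"Loaded Language": 0.7, "Doubt": 0.4},
]
print(predict(probs, "vote now " * 3))          # {'Loaded Language'}
print(sorted(predict(probs, "vote now " * 4)))  # ['Loaded Language', 'Repetition']
```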

| Rank | Team | F1 | Precision | Recall |
|---:|---|---:|---:|---:|
| 1 | Volta | .482 | .501 (2) | .464 (1) |
| 2 | HOMADOS | .407 | .412 (3) | .403 (2) |
| 3 | TeamFPAI | .397 | .652 (1) | .286 (5) |
| 4 | TeamUNCC | .329 | .285 (4) | .390 (3) |
| 5 | WVOQ | .268 | .243 (5) | .299 (4) |
| 6 | CSECUDSG | .120 | .080 (8) | .243 (6) |
| 7 | YNUHPCC | .091 | .186 (6) | .060 (7) |
| 8 | TriHeadAttention | .080 | .170 (7) | .052 (8) |
| | Random baseline | .010 | .034 | .006 |

Table 5: Results for Subtask 2. The systems are ordered by the official score: F1-micro. The number in parentheses after each precision and recall score is the rank by that measure.

Team Alpha (Feng et al., 2021) was ranked second. However, they used features from images, which was not allowed (images were only allowed for Subtask 3).

Team Volta (Gupta et al., 2021) was third. They used a combination of transformers, feeding the [CLS] token as input to a two-layer feed-forward network. They further used example weighting to address class imbalance.

We should also mention team LeCun, which used additional corpora such as the PTC corpus (Da San Martino et al., 2020a). They also augmented the training data using synonyms, random insertion/deletion, random swapping, and back-translation.
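Two of the augmentation operations mentioned for team LeCun, random swapping and random deletion, are easy to sketch in Python. Synonym replacement and back-translation need external resources, so they are left out. The function names, the deletion probability of 0.1, and the one-swap setting are illustrative assumptions. These operations move or drop words, so they keep meme-level labels (Subtask 1) valid but would invalidate Subtask 2 span offsets.

```python
import random
from typing import List


def random_swap(tokens: List[str], n_swaps: int, rng: random.Random) -> List[str]:
    """Swap the positions of two randomly chosen tokens, n_swaps times."""
    out = list(tokens)
    if len(out) < 2:
        return out
    for _ in range(n_swaps):
        i, j = rng.sample(range(len(out)), 2)
        out[i], out[j] = out[j], out[i]
    return out


def random_deletion(tokens: List[str], p: float, rng: random.Random) -> List[str]:
    """Drop each token with probability p, but never return an empty example."""
    if not tokens:
        return []
    kept = [tok for tok in tokens if rng.random() >= p]
    return kept if kept else [rng.choice(tokens)]


def augment(text: str, n_copies: int = 2, seed: int = 0) -> List[str]:
    """Create n_copies noisy variants of text; the meme's label set is reused unchanged."""
    rng = random.Random(seed)
    tokens = text.split()
    variants = []
    for k in range(n_copies):
        if k % 2 == 0:
            new_tokens = random_swap(tokens, n_swaps=1, rng=rng)
        else:
            new_tokens = random_deletion(tokens, p=0.1, rng=rng)
        variants.append(" ".join(new_tokens))
    return variants


print(augment("they want to take away your freedom", n_copies=2))
```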

6.2 Subtask 2 (Unimodal: Text)

The approaches for this task ranged from modeling it as a question answering (QA) task to performing multi-task learning. Table 4 presents a high-level summary. We can see that BERT dominated, while RoBERTa was much less popular. We further see a couple of systems using data augmentation. Unfortunately, too few teams submitted system description papers for this subtask, so it is hard to do a very deep analysis.

[Columns: Trans., Models, Repres., and Misc, covering BERT, RoBERTa, ELMo, LSTM, CNN, SVM, Sentiment, Rhetorics, PoS, Ensemble, and Data augmentation. Per-team marks not recoverable.]

| Rank | Team | System description paper |
|---:|---|---|
| 1 | Volta | Gupta et al., 2021 |
| 2 | HOMADOS | Kaczyński and Przybyła, 2021 |
| 3 | TeamFPAI | Xiaolong et al., 2021 |
| 5 | WVOQ | Roele, 2021 |
| 6 | CSECUDSG | Hossain et al., 2021 |
| 7 | YNU-HPCC | Zhu et al., 2021 |

Table 4: ST2: Overview of the approaches used by the participating systems. Marks distinguish approaches that were part of the official submission from those considered only in internal experiments. Trans. is for Transformers; Repres. is for Representations. References to system description papers are given in the last column.

Table 5 shows the evaluation results. We report our random baseline, which selects spans of random length at random positions and assigns them random labels.

The best model, by team Volta (Gupta et al., 2021), used various transformer models, such as BERT and RoBERTa, to predict token classes from the output of each token embedding. Then, they assigned classes to a given word as the union of the classes predicted for the subwords that make up that word (to account for BPEs).

Team HOMADOS (Kaczyński and Przybyła, 2021) was second. They used multi-task learning (MTL) and additional datasets, such as the PTC corpus from SemEval-2020 task 11 (Da San Martino et al., 2020a) and a fake news corpus (Przybyla, 2020). They used BERT, followed by several output layers that perform the auxiliary tasks of propaganda detection and credibility assessment. They tried two distinct scenarios, sequential and parallel MTL, and their final submission used the latter.

Team TeamFPAI (Xiaolong et al., 2021) formulated the task as a question answering problem using machine reading comprehension, thus improving over the ensemble-based approach of Liu et al. (2018). They further explored data augmentation and loss design techniques in order to alleviate the problems of data sparseness and data imbalance.

6.3 Subtask 3 (Multimodal: Memes)

Table 6 presents an overview of the approaches used by the systems that participated in Subtask 3. This is a very rich and very interesting table. We can see that transformers were quite popular for text representation. BERT dominated, but RoBERTa was quite popular as well. For the visual modality, the most common representations were variants of ResNet, but VGG16 and CNNs were also used. We further see a variety of representations and fusion methods, which is to be expected given the multimodal nature of this subtask.
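The fusion step that combines text and image representations can take several forms. Table 6 lists, among others, concatenation and averaging. The toy Python sketch below contrasts two of them. Early fusion concatenates the two feature vectors before a shared multilabel classifier. Late fusion averages per-technique probabilities from two separate unimodal classifiers. The feature vectors and weights are made-up numbers, and the sketch does not reproduce any participant's system.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def early_fusion(text_feat: Sequence[float], image_feat: Sequence[float],
                 weights: Sequence[Sequence[float]], bias: Sequence[float]) -> List[float]:
    """Concatenate both modalities, then apply one linear layer with a sigmoid per technique."""
    fused = list(text_feat) + list(image_feat)
    if any(len(row) != len(fused) for row in weights):
        raise ValueError("each weight row must match the fused feature size")
    return [sigmoid(sum(w * x for w, x in zip(row, fused)) + b)
            for row, b in zip(weights, bias)]


def late_fusion(text_probs: Sequence[float], image_probs: Sequence[float]) -> List[float]:
    """Average the per-technique probabilities produced by two unimodal classifiers."""
    return [(p + q) / 2 for p, q in zip(text_probs, image_probs)]


# Two techniques, 2-d text features, 2-d image features (all values made up).
W = [[0.5, 0.0, 0.0, 0.0],
     [0.0, 0.0, 0.0, 1.0]]
b = [0.0, -1.0]
print(early_fusion([1.0, 0.0], [0.0, 2.0], W, b))  # about [0.62, 0.73]
print(late_fusion([0.2, 0.8], [0.6, 0.4]))          # about [0.4, 0.6]
```

Early fusion lets a single classifier learn cross-modal weights, while late fusion keeps the modalities separate until the end. Neither models the finer-grained interaction between modalities that, according to the abstract, some teams found beneficial.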

[Columns, grouped under Transformers, Models, Representations, Fusion, and Misc: BERT, RoBERTa, ALBERT, XLNet, DeBERTa, FastBERT, GPT-2, ELMo, ERNIE-VIL, SemVLP, LSTM, CNN, CRF, SVM, MLP, Words/Word n-grams, Char n-grams, Embeddings, PoS, Sentiment, Rhetorics, ResNet18, ResNet50, ResNet51, FR (ResNet34), VGG16, BUTD, CLIP, YouTube-8M, MS OCR, Concat, Average, Attention, Ensemble, Chained classifier, Data augmentation, Postprocessing. Per-team marks not recoverable.]

| Rank | Team | System description paper |
|---:|---|---|
| 1 | Alpha | Feng et al., 2021 |
| 2 | MinD | Tian et al., 2021 |
| 3 | 1213Li | Peiguang et al., 2021 |
| 4 | AIMH | Messina et al., 2021 |
| 5 | Volta | Gupta et al., 2021 |
| 6 | CSECUDSG | Hossain et al., 2021 |
| 8 | LIIR | Ghadery et al., 2021 |
| 10 | WVOQ | Roele, 2021 |
| 11 | YNU-HPCC | Zhu et al., 2021 |
| 13 | NLyticsFKIE | Pritzkau, 2021 |
| 15 | LT3-UGent | Singh and Lefever, 2021 |

Table 6: ST3: Overview of the approaches used by the participating systems. Marks distinguish approaches that were part of the official submission from those considered only in internal experiments. References to system description papers are given in the last column.

Table 7 shows the test-set performance of the systems that participated in Subtask 3. The two baselines in the table are analogous to those for Subtask 1: a random baseline and a majority class baseline. However, this time the majority class baseline always predicts Smears (for Subtask 1, it was Loaded Language), as this is the most frequent technique for Subtask 3 (see Table 1).

Team Alpha (Feng et al., 2021) pre-trained a transformer using text with visual features. They extracted grid features using ResNet50 and salient region features using BUTD. They used the grid features to capture the high-level semantic information in the images, and the salient region features to describe objects and to caption the event depicted in the memes. Finally, they built an ensemble of fine-tuned DeBERTa+ResNet, DeBERTa+BUTD, and ERNIE-VIL systems.

Team MinD (Tian et al., 2021) combined a system for Subtask 1 with (i) ResNet-34 for face recognition, (ii) OCR-based positional embeddings for text boxes, and (iii) Faster R-CNN to extract region-based image features. They used late fusion to combine the textual and the visual representations. Other multimodal fusion strategies they tried were concatenation of the representations and mapping using a multi-layer perceptron.

Team 1213Li (Peiguang et al., 2021) used RoBERTa and ResNet-50 as feature extractors for texts and images, respectively. They adopted a label embedding layer with a multi-modal attention mechanism to measure the similarity between the labels and the multi-modal information, and then fused the resulting features for label prediction.

Rank  Team               F1-Micro  F1-Macro
1     Alpha              .581      .273 (1)
2     MinD               .566      .244 (3)
3     1213Li             .549      .228 (5)
4     AIMH               .540      .207 (6)
5     Volta              .521      .189 (8)
6     CSECUDSG           .513      .121 (11)
7     aircasMM           .511      .200 (7)
8     LIIR               .498      .188 (9)
9     CAU731NLP          .481      .084 (14)
10    WVOQ               .478      .240 (4)
11    YNU-HPCC           .446      .096 (13)
12    TriHeadAttention   .442      .062 (15)
13    NLyticsFKIE        .423      .118 (12)
      Majority baseline  .354      .036
14    LT3-UGent          .332      .264 (2)
15    TeamUNCC           .224      .124 (10)
      Random baseline    .071      .052

Table 7: Results for Subtask 3. The systems are ordered by the official score: F1-micro. The number in parentheses after each F1-macro score is the system's rank by F1-macro.
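Table 7 ranks systems by micro-F1 but also reports macro-F1, and the gap is most visible for the majority class baseline (.354 vs .036). The following pure-Python sketch computes both averages for multi-label predictions and implements a majority class baseline that assigns the single most frequent training label to every meme. The toy data and the convention of scoring a label with no gold and no predicted instances as 0 are assumptions; the official task scorer may handle such edge cases differently.

```python
from collections import Counter
from typing import Dict, Iterable, List, Set


def f1(tp: int, fp: int, fn: int) -> float:
    """F1 = 2TP / (2TP + FP + FN); defined here as 0.0 when the denominator is 0."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def micro_macro_f1(gold: List[Set[str]], pred: List[Set[str]],
                   labels: Iterable[str]) -> Dict[str, float]:
    labels = list(labels)
    tp, fp, fn = Counter(), Counter(), Counter()
    for g, p in zip(gold, pred):
        for label in labels:
            if label in p and label in g:
                tp[label] += 1
            elif label in p:
                fp[label] += 1
            elif label in g:
                fn[label] += 1
    # Micro: pool the counts over all labels, so frequent labels dominate.
    micro = f1(sum(tp.values()), sum(fp.values()), sum(fn.values()))
    # Macro: average the per-label F1 scores, so every label weighs the same.
    macro = sum(f1(tp[l], fp[l], fn[l]) for l in labels) / len(labels)
    return {"micro": micro, "macro": macro}


def majority_baseline(train_gold: List[Set[str]], n_test: int) -> List[Set[str]]:
    """Predict the single most frequent training label for every test example."""
    counts = Counter(label for example in train_gold for label in example)
    top_label, _ = counts.most_common(1)[0]
    return [{top_label} for _ in range(n_test)]


# Toy usage with hypothetical data: "Smears" is the most frequent training label.
LABELS = ["Smears", "Loaded Language", "Appeal to Fear"]
train = [{"Smears"}, {"Smears", "Loaded Language"}, {"Appeal to Fear"}]
test_gold = [{"Smears"}, {"Smears", "Loaded Language"}, {"Appeal to Fear"}]
scores = micro_macro_f1(test_gold, majority_baseline(train, len(test_gold)), LABELS)
print(scores)  # micro = 4/7 ≈ 0.571, macro = 0.8/3 ≈ 0.267
```

Because a majority class baseline only ever predicts one technique, at most one label can receive a non-zero F1. Assuming macro-F1 averages over all 22 techniques, its macro-F1 is therefore bounded by 1/22 ≈ .045, which is consistent with the .036 in Table 7.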

7 Conclusion and Future Work

We presented SemEval-2021 Task 6 on Detection of Persuasion Techniques in Texts and Images. It was a successful task: a total of 71 teams registered to participate, 22 teams eventually made an official submission on the test set, and 15 teams also submitted a system description paper.

In future work, we plan to increase the data size and to add more propaganda techniques. We further plan to cover several different languages.

Acknowledgments

This research is part of the Tanbih mega-project,3 which is developed at the Qatar Computing Research Institute, HBKU, and aims to limit the impact of “fake news,” propaganda, and media bias by making users aware of what they are reading.

Ethics and Broader Impact

User Privacy
Our dataset only includes memes, and it contains no user information.

Biases
Any biases in the dataset are unintentional, and we do not intend to do harm to any group or individual. Note that annotating propaganda techniques can be subjective, and thus it is inevitable that there would be biases in our gold-labeled data or in the label distribution. We address these concerns by collecting examples from a variety of users and groups, and also by following a well-defined schema, which has clear definitions and on which we achieved high inter-annotator agreement.

Moreover, we had a diverse annotation team, which included six members, both female and male, all fluent in English, with qualifications ranging from undergrad to MSc and PhD degrees, including experienced NLP researchers, and covering multiple nationalities. This helped to ensure the quality. No incentives were provided to the annotators.

Misuse Potential
We ask researchers to be aware that our dataset can be maliciously used to unfairly moderate memes based on biases that may or may not be related to demographics and other information within the text. Intervention with human moderation would be required in order to ensure this does not occur.

3 http://tanbih.qcri.org/

References

Shamsiah Abd Kadir, Anitawati Lokman, and T. Tsuchiya. 2016. Emotion and techniques of propaganda in YouTube videos. Indian Journal of Science and Technology, Vol (9):1–8.

Shamsiah Abd Kadir and Ahmad Sauffiyan. 2014. A content analysis of propaganda in Harakah newspaper. Journal of Media and Information Warfare, 5:73–116.

Sami Abu-El-Haija, Nisarg Kothari, Joonseok Lee, Paul Natsev, George Toderici, Balakrishnan Varadarajan, and Sudheendra Vijayanarasimhan. 2016. YouTube-8M: A large-scale video classification benchmark. arXiv preprint arXiv:1609.08675.

Ivan Habernal et al. 2018. Before name-calling: Dynamics and triggers of ad hominem fallacies in web argumentation. In Proceedings of the 16th Annual Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT ’18, pages 386–396, New Orleans, LA, USA.

Ron Artstein and Massimo Poesio. 2008. Survey article: Inter-coder agreement for computational linguistics. Computational Linguistics, 34(4):555–596.

Pepa Atanasova, Lluís Màrquez, Alberto Barrón-Cedeño, Tamer Elsayed, Reem Suwaileh, Wajdi Zaghouani, Spas Kyuchukov, Giovanni Da San Martino, and Preslav Nakov. 2018. Overview of the CLEF-2018 CheckThat! lab on automatic identification and verification of political claims, task 1: Check-worthiness. In CLEF 2018 Working Notes, Avignon, France.

Pepa Atanasova, Preslav Nakov, Georgi Karadzhov, Mitra Mohtarami, and Giovanni Da San Martino. 2019. Overview of the CLEF-2019 CheckThat! lab on automatic identification and verification of claims. Task 1: Check-worthiness. In Working Notes of CLEF 2019, Lugano, Switzerland.

Alberto Barrón-Cedeño, Tamer Elsayed, Reem Suwaileh, Lluís Màrquez, Pepa Atanasova, Wajdi Zaghouani, Spas Kyuchukov, Giovanni Da San Martino, and Preslav Nakov. 2018. Overview of the CLEF-2018 CheckThat! lab on automatic identification and verification of political claims, task 2: Factuality. In CLEF 2018 Working Notes, Avignon, France.

Alberto Barrón-Cedeño, Tamer Elsayed, Preslav Nakov, Giovanni Da San Martino, Maram Hasanain, Reem Suwaileh, Fatima Haouari, Nikolay Babulkov, Bayan Hamdan, Alex Nikolov, Shaden Shaar, and Zien Sheikh Ali. 2020. Overview of CheckThat! 2020: Automatic identification and verification of claims in social media. In Proceedings of the 11th International Conference of the CLEF Association: Experimental IR Meets Multilinguality, Multimodality, and Interaction, CLEF ’2020.

Alberto Barrón-Cedeño, Tamer Elsayed, Preslav Nakov, Giovanni Da San Martino, Maram Hasanain, Reem Suwaileh, and Fatima Haouari. 2020. CheckThat! at CLEF 2020: Enabling the automatic identification and verification of claims in social media. In Proceedings of the European Conference on Information Retrieval, ECIR ’19, pages 499–507, Lisbon, Portugal.

Alberto Barrón-Cedeño, Israa Jaradat, Giovanni Da San Martino, and Preslav Nakov. 2019. Proppy: Organizing the news based on their propagandistic content. Information Processing & Management, 56(5):1849–1864.

Giovanni Da San Martino, Alberto Barrón-Cedeño, and Preslav Nakov. 2019a. Findings of the NLP4IF-2019 shared task on fine-grained propaganda detection. In Proceedings of the Second Workshop on Natural Language Processing for Internet Freedom: Censorship, Disinformation, and Propaganda, NLP4IF ’19, pages 162–170, Hong Kong, China.

Giovanni Da San Martino, Alberto Barrón-Cedeño, Henning Wachsmuth, Rostislav Petrov, and Preslav Nakov. 2020a. SemEval-2020 task 11: Detection of propaganda techniques in news articles. In Proceedings of the Fourteenth Workshop on Semantic Evaluation, SemEval ’20, pages 1377–1414, Barcelona, Spain.

Giovanni Da San Martino, Stefano Cresci, Alberto Barrón-Cedeño, Seunghak Yu, Roberto Di Pietro, and Preslav Nakov. 2020b. A survey on computational propaganda detection. In Proceedings of the International Joint Conference on Artificial Intelligence, IJCAI-PRICAI ’20, pages 4826–4832.

Giovanni Da San Martino, Shaden Shaar, Yifan Zhang, Seunghak Yu, Alberto Barrón-Cedeño, and Preslav Nakov. 2020c. Prta: A system to support the analysis of propaganda techniques in the news. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL ’20, pages 287–293.

Giovanni Da San Martino, Seunghak Yu, Alberto Barrón-Cedeño, Rostislav Petrov, and Preslav Nakov. 2019b. Fine-grained analysis of propaganda in news articles. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and 9th International Joint Conference on Natural Language Processing, EMNLP-IJCNLP ’19, pages 5636–5646, Hong Kong, China.

Leon Derczynski, Kalina Bontcheva, Maria Liakata, Rob Procter, Geraldine Wong Sak Hoi, and Arkaitz Zubiaga. 2017. SemEval-2017 Task 8: RumourEval: Determining rumour veracity and support for rumours. In Proceedings of the 11th International Workshop on Semantic Evaluation, SemEval ’17, pages 60–67, Vancouver, Canada.

Abujaber Dia, Qarqaz Ahmed, and Abdullah Malak A. 2021. LeCun at SemEval-2021 Task 6: Detecting persuasion techniques in text using ensembled pretrained transformers and data augmentation. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Tamer Elsayed, Preslav Nakov, Alberto Barrón-Cedeño, Maram Hasanain, Reem Suwaileh, Giovanni Da San Martino, and Pepa Atanasova. 2019a. CheckThat! at CLEF 2019: Automatic identification and verification of claims. In Advances in Information Retrieval, ECIR ’19, pages 309–315, Lugano, Switzerland.

Tamer Elsayed, Preslav Nakov, Alberto Barrón-Cedeño, Maram Hasanain, Reem Suwaileh, Giovanni Da San Martino, and Pepa Atanasova. 2019b. Overview of the CLEF-2019 CheckThat!: Automatic identification and verification of claims. In Experimental IR Meets Multilinguality, Multimodality, and Interaction, LNCS, pages 301–321.

Zhida Feng, Jiji Tang, Jiaxiang Liu, Weichong Yin, Shikun Feng, Yu Sun, and Li Chen. 2021. Alpha at SemEval-2021 Tasks 6: Transformer based propaganda classification. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Erfan Ghadery, Damien Sileo, and Marie-Francine Moens. 2021. LIIR at SemEval 2021 Task 6: Detection of persuasion techniques in texts and images using CLIP features. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Maria Glenski, E. Ayton, J. Mendoza, and Svitlana Volkova. 2019. Multilingual multimodal digital deception detection and disinformation spread across social platforms. ArXiv, abs/1909.05838.

Genevieve Gorrell, Elena Kochkina, Maria Liakata, Ahmet Aker, Arkaitz Zubiaga, Kalina Bontcheva, and Leon Derczynski. 2019. SemEval-2019 task 7: RumourEval, determining rumour veracity and support for rumours. In Proceedings of the 13th International Workshop on Semantic Evaluation, SemEval ’19, pages 845–854, Minneapolis, Minnesota, USA.

Bin Guo, Yasan Ding, Lina Yao, Yunji Liang, and Zhiwen Yu. 2020. The future of false information detection on social media: New perspectives and trends. ACM Comput. Surv., 53(4).

Kshitij Gupta, Devansh Gautam, and Radhika Mamidi. 2021. Volta at SemEval-2021 Task 6: Towards detecting persuasive texts and images using textual and multimodal ensemble. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Vansh Gupta and Raksha Sharma. 2021. NLPIITR at SemEval-2021 Task 6: detection of persuasion techniques in texts and images. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Ivan Habernal, Raffael Hannemann, Christian Pollak, Christopher Klamm, Patrick Pauli, and Iryna Gurevych. 2017. Argotario: Computational argumentation meets serious games. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, EMNLP ’17, pages 7–12, Copenhagen, Denmark.

Ivan Habernal, Patrick Pauli, and Iryna Gurevych. 2018. Adapting serious game for fallacious argumentation to German: Pitfalls, insights, and best practices. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation, LREC ’18, Miyazaki, Japan.

Maram Hasanain, Fatima Haouari, Reem Suwaileh, Zien Sheikh Ali, Bayan Hamdan, Tamer Elsayed, Alberto Barrón-Cedeño, Giovanni Da San Martino, and Preslav Nakov. 2020. Overview of CheckThat! 2020 Arabic: Automatic identification and verification of claims in social media. In Working Notes of CLEF 2020 - Conference and Labs of the Evaluation Forum, CLEF ’2020.

Maram Hasanain, Reem Suwaileh, Tamer Elsayed, Alberto Barrón-Cedeño, and Preslav Nakov. 2019. Overview of the CLEF-2019 CheckThat! Lab on Automatic Identification and Verification of Claims. Task 2: Evidence and Factuality. In CLEF 2019 Working Notes. Working Notes of CLEF 2019 - Conference and Labs of the Evaluation Forum, Lugano, Switzerland. CEUR-WS.org.

Tashin Hossain, Jannatun Naim, Fareen Tasneem, Radiathun Tasnia, and Abu Nowshed Chy. 2021. CSECUDSG at SemEval-2021 Task 6: Orchestrating multimodal neural architectures for identifying persuasion techniques in texts and images. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Konrad Kaczyński and Piotr Przybyła. 2021. HOMADOS at SemEval-2021 Task 6: Multi-task learning for propaganda detection. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Douwe Kiela, Suvrat Bhooshan, Hamed Firooz, and Davide Testuggine. 2019. Supervised multimodal bitransformers for classifying images and text. In Proceedings of the NeurIPS 2019 Workshop on Visually Grounded Interaction and Language, ViGIL@NeurIPS ’19.

Douwe Kiela, Hamed Firooz, Aravind Mohan, Vedanuj Goswami, Amanpreet Singh, Pratik Ringshia, and Davide Testuggine. 2020. The hateful memes challenge: Detecting hate speech in multimodal memes. In Proceedings of the Annual Conference on Neural Information Processing Systems, NeurIPS ’20.

J Richard Landis and Gary G Koch. 1977. The measurement of observer agreement for categorical data. Biometrics, pages 159–174.

Liunian Harold Li, Mark Yatskar, Da Yin, Cho-Jui Hsieh, and Kai-Wei Chang. 2019. VisualBERT: A simple and performant baseline for vision and language. arXiv preprint arXiv:1908.03557.

Jiahua Liu, Wan Wei, Maosong Sun, Hao Chen, Yantao Du, and Dekang Lin. 2018. A multi-answer multi-task framework for real-world machine reading comprehension. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, EMNLP ’18, pages 2109–2118, Brussels, Belgium.

Weijie Liu, Peng Zhou, Zhiruo Wang, Zhe Zhao, Haotang Deng, and Qi Ju. 2020. FastBERT: a self-distilling BERT with adaptive inference time. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, ACL ’20, pages 6035–6044, Online.

Jiasen Lu, Dhruv Batra, Devi Parikh, and Stefan Lee. 2019. ViLBERT: Pretraining task-agnostic visiolinguistic representations for vision-and-language tasks. In Proceedings of the Conference on Neural Information Processing Systems, NeurIPS ’19, pages 13–23, Vancouver, Canada.

Giovanni Da San Martino, Stefano Cresci, Alberto Barrón-Cedeño, Seunghak Yu, Roberto Di Pietro, and Preslav Nakov. 2020. A survey on computational propaganda detection. In Proceedings of the International Joint Conference on Artificial Intelligence, IJCAI-PRICAI ’20, pages 4826–4832.

Nicola Messina, Fabrizio Falchi, Claudio Gennaro, and Giuseppe Amato. 2021. AIMH at SemEval-2021 Task 6: multimodal classification using an ensemble of transformer models. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Tsvetomila Mihaylova, Georgi Karadzhov, Pepa Atanasova, Ramy Baly, Mitra Mohtarami, and Preslav Nakov. 2019. SemEval-2019 task 8: Fact checking in community question answering forums. In Proceedings of the 13th International Workshop on Semantic Evaluation, SemEval ’19, pages 860–869, Minneapolis, Minnesota, USA.

Tomás Mikolov, Kai Chen, Greg Corrado, and Jeffrey Dean. 2013. Efficient estimation of word representations in vector space. In Proceedings of the 1st International Conference on Learning Representations, Workshop Track, ICLR ’13, Scottsdale, Arizona, USA.

Clyde R. Miller. 1939. The Techniques of Propaganda. From “How to Detect and Analyze Propaganda,” an address given at Town Hall. The Center for learning.

Preslav Nakov, Alberto Barrón-Cedeño, Tamer Elsayed, Reem Suwaileh, Lluís Màrquez, Wajdi Zaghouani, Pepa Atanasova, Spas Kyuchukov, and Giovanni Da San Martino. 2018. Overview of the CLEF-2018 CheckThat! lab on automatic identification and verification of political claims. In Proceedings of the International Conference of CLEF, CLEF ’18, pages 372–387, Avignon, France.

Preslav Nakov, Giovanni Da San Martino, Tamer Elsayed, Alberto Barrón-Cedeño, Rubén Míguez, Shaden Shaar, Firoj Alam, Fatima Haouari, Maram Hasanain, Nikolay Babulkov, Alex Nikolov, Gautam Kishore Shahi, Julia Maria Struß, and Thomas Mandl. 2021. The CLEF-2021 CheckThat! lab on detecting check-worthy claims, previously fact-checked claims, and fake news. In Advances in Information Retrieval, ECIR ’21, pages 639–649.

Li Peiguang, Li Xuan, and Sun Xian. 2021. 1213Li at SemEval-2021 Task 6: detection of propaganda with multi-modal attention and pre-trained models. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Martin Potthast, Benno Stein, Alberto Barrón-Cedeño, and Paolo Rosso. 2010. An Evaluation Framework for Plagiarism Detection. In Proceedings of the 23rd International Conference on Computational Linguistics, COLING ’10, pages 997–1005, Beijing, China.

Albert Pritzkau. 2021. NLyticsFKIE at SemEval-2021 Task 6: Detection of persuasion techniques in texts and images. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Piotr Przybyla. 2020. Capturing the style of fake news. Proceedings of the AAAI Conference on Artificial Intelligence, 34(01):490–497.

Hannah Rashkin, Eunsol Choi, Jin Yea Jang, Svitlana Volkova, and Yejin Choi. 2017. Truth of varying shades: Analyzing language in fake news and political fact-checking. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, EMNLP ’17, pages 2931–2937, Copenhagen, Denmark.

Cees Roele. 2021. WVOQ at SemEval-2021 Task 6: BART for span detection and classification. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Hyunjin Seo. 2014. Visual propaganda in the age of social media: An empirical analysis of Twitter images during the 2012 Israeli–Hamas conflict. Visual Communication Quarterly, 21(3):150–161.

Shaden Shaar, Firoj Alam, Giovanni Da San Martino, Alex Nikolov, Wajdi Zaghouani, and Preslav Nakov. 2021. Findings of the NLP4IF-2021 shared task on fighting the COVID-19 infodemic. In Proceedings of the Fourth Workshop on Natural Language Processing for Internet Freedom: Censorship, Disinformation, and Propaganda, NLP4IF@NAACL ’21.

Shaden Shaar, Alex Nikolov, Nikolay Babulkov, Firoj Alam, Alberto Barrón-Cedeño, Tamer Elsayed, Maram Hasanain, Reem Suwaileh, Fatima Haouari, Giovanni Da San Martino, and Preslav Nakov. 2020. Overview of CheckThat! 2020 English: Automatic identification and verification of claims in social media. In Working Notes of CLEF 2020 - Conference and Labs of the Evaluation Forum, CLEF ’2020.

Anup Shah. 2005. War, propaganda and the media.

Kai Shu, Amy Sliva, Suhang Wang, Jiliang Tang, and Huan Liu. 2017. Fake news detection on social media: A data mining perspective. SIGKDD Explor. Newsl., 19(1):22–36.

Pranaydeep Singh and Els Lefever. 2021. LT3 at SemEval-2021 Task 6: Using multi-modal compact bilinear pooling to combine visual and textual understanding in memes. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

James Thorne, Andreas Vlachos, Christos Christodoulopoulos, and Arpit Mittal. 2018. FEVER: a large-scale dataset for fact extraction and VERification. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, NAACL-HLT ’18, pages 809–819, New Orleans, Louisiana.

Junfeng Tian, Min Gui, Chenliang Li, Ming Yan, and Wenming Xiao. 2021. MinD at SemEval-2021 Task 6: Propaganda detection using transfer learning and multimodal fusion. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Robyn Torok. 2015. Symbiotic radicalisation strategies: Propaganda tools and neuro linguistic programming. In Proceedings of the Australian Security and Intelligence Conference, ASIC ’15, pages 58–65, Perth, Australia.

Svitlana Volkova, Ellyn Ayton, Dustin L. Arendt, Zhuanyi Huang, and Brian Hutchinson. 2019. Explaining multimodal deceptive news prediction models. In Proceedings of the International Conference on Web and Social Media, ICWSM ’19, pages 659–662, Munich, Germany.

Anthony Weston. 2008. A rulebook for arguments. Hackett Publishing.

Hou Xiaolong, Ren Junsong, Rao Gang, Jiang Lianxin, Ruan Zhihao, Yang Mo, and Shen Jianping. 2021. TeamFPAI at SemEval-2021 Task 6: BERT-MRC for propaganda techniques detection. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Xingyu Zhu, Jin Wang, and Xuejie Zhang. 2021. YNU-HPCC at SemEval-2021 Task 6: Combining ALBERT and Text-CNN for persuasion detection in texts and images. In Proceedings of the International Workshop on Semantic Evaluation, SemEval ’21, Bangkok, Thailand.

Appendix

A Data Collection and Annotation

A.1 Data Collection

To collect the data for the dataset, we used Facebook, as it has many public groups with a large number of users, who intentionally or unintentionally share a large number of memes. We used our own private Facebook accounts to crawl the public posts from users and groups. To make sure the resulting feed had a sufficient number of memes, we initially selected some public groups focusing on topics such as politics, vaccines, COVID-19, and gender equality. Then, using the links between groups, we expanded our initial group pool to a total of 26 public groups. We went through each group and collected memes from old posts, dating up to three months before the newest post in the group. Out of the 26 groups, 23 were about US and Canadian politics: left, right, centrist, anti-government, and gun control. The other 3 groups were on general topics such as health, COVID-19, pro-vaccines, anti-vaccines, and gender equality. Even though the number of political groups was larger (i.e., 23), the other 3 general groups had a higher number of users and a substantial number of memes.

A.2 Annotation Process

We annotated the memes using the 22 persuasion techniques from Section 3 in a multi-label setup. Our annotation focused (i) on the text only, using 20 techniques, and (ii) on the entire meme (text + image), using all 22 techniques.

We could not annotate the visual modality as an independent task because memes have the text as part of the image. Moreover, in many cases, the message in the meme requires both modalities. For example, in Figure 28, the image by itself does not contain any persuasion technique, but together with the text, we can see Smears and Reductio at Hitlerum.

The annotation team included six members, both female and male, all fluent in English, with qualifications ranging from undergrad to MSc and PhD degrees, including experienced NLP researchers, and covering multiple nationalities. This helped to ensure the quality of the annotation, and high-quality annotation was our main focus. No incentives were given to the annotators.

We used PyBossa4 as an annotation platform, as it provides the functionality to create a custom annotation interface that we found to be a good fit for our needs in each phase of the annotation process. Figure 4 shows examples of the annotation interface for the five different phases of annotation, which we describe in detail below.

Phase 1: Filtering and Text Editing
The first phase of the annotation process is about selecting the memes for our task, followed by extracting and editing the textual contents of each meme. After we collected the memes, we observed that we needed to remove some of them as they did not fit our definition: “photograph style image with a short text on top of it.” Thus, we asked the annotators to exclude images with the characteristics listed below. During this phase, we filtered out a total of 111 memes.

• Images with diagrams/graphs/tables (see Figure 5a).
• Cartoons (see Figure 5b).
• Memes for which no multi-modal analysis is possible: e.g., only text, only image, etc. (see Figure 5c).

Next, we used the Google Vision API5 to extract the text from the memes. As the resulting text sometimes contains errors, manual checking was needed to correct it. Thus, we defined several text editing rules, and we asked the annotators to apply them to the memes that passed the filtering rules above.

1. When the meme is a screenshot of a social network account, e.g., WhatsApp, the user name and login can be removed, as well as all “Like”, “Comment”, and “Share” elements.
2. Remove the text related to logos that are not part of the main text.
3. Remove all text related to figures and tables.
4. Remove all text that is partially hidden by an image, so that the sentence is almost impossible to read.
5. Remove all text that is not from the meme, but on banners carried by demonstrators, street advertisements, etc.

4 https://pybossa.com
5 http://cloud.google.com/vision
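Rule 1 of the Phase 1 text editing rules is the most mechanical one. As a rough illustration only (the annotators applied all of the rules manually), the following Python sketch approximates its “Like”/“Comment”/“Share” part as a post-processing step over the OCR output. The `UI_WORDS` vocabulary, the `drop_ui_lines` helper, and the line-based heuristic are hypothetical; removing user names and logins, as well as rules 2 to 5, depends on the visual layout and is not covered.

```python
import re

# Hypothetical UI vocabulary for rule 1; the annotators applied the rule by hand.
UI_WORDS = {"like", "likes", "comment", "comments", "share", "shares"}


def drop_ui_lines(ocr_text: str) -> str:
    """Drop OCR lines that contain only social-network UI words (plus counts).

    Only the "Like"/"Comment"/"Share" part of rule 1 is approximated; user
    names and logins cannot be recognized from the text alone.
    """
    kept = []
    for line in ocr_text.splitlines():
        words = re.findall(r"[a-z]+", line.lower())  # digits are ignored
        if words and all(w in UI_WORDS for w in words):
            continue  # e.g., "12 Likes 3 Comments" or "Share"
        if line.strip():
            kept.append(line.strip())
    return "\n".join(kept)


print(drop_ui_lines("Vote like your future depends on it\n12 Likes 3 Comments\nShare\n"))
# → Vote like your future depends on it
```

A line is only dropped when every word on it is a UI word, so meme text that merely contains “like” survives, as in the example above.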
