Transparent, Live Migration of a Software-Defined Network


Soudeh Ghorbani∗, Eric Keller† , Matt Caesar∗ , Jennifer Rexford‡ , Cole Schlesinger‡, David Walker‡

∗ UIUC † University of Colorado ‡ Princeton University

Abstract

Live virtual machine (VM) migration is fast becoming an invaluable management tool in data centers. However, a VM rarely acts alone. A VM often has dependencies with other VMs in a multi-tier application, and with the underlying network. Cloud providers continue to expand the network functionality offered to tenants, from a “one big switch” abstraction today to proposals for virtual topologies tailored to application traffic patterns and network performance and cost. Seamless migration of all (or part) of a virtual network would greatly simplify management tasks like cloud bursting, moving-target defense, and optimizing resource usage. Drawing on advances in Software Defined Networking, our LIME (LIve Migration of Ensembles) architecture migrates VM and switch state, transparent to the controller and end-host applications. To seamlessly carry traffic during the transition, a virtual switch temporarily runs on multiple physical switches, with LIME merging network events, updating packet-handling rules, and combining traffic statistics to present a consistent view of a single switch. Our formal model shows that network behavior during migration matches a valid, migration-free execution. Experiments with our prototype, built on the Floodlight controller, suggest that network migration can be an integral network-management mechanism.

1 Introduction

Virtual machines (VMs) have emerged as a key technology for sharing resources and seamlessly migrating running software from one computer to another. However, modern applications consist of multiple VMs that are tightly coupled with the underlying network (e.g., to reach the other VMs, perform access control, and enforce QoS policies). Rather than migrating individual VMs, we show how to migrate all (or part) of an ensemble of VMs and network elements, while the hosts communicate seamlessly across the transition.

1.1 Case for Ensemble Migration

Once a research novelty, live VM migration is fast becoming an invaluable management tool in public and private clouds [8, 9, 4, 23, 7]. Migrating VMs, both within and across locations, gives network and service administrators the flexibility they need to consolidate servers, balance load, perform planned maintenance, prepare for natural disasters, and optimize user performance, without disrupting the applications. Yet today’s multi-tier applications often consist of multiple VMs, with significant interaction between VMs in neighboring tiers. Placing these VMs near each other improves performance and reduces network overhead. Migrating a single VM in isolation can lead to significant performance degradation and high bandwidth costs to “backhaul” traffic to the other VMs. As such, migrating a single application may require joint migration of a group of related VMs [5, 11].

These applications are often tightly coupled with the underlying network. The network provides reachability between the application VMs, directs traffic through virtual appliances like load balancers and firewalls, and applies resource-allocation policies like routing and packet scheduling. Though early cloud offerings give tenants a simplified view of the network, customers increasingly demand more sophisticated network functionality. Beyond offering tenants a “one big switch” abstraction [19], the cloud provider can offer topologies that group related VMs under (virtual) aggregation switches [6] or more general topologies that match application communication patterns [22]. The virtual topology enables tenants to reason about the performance and cost of sending traffic between different pairs of VMs, within or between data centers. Knowing the virtual topology also allows the cloud provider to optimize resource usage more effectively, rather than assuming an arbitrary traffic matrix.

The flow of traffic through a virtual network depends on the configuration of the virtual switches. The cloud provider may control the configuration of these virtual components or give tenants control over the flow of their own traffic within their own virtual networks, based on their application requirements. Some tenants may themselves be (virtual) cloud providers, using the infrastructure of a traditional provider to create their own footprint for a particular class of customers and services (e.g., financial applications). Yet the owner of the data center should have the flexibility to move virtual machines and switches to new locations, for a variety of reasons:

Optimizing resource usage: Cloud providers can adapt the placement of virtual machines and switches to make more efficient use of server and network resources, and to ensure that no single failure disrupts an existing tenant. For example, a provider could move a cluster of related VMs (and the virtual aggregation switch) to another “pod.” During periods of low load, the cloud provider could consolidate virtual components onto a smaller set of physical components to save energy.

Cloud bursting: An enterprise could move all or part of an application between a private data center and the cloud as traffic demands change. Migration can also enable seamless changes between data centers, or even cloud providers, for better performance or lower cost. An enterprise could also start a trial service in its own data center, and transparently migrate the production service (permanently) to the public cloud.

Moving target defense: In a public cloud, a tenant is vulnerable to side-channel attacks, where other tenants using the same physical server or link infer properties of the running application. To thwart adversaries, the cloud provider could periodically change the placement of VMs and switches, all while presenting the abstraction of a stable topology and configuration to the tenant.

In each case, live migration of an ensemble minimizes performance disruptions and completely avoids the unwieldy, error-prone task of reconfiguring a complex system of servers and switches in the new location. However, today’s VM migration techniques do not handle the network state, limiting VM migration to a single subnet or VLAN and requiring manual intervention to configure the network devices at the new location.

1.2 Ensemble Migration Challenges

While major server virtualization platforms support live VM migration, the migration of network state has received relatively little attention. A notable exception is the VROOM system [21], which migrates a single virtual router running BGP or OSPF, transparent to the end hosts and neighboring routers. However, VROOM does not naturally support other network functionality (such as switches, firewalls, and load balancers) or protocols. Perhaps more importantly, VROOM cannot simultaneously migrate a collection of hosts and network elements. In addition, VROOM understandably requires changes to the router implementation, impeding adoption. In contrast, our goal is to migrate an entire ensemble without any modifications to the server and network equipment.

To support ensemble migration in a generic way, we separate the control-plane state and logic from the individual network elements by capitalizing on the recent trend of Software Defined Networking (SDN) [3, 17, 2]. In an SDN, a logically-centralized controller manages how the switches forward packets. The controller exchanges control messages with the switches using a standard protocol, such as OpenFlow [18], and offers an API to controller applications. The switches provide a simple packet-forwarding abstraction, such as a table with a prioritized list of rules that match packets on patterns and perform actions. For example, OpenFlow switches match on the input port and packet header fields (e.g., MAC addresses, IP addresses, TCP/UDP ports, and VLAN tags), including wildcards for “don’t care” bits, and perform actions like dropping, forwarding, or flooding, or directing a packet to the controller for processing.

Unfortunately, migrating an SDN switch is not as simple as copying the rules from one physical switch to another. Since migrating a large amount of network state takes time, we cannot afford to stop all communication during the transition. Instead, our solutions must allow both the old and new physical switches to carry data traffic during the transition. Yet these two switches must present a unified view (e.g., of traffic statistics and control messages) of a single switch to the controller, including any changes made to the packet-processing rules during the transition. To make ensemble migration a general and widely-used management tool, we cannot make any assumptions about the (unmodified) controller application or the VMs running on the end hosts. The migration technique must preserve whatever dependencies this software has on the network. Finally, to avoid excessive overhead, our migration solution should minimize the “backhaul” traffic between the old and new parts of the network during the transition period.

1.3 LIve Migration of Ensembles (LIME)

LIME performs live migration of an ensemble of VMs and switches. LIME runs on the SDN controller, underneath the (unmodified) controller application, to provide the illusion of a single topology while gradually moving all of the components to a new location. LIME allows both the old and new networks to operate during the transition by synchronizing the switch state and creating tunnels to relay traffic between the two networks.
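The switch abstraction described in Section 1.2 (a prioritized list of match-action rules with wildcards) is the state that LIME must keep consistent across switch instances. The following Python sketch models such a table under simplifying assumptions: packets and patterns are dictionaries of header fields, a field omitted from a pattern acts as a wildcard, and actions are plain strings. The `Rule` and `FlowTable` names are hypothetical, and the sketch ignores bit-level masks, counters, and timeouts; it is not the OpenFlow wire format or Floodlight’s API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Rule:
    priority: int
    pattern: Dict[str, object]  # header fields to match; omitted fields are wildcards
    action: str                 # e.g. "drop", "forward:2", "flood", "controller"

    def matches(self, packet: Dict[str, object]) -> bool:
        # A packet matches if it agrees with every field the pattern names.
        return all(packet.get(name) == value for name, value in self.pattern.items())


@dataclass
class FlowTable:
    rules: List[Rule] = field(default_factory=list)

    def install(self, rule: Rule) -> None:
        self.rules.append(rule)
        # Keep the highest-priority rule first (the sort is stable, so ties keep install order).
        self.rules.sort(key=lambda r: r.priority, reverse=True)

    def lookup(self, packet: Dict[str, object]) -> Optional[str]:
        # Return the action of the highest-priority matching rule, or None on a table miss.
        for rule in self.rules:
            if rule.matches(packet):
                return rule.action
        return None


table = FlowTable()
table.install(Rule(10, {"in_port": 1, "tcp_dst": 80}, "forward:2"))
table.install(Rule(5, {"in_port": 1}, "controller"))
table.install(Rule(0, {}, "drop"))  # empty pattern: matches every packet

print(table.lookup({"in_port": 1, "tcp_dst": 80}))  # forward:2
print(table.lookup({"in_port": 1, "tcp_dst": 22}))  # controller
print(table.lookup({"in_port": 3}))                 # drop
```

Because the highest-priority matching rule wins, copying a table to another switch has to preserve rule priorities as well as patterns and actions.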

Our migration algorithms are carefully designed to avoid correctness problems that could arise from differences in the orderings of data packets or control messages in the two networks. LIME can also schedule the migration of VMs based on how much network traffic they exchange, to minimize the backhaul traffic during the transition. In this paper, we make the following contributions:

Modular LIME architecture: LIME presents a familiar controller interface to applications while seamlessly coordinating the migration of VMs and switches and the shifting of traffic to the new locations. Reusable building blocks support migration algorithms with different performance trade-offs.

Switch-cloning algorithm: LIME clones a switch while presenting a consistent view of network events, traffic statistics, and rule updates to controller applications. We divide cloning into steps that respect constraints on event ordering and on the handling of packets reaching different instances of the same switch.

Formal model of end hosts: We develop an abstract, formal model of software defined networks that includes network elements and end hosts. Researchers may use this model to reason about a variety of different algorithms involving the interactions between network and hosts (e.g., simple VM migration, end-host updates, consistent network updates, and so on). Prior SDN models, such as those proposed in [15] and [20], focus exclusively on the forwarding element and ignore end-host and controller interactions.

Proof of correctness: We formally define “observational equivalence,” where network behavior during migration matches an execution in the migration-free setting. Using our formal model, we prove the correctness of critical parts of our cloning algorithm.

LIME prototype and evaluation: We present a LIME prototype built on the Floodlight [12] controller. Our experiments show that LIME migration offers substantially lower packet loss and overhead than a simple “freeze and copy” solution.

2 LIME Architecture

LIME (LIve Migration of Ensembles) is a general and efficient technique for joint migration of VMs and network elements. LIME builds on top of a virtualization layer in an SDN controller, as shown in Figure 1. LIME enables network operators (or automated processes) to initiate an ensemble migration through an API for specifying which elements to migrate and where to place them. Critically, LIME masks the effects of migration from controller applications, allowing them to continue operating seamlessly on a virtual network that remains the same.

To the network operator, LIME provides a single “migrate ensemble” command. Since networks differ in their size, scope, and applications, LIME supports a range of migration algorithms with different cost-performance trade-offs, using a small set of high-level primitives for migrating virtual components, extensions to network virtualization technology to maintain the virtual topology throughout the migration, and a mechanism to plug in algorithms for orchestrating the migration process.

Figure 1: LIME architecture with example.

2.1 Ensemble Migration Primitives

The goal of migration is to change which physical devices host one or more of the virtual components (machines or switches) in a virtual network. The LIME framework consists of two basic primitives that can both achieve this goal, but with different trade-offs.

Move: The first way to migrate an element is to freeze it on one physical device, copy the state to a different device, and continue running in the new location; however, this operation cannot avoid incurring a small downtime during the move. As shown with existing VM migration technology, this process can be optimized by iteratively copying state while the component is running, so that only a small amount of state must be copied while it is frozen (i.e., only the state that has changed since the last copy). With VMs, the state changes are modifications to memory pages as the VM continues to execute; with an SDN switch, they are instead modifications to the table entries as the controller application continues to run during the move. The LIME move primitive can iteratively freeze-and-copy the state of an SDN switch, or leverage existing VM migration techniques to move a virtual machine.
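The iterative freeze-and-copy idea can be illustrated with a small Python sketch, modeled on pre-copy VM migration but applied to a switch’s rule table. It assumes a hypothetical `SwitchState` that records which entries the controller application modified since the last copy round; the `threshold`, `max_rounds`, and workload values are arbitrary illustrative choices, and rule deletions are not modeled. It is a sketch of the general technique, not LIME’s implementation.

```python
from typing import Callable, Dict, Set, Tuple


class SwitchState:
    """Hypothetical rule table that tracks entries changed since the last copy."""

    def __init__(self, rules: Dict[str, str]) -> None:
        self.rules = dict(rules)
        self.dirty: Set[str] = set(self.rules)  # nothing has been copied yet
        self.frozen = False

    def update(self, rule_id: str, rule: str) -> None:
        # A modification issued by the controller application while it keeps running.
        if self.frozen:
            raise RuntimeError("switch is frozen; updates must wait")
        self.rules[rule_id] = rule
        self.dirty.add(rule_id)

    def take_dirty(self) -> Set[str]:
        # Return the entries changed since the last call and reset the set.
        changed, self.dirty = self.dirty, set()
        return changed


def iterative_move(src: SwitchState,
                   app_round: Callable[[SwitchState, int], None],
                   threshold: int = 2,
                   max_rounds: int = 5) -> Tuple[Dict[str, str], int]:
    """Copy rules while the switch runs, then freeze and copy only the remainder."""
    dst: Dict[str, str] = {}
    for rnd in range(max_rounds):
        for rule_id in src.take_dirty():   # copy while the switch keeps running
            dst[rule_id] = src.rules[rule_id]
        app_round(src, rnd)                # the application keeps updating rules
        if len(src.dirty) <= threshold:    # little enough left to copy while frozen
            break
    src.frozen = True                      # the short downtime starts here
    remaining = src.take_dirty()
    for rule_id in remaining:
        dst[rule_id] = src.rules[rule_id]
    return dst, len(remaining)


# Hypothetical workload: the application updates fewer rules in each round.
def app_round(sw: SwitchState, rnd: int) -> None:
    for i in range(max(0, 3 - rnd)):
        sw.update(f"r{i}", f"fwd:{i}-v{rnd}")


src = SwitchState({f"r{i}": f"fwd:{i}" for i in range(6)})
dst, copied_while_frozen = iterative_move(src, app_round)
print(dst == src.rules, copied_while_frozen)  # True 2
```

In this run, all six rules are copied in the first round, three modified rules in the second, and only the last two modified rules are copied while the switch is frozen. Lowering `threshold` tends to shorten the freeze but may require more rounds, and `max_rounds` bounds the copying when the application keeps modifying rules.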

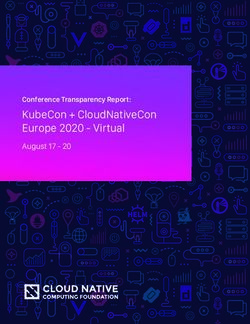

Clone: While move is simple and efficient, any packets

a component receives during the freeze phase are lost.

With clone, the virtual component runs concurrently on

multiple physical devices, with the state kept consistent Figure 2: Illustration of clone operation. Virtual switch

across the instances. LIME has no restriction on the S has been cloned to instances S’ and S” – select and

number of times a component is cloned—in fact, a new merge are not physical elements but operations on traffic

ensemble migration can begin before the previous one performed inside of the switches (incoming traffic to S

has completed. While we focus our efforts on cloning is logically selected to determine which instance should

the switches, VMs can be cloned as well (e.g., single- receive the traffic, outgoing traffic from S’ and S” is log-

machine VM cloning in Remus [10]). With cloning, traf- ically merged into a single stream)

fic can be redirected from one instance to another without

any packet loss. The downside, however, is that cloning

imposes the added cost of maintaining a consistent view new connection (either a direct link or a tunnel).

of a single element. As we discuss in Section 3, this The clone operation requires a more nuanced ap-

includes extra processing overhead on the controller, ex- proach, as it introduces multiple concurrent instances

tra overhead for performing updates, and restrictions on of a component, each able to handle traffic. Traffic

which data-plane features can be used during migration. traversing a single virtual link destined for a virtual

With LIME, both the clone and move operations can component—say, S in Figure 2—must in actuality tra-

operate on one or more components. Moving or cloning verse a single instance—either S′ or S′′ . LIME achieves

an entire set of components performs the operation on this by exposing a select operation on the virtual link that

all of the elements in the set simultaneously. Doing so controls which instance of a cloned element receives the

enables certain optimizations, such as traffic-optimizing traffic. In practice, LIME implements the selection via a

VM migration [11]; however, grouping elements into simple rule transformation on the switches—it does not

sets introduces its own trade-offs, as we discuss in Sec- require a separate physical device. In fact, this is the

tion 2.3. same transformation employed by the move operation to

move links, although in the move operation, traffic al-

ways shifts immediately to the new location.

2.2 Maintaining The Virtual Topology

By default, traffic is directed to the nearest neighbor-

When migrating an ensemble, LIME must maintain the ing clone switch, where distance is determined by the

virtual links seen by the controller application. A vir- length of the tunnel (and direct links are shortest). For

tual link may map to a physical link, or a tunnel over example, suppose a VM connects directly to a switch,

a physical path. LIME creates a tunnel in two steps. and the switch is cloned. The VM may now connect

First, LIME picks a tag, calculates a path between the to the cloned instance via a newly created (and, for the

two physical ports being connected, and updates the rules sake of argument, very long) tunnel—in this case, LIME

on each intermediate physical switch to forward tagged selects the direct link by default, rather than the tunnel.

packets along the path. At this point, the tunnel exists, When the VM later migrates, perhaps to a new location

but no traffic is entering it. Next, LIME updates rules closer to the cloned switch, the select operation is up-

on the physical switches at either end of the tunnel to dated to choose the new nearest neighbor.

tag packets and forward them along the newly-created To maintain the view of a single virtual link, outgoing

path. Tags are then stripped from packets emerging from traffic from each port of the cloned switch instances must

the tunnel. When a virtual component changes physical be merged to appear as a single stream from the virtual

location, these rules are updated to maintain the virtual switch. Like the select operation, this logical merge can

links. be achieved through rule translation on the switches. One

For move operations, this is fairly straightforward. implementation strategy relies on replacing each logical

When moving a switch, any rules that match on the input rule on each neighboring switch—R and T in Figure 2—

port are changed to match on the new port, which may with two rules, one to handle traffic from each physical

be either a direct link or tunnel. For each neighboring port. The exact implementation of the select and merge

switch, any actions that forward out a port connected to operations depends on the underlying hardware.

the migrating component must be changed to reflect the Once the move operation finishes, the old physical re-

4sources are freed for the virtualization layer to allocate (iii) migration time (for the entire ensemble or individual

elsewhere. In the case of a clone operation, there is elements), (iv) acceptable levels of packet loss, and (v)

no explicit end of a clone’s lifetime. Instead, we ef- acceptable overhead in the controller. As future work,

fectively employ a light-weight mechanism analogous we intend to provide a more in depth exploration of new

to reference-counting based garbage collection (albeit algorithms and expand on existing algorithms for node-

much simpler). Once no input ports of the virtual switch by-node network migration [16].

instance are still selected, the instance is deleted.

3 Consistent View During Switch Cloning

2.3 Ensemble Migration Orchestration

The main technical challenge in ensemble migration is

In order to support the vastly different scenarios such as ensuring the correct operation of the network during mi-

cloud-bursting, resource optimization, and moving tar- gration, for arbitrary applications running on the end

get defense, LIME must be flexible. To balance cost- hosts and the controller. In this section, we present tech-

performance trade-offs, LIME can apply custom algo- niques for cloning a switch, and allowing multiple in-

rithms that control the approach, order, and grouping of stances of a switch to carry data traffic, while presenting

components in a migration. a consistent view of a single switch to the application.

Approach: The approach—the choice to either move For ease of exposition, we introduce the cloning algo-

or clone a given component—offers a trade-off during an rithms in stages, starting with a simplified environment

ensemble migration. A clone operation enables loss-free where rules do not change during migration and events

migration but imposes extra overhead on the controller can arrive at the controller in any order. Then we gradu-

and limits the use of certain hardware features during mi- ally relax these assumptions.

gration. A move operation avoids these overheads and

limitations, at the expense of packet loss. 3.1 Copy Rules and Merge Events

Order: The order in which components are migrated

impacts the amount of “backhaul” traffic traversing the Initially, we consider a simplified setting where the

physical network between the old and new locations. switch rules do not change during migration and the con-

However, ordering the components to minimize back- troller may not assume that events from a single switch

haul traffic may be more disruptive to an individual ser- are ordered—e.g., if first one packet and then another ar-

vice. For example, consider two groups of VMs that pro- rive at a switch and are sent to the controller, the latter

vide two services. Migrating every VM from the first may arrive at the controller first. These assumptions are

group may minimize backhaul traffic, as VMs from that appropriate in many practical settings, and they allow for

group never need to communicate between the old and a straightforward migration algorithm. Specifically, we

new networks. Of course, migrating every VM in the assume the following:

group at once renders the service unavailable for the du-

ration of the move. Many services are tolerant of partial No changes to switch rules: During a migration event,

failure, however, and so alternating between VMs in the the controller does not install any new rules in the logi-

two groups may mitigate potential downtime. cal switch. Instead, the controller queues any updates

Grouping: The grouping of components to migrate at and applies them to the new instance of the switch after

the same time can have an impact. Migrating many com- migration completes. This is a reasonable assumption

ponents simultaneously may reduce control traffic (e.g., if migration can be performed quickly. In addition, the

less state to copy) but may also increase disruption or switches do not apply hard or soft timeouts that would

overhead. Moving switches individually will incur short automatically delete rules, as one instance of the switch

bursts of loss but take longer overall, whereas moving may delete a rule when the other does not—we discuss

every switch at once would lead to a single, longer pe- how to emulate rule timeouts in Section 3.4.

riod of loss, but the entire migration would finish more

quickly. Cloning the entire network of switches would No ordering constraints on events: The switch

be quicker and incur less backhaul traffic than cloning informs the controller about events, such as link failures

each switch iteratively, but synchronizing state for many and packets requiring further handling. Even a relatively

components has more overhead. simple switch has distributed components, such as

In general, an operator may tune the approach, or- multiple line cards. As such, the switch may not report

der, and grouping of the ensemble migration process for events in precisely the order they occur; in fact, today’s

metrics such as (i) amount of backhaul data traffic, (ii) OpenFlow specification does not require switches to

amount of control traffic (to copy or synchronize state), create messages for events in order. For example, two

packets arriving on different line cards may trigger packet-in events in the opposite order, unless the switch has precise mechanisms for imposing an ordering on these events. As such, we initially assume that the switch does not necessarily report events in order.

Under these two assumptions, a relatively simple algorithm suffices for cloning a switch. First, LIME installs all the rules on the new switch before allowing traffic to traverse it. Second, when both switches are handling traffic, LIME merges events from the two switches as they arrive. For example, packet-in events from the two switches are relayed to the controller application as they arrive. In addition, if the application queries traffic counters, LIME queries the statistics on both switches and sums the results; similarly, if a link fails at either switch, LIME reports a link failure to the application. Third, once the original switch stops handling data traffic, LIME applies any pending updates to the new instance of the switch.

3.2 Update Rules in Two Phases

Next, we relax the first assumption in Section 3.1 to allow the controller to update rules during migration:

Controller updates to the rules: Migration should be transparent to the controller application. As such, buffering every update during migration is not acceptable if migration takes a long time. Yet updating the rules is challenging when two instances of a switch handle data traffic at the same time: given the distributed nature of switches, LIME cannot ensure that both switches apply the updates at exactly the same time. Applying updates at different times can violate (unspecified) application dependencies. As an example, consider a request packet that traverses a switch and triggers a response packet that, in turn, traverses a clone of the same switch. Since updates do not occur instantaneously, the response packet might encounter an older version of the rules than the request packet did, something that would never occur in the migration-free setting.

To obey application dependencies, LIME must ensure that data traffic has a consistent experience at any instance of a switch, despite the ongoing update. LIME can achieve this goal in two different ways: by dropping packets during an update, or by directing the affected packets through a single instance of a switch. In the first algorithm, LIME performs a two-phase update to change a rule. In the first phase, LIME installs a rule with the same pattern, but with an action of “drop” (or “send to controller”) to discard (or serialize) the packets. Packet loss is always possible in a best-effort network, and it is preferable to inducing behavior in the network that violates the correctness of the controller or end-host applications. Once LIME knows that both switch instances have installed the new rule (e.g., through the use of a barrier), the second phase installs the original rule with the action specified by the application. This ensures that any dependent packet is either dropped (or sent to the controller) or processed appropriately by the updated rule. Note that the packet loss (or extra controller traffic) only affects traffic matching the pattern in the updated rule, and only during the first phase of the update.

The two-phase update algorithm introduces packet loss or extra packet-processing load on the controller, depending on whether packets are dropped or sent to the controller. To avoid these overheads, the atomic update algorithm directs traffic through a single instance of the switch. Before updating either switch instance, LIME installs rules in one switch to direct traffic matching the pattern to the other for processing. LIME can then update this single switch atomically, avoiding any inconsistency in packet handling, at the expense of longer paths for some packets.

3.3 Preserve Event Dependencies

In the previous two subsections, we assumed that an SDN switch does not necessarily convey local events to the controller in the order they occur. In this subsection, we relax this assumption:

FIFO ordering of events from a single switch: A switch can order control messages by the time the events occur. (Imposing an ordering across multiple switches is much less practical, given clock differences between switches.) The FIFO ordering simplifies reasoning about the evolution of the system. Consider a stateful-firewall application that only delivers server traffic sent in response to a client request. The controller application could install a rule that performs two actions on client traffic: (i) sending the packet header to the controller (e.g., to trigger installing a rule permitting the server’s return traffic), and (ii) forwarding the packet to the server. The application could also install a default rule that sends server packets to the controller, in the absence of a more-specific rule forwarding the traffic directly to the client. Now, both the client request and the server response trigger packet-in events. With a FIFO ordering of events, the controller would never receive the packet-in event for the server’s reply packet before the packet-in event for the corresponding request, ensuring that the application does not mistakenly block the server traffic.

If the SDN protocol ensures FIFO ordering, the controller application may implicitly rely on FIFO ordering to enforce dependencies between packets. LIME must respect these dependencies when merging events from

6multiple instances of a switch. Fortunately, a best-effort ters, deleting the rule if no traffic matches the rule (on

network does not ensure in-order packet delivery. Two any instance of the switch) for a period of time. To delete

packets sent independently may arrive at the switch in the rules, LIME can follow the same two-phase update

either order; so, if these packets reach different instances procedures outlined in the previous subsections. Since

of the same switch, the controller need not preserve the the application API does not offer precise timing guaran-

order of the packet-in events. But, if the arrival of one tees, a coarse-grain approximation of timeouts does not

packet could trigger the transmission of the other, the compromise the correctness of the controller application.

controller must preserve the order, in case the application And, when no migration is in progress, LIME can freely

depends on the ordering. These dependencies arise if a install rules with hard or soft timeouts to offload the dele-

rule has multiple actions—both “send to the controller” tion of rules to the underlying switches.

and “forward,” as in the stateful-firewall example. This Over time, we envision that SDNs will evolve to of-

application must operate correctly if the client request fer controller applications an API with higher-level pro-

traverses one instance of the switch, and the server re- gramming abstractions, deviating more and more from

sponse traverses the other. To serialize these events, the low-level API to the underlying switch hardware. Fu-

LIME transparently replaces any such rule with a single ture SDN platforms may completely shield the program-

“send to the controller” action, and performs the “for- mer from nitty-gritty details like timeouts. In our future

warding” action at the controller. This ensures a FIFO work, we plan to explore how LIME can capitalize on

ordering of dependent packet-in events during migration, this trend to simplify support for ensemble migration.

at the expense of extra overhead on the controller and

extra latency for the traffic, just for policies that want to

perform these two actions at the same time. 4 Migration Correctness

Intuitively, we say that a whole-network migration is cor-

3.4 Emulate Rule Timeouts on Switches rect if every sequence of observations that a client (i.e.,

The previous subsections assume that only the controller the controller application and end-host virtual machines)

adds, deletes, or modifies rules. Yet, switches may sup- can make during a migration could also have been ob-

port local modifications to rules. Whether or not these served in a migration-free setting. In this section, we de-

features are exposed to controller applications depends velop an abstract network model and use it reason about

on the API the controller offers: the correctness of LIME migration. There are three main

contributions here: (1) we develop an abstract model for

reasoning about algorithms over software-defined net-

Controller API that supports rule timeouts: Today’s

works that includes both end hosts and forwarding ele-

OpenFlow controller platforms allow controller applica-

ments, (2) we give a mathematical definition of what it

tions to install a rule with a hard timeout (that deletes

means for an ensemble migration to be correct (indepen-

a rule after a fixed time elapses) or a soft timeout (that

dent of what algorithm is used to achieve the migration),

deletes a rule after a period of inactivity), although the

and (3) we define a collection of reusable theoretical ab-

specification makes no guarantees as to the accuracy of

stractions (unobservable updates, network clones, and

these timeouts. However, allowing rules to expire makes

VM migrations) sufficient to prove that the core LIME

it difficult for two instances of a switch to behave con-

algorithms satisfy our notion of correctness.

sistently. Depending on when a rule in installed, or how

much traffic matches the rule, a rule may expire on one

instance of a switch but not the other, leading to incon- 4.1 SDN Model

sistent packet-forwarding behavior when both switches

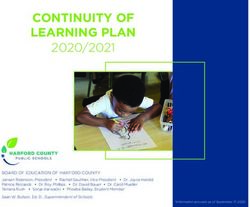

handle data traffic. For example, a client request packet Figure 3 presents the central components of our SDN

may encounter a switch instance that has not deleted a model. The figure defines a collection of mathemati-

rule, but the subsequent server response packet may en- cal objects with their types. These types include pairs

counter a switch instance that has already deleted the (×), alternatives/enumerations/unions (+), total func-

rule, leading to inconsistent behavior. tions (→), partial functions (⇀) as well as sets, multisets

LIME offers controller applications the abstraction and lists. Those objects listed without types specified are

of rule timeouts, while retaining complete control over simple atomic data with uninteresting or irrelevant struc-

changing rules in the switches. If the application requests ture. For instance, a located packet (lp) is a pair of a port

a hard timeout, LIME installs the rule without a timeout (p) and a packet (pk). Ports are assumed to be named in

and deletes the rule after a fixed period of time. If the a globally unique way (in other words, a port name in-

application requests a soft timeout, LIME installs a rule cludes the name of the switch on which it lives). As an-

without a timeout and periodically polls the traffic coun- other example, switch update is a (partial) function from

a located packet to a set of located packets paired with a set of identifiers.

Figure 3: Network elements.

| Element | Symbol | Type |
|---|---|---|
| Packets: | | |
| Packet | pk | |
| Port | p | |
| Located Pkt (LP) | lp | Port × Packet |
| Trace | t | LP List |
| Observations: | | |
| Query ID | id | |
| Query IDs | ids | ID Set |
| Host ID | id_h | |
| Host IDs | ids_h | ID Set |
| Observation (Obs) | o | ID + (ID_h × LP) |
| Observations | os | Obs Multiset |
| Switch Update | u_S | LP ⇀ LP Set × ID Set |
| Host Update | u_H | Port × ID_h |
| Update | u | Switch Update + Host Update |
| Updates | us | Update List |
| Port Map | M | Port ⇀ Port |
| Network State: | | |
| Switch | S | LP ⇀ (LP Set × ID Set) |
| Topology | T | 𝒫(Port × Port) |
| Hosts | H | Port ⇀ (ID_h × (Packet ⇀ Packet Set)) |
| Packet Queue | Q | Port → (Packet × Trace) List |

The key physical structures that define the state of a network are found towards the bottom of the figure. The first (S) is an abstract specification of the switch configurations of the network. For instance, if S(lp) = ({lp′}, {id}), then the network switch will forward the located packet lp across its switching fabric, producing a single packet lp′ at one of its output ports. At the same time, id will be emitted. Such ids are an abstract representation of the counters on the switch elements that a controller may read. By emitting a particular id, the switch represents the fact that a specific counter of interest has been incremented. Note that a switch may generate one result packet (as above), zero result packets (indicating the switch will drop such inputs), or many result packets (indicating the switch will broadcast such inputs across multiple links).

The topology T is a relation between ports. It represents the connections between switches and hosts. We also use T to model tunnels between switches. Hence, two ports are related if they are either directly connected or a tunnel has been established between them.

The function H represents the ports where particular hosts are attached to the network. More specifically, if H(p) = (id_h, f), then the host identified by id_h exists off port p, and that host’s relevant packet-processing state is represented by the function f. If, in turn, f(pk) = {pk_1, pk_2}, then the packet-processing state is such that if a packet pk appears at the host, the host will analyze it and return the set of packets that includes pk_1 and pk_2 as a response.

The map Q represents the state of the network’s packet queues. For example, if Q(p) = [(pk_1, t_1), ...], then at port p there exists a queue of packets, and the first packet in the queue is pk_1. The object t_1 paired with the packet is the packet’s trace, that is, the history of its travels through the network. This trace is not something that the system implements physically; it appears in the model strictly to facilitate proofs of correctness.

Finally, M maps ports in the original network to their new locations in the migrated network; in essence, M characterizes the target of the migration.

4.2 Operational Semantics

Figure 4 explains how a network executes using a conventional operational semantics.¹ The inference rules provided allow us to conclude relations of the form

$$(Q, S, H, us) \xrightarrow{os} (Q', S', H', us').$$

¹ This figure presents a set of inductive inference rules. These rules are structured so that assertions above the line in a rule are premises or preconditions, and the assertions below the line are conclusions that may be drawn when the premises are proven.

Intuitively, the above relation states that when the network is in a state described by the queue Q, the switching function S, and the hosts H, and there is a series of network updates us to process, then the network may transition to a state in which the queue is now Q′, the switching function S′, the hosts H′, and the remaining updates us′. When the network executes such a transition, one may make some observations os concerning the network. Such observations include the observation that a packet has traversed a switch that emits a query id, and the observation (id_h, pk), which indicates that a packet pk has arrived at end host id_h. Intuitively, these observations capture those network state changes that a controller or end host may observe and care about. Note that observations are represented as a multiset (as opposed to a set) so the formal system can “count” the number of occurrences of each observation.

Each rule in Figure 4 represents a discrete action that may occur in the network. As an example, the rule [Switch] removes a located packet from the queue, applies the switch function, applies the topology relation to the resulting packets, and enqueues the results. Two observations can be made of this step: (1) the packet updates local, observable state on the switch (e.g., a counter), and/or (2) the packet is forwarded to an end host. As another example, the rule [Update-Switch] does not emit any
observations. Rather, it consumes the first update from the list of updates to produce a new switch function: the clause [u_S] ++ us′ states that the switch update u_S is the head of the update list concatenated with us′ (the tail), and override(S, u_S) yields a new switch function that forwards packets according to u_S if u_S(lp) is defined, or according to S otherwise.

Figure 4: Network operational semantics. Each rule concludes a judgment of the form

$$(Q, S, H, us) \xrightarrow{os} (Q', S', H', us')$$

$$
\frac{\begin{array}{c}
(lp, Q') = \mathit{dequeue}(Q) \qquad S(lp) = (lps, ids) \\
lps' = \{(p_i', pk_i) \mid (p_i, pk_i) \in lps \wedge (p_i, p_i') \in T\} \\
os = ids \cup \{(id_h, pk_k) \mid H(p_k) = (id_h, f) \wedge (p_k, pk_k) \in lps'\} \qquad Q'' = \mathit{enqueue}(Q', lps')
\end{array}}
{(Q, S, H, us) \xrightarrow{os} (Q'', S, H, us)}\;\text{[Switch]}
$$

$$
\frac{\begin{array}{c}
(lp, Q') = \mathit{dequeue}(Q) \qquad lp = (p, pk) \qquad (id_h, f) = H(p) \qquad f(pk) = pks \\
lps = \{(p, pk') \mid pk' \in pks\} \qquad lps' = \{(p_i', pk_i) \mid (p_i, pk_i) \in lps \wedge (p_i, p_i') \in T\} \qquad Q'' = \mathit{enqueue}(Q', lps')
\end{array}}
{(Q, S, H, us) \longrightarrow (Q'', S, H, us)}\;\text{[Host]}
$$

$$
\frac{us = [u_S] \mathbin{+\!\!+} us' \qquad S' = \mathit{override}(S, u_S)}
{(Q, S, H, us) \longrightarrow (Q, S', H, us')}\;\text{[Update-Switch]}
$$

$$
\frac{\begin{array}{c}
us = [(p, id_h)] \mathbin{+\!\!+} us' \qquad H(p') = (id_h, f) \\
H' = \mathit{update}(H, p, (id_h, f)) \qquad H'' = \mathit{clear}(H', p') \\
Q' = \mathit{update}(Q, p, Q(p')) \qquad Q'' = \mathit{update}(Q', p', [\,])
\end{array}}
{(Q, S, H, us) \longrightarrow (Q'', S, H'', us')}\;\text{[Update-Host]}
$$

In the following sections, we will write

$$(Q, S, H, us) \xrightarrow{os}{}^{\star} (Q', S', H', us')$$

to represent a sequence of zero, one, two, or more of the transition steps described in Figure 4. Given such a relation, we say that Q is an initial state if every located packet in Q (in both queues and traces) is located at a port in either (a) the domain or range of the initial switch function S, or (b) the domain of the initial host map H.

4.3 Statement of Correctness

A migration-free execution is any execution that does not undergo updates issued by the migration mechanism.

Definition 1 (Migration-free Execution). An execution

$$(Q, S, H, [\,]) \xrightarrow{os}{}^{\star} (Q', S, H, [\,])$$

is a migration-free execution.

We say that a migration mechanism (i.e., a series of updates) is correct if every set of logical observations that can be generated during a migration may also have been generated in a migration-free setting. A migration-free setting may produce more observations (after all, a migration mechanism may drop some packets), but a migration will not introduce new behavior.

Definition 2 (Logically Unobservable Migration). A sequence of updates us is logically unobservable if, for all observations os generated during a network migration

$$(Q, S, H, us) \xrightarrow{os}{}^{\star} (Q', S', H', us')$$

from any initial state Q, there exists a migration-free execution

$$(Q, S, H, [\,]) \xrightarrow{os'}{}^{\star} (Q'', S, H, [\,])$$

such that os ⊆ os′.

4.4 Migration Strategies

We begin by proving the correctness of the simple migration algorithm, first presented in Section 3.1, that buffers switch configuration updates from the controller until after the migration is complete. The algorithm has two phases: first, a clone update sequence clones each switch in the original network, updating the boundary switches to tunnel traffic exiting the new network to the old; and second, for each traffic source in the migration zone, a source migration sequence blocks, freezes, and copies it.

First, we define the properties that characterize a clone update sequence and prove that any such sequence is logically unobservable. Then, we define the source migration sequence and prove that a clone update sequence followed by a source migration sequence is logically unobservable.

4.4.1 Clone Phase

Intuitively, a clone update sequence consists of a series of updates that copy configurations from an existing, in-use set of switches to a “fresh” (i.e., currently unused) set
of clone switches. A clone update sequence is always accompanied by a map M that relates ports on the original switches to ports on the cloned switches. For example, if p_old is a port on one of the existing switches, then we write M(p_old) to denote the corresponding port p_new on the cloned switch.²

² Given a located packet lp = (p, pk) and a set of such located packets {lp, ...}, we also let M(lp) = (M(p), pk) and M({(p, pk), ...}) = {(M(p), pk), ...}.

After every update has been applied, each switch in the original network has a counterpart in the extended network, and one of two properties holds. If the switch is not a boundary switch, then its counterpart mirrors its rules exactly, modulo the translation on ports. Otherwise, its counterpart is reconfigured to tunnel any external boundary ports M(p_b) directly to the traffic sink attached to the original port p_b. In practice, the tunnel is configured to go through port p_b on the original switch in order to minimize latency.

Definition 3 (Clone Update Sequence). Let us be a sequence of switch updates, S′ = override(S, us), and M be a mapping from ports to ports. The update sequence us is a clone update sequence if and only if the following properties hold.

1. Fresh switches. For all u ∈ us, dom(S) ∩ dom(u) = ∅, and for all lp, if S(lp) = (lps, ids), then lps ∩ dom(u) = ∅.
2. Faithful cloning. For all located packets lp, if S(lp) = (lps, ids), then S′(M(lp)) = (M(lps), ids).
3. Boundary forwarding. For all boundary ports p_b, if (p_b, p′) ∈ T, then (M(p_b), p′) ∈ T.

A clone update sequence is logically unobservable.

Theorem 1. If an update sequence [u_1, ..., u_n] is a clone update sequence, then [u_1, ..., u_n] is logically unobservable.

The proof proceeds by induction on the structure of the multi-step relation and exploits the fact that a clone update only modifies the forwarding behavior of the network and, moreover, only introduces rules that traffic cannot (yet) reach.

4.4.2 Block, Move, and Forward Phase

Once the original network has been cloned, we can begin to migrate traffic sources to their target destinations. For each VM attached to a host port p ∈ dom(H), we take the following actions.

1. Block. For each port p′ attached to p, either by the topology or via a tunnel, update the switch function to drop traffic destined for p′.
2. Move. Next, freeze the VM, move it to its target location, and unfreeze it.
3. Forward. Finally, update the switch function so that, rather than dropping traffic destined for port p′, it tunnels that traffic to the new host location.

We elide mathematical definitions of block, move, and forward updates, as they are detailed but unsurprising; here, we simply define the full source migration sequence as follows.

Definition 4 (Source Migration Sequence). Let p be a host port, and let u_b be a block update, u_m a move update, and u_f a forward update, all with respect to p. We say that the sequence [u_b, u_m, u_f] is a source migration sequence with respect to port p.

Theorem 2. A clone update sequence followed by a source migration sequence is logically unobservable.

The proof relies on a property of clone update sequences: after the update sequence completes, for any path a packet might take through the extended network, that packet will take an equivalent path when injected into the original, pre-cloned network. Hence, every trace generated during a source migration sequence has an equivalent (albeit possibly longer) trace that could be generated during a migration-free execution.

A clone update sequence is also characterized by faithful cloning: every located packet in every trace generated during a source migration sequence is generated by some switch rule, and that rule has an equivalent counterpart in the original switch configuration that produces exactly the same observations.

Hence, if every trace generated during a migration has an equivalent or longer trace generated in a migration-free setting, and traces generate the same observations, then every multiset of observations generated during a migration could have been generated during a migration-free execution.

4.5 Logical Updates During Migration

In the previous section, we proved that network behavior during a migration is indistinguishable from network behavior in a migration-free setting. The proof relies on a particular property of the clone update sequence: for any located packet in any trace through a migrating network, the rules on the switch that produce it are exactly the same as on its counterpart switch in the original network. Naively interspersing updates from the controller invalidates this property and can lead to the kinds of nuanced problems described in Section 3.2.

For example, imagine the controller emits a switch update u during migration. At the very least, LIME must
duplicate u across any switch clones. But switches are updated asynchronously, so there may exist a window when a packet can traverse a cloned switch that does not match the rules installed on the original.

To overcome this dilemma, LIME translates each switch update u from the controller using a two-phase update: first, u is rewritten to u′, which drops packets and emits no observations, and u′ is installed on both the original and cloned switches. Then, once u′ has been installed on both switches, u is installed, replacing u′.

Now, consider any trace that hits u′ during the window of inconsistency when u is installed on one switch but u′ is still installed on the other: u′ drops any packets it matches without emitting any observations, and so the trace ends there. Critically, the result of applying u′ is not in the trace, because the packet was dropped. And so the property established by the clone update sequence is preserved: every rule that produces a packet is identical to a rule on its counterpart switch in the original network, because the inconsistent rule does not produce a packet. Hence, a logically unobservable migration interspersed with two-phase updates from the controller remains logically unobservable.

5 Implementation and Evaluation

We have developed a prototype implementation of LIME on the Floodlight SDN controller [12]. LIME resides in a layer between the Floodlight controller and controller applications, exposing an API with which a network operator may issue migration commands. Our initial experience with this prototype suggests that LIME is capable of performing migration while substantially reducing outage time (as measured by packet loss), without imposing much overhead on the controller, and without inducing significant degradation in the quality of service seen by applications running on end hosts.

We evaluate the LIME prototype in three situations:

• Measuring packet loss, jitter, and latency as traffic flows through the virtual network during a migration.

• Measuring the time it takes to migrate a network as the number of rules and switches increases.

• Measuring the overhead incurred on the controller during a migration.

In each case, we compare the LIME clone migration algorithm described in Section 3.2 to a naive mechanism that simultaneously freezes and then copies all the network elements undergoing migration.

5.1 Packet Loss, Jitter, and Latency

We run two experiments to measure packet loss, jitter, and latency. In the first, only switches are migrated while the VMs generating traffic remain stationary. In the second, both the VMs and the switches migrate. Both experiments instantiate their respective virtual topologies on four types of physical topology: VL2 [13] and BCube [14], which represent data-center topologies, as well as scale-free and grid topologies.

Figure 5: Topologies used in experiments. (a) Chain; (b) Tree.

The virtual topology of the first experiment is shown in Figure 5(a). Ten packets are sent from one end host to the other (the small circles in the figure) as the migration of S1 to S1′ and S2 to S2′ is initiated; the experiment was run five times on each physical topology. The freeze-and-copy approach showed an average packet loss ranging from 21% to 24%, depending on the underlying physical topology. LIME does not freeze switches during migration, so, as one might expect, the LIME migration exhibited no packet loss.

Figure 6: Comparison of packet loss between LIME and Freeze and Copy. (CDF of packet loss percentage per 1 ms interval, 0 to 45%, for LIME and Freeze and Copy.)

The second experiment uses the virtual topology shown in Figure 5(b), where VM h1 and switch S1 are migrated as h1 sends traffic to h2. Traffic is sent at a rate of 2000 packets/second for five seconds, and the migration is initiated two seconds after the flow of traffic begins. The experiment is repeated, emitting packets at intervals according to constant, normal, and Poisson distributions; each
variation was repeated twenty times. Figure 7 presents the results, with LIME showing a significant improvement over the freeze-and-copy approach.

Figure 7: Effect of migration with LIME on packet delay. (Plot of delay, 0 to 0.002, versus time (s), 1.8 to 2.4, for LIME.)
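The evaluation does not spell out how the per-interval loss plotted in Figure 6 was computed or how the constant, normal, and Poisson send schedules were generated. As a rough illustration only, the Python sketch below shows one plausible way to reproduce such measurements offline, assuming every sent packet is logged with its index and send timestamp and the receiver reports which indices arrived. The helper names (`send_times`, `loss_per_interval`, `empirical_cdf`), the spread of the normal distribution, and the 50 ms outage in the demo are hypothetical choices, not part of LIME or its test harness.

```python
import random
from collections import Counter


def send_times(rate_pps, duration_s, dist, seed=0):
    """Return send timestamps (seconds) for packets at an average rate.

    dist is "constant", "normal", or "poisson". The normal spread
    (a quarter of the mean gap) is an illustrative assumption.
    """
    rng = random.Random(seed)
    mean_gap = 1.0 / rate_pps
    t, times = 0.0, []
    while True:
        if dist == "constant":
            gap = mean_gap
        elif dist == "normal":
            # Clamp so a normal draw can never produce a non-positive gap.
            gap = max(1e-9, rng.gauss(mean_gap, mean_gap / 4))
        elif dist == "poisson":
            # A Poisson arrival process has exponentially distributed gaps.
            gap = rng.expovariate(rate_pps)
        else:
            raise ValueError(f"unknown distribution: {dist!r}")
        t += gap
        if t >= duration_s:
            return times
        times.append(t)


def loss_per_interval(sent, received, interval_s=0.001):
    """Map each interval index to the percentage of its packets that were lost.

    sent[i] is the send time of packet i; received is the set of packet
    indices that reached the destination. Intervals with no sent packets
    are omitted.
    """
    sent_count, lost_count = Counter(), Counter()
    for pkt_id, t in enumerate(sent):
        slot = int(t // interval_s)
        sent_count[slot] += 1
        if pkt_id not in received:
            lost_count[slot] += 1
    return {slot: 100.0 * lost_count[slot] / n for slot, n in sent_count.items()}


def empirical_cdf(values):
    """Return (value, cumulative fraction) pairs, one per sample, in sorted order."""
    xs = sorted(values)
    n = len(xs)
    return [(x, (i + 1) / n) for i, x in enumerate(xs)]


if __name__ == "__main__":
    for dist in ("constant", "normal", "poisson"):
        times = send_times(2000, 5.0, dist, seed=1)
        # Hypothetical outage: drop every packet sent in [2.00 s, 2.05 s).
        received = {i for i, t in enumerate(times) if not 2.0 <= t < 2.05}
        cdf = empirical_cdf(loss_per_interval(times, received).values())
        lossless = sum(1 for loss, _ in cdf if loss == 0.0) / len(cdf)
        print(f"{dist}: {len(times)} packets, {lossless:.1%} of intervals lossless")
```

At 2000 packets/second, each 1 ms interval holds only about two packets, so per-interval loss takes a few coarse values; summarizing it as a CDF, as Figure 6 does, makes runs with different send distributions easy to compare.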

5.2 Total Migration Time

Next, we examine the total time a LIME migration requires, broken down by each step in the algorithm. We arrange twenty switches in a chain topology, with one VM connected to each switch, and the entirety of the virtual topology (switches and VMs) is included in the migration. Figure 8 shows how the migration algorithm scales as more rules are installed on each switch and as more switches are added to the chain.

Figure 8: Effect of the network size on the migration time. (Two panels: migration time (s) versus number of rules per switch, 0 to 100, and versus size of the network, 0 to 50; series: Total, Clone, Establish tunnels, Reverse tunnels, Delete rules.)

While the total migration time may seem high, the services being migrated are unavailable for much shorter periods. Figure 9 shows the length of network downtime as the number of rules and switches increases, respectively.

Figure 9: Effect of migration with LIME on disruption time. (Two panels: disruption time (s) versus number of rules per switch, 0 to 100, and versus size of the network, 0 to 50; series: LIME, Freeze and Copy.)

5.3 Controller Overhead

LIME resides as a layer on the controller and may induce additional processing overhead on the underlying machine. We use the cbench controller benchmarking tool [1] in “throughput” mode, emulating ten switches, and compare the number of packet-in events a LIME-augmented controller can handle with the number handled by the standard Floodlight controller. The LIME prototype processed 8631 events per second, compared with 9702 for the unaugmented Floodlight controller, an overhead of roughly 11% ((9702 − 8631)/9702 ≈ 0.110). Of course, our prototype is not optimized for controller traffic, and we believe this can be improved in the future.

6 Conclusions and Future Work

Live ensemble migration can be a powerful management tool for network operators. Leveraging recent advances in SDN, with LIME, we show how to migrate an entire ensemble in a way that both supports arbitrary controller application software and efficiently orchestrates the migration process. Our evaluation with our initial prototype shows the promise that ensemble migration can be an integral tool rather than a rare maintenance event.

As future work, we plan to explore algorithms that make use of the general framework for orchestrating a migration. This includes determining the optimal grouping of components, the best migration approach to use for each group, the migration order across groups, and optimizing migration based on appropriate network measurements. In addition, we plan to integrate and investigate technologies like redundancy elimination to reduce the overhead of copying the state for multiple related VMs. Finally, we plan to extend LIME for other management needs, such as cloning ensembles for the purposes of failover or load balancing.

References

[1] cbench OpenFlow controller benchmark. See http://www.openflow.org/wk/index.php/Oflops.

[2] Open Networking Foundation. https://www.opennetworking.org/.

[3] OpenFlow. http://www.openflow.org.

[4] NTT, in collaboration with Nicira Networks, succeeds in remote datacenter live migration, August 2011. http://www.ntt.co.jp/news2011/1108e/110802a.html.

[5] S. Al-Kiswany, D. Subhraveti, P. Sarkar, and M. Ripeanu. VMFlock: Virtual machine co-migration for the cloud. In High Performance Distributed Computing, 2011.

[6] H. Ballani, P. Costa, T. Karagiannis, and A. Rowstron. Towards predictable datacenter networks. In ACM SIGCOMM, August 2011.

[7] P. Bodik, I. Menache, M. Chowdhury, P. Mani, D. A. Maltz, and I. Stoica. Surviving failures in bandwidth-constrained datacenters. In ACM SIGCOMM, August 2012.

[8] R. Bradford, E. Kotsovinos, A. Feldmann, and H. Schioberg. Live wide-area migration of virtual machines including local persistent state. In Virtual Execution Environments, pages 169–179, 2007.

[9] Cisco and VMware. Virtual machine mobility with VMware VMotion and Cisco data center interconnect technologies, 2009.

[10] B. Cully, G. Lefebvre, D. Meyer, M. Feeley, N. Hutchinson, and A. Warfield. Remus: High availability via asynchronous virtual machine replication. In USENIX Networked Systems Design and Implementation (NSDI), April 2008.

[11] U. Deshpande, X. Wang, and K. Gopalan. Live gang migration of virtual machines. In High Performance Distributed Computing, 2011.

[12] Floodlight OpenFlow Controller. http://floodlight.openflowhub.org/.

[13] A. Greenberg, N. Jain, S. Kandula, C. Kim, P. Lahiri, D. Maltz, P. Patel, and S. Sengupta. VL2: A scalable and flexible data center network. In ACM SIGCOMM, 2009.

[14] C. Guo, G. Lu, D. Li, H. Wu, X. Zhang, Y. Shi, C. Tian, Y. Zhang, and S. Lu. BCube: A high performance, server-centric network architecture for modular data centers. In ACM SIGCOMM, 2009.

[15] P. Kazemian, G. Varghese, and N. McKeown. Header space analysis: Static checking for networks. In USENIX Networked Systems Design and Implementation (NSDI), 2012.

[16] S. Lo, M. Ammar, and E. Zegura. Design and analysis of schedules for virtual network migration. Technical Report GT-CS-12-05, Georgia Tech, 2012.

[17] J. Markoff. Open Networking Foundation pursues new standards. The New York Times, March 2011. See http://nyti.ms/eK3CCK.

[18] N. McKeown, T. Anderson, H. Balakrishnan, G. Parulkar, L. Peterson, J. Rexford, S. Shenker, and J. Turner. OpenFlow: Enabling innovation in campus networks. SIGCOMM Computer Communication Review, 38(2), 2008.

[19] Nicira. Networking in the era of virtualization. 2012.

[20] M. Reitblatt, N. Foster, J. Rexford, C. Schlesinger, and D. Walker. Abstractions for network update. In ACM SIGCOMM, August 2012.

[21] Y. Wang, E. Keller, B. Biskeborn, J. van der Merwe, and J. Rexford. Virtual routers on the move: Live router migration as a network-management primitive. In ACM SIGCOMM, 2008.

[22] K. C. Webb, A. C. Snoeren, and K. Yocum. Topology switching for data center networks. In Hot-ICE Workshop, March 2011.

[23] D. Williams, H. Jamjoom, and H. Weatherspoon. The Xen-Blanket: Virtualize once, run everywhere. In ACM European Conference on Computer Systems (EuroSys), April 2012.