Datasets for Online Controlled Experiments - OpenReview

←

→

Page content transcription

If your browser does not render page correctly, please read the page content below

Datasets for Online Controlled Experiments

C. H. Bryan Liu ?† Ângelo Cardoso † Paul Couturier ? Emma J. McCoy ?

? †

Imperial College London & ASOS.com, UK

bryan.liu12@imperial.ac.uk

Abstract

1 Online Controlled Experiments (OCE) are the gold standard to measure impact and

2 guide decisions for digital products and services. Despite many methodological

3 advances in this area, the scarcity of public datasets and the lack of a systematic

4 review and categorization hinders its development. We present the first survey and

5 taxonomy for OCE datasets, which highlight the lack of a public dataset to support

6 the design and running of experiments with adaptive stopping, an increasingly pop-

7 ular approach to enable quickly deploying improvements or rolling back degrading

8 changes. We release the first such dataset, containing daily checkpoints of decision

9 metrics from multiple, real experiments run on a global e-commerce platform. The

10 dataset design is guided by a broader discussion on data requirements for common

11 statistical tests used in digital experimentation. We demonstrate how to use the

12 dataset in the adaptive stopping scenario using sequential and Bayesian hypothesis

13 tests and learn the relevant parameters for each approach.

14 1 Introduction

15 Online controlled experiments (OCEs) have become popular among digital technology organisations

16 in measuring the impact of their products and services, and guiding business decisions [50, 53, 75].

17 Large tech companies including Google [41], Linkedin [84], and Microsoft [49] reported running

18 thousands of experiments on any given day, and there are multiple companies established solely to

19 manage OCEs for other businesses [10, 45]. It is also considered a key step in the machine learning

20 development lifecycle [8, 89].

21 OCEs are essentially randomized controlled trials run on the Web. The simplest example, commonly

22 known as an A/B test, splits a group of entities (e.g. users to a website) randomly into two groups,

23 where one group is exposed to some treatment (e.g. showing a "free delivery" banner on the website)

24 while the other act as the control (e.g. seeing the original website without any mention of free

25 delivery). We calculate the decision metric(s) (e.g. proportion of users who bought something)

26 based on responses from both groups, and compare the metrics using a statistical test to draw causal

27 statements about the treatment.

28 The ability to run experiments on the Web allows one to interact with a large number of subjects

29 within a short time frame and collect a large number of responses. This, together with the scale of

30 experimentation carried out by tech organizations, should lead to a wealth of datasets describing

31 the result of an experiment. However, there are not many publicly available OCE datasets, and we

32 believe they were never systematically reviewed nor categorized. This is in contrast to the machine

33 learning field, which also enjoyed its application boom in the past decade yet already has established

34 archives and detailed categorizations for its datasets [28, 77].

35 We argue the lack of relevant datasets arising from real experiments is hindering further development

36 of OCE methods (e.g. new statistical tests, bias correction, and variance reduction methods). Many

37 statistical tests proposed relied on simulated data that impose restrictive distributional assumptions

Submitted to the 35th Conference on Neural Information Processing Systems (NeurIPS 2021) Track on Datasets

and Benchmarks. Do not distribute.38 and thus may not be representative of the real-world scenario. Moreover, it may be difficult to

39 understand how two methods differ to each other and assess their relative strength/weakness without

40 a common dataset to compare on.

41 To address this problem, we present the first ever survey and taxonomy for OCE datasets. Our

42 survey identified 12 datasets, including standalone experiment archives, accompanying datasets from

43 scholarly works, and demo datasets from online courses on the design and analysis of experiments.

44 We also categorize these datasets based on dimensions such as the number of experiments each

45 dataset contains, how granular each data point is time-wise and subject-wise, and whether it include

46 results from real experiment(s).

47 The taxonomy enables us to engage in a discussion on the data requirements for an experiment by

48 systematically mapping out which data dimension is required for which statistical test and/or learning

49 the hyperparameter(s) associated with the test. We also recognize that in practice data are often used

50 for purposes beyond what it is originally collected for [47]. Hence, we posit the mapping is equally

51 useful in allowing one to understand the options they have when choosing statistical tests given the

52 format of data they possess. Together with the survey, the taxonomy helps us to identify what types

53 of dataset are required for commonly used statistical tests, yet are missing from the public domain.

54 One of the gaps the survey and taxonomy identify is datasets that can support the design and running

55 of experiments with adaptive stopping (a.k.a. continuous monitoring / optional stopping), which we

56 motivate their use below. Traditionally, experimenters analyze experiments using Null Hypothesis

57 Statistical Tests (NHST, e.g. a Student’s t-test). These tests require one to calculate and commit to a

58 required sample size based on some expected treatment effect size, all prior to starting the experiment.

59 Making extra decisions during the experiment, be it stopping the experiment early due to seeing

60 favorable results, or extending the experiment as it teeters “on the edge of statistical significance” [61],

61 is discouraged as they risk one having more false discoveries than intended [37, 60].

62 Clearly the restrictions above are incompatible with modern decision-making processes. Businesses

63 operating online are incentivized to deploy any beneficial and roll back any damaging changes

64 as quickly as possible. Using the “free delivery” banner example above, the business may have

65 calculated that they require four weeks to observe enough users based on an expected 1% change

66 in the decision metric. If the experiment shows, two weeks in, that the banner is leading to a 2%

67 improvement, it will be unwise not to deploy the banner to all users simply due to the need to run the

68 experiment for another two weeks. Likewise, if the banner is shown leading to a 2% loss, it makes

69 every sense to immediately terminate the experiment and roll back the banner to stem further losses.

70 As a result, more experimenters are moving away from NHST and adopting adaptive stopping

71 techniques. Experiments with adaptive stopping allows one to decide whether to stop an experiment

72 at any time in between based on the sample responses observed so far without worrying much on

73 false positive/discovery rate control. To encourage further development in this area, both in methods

74 and data, we release the ASOS Digital Experiments Dataset, which contains daily checkpoints of

75 decision metrics from multiple, real OCEs run on the global online fashion retail platform.

76 The dataset design is guided by the requirements identified by the mapping between the taxonomy

77 and statistical tests, and to the best of our knowledge, is the first public dataset that can support the

78 end-to-end design and running of online experiments with adaptive stopping. We demonstrate it can

79 indeed do so by (1) running a sequential test and a Bayesian hypothesis test on all the experiments in

80 the dataset, and (2) estimating the value of hyperparameters associated with the tests. While the notion

81 of ground-truth does not exist in real OCEs, we show the dataset can also act as a quasi-benchmark

82 for statistical tests by comparing results from the tests above with that of a t-test.

83 To summarize, our contributions are:

84 1. (Sections 2 & 3) We create, to the best of our knowledge, the first ever taxonomy on online

85 controlled experiment datasets and apply it to datasets that are publicly available;

86 2. (Section 4) We map the relationship between the taxonomy to statistical tests commonly used in

87 experiments by identifying the minimally sufficient set of statistics and dimensions required in

88 each test. The mapping, which also applies to offline and non-randomized controlled experiments,

89 enables experimenters to quickly identify the data collection requirements for their experiment

90 design (and conversely the test options available given the data availability); and

91 3. (Section 5) We make available, to the best of our knowledge, the first real, multi-experiment time

92 series dataset, enabling the design and running of experimentation with adaptive stopping.

293 2 A Taxonomy for Online Controlled Experiment Datasets

94 We begin by presenting a taxonomy on OCE datasets, which is necessary to characterize and

95 understand the results of a survey. To the best of our knowledge, there are no surveys nor taxonomies

96 specifically on this topic prior to this work. While there is a large volume of work concerning the

97 categorization of datasets in machine learning [28, 77], of research work in the online randomized

98 controlled experiment methods [3, 4, 32, 68], and of general experiment design [43, 52], our search

99 on Google Scholar and Semantic Scholar using combinations of the keywords “online controlled

100 experiment”/“A/B test”, “dataset”, and “taxonomy”/“categorization” yields no relevant results.

101 The taxonomy focuses on the following four main dimensions:

102 Experiment count A dataset can contain the data collected from a single experiment or multiple

103 experiments. Results from a single experiment is useful for demonstrating how a test works, though

104 any learning should ideally involve multiple experiments. Two closely related but relatively minor

105 dimensions are the variant count (number of control/treatment groups in the experiment) and the

106 metric count (number of performance metrics the experiment is tracking). Having an experiment

107 with multiple variants and metrics enable the demonstration of methods such as false discovery rate

108 control procedures [7] and learning the correlation structure within an experiment.

109 Response granularity Depending on the experiment analysis requirements and constraints imposed

110 by the online experimentation platform, the dataset may contain data aggregated to various levels.

111 Consider the “free delivery” banner example in Section 1, where the website users are randomly

112 allocated to the treatment (showing the banner) and control (not showing the banner) groups, with the

113 goal to understand whether the banner changes the proportion of users who bought something. In this

114 case each individual user is considered a randomization unit [50].

115 A dataset may contain, for each experiment, only summary statistics on the group level, e.g. the

116 proportion of users who have bought something in the control and treatment groups respectively.

117 It can also record one response per randomization unit, with each row containing the user ID and

118 whether the user bought something. The more detailed activity logs at a sub-randomization unit level

119 can also have each row containing information about a particular page view from a particular user.

120 Time granularity An experiment can last anytime between a week to many months [50], which

121 provides many possibilities in recording the result. A dataset can opt to record the overall result

122 only, showing the end state of an experiment. It may or may not come with a timestamp for

123 each randomization unit or experiment if there are multiple instances of them. It can also record

124 intermediate checkpoints for the decision metrics, ideally at regular intervals such as daily or hourly.

125 These checkpoints can either be a snapshot of the interval (recording activities between time t

126 and t + 1, time t + 1 and t + 2, etc.) or cumulative from the start of the experiment (recording

127 activities between time 0 and 1, time 0 and 2, etc.).

128 Syntheticity A dataset can record data generated from a real process. It can also be synthetic—

129 generated via simulations with distributional assumptions applied. A dataset can also be semi-synthetic

130 if it is generated from a real-life process and subsequently augmented with synthetic data.

131 Note we can also describe datasets arising from any experiments (including offline and non-

132 randomized controlled experiments) using these four dimensions. We will discuss in Section 4

133 how these dimensions map to common statistical tests used in online experimentation.

134 In addition, we also record the application domain, target demographics, and the temporal coverage

135 of the experiment(s) featured in a dataset. In an age when data are often reused, it is crucial for

136 one to understand the underlying context, and that learnings from a dataset created under a certain

137 context may not translate to another context. We also see the surfacing of such context as a way to

138 promote considerations in fairness and transparency for experimenters as more experiment datasets

139 become available [44]. For example, having target demographics information on a meta-level helps

140 experimenters to identify who were involved, or perhaps more importantly, who were not involved in

141 experiments and could be adversely impacted by a treatment that is effectively untested.

142 Finally, two datasets can also differ in their medium of documentation and the presence/absence

143 of a data management / long-term preservation plan. The latter includes the hosting location, the

144 presence/absence of a DOI, and the type of license. We record these attributes for the datasets

145 surveyed below for completeness.

3146 3 Public Online Controlled Experiment Datasets

147 Here we discuss our approach to produce the first ever survey on OCE datasets and present its results.

148 The survey is compiled via two search directions, which we describe below. For both directions, we

149 conduct a first round search in May 2021, with a follow up round in August 2021 to ensure we have

150 the most updated results.

151 We first search on the vanilla Google search engine using the keywords “Online controlled experiment

152 "dataset"”, “A/B test "dataset"”, and “Multivariate test "dataset"”. For each keyword, we inspect the

153 first 10 pages of the search result (top 100 results) for scholarly articles, web pages, blog posts, and

154 documents that may host and/or describe a publicly available OCE dataset. The search term “dataset”

155 is in double quotes to limit the search results to those with explicit mention of dataset(s). We also

156 search on specialist data search engines/hosts, namely on Google Dataset Search (GDS) and Kaggle,

157 using the keywords “Online controlled experiment(s)” and “A/B test(s)”. We inspect the metadata

158 and description for all the results returned (except for GDS, where we inspect the first 100 results for

159 “A/B test(s)”) for relevant datasets as defined below.1

160 A dataset must record the result arising from a randomized controlled experiment run online to be

161 included in the survey. The criteria excludes experimental data collected from offline experiments, e.g.

162 those in agriculture [83], medicine [27], and economics [29]. It also excludes datasets used to perform

163 quasi-experiments and observational studies, e.g. the LaLonde dataset used in econometrics [19] and

164 datasets constructed for uplift modeling tasks [25, 40].2

165 The result is presented in Table 1. We place the 12 OCE datasets identified in this exercise along the

166 four taxonomy dimensions defined in Section 2 and record the additional features. These datasets

167 include two standalone archives for online media and education experiments respectively [56, 70],

168 and three accompanying datasets for peer-reviewed research articles [23, 74, 86]. There are also tens

169 of Kaggle datasets, blog posts, and code repositories that describes and/or duplicates one of the five

170 example datasets used in four different massive open online courses on online controlled experiment

171 design and analysis [9, 11, 38, 76]. Finally, we identify two standalone datasets hosted on Kaggle

172 with relatively light documentation [31, 48].

173 From the table we observe a number of gaps in OCE dataset availability, the most obvious one being

174 the lack of datasets that record responses at a sub-randomization unit level. In the sections below, we

175 will identify more of these gaps and discuss their implications to OCE analysis.

176 4 Matching Dataset Taxonomy with Statistical Tests

177 Specifying the data requirements (or structure) and performing statistical tests are perhaps two of the

178 most common tasks carried out by data scientists. However, the link between the two processes is

179 seldom mapped out explicitly. It is all too common to consider from scratch the question “I need

180 to run this statistical test, how should I format my dataset?” (or more controversially, “I have this

181 dataset, what statistical tests can I run?” [47]) for every new project/application, despite the list of

182 possible dataset dimensions and statistical tests remaining largely the same.

183 We aim to speed up the process above by describing what summary statistics are required to perform

184 common statistical tests in OCE, and link the statistics back to the taxonomy dimensions defined in

185 Section 2. The exercise is similar to identifying the sufficient statistic(s) for a statistical model [33],

186 though the identification is done for the encapsulating statistical inference procedure, with a practical

187 focus on data dimension requirements. We do so by stating the formula used to calculate the

188 corresponding effect sizes and test statistics and observe the summary statistics required in common.

189 The general approach enables one to also apply the resultant mapping to any experiments that involves

1

Searching for the keyword “Online controlled experiment” on GDS and Kaggle returned 42 and 7 results

respectively, and that for “A/B test” returned “100+” and 286 results respectively. Curiously, replacing “experi-

ment” and “test” in the keywords with their plural form changes the number of results, with the former returning

6 and 10 results on GDS and Kaggle respectively, and the latter returning “100+” and 303 results respectively.

2

Uplift modeling (UM) tasks for online applications often start with an OCE [66] and thus we can consider

UM datasets as OCE datasets with extra randomization unit level features. The nature of the tasks are different

though: OCEs concern validating the average treatment effect across the population using a statistical test,

whereas UM concerns modeling the conditional average treatment effect for each individual user, making it

more a general causal inference task that is outside the scope of this survey.

4Experiment Count

Response Data management /

Dataset Name Reference(s) - Variant / Metric Time Granularity Syntheticity Application Documentation

Granularity long-term preservation plan

Count

Host: Open Science Framework

Multiple (32,487) Overall result only Media & Ads Peer reviewed

Upworthy Research Archive [56] Group Real DOI: 3 (see [56])

- 2-14 / 2 (C) Timestamp per expt. Copy/Creative data article

Licence: CC BY 4.0

ASSISTments Dataset from Multiple Multiple (22) Overall result only Education Peer reviewed

[70] Rand. Unit Real Host: Author website

Randomized Controlled Experiments - 2 / 2 (BC) 7 timestamp Teaching model data article

Accompanying

Host: University library

A/B Testing Web Analytics Data Single Overall result only Education dataset to

[86] Group Real DOI: 3 (see [86])

(From [87]) - 5 / 2 (C) X timestamp UX/UI change peer-reviewed

Licence: CC BY-SA 4.0

research article

Dataset of two experiments of the

Host: Journal website

application of gamified peer assessment Multiple (2) Overall result only Education Peer reviewed

[74] Rand. Unit Real DOI: 3 (see [74])

model into online learning environment - 3+2 / 3+3 (R+C) 7 timestamp Teaching model data article

Licence: CC BY 4.0

MeuTutor (From [73])

The Effects of Change Decomposition on Host: University library

Single Overall result only Tech Peer reviewed

Code Review - A Controlled Experiment - [23] Rand. Unit Real DOI: 3 (see [23])

- 2 / multi (CLR) 7 timestamp Dev. Process data article

Online appendix (From [24]) License: CC BY-SA 4.0

Udacity Free Trial Screener Experiment Blog posts & Host: Kaggle / GitHub (multiple)

See e.g. Single Daily checkpoint Education

(From Udacity A/B Testing Course - Group Real Kaggle notebooks DOI: Unknown

[62, 71, 79] - 2 / 4 (C) Snapshot UX/UI change

Final Project [38]) e.g. [57, 69, 88] Licence: Unknown

"Analyse A/B Test Results" Dataset Blog posts & Host: Kaggle / GitHub (multiple)

See e.g. Single Overall result only E-commerce

(From Udacity Online Data Analyst Rand. Unit Unknown Kaggle notebooks DOI: Unknown

[2, 18, 64] - 2 / 1 (B) Timestamp per RU UX/UI change

5

Course - Project 3 [76]) e.g. [15, 54, 67] Licence: Unknown

Host: Kaggle / Course website

Mobile Games A/B Testing with Cookie See e.g. Single Overall result only Gaming

Rand. Unit Real Kaggle notebook DOI: Unknown

Cats (DataCamp project [9]) [30, 72, 85] - 2 / 3 (BC) 7 timestamp Design change

Licence: Unknown

Host: Course website

Experiment Dataset (From DataCamp Single Overall result only Tech Course notes

[12] Rand. Unit Synthetic DOI: Unknown

A/B Testing in R Course [11]) - 2 / 1 (B) Timestamp per RU UX/UI Change Blog posts

Licence: Unknown

Data Visualization Website - April 2018 Host: Course website

Single Overall result only Tech

(From DataCamp A/B Testing [13] Rand. Unit Synthetic Course notes DOI: Unknown

- 2 / 4 (BR) Timestamp per RU UX/UI Change

in R Course [11]) Licence: Unknown

Host: Kaggle

Single Overall result only E-commerce

Grocery Website Data for AB Test [48] Rand. Unit Unknown Kaggle notebook DOI: Unknown

- 2 / 1 (B) 7 timestamp UX/UI change

Licence: Unknown

Host: Kaggle

Single Overall result only Media & Ads

Ad A/B Testing (aka SmartAd AB Data) [31] Rand. Unit Unknown Kaggle notebook DOI: N/A

- 2 / 2 (B) Timestamp per RU Display ads

Licence: CC BY-SA 3.0

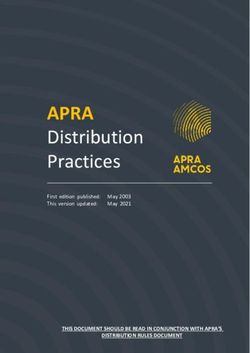

Table 1: Results from the first ever survey of OCE datasets. The 12 datasets identified are placed on the four taxonomy dimensions defined in Section 2 together

with the additional attributes recorded. In the Experiment Count column, a second-line value (corresponding to Variant/Metric Count) of “x / y (BCLR)” means

the dataset features x variants and y metrics, with the metrics based on Binary, Count, Likert-scale, and Real-valued responses. In the Response Granularity and

Time Granlarity columns, Randomization Unit is abbreviated as RU or Rand. Unit. Note that a large proportion of resources are accessed via links to non-scholarly

articles—blog plots, Kaggle dataset pages, and GitHub repositories. These resources may not persist over time.190 a two-sample statistical test, including offline experiments and experiments without a randomized

191 control. For brevity, we will refrain from discussing the full model assumptions as well as their

192 applicability. Instead we point readers to the relevant work in the literature.

193 4.1 Effect size and Welch’s t-test

194 We consider a two-sample setting and let X1 , · · · , XN and Y1 , · · · , YM be i.i.d. samples from

195 the distributions FX (·) and FY (·) respectively. We assume the first two moments exist for the

2

196 distributions FX and FY , with their mean and variance denoted (µX , σX ) and (µY , σY2 ) respectively.

197 We also denote the sample mean and variance of the two samples (X̄, sX ) and (Ȳ , s2Y ) respectively.

2

198 Often we are interested in the difference between the mean of the two distributions ∆ = µY − µX ,

199 commonly known as the effect size (of the difference in mean) or the average treatment effect. A

200 standardized effect size enables us to compare the difference across many experiments and is thus

201 useful in meta-analyses. One commonly used effect size is Cohen’s d, defined as the difference in

202 sample means divided by the pooled sample standard deviation [17]:

s

(N − 1)s2X + (M − 1)s2Y

d = Ȳ − X̄ . (1)

N +M −2

203 We are also interested in whether the samples carry sufficient evidence to indicate ∆ is different to a

204 prescribed value θ0 . This can be done via a hypothesis test with H0 : ∆ = θ0 and H1 : ∆ 6= θ0 .3 One

205 of the most common statistical test used in online controlled experiments is the Welch’s t-test [81], in

206 which we calculate the test statistic t as follow:

r 2

sX s2

+ Y .

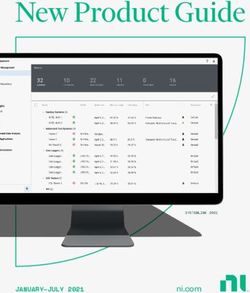

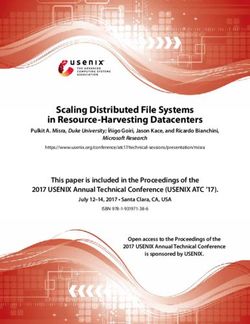

t = Ȳ − X̄ (2)

N M

207 We observe that in order to calculate the two stated quantities above, we require six quantities: two

208 means (X̄, Ȳ ), two (sample) variances (s2X , s2Y ), and two counts (N , M ). We call these quantities

209 Dimension Zero (D0) quantities as they are the bare minimum required to run a statistical test—these

210 quantities will be expanded along the taxonomy dimensions defined in Section 2.

211 Cluster randomization / dependent data The sample variance estimates (s2X , s2Y ) may be biased

212 in the case where cluster randomization is involved. Using again the “free delivery” banner example,

213 instead of randomly assigning each individual user to the control and treatment groups, the business

214 may randomly assign postcodes to the two groups, with all users from the same postcode getting the

215 same version of the website. In this case user responses may become correlated, which violates the

216 independence assumptions in statistical tests. Common workarounds including the use of bootstrap [6]

217 and the Delta method [22] generally require access to sub-randomization unit responses.

218 4.2 Experiments with adaptive stopping

219 As discussed in Section 1, experiments with adaptive stopping are getting increasingly popular

220 among the OCE community. Here we motivate the data requirement for statistical tests in this

221 domain by looking at the quantities required to calculate the test statistics for a Mixture Sequential

222 Probability Ratio Test (mSPRT) [45] and a Bayesian hypothesis test using Bayes factor [21], two

223 popular approaches in online experimentation. There are many other tests that supports adaptive

224 stopping [59, 78], though the data requirements, in terms of the dimensions defined in Section 2,

225 should be largely identical.

226 We first observe running a mSPRT with a normal mixing distribution H = N (θ0 , τ 2 ) involves

227 calculating the following test statistic upon observing the first n Xi and Yj (see Eq. (11) in [45]):

s

2 + σ2

n2 τ 2 (Ȳn − X̄n − θ0 )2

H,θ0 σX Y

Λ̃n = 2 + σ 2 + nτ 2 exp 2(σ 2 + σ 2 )(σ 2 + σ 2 + nτ 2 ) , (3)

σX Y X Y X Y

Pn Pn

228 where X̄n = n−1 i=1 Xi and Ȳn = n−1 j=1 Yj represent the sample mean of the Xs and Y s up

229 to sample n respectively, and τ 2 is a hyperparameter to be specified or learnt from data.

3

There are other ways to specify the hypotheses such as that in superiority and non-inferiority tests [34],

though they are unlikely to change the data requirement as long as it remains anchored on θ0 .

6230 For Bayesian hypothesis tests using Bayes factor, we calculate the (square root of) Wald test statistic

231 upon seeing the first n Xi and first m Yj [20, 21]:

Ȳm − X̄n Ȳm − X̄n 1

Wn,m = r =q 2 q1 , (4)

2 2 σX σ2

σX σY

+ mY / n1 + 1 1

n + m

n + m n m

√

| {z } | {z }

δn,m En,m

232 where δn,m and En,m are the effect size (standardized by the pooled variance) and the effective sample

233 size of the test respectively. In OCE it is common to appeal to the central limit theorem and assume a

234 normal likelihood for the effect size, i.e. δn,m ∼ N (µ, 1/En,m ). We then compare the hypotheses

235 H0 : µ = θ0 and H1 : µ ∼ N (θ0 , V 2 ) by calculating the Bayes factor [46]:

f (δn,m |H1 ) φ(δn,m ; θ0 , V 2 + 1/En,m )

BFn,m = = , (5)

f (δn,m |H0 ) φ(δn,m ; θ0 , 1/En,m )

236 where φ(· ; a, b) is the PDF of a normal distribution with mean a and variance b, and V 2 is a

237 hyperparameter that we specify or learn from data.

238 During an experiment with adaptive stopping, we calculate the test statistics stated above many times

239 for different n and m. This means a dataset can only support the running of such experiments if it

240 contains intermediate checkpoints for the counts (n, m) and the means (X̄n , Ȳm ), ideally cumulative

241 from the start of the experiment. Often one also requires the variances at the same time points (see

242 below). The only exception to the dimensional requirement above is the case where the dataset

243 contains responses at a randomization unit or finer level of granularity, and despite recording the

244 overall results only, has a timestamp per randomization unit. Under this special case, we will still be

245 able to construct the cumulative means (X̄n , Ȳm ) for all relevant values of n and m by ordering the

246 randomization units by their associated timestamps.

247 Learning the effect size distribution (hyper)parameters The two tests introduced above feature

248 some hyperparameters (τ 2 and V 2 ) that have to be specified or learnt from data. These parameters

249 characterizes the prior belief of the effect size distribution, which will be the most effective if it

250 “matches the distribution of true effects across the experiments a user runs” [45]. Common parameter

251 estimation procedures [1, 5, 39] require results from multiple related experiments.

252 Estimating the response variance In the equations above, the response variance of the two

2

253 samples σX and σY2 are assumed to be known. In practice we often use the plug-in empirical

254 estimates (sX )n and (s2Y )m —the sample variances for the first n Xi and first m Yj respectively, and

2

255 thus the data dimensional requirement is identical to that of the counts and means as discussed above.

256 In the case where the plug-in estimate may be biased due to dependent data, we will also require a

257 sub-randomization unit response granularity (see Section 4.1).

258 4.3 Non-parametric tests

259 We also briefly discuss the data requirements for non-parametric tests, where we do not impose

260 any distributional assumptions on the responses but compare the hypotheses H0 : FX ≡ FY and

261 H1 : FX 6= FY , where we recall FX and FY are the distributions of the two samples.

262 One of the most commonly used (frequentist) non-parametric test in OCE, the Mann-Whitney

263 U -test [55], calculates the following test statistic:

1 if Y < X,

N X M

(

X

U= S(Xi , Yj ), where S(X, Y ) = 1/2 if Y = X, (6)

i=1 j=1 0 if Y > X.

264 While a rank-based method is available for large N and M , both methods require the knowledge of

265 all the Xi and Yj . Such requirement is the same for other non-parametric tests, e.g. the Wilcoxon

266 signed-rank [82], Kruskal-Wallis [51], and Kolmogorov–Smirnov tests [26]. This suggests a dataset

267 can only support a non-parametric test if it at least provides responses at a randomization unit level.

268 We conclude by showing how we can combine the individual data requirements above to obtain the

269 requirement to design and/or run experiments for more complicated statistical tests. This is possible

270 due to the orthogonal design of the taxonomy dimensions. Consider an experiment with adaptive

7Metric 1 Metric 2 Metric 3 Metric 4

20 20 20 20

10 10 10 10

0 0 0 0

0.0 0.5 1.0 0.0 0.5 1.0 0.0 0.5 1.0 0.0 0.5 1.0

Figure 1: Distribution of p-values attained by the 99 OCEs in the ASOS Digital Experiment Dataset

using Welch’s t-tests, split by decision metrics. The leftmost bar in each histogram represents

experiments with p < 0.05. Here we treat OCEs with multiple variants as multiple independent OCEs.

271 stopping using Bayesian non-parametric tests (e.g. with a Pólya Tree prior [16, 42]). It involves a

272 non-parametric test and hence require responses at a randomization unit level. It computes multiple

273 Bayes factors for adaptive stopping and hence requires intermediate checkpoints for the responses (or

274 a timestamp for each randomization unit). Finally, to learn the hyperparameters of the Pólya Tree

275 prior we require multiple related experiments. The substantial data requirement along 3+ dimensions

276 perhaps explains the lack of relevant OCE datasets and the tendency for experimenters to use simpler

277 statistical tests for the day-to-day design and/or running of OCEs.

278 5 A Novel Dataset for Experiments with Adaptive Stopping

279 We finally introduce the ASOS Digital Experiments Dataset, which we believe is the first public

280 dataset that supports the end-to-end design and running of OCEs with adaptive stopping. We motivate

281 why this is the case, provide a light description of the dataset (and a link to the more detailed

282 accompanying datasheet),5 and showcase the capabilities of the dataset via a series of experiments.

283 We also discuss the ethical implications of releasing this dataset.

284 Recall from Section 4.2 that in order to support the end-to-end design and running of experiments

285 with adaptive stopping, we require a dataset that (1) includes multiple related experiments; (2) is

286 real, so that any parameters learnt is reflective of the real-world scenario; and either (3a) contains

287 intermediate checkpoints for the summary statistics during each experiment (i.e. time-granular), or

288 (3b) contains responses at a randomization unit granularity with a timestamp for each randomization

289 unit (i.e. response-granular with timestamps).

290 None of the datasets surveyed in Section 3 meet all three criteria. While the Upworthy [56],

291 ASSISTments [70], and MeuTutor [74] datasets meet the first two criteria, they all fail to meet the

292 third.4 The Udacity Free Trial Screener Experiment dataset meets the last two criteria by having

293 results from a real experiment with daily snapshots of the decision metrics (and hence time-granular),

294 which supports the running of an experiment with adaptive stopping. However, the dataset only

295 contains a single experiment, which is not helpful for learning the effect size distribution (the design).

296 The ASOS Digital Experiments Dataset contain results from OCEs run by a business unit within

297 ASOS.com, a global online fashion retail platform. In terms of the taxonomy defined in Section 2,

298 the dataset contain multiple (78), real experiments, with two to five variants in each experiment and

299 four decision metrics based on binary, count and real-valued responses. The results are aggregated on

300 a group level, with daily or 12-hourly checkpoints of the metric values cumulative from the start of

301 the experiment. The dataset design meets all the three criteria stated above, and hence differentiates

302 itself from other public datasets.

303 We provide readers with an accompanying datasheet (based on [36]) that provides further information

304 about the dataset, and host the dataset on Open Science Framework to ensure it is easily discoverable

305 and can be preserved long-term.5 It is worth noting that the dataset is released with the intent to

306 support development in the statistical methods required to run OCEs. The experiment results shown

307 in the dataset is not representative of ASOS.com’s overall business operations, product development,

308 or experimentation program operations, and no conclusion of such should be drawn from this dataset.

4

All three report the overall results only and hence are not time-granular. The Upworthy dataset reports

group-level statistics and hence is not response-granular. The ASSISTments and MeuTutor datasets are response-

granular but they lack the timestamp to order the samples.

5

Link to dataset and accompanying datasheet: [Private link, reviewers please refer to the OpenReview portal]

81.0 1.0

mSPRT p-value

Expt.-Variant ID

P(H0|Data)

08bcc2-1

0.5 0.5 591c2c-1

a4386f-1

bac0d3-1

0.0 0.0 c56288-1

0.0 0.2 0.4 0.6 0.8 1.0 0.0 0.2 0.4 0.6 0.8 1.0

Figure 2: Change in (left) p-values in a mixed Sequential Probability Ratio Test (τ 2 = 5.92e-06)

and (right) posterior belief in the null hypothesis (π(H0 |data)) in a Bayesian hypothesis test (V 2 =

5.93e-06, π(H0 ) = 0.75) during the experiment for five experiments selected at random. The

experiment duration (x-axis) is normalized by its overall runtime. Only results for Metric 4 is shown.

309 5.1 Potential use cases

310 Meta-analyses The multi-experiment nature of the dataset enables one to perform meta-analyses.

311 A simple example is to characterize the distribution of p-values (under a Welch’s t-test) across all

312 experiments (see Figure 1). We observe there are roughly a quarter of experiments in this dataset

313 attaining p < 0.05, and attribute this to the fact that what we experiment in OCEs are often guided by

314 what domain experts think may have an impact. Having that said, we invite external validation on

315 whether there is evidence for data dredging using e.g. [58].

316 Design and running of experiments with adaptive stopping We then demonstrate the dataset

317 can indeed support OCEs with adaptive stopping by performing a mixed Sequential Probability Ratio

318 test (mSPRT) and a Bayesian hypothesis test via Bayes factor for each experiment and metric. This

319 require learning the hyperparameters τ 2 and V 2 . We learn, for each metric, a naïve estimate for

320 V 2 by collating the δn,m (see (4)) at the end of each experiment and taking their sample variance.

321 This yields the estimates 1.30e-05, 1.07e-05, 6.49e-06, and 5.93e-06 for the four metrics

322 respectively. For τ 2 , we learn near-identical naïve estimates by collating the value of Cohen’s d

323 (see (1)) instead. However, as τ 2 captures the spread of unstandardized effect sizes, we specify in each

324 test τ 2 = d · (s2X )n , where (s2X )n is the sample variance of all responses up to the nth observation in

325 that particular experiment. The Bayesian tests also require a prior belief in the null hypothesis being

326 true (π(H0 ))—we set it to 0.75 based on what we observed in the t-tests above.

327 We then calculate the p-value in mSPRT and the posterior belief in the null hypothesis (π(H0 |data))

328 in Bayesian test for each experiment and metric at each daily/12-hourly checkpoint, following the

329 procedures stated in [45] and [21, 46] respectively. We plot the results for five experiments selected

330 at random in Figure 2, which shows the p-value for a mSPRT is monotonically non-increasing, while

331 the posterior belief for a Bayesian test can fluctuate depending on the effect size observed so far.

332 A quasi-benchmark for adaptive stopping methods Real online controlled experiments, unlike

333 machine learning tasks, generally do not have a notion of ground truth. The use of quasi-ground

334 truth enables the comparison between two hyperparameter settings of the same adaptive stopping

335 method or two adaptive stopping methods. Using as quasi-ground truth the significant / not significant

336 verdict from a Welch’s t-test at the end of the experiment as the “ground truth”. We could then

337 compare this “ground truth” to the significant / not significant verdict of a mSPRT at different stages

338 of individual experiments. This yields many “confusion matrices” over different stages of individual

339 experiments where a “Type I error” corresponds to cases where a Welch’s t-test gives a not significant

340 result and a mSRPT reports a significant result, a confusion matrix for the end of each experiment

341 can be seen in Table 2. As the dataset was collected without early stopping it allows us to perform

342 sensitivity analysis and optimization on the hyperparameters of mSPRT under what can be construed

343 as “precision-recall” tradeoff of statistically significant treatments.

344 Other use cases The time series nature of this dataset enables one to detect bias (of the estimator)

345 across time, e.g. those that are caused by concept drift or feedback loops. In the context of OCEs, [14]

346 described a number of methods to detect invalid experiments over time that may be run on this dataset.

347 Moreover, being both a multi-experiment and time series dataset also enables one to learn the

348 correlation structure across experiments, decision metrics, and time [65, 80].

349 5.2 Ethical considerations

350 We finally discuss the ethical implications of releasing the dataset, touching on data protection and

351 anonymization, potential misuses, and the ethical considerations for running OCEs in general.

9t-test Significant Not significant

mSPRT Significant Not significant Significant Not significant

Metric 1 19 7 2 71

Metric 2 20 7 18 49

Metric 3 16 9 6 63

Metric 4 16 11 4 63

Table 2: Comparing the number of statistically significant / not significant results reported by a

Welch’s t-test and an mSPRT at the end of an experiment for all four metrics.

352 Data protection and anonymization The dataset records aggregated activities of hundreds of

353 thousands or millions of website users for business measurement purposes and hence it is impossible to

354 identify a particular user. Moreover, to minimize the risk of disclosing business sensitive information,

355 all experiment context is either removed or anonymized such that one should not be able to tell who

356 is in an experiment, when is it run, what treatment does it involve, and what decision metrics are used.

357 We refer readers to the accompanying datasheet5 for further details in this area.

358 Potential misuses An OCE dataset, no matter how anonymized it is, reflects the behavior of its

359 participants under a certain application domain and time. We urge potential users of this dataset

360 to exercise caution when attempting to generalize the learnings. It is important to emphasize that

361 the learnings is different from the statistical methods and processes that are demonstrated on this

362 dataset. We believe the latter are generalizable, i.e. they can be applied on other datasets with a

363 similar data dimensions regardless of the datasets’ application domain, target demographics, and

364 temporal coverage, and appeal for potential users of the dataset to focus on such.

365 One example of generalizing the learnings is the use of this dataset as a full performance benchmark.

366 As discussed above, this dataset does not have a notion of ground truth and any quasi-ground truths

367 constructed are themselves a source of bias to estimators. Thus, experiment design comparison need

368 to considered at a theoretical level [52]. Another example will be directly applying the value of

369 hyperparameter(s) obtained while training a model on this dataset to another dataset. While this may

370 work for similar application domains, the less similar they are the the less likely the hyperparameters

371 learnt will transfer introducing risk in incurring bias both on the estimator and in fairness.

372 Running OCEs in general The dataset is released with the aim to support experiments with

373 adaptive stopping, which will enable faster experimentation cycle. As we run more experiments, the

374 ethical concerns for running OCEs in general will naturally mount, as they are ultimately human

375 subjects research. We reiterate the importance of the following three principles when we design and

376 run experiments [35, 63] : respect for persons, beneficence (properly assess and balance the risks and

377 benefits), and justice (ensure participants are not exploited), and refer readers to Chapter 9 of [50]

378 and its references for further discussions in this area.

379 6 Conclusion

380 Online controlled experiments (OCE) are a powerful tool for online organizations to assess their

381 digital products and services’ impact. To safeguard future methodological development in the area, it

382 is vital to have access and systematic understanding of relevant datasets arising from real experiments.

383 We described the result of the first ever survey on publicly available OCE datasets, and provided a

384 dimensional taxonomy that links the data collection and statistical test requirements. We also released

385 the first ever dataset that can support OCEs with adaptive stopping, which design is grounded on a

386 theoretical discussion between the taxonomy and statistical tests. Via extensive experiments, we also

387 showed that the dataset is capable of addressing the identified gap in the literature.

388 Our work on surveying, categorizing, and enriching the publicly available OCE datasets is just the

389 beginning and we invite the community to join in the effort. As discussed above we have yet to see a

390 dataset that can support methods dealing with correlated data due to cluster randomization, or the

391 end-to-end design and running of experiments with adaptive stopping using Bayesian non-parametric

392 tests. We also see ample opportunity to generalize the survey to cover datasets arising from uplift

393 modeling tasks, quasi-experiments, and observational studies. Finally, we can further expand the

394 taxonomy, which already supports datasets from all experiments, with extra dimensions (e.g. number

395 of features to support stratification, control variate, and uplift modeling methods) as the area matures.

10396 Acknowledgments and Disclosure of Funding

397 CHBL is part-funded by the EPSRC CDT in Modern Statistics and Statistical Machine Learning at

398 Imperial College London and University of Oxford (StatML.IO) and ASOS.com.

399 References

400 [1] Christopher P. Adams. Empirical Bayesian estimation of treatment effects. SSRN Electronic Jour-

401 nal, 2018. doi: 10.2139/ssrn.3157819. URL https://doi.org/10.2139/ssrn.3157819.

402 [2] Sadiq Alreemi. Analyze AB test results, 2019. URL https://github.com/SadiqAlreemi/

403 Analyze-AB-Test-Results. Code repository.

404 [3] Florian Auer and Michael Felderer. Current state of research on continuous experimentation: A

405 systematic mapping study. In 2018 44th Euromicro Conference on Software Engineering and

406 Advanced Applications (SEAA), pages 335–344, 2018. doi: 10.1109/SEAA.2018.00062.

407 [4] Florian Auer, Rasmus Ros, Lukas Kaltenbrunner, Per Runeson, and Michael Felderer. Controlled

408 experimentation in continuous experimentation: Knowledge and challenges. Information and

409 Software Technology, 134:106551, 2021. ISSN 0950-5849. doi: https://doi.org/10.1016/

410 j.infsof.2021.106551. URL https://www.sciencedirect.com/science/article/pii/

411 S0950584921000367.

412 [5] Eduardo M. Azevedo, Alex Deng, José L. Montiel Olea, and E. Glen Weyl. Empirical Bayes

413 estimation of treatment effects with many A/B tests: An overview. AEA Papers and Proceedings,

414 109:43–47, May 2019. doi: 10.1257/pandp.20191003. URL https://www.aeaweb.org/

415 articles?id=10.1257/pandp.20191003.

416 [6] Eytan Bakshy and Dean Eckles. Uncertainty in online experiments with dependent data: An

417 evaluation of bootstrap methods. In Proceedings of the 19th ACM SIGKDD International

418 Conference on Knowledge Discovery and Data Mining, KDD ’13, page 1303–1311, New

419 York, NY, USA, 2013. Association for Computing Machinery. ISBN 9781450321747. doi:

420 10.1145/2487575.2488218. URL https://doi.org/10.1145/2487575.2488218.

421 [7] Yoav Benjamini and Yosef Hochberg. Controlling the false discovery rate: A practical and

422 powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B

423 (Methodological), 57(1):289–300, January 1995. doi: 10.1111/j.2517-6161.1995.tb02031.x.

424 URL https://doi.org/10.1111/j.2517-6161.1995.tb02031.x.

425 [8] Lucas Bernardi, Themistoklis Mavridis, and Pablo Estevez. 150 successful machine learning

426 models: 6 lessons learned at Booking.com. In Proceedings of the 25th ACM SIGKDD Interna-

427 tional Conference on Knowledge Discovery & Data Mining, KDD ’19, page 1743–1751, New

428 York, NY, USA, 2019. Association for Computing Machinery. ISBN 9781450362016. doi:

429 10.1145/3292500.3330744. URL https://doi.org/10.1145/3292500.3330744.

430 [9] Rasmus Bååth and Bret Romero. Mobile games A/B testing with Cookie Cats [MOOC]. URL

431 https://www.datacamp.com/projects/184.

432 [10] Will Browne and Mike Swarbrick Jones. What works in e-commerce - a meta-

433 analysis of 6700 online experiments. http://www.qubit.com/wp-content/uploads/

434 2017/12/qubit-research-meta-analysis.pdf, 2017. URL http://www.qubit.com/

435 wp-content/uploads/2017/12/qubit-research-meta-analysis.pdf. White paper.

436 [11] David Campos, Chester Ismay, and Shon Inouye. A/B testing in R | DataCamp [MOOC]. URL

437 https://www.datacamp.com/courses/ab-testing-in-r.

438 [12] David Campos, Chester Ismay, and Shon Inouye. Experiment dataset [Dataset], n.d..

439 URL https://assets.datacamp.com/production/repositories/2292/datasets/

440 52b52cb1ca28ce10f9a09689325c4d94d889a6da/experiment_data.csv.

441 [13] David Campos, Chester Ismay, and Shon Inouye. Data visualization website -

442 April 2018 [Dataset], n.d.. URL https://assets.datacamp.com/production/

443 repositories/2292/datasets/b502094e5de478105cccea959d4f915a7c0afe35/

444 data_viz_website_2018_04.csv.

11445 [14] Nanyu Chen, Min Liu, and Ya Xu. How A/B tests could go wrong: Automatic diagnosis

446 of invalid online experiments. In Proceedings of the Twelfth ACM International Conference

447 on Web Search and Data Mining, WSDM ’19, page 501–509, New York, NY, USA, 2019.

448 Association for Computing Machinery. ISBN 9781450359405. doi: 10.1145/3289600.3291000.

449 URL https://doi.org/10.1145/3289600.3291000.

450 [15] Sisi Chen. A/B tests project — Udacity DAND P2, 2020. URL https://rachelchen0104.

451 medium.com/a-b-tests-project-udacity-dand-p2-55faddd4d5e0. Blog post.

452 [16] Yuhui Chen and Timothy E. Hanson. Bayesian nonparametric k-sample tests for censored and

453 uncensored data. Computational Statistics & Data Analysis, 71:335–346, 2014. ISSN 0167-

454 9473. doi: https://doi.org/10.1016/j.csda.2012.11.003. URL https://www.sciencedirect.

455 com/science/article/pii/S0167947312003945.

456 [17] Jacob Cohen. Statistical Power Analysis for the Behavioral Sciences. Taylor & Francis, 1988.

457 ISBN 9781134742707.

458 [18] Ahmed Dawoud. E-commerce A/B testing, Version 1 [Dataset], 2021. URL https://www.

459 kaggle.com/ahmedmohameddawoud/ecommerce-ab-testing/version/1.

460 [19] Rajeev H. Dehejia and Sadek Wahba. Causal effects in nonexperimental studies: Reevaluating

461 the evaluation of training programs. Journal of the American Statistical Association, 94(448):

462 1053–1062, 1999. ISSN 01621459. URL http://www.jstor.org/stable/2669919.

463 [20] Alex Deng. Objective Bayesian two sample hypothesis testing for online controlled experi-

464 ments. In Proceedings of the 24th International Conference on World Wide Web, WWW ’15

465 Companion, page 923–928, New York, NY, USA, 2015. Association for Computing Machinery.

466 ISBN 9781450334730. doi: 10.1145/2740908.2742563. URL https://doi.org/10.1145/

467 2740908.2742563.

468 [21] Alex Deng, Jiannan Lu, and Shouyuan Chen. Continuous monitoring of A/B tests without pain:

469 Optional stopping in Bayesian testing. In 2016 IEEE International Conference on Data Science

470 and Advanced Analytics (DSAA), pages 243–252, 2016. doi: 10.1109/DSAA.2016.33.

471 [22] Alex Deng, Ulf Knoblich, and Jiannan Lu. Applying the delta method in metric analytics:

472 A practical guide with novel ideas. In Proceedings of the 24th ACM SIGKDD International

473 Conference on Knowledge Discovery & Data Mining, KDD ’18, page 233–242, New York,

474 NY, USA, 2018. Association for Computing Machinery. ISBN 9781450355520. doi: 10.1145/

475 3219819.3219919. URL https://doi.org/10.1145/3219819.3219919.

476 [23] Marco di Biase. The effects of change decomposition on code review - A controlled experi-

477 ment - Online appendix, May 2018. URL https://data.4tu.nl/articles/dataset/

478 The_Effects_of_Change_Decomposition_on_Code_Review_-_A_Controlled_

479 Experiment_-_Online_appendix/12706046/1.

480 [24] Marco di Biase, Magiel Bruntink, Arie van Deursen, and Alberto Bacchelli. The effects of

481 change decomposition on code review—a controlled experiment. PeerJ Computer Science, 5:

482 e193, May 2019. doi: 10.7717/peerj-cs.193. URL https://doi.org/10.7717/peerj-cs.

483 193.

484 [25] Eustache Diemert, Artem Betlei, Christophe Renaudin, and Amini Massih-Reza. A large

485 scale benchmark for uplift modeling, 2018. URL http://ama.imag.fr/~amini/Publis/

486 large-scale-benchmark.pdf. 2018 AdKDD & TargetAd Workshop (in conjunction with

487 KDD ’18).

488 [26] Yadolah Dodge. Kolmogorov–Smirnov Test. In The Concise Encyclopedia of Statistics, pages

489 283–287. Springer New York, New York, NY, 2008. ISBN 978-0-387-32833-1. doi: 10.1007/

490 978-0-387-32833-1_214. URL https://doi.org/10.1007/978-0-387-32833-1_214.

491 [27] Joseph Doyle, Sarah Abraham, Laura Feeney, Sarah Reimer, and Amy Finkelstein. Clinical

492 decision support for high-cost imaging: a randomized clinical trial [Dataset], 2018. URL

493 https://doi.org/10.7910/DVN/BRKDVQ.

12494 [28] Dheeru Dua and Casey Graff. UCI machine learning repository, 2019. URL http://archive.

495 ics.uci.edu/ml.

496 [29] Pascaline Dupas, Vivian Hoffman, Michael Kremer, and Alix Peterson Zwane. Targeting health

497 subsidies through a non-price mechanism: A randomized controlled trial in Kenya, 2016. URL

498 https://doi.org/10.7910/DVN/PBLJXJ.

499 [30] Arpit Dwivedi. Cookie Cats, Version 1 [Dataset], 2020. URL https://www.kaggle.com/

500 arpitdw/cokie-cats/version/1.

501 [31] Osuolale Emmanuel. Ad A/B testing, Version 1 [Dataset], 2020. URL https://www.kaggle.

502 com/osuolaleemmanuel/ad-ab-testing/version/1.

503 [32] Aleksander Fabijan, Pavel Dmitriev, Helena Holmstrom Olsson, and Jan Bosch. Online

504 controlled experimentation at scale: An empirical survey on the current state of A/B testing. In

505 2018 44th Euromicro Conference on Software Engineering and Advanced Applications (SEAA),

506 pages 68–72, 2018. doi: 10.1109/SEAA.2018.00021.

507 [33] Ronald A. Fisher. On the mathematical foundations of theoretical statistics. Philosophical

508 Transactions of the Royal Society of London. Series A, Containing Papers of a Mathematical or

509 Physical Character, 222(594-604):309–368, January 1922. doi: 10.1098/rsta.1922.0009. URL

510 https://doi.org/10.1098/rsta.1922.0009.

511 [34] Committee for Proprietary Medicinal Products. Points to consider on switching between

512 superiority and non-inferiority. British Journal of Clinical Pharmacology, 52(3):223–228, Sep

513 2001. ISSN 0306-5251. doi: 10.1046/j.0306-5251.2001.01397-3.x. URL https://pubmed.

514 ncbi.nlm.nih.gov/11560553.

515 [35] United States. National Commission for the Protection of Human Subjects of Biomedical and

516 Behavioral Research. The Belmont report: ethical principles and guidelines for the protection

517 of human subjects of research, volume 2. The Commission, 1978.

518 [36] Timnit Gebru, Jamie Morgenstern, Briana Vecchione, Jennifer Wortman Vaughan, Hanna

519 Wallach, Hal Daumé III, and Kate Crawford. Datasheets for datasets, 2020.

520 [37] Sander Greenland, Stephen J. Senn, Kenneth J. Rothman, John B. Carlin, Charles Poole,

521 Steven N. Goodman, and Douglas G. Altman. Statistical tests, P values, confidence intervals,

522 and power: a guide to misinterpretations. European Journal of Epidemiology, 31(4):337–

523 350, April 2016. doi: 10.1007/s10654-016-0149-3. URL https://doi.org/10.1007/

524 s10654-016-0149-3.

525 [38] Carrie Grimes, Caroline Buckey, and Diane Tang. A/B testing | Udacity free courses [MOOC].

526 URL https://www.udacity.com/course/ab-testing--ud257.

527 [39] F. Richard Guo, James McQueen, and Thomas S. Richardson. Empirical Bayes for large-scale

528 randomized experiments: a spectral approach, 2020.

529 [40] Kevin Hillstrom. The minethatdata e-mail analytics and data mining challenge, 2008.

530 [41] Henning Hohnhold, Deirdre O’Brien, and Diane Tang. Focusing on the long-term: It’s good

531 for users and business. In Proceedings of the 21th ACM SIGKDD International Conference on

532 Knowledge Discovery and Data Mining, KDD ’15, pages 1849–1858, New York, NY, USA,

533 2015. ACM. ISBN 978-1-4503-3664-2.

534 [42] Chris C. Holmes, François Caron, Jim E. Griffin, and David A. Stephens. Two-sample Bayesian

535 nonparametric hypothesis testing. Bayesian Analysis, 10(2):297 – 320, 2015. doi: 10.1214/

536 14-BA914. URL https://doi.org/10.1214/14-BA914.

537 [43] S. Hopewell, S. Dutton, L.-M. Yu, A.-W. Chan, and D. G Altman. The quality of reports of

538 randomised trials in 2000 and 2006: comparative study of articles indexed in PubMed. BMJ,

539 340(mar23 1):c723–c723, March 2010. doi: 10.1136/bmj.c723. URL https://doi.org/10.

540 1136/bmj.c723.

13You can also read