Event Representation with Sequential, Semi-Supervised Discrete Variables


Mehdi Rezaee, Francis Ferraro

Department of Computer Science, University of Maryland Baltimore County, Baltimore, MD 21250 USA

rezaee1@umbc.edu, ferraro@umbc.edu


Abstract


Within the context of event modeling and

understanding, we propose a new method

arXiv:2010.04361v3 [cs.LG] 13 Apr 2021

Crush occurred at station Train collided with train Killed passengers and injured

for neural sequence modeling that takes

partially-observed sequences of discrete, ex-

ternal knowledge into account. We construct Figure 1: An overview of event modeling, where the ob-

a sequential neural variational autoencoder, served events (black text) are generated via a sequence

which uses Gumbel-Softmax reparametriza- of semi-observed random variables. In this case, the

tion within a carefully defined encoder, to random variables are directed to take on the meaning

allow for successful backpropagation during of semantic frames that can be helpful to explain the

training. The core idea is to allow semi- events. Unobserved frames are in orange and observed

supervised external discrete knowledge to frames are in blue. Some connections are more impor-

guide, but not restrict, the variational latent pa- tant than others, indicated by the weighted arrows.

rameters during training. Our experiments in-

dicate that our approach not only outperforms such as for script learning—complicates the devel-

multiple baselines and the state-of-the-art in

opment of neural generative models.

narrative script induction, but also converges

more quickly. In this paper, we provide a successful approach

for incorporating partially-observed, discrete, se-

1 Introduction quential external knowledge in a neural genera-

tive model. We specifically examine event se-

Event scripts are a classic way of summarizing quence modeling augmented by semantic frames.

events, participants, and other relevant information Frames (Minsky, 1974, i.a.) are a semantic repre-

as a way of analyzing complex situations (Schank sentation designed to capture the common and gen-

and Abelson, 1977). To learn these scripts we eral knowledge we have about events, situations,

must be able to group similar-events together, learn and things. They have been effective in providing

common patterns/sequences of events, and learn crucial information for modeling and understand-

to represent an event’s arguments (Minsky, 1974). ing the meaning of events (Peng and Roth, 2016;

While continuous embeddings can be learned for Ferraro et al., 2017; Padia et al., 2018; Zhang et al.,

events and their arguments (Ferraro et al., 2017; 2020). Though we focus on semantic frames as our

Weber et al., 2018a), the direct inclusion of more source of external knowledge, we argue this work

structured, discrete knowledge is helpful in learn- is applicable to other similar types of knowledge.

ing event representations (Ferraro and Van Durme, We examine the problem of modeling observed

2016). Obtaining fully accurate structured knowl- event tuples as a partially observed sequence of

edge can be difficult, so when the external knowl- semantic frames. Consider the following three

edge is neither sufficiently reliable nor present, a events, preceded by their corresponding bracketed

natural question arises: how can our models use frames, from Fig. 1:

the knowledge that is present?

[E VENT ] crash occurred at station.

Generative probabilistic models provide a frame-

[I MPACT ] train collided with train.

work for doing so: external knowledge is a random

[K ILLING ] killed passengers and injured.

variable, which can be observed or latent, and the

data/observations are generated (explained) from We can see that even without knowing the

it. Knowledge that is discrete, sequential, or both— [K ILLING ] frame, the [E VENT ] and [I MPACT ]frames can help predict the word killed in the third explain the data. Mathematically, the joint proba-

event; the frames summarize the events and can be bility p(x, f ; θ) factorizes as follows

used as guidance for the latent variables to repre-

sent the data. On the other hand, words like crash, p(x, f ; θ) = p(f )p(x|f ; θ), (1)

station and killed from the first and third events

where θ represents the model parameters. Since

play a role in predicting [I MPACT] in the second

in practice maximizing the log-likelihood is in-

event. Overall, to successfully represent events,

tractable, we approximate the posterior by defining

beyond capturing the event to event connections,

q(f |x; φ) and maximize the ELBO (Kingma and

we propose to consider all the information from the

Welling, 2013) as a surrogate objective:

frames to events and frames to frames.

In this work, we study the effect of tying discrete, p(x, f ; θ)

sequential latent variables to partially-observable, Lθ,φ = Eq(f |x;φ) log . (2)

q(f |x; φ)

noisy (imperfect) semantic frames. Like Weber

et al. (2018b), our semi-supervised model is a bidi- In this paper, we are interested in studying a spe-

rectional auto-encoder, with a structured collection cific case; the input x is a sequence of T tokens,

of latent variables separating the encoder and de- we have M sequential discrete latent variables

coder, and attention mechanisms on both the en- z = {zm }M m=1 , where each zm takes F discrete

coder and decoder. Rather than applying vector values. While there have been effective proposals

quantization, we adopt a Gumbel-Softmax (Jang for unsupervised optimization of θ and φ, we focus

et al., 2017) ancestral sampling method to easily on learning partially observed sequences of these

switch between the observed frames and latent variables. That is, we assume that in the training

ones, where we inject the observed frame infor- phase some values are observed while others are

mation on the Gumbel-Softmax parameters before latent. We incorporate this partially observed, ex-

sampling. Overall, our contributions are: ternal knowledge to the φ parameters to guide the

inference. The inferred latent variables later will

• We demonstrate how to learn a VAE that be used to reconstruct to the tokens.

contains sequential, discrete, and partially- Kingma et al. (2014) generalized VAEs to the

observed latent variables. semi-supervised setup, but they assume that the

• We show that adding partially-observed, ex- dataset can be split into observed and unobserved

ternal, semantic frame knowledge to our struc- samples and they have defined separate loss func-

tured, neural generative model leads to im- tions for each case; in our work, we allow portions

provements over the current state-of-the-art of a sequence to be latent. Teng et al. (2020) charac-

on recent core event modeling tasks. Our ap- terized the semi-supervised VAEs via sparse latent

proach leads to faster training convergence. variables; see Mousavi et al. (2019) for an in-depth

• We show that our semi-supervised model, study of additional sparse models.

though developed as a generative model, can Of the approaches that have been developed

effectively predict the labels that it may not for handling discrete latent variables in a neu-

observe. Additionally, we find that our model ral model (Vahdat et al., 2018a,b; Lorberbom

outperforms a discriminatively trained model et al., 2019, i.a.), we use the Gumbel-Softmax

with full supervision. reparametrization (Jang et al., 2017; Maddison

et al., 2016). This approximates a discrete draw

with logits π as softmax( π+g τ ), where g is a vec-

2 Related Work

tor of Gumbel(0, 1) draws and τ is an annealing

Our work builds on both event modeling and la- temperature that allows targeted behavior; its ease,

tent generative models. In this section, we outline customizability, and efficacy are big advantages.

relevant background and related work.

2.2 Event Modeling

2.1 Latent Generative Modeling Sequential event modeling, as in this paper, can

Generative latent variable models learn a mapping be viewed as a type of script or schema induc-

from the low-dimensional hidden variables f to tion (Schank and Abelson, 1977) via language

the observed data points x, where the hidden rep- modeling techniques. Mooney and DeJong (1985)

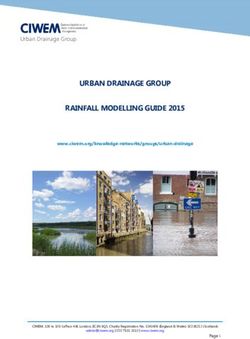

resentation captures the high-level information to provided an early analysis of explanatory schemaENCODER DECODER

Figure 2: Our encoder (left) and decoder (right). The orange nodes mean that the frame is latent ($I_m = 0$), while the blue nodes indicate observed frames ($I_m$ is one-hot). In the encoder, the RNN hidden vectors are aligned with the frames to predict the next frame. The decoder utilizes the inferred frame information in the reconstruction.

generating system to process narratives, and Pichotta and Mooney (2016) applied an LSTM-based model to predict the event arguments. Modi (2016) proposed a neural network model to predict randomly missing events, while Rudinger et al. (2015) showed how neural language modeling can be used for sequential event prediction. Weber et al. (2018a) and Ding et al. (2019) used tensor-based decomposition methods for event representation. Weber et al. (2020) studied causality in event modeling via a latent neural language model.

Previous work has also examined how to incorporate or learn various forms of semantic representations while modeling events. Cheung et al. (2013) introduced an HMM-based model to explain event sequences via latent frames. Materna (2012), Chambers (2013) and Bamman et al. (2013) provided structured graphical models to learn event models over syntactic dependencies; Ferraro and Van Durme (2016) unified and generalized these approaches to capture varying levels of semantic forms and representation. Kallmeyer et al. (2018) proposed a Bayesian network based on a hierarchical dependency between syntactic dependencies and frames. Ribeiro et al. (2019) provided an analysis of clustering predicates and their arguments to infer semantic frames.

Variational autoencoders and attention networks (Kingma and Welling, 2013; Bahdanau et al., 2014) allowed Bisk et al. (2019) to use RNNs with attention to capture the abstract and concrete script representations. Weber et al. (2018b) came up with a recurrent autoencoder model (HAQAE), which used vector-quantization to learn hierarchical dependencies among discrete latent variables and an observed event sequence. Kiyomaru et al. (2019) suggested generating next events using a conditional VAE-based model.

In another thread of research, Chen et al. (2018) utilized labels in conjunction with latent variables, but unlike Weber et al. (2018b), their model's latent variables are conditionally independent and do not form a hierarchy. Sønderby et al. (2016) proposed a sequential latent structure with Gaussian latent variables. Liévin et al. (2019), similar to our model structure, provided an analysis of hierarchical relaxed categorical sampling but for the unsupervised settings.

3 Method

Our focus in this paper is modeling sequential event structure. In this section, we describe our variational autoencoder model, and demonstrate how partially-observed external knowledge can be injected into the learning process. We provide an overview of our joint model in §3.1 and Fig. 2. Our model operates on sequences of events: it consists of an encoder (§3.2) that encodes the sequence of events as a new sequence of frames (higher-level, more abstract representations), and a decoder (§3.3) that learns how to reconstruct the original sequence of events from the representation provided by the encoder. During training (§3.4), the model can make use of partially-observed sequential knowledge to enrich the representations produced by the encoder. In §3.5 we summarize the novel aspects of our model.

3.1 Model Setup

We define each document as a sequence of $M$ events. In keeping with previous work on event representation, each event is represented as a lexicalized 4-tuple: the core event predicate (verb), two main arguments (subject and object), and event modifier (if applicable) (Pichotta and Mooney, 2016; Weber et al., 2018b). For simplicity, we can write each document as a sequence of $T$ words $w = \{w_t\}_{t=1}^{T}$, where $T = 4M$ and each $w_t$ is from a vocabulary of size $V$.¹ Fig. 1 gives an example of 3 events: during learning (but not testing) our model would have access to some, but not all, frames to lightly guide training (in this case, the first two frames but not the third).

While lexically rich, this 4-tuple representation is limited in the knowledge that can be directly encoded. Therefore, our model assumes that each document $w$ can be explained jointly with a collection of $M$ random variables $f_m$: $f = \{f_m\}_{m=1}^{M}$. The joint probability for our model factorizes as

$$p(w, f) = \prod_{t=1}^{T} p(w_t \mid f, w_{<t}) \prod_{m=1}^{M} \cdots$$

[…] reconstruction term learns to generate the observed events $w$ across all valid encodings $f$, while the KL term uses the prior $p(f)$ to regularize $q$.

Optimizing Eq. (4) is in general intractable, so we sample $S$ chains of variables $f^{(1)}, \ldots, f^{(S)}$ from $q(f \mid w)$ and approximate Eq. (4) as

$$\mathcal{L} \approx \frac{1}{S} \sum_{s} \left[ \log p(w \mid f^{(s)}) + \alpha_q \log \frac{p(f^{(s)})}{q(f^{(s)} \mid w)} + \alpha_c \mathcal{L}_c\big(q(f^{(s)} \mid w)\big) \right]. \tag{5}$$

As our model is designed to allow the injection of external knowledge $I$, we define the variational distribution as $q(f \mid w; I)$. In our experiments, $I_m$ is a binary vector encoding which (if any) frame is observed for event $m$.³ For example in Fig. 1, we […]

Algorithm 1 Encoder: The following algorithm shows how we compute the next frame $f_m$ given the previous frame $f_{m-1}$. We compute and return a hidden frame representation $\gamma_m$, and $f_m$ via a continuous Gumbel-Softmax reparametrization.

Input: previous frame $f_{m-1} \in \mathbb{R}^{F}$, current frame observation ($I_m$), encoder GRU hidden states $H \in \mathbb{R}^{T \times d_h}$.

Parameters: $W_{in} \in \mathbb{R}^{d_h \times d_e}$, $W_{out} \in \mathbb{R}^{F \times d_h}$, frame embeddings $E^F \in \mathbb{R}^{F \times d_e}$

Output: $f_m$, $\gamma_m$

1: $e_{f_{m-1}} = f_{m-1}^{\top} E^F$

2: $\alpha \leftarrow \mathrm{Softmax}(H W_{in} e_{f_{m-1}}^{\top})$ ▷ Attn. Scores

3: $c_m \leftarrow H^{\top} \alpha$ ▷ Context Vector

4: $\gamma'_m \leftarrow W_{out} \left( \tanh(W_{in} e_{f_{m-1}}) + \tanh(c_m) \right)$

5: $\gamma_m \leftarrow \gamma'_m + \lVert \gamma'_m \rVert \, I_m$ ▷ Observation

6: $q(f_m \mid f_{m-1}) \leftarrow \mathrm{GumbelSoftmax}(\gamma_m)$

7: $f_m \sim q(f_m \mid f_{m-1})$ ▷ $f_m \in \mathbb{R}^{F}$

Next, it calculates the similarity between $e_{f_{m-1}}$ and RNN hidden representations and all recurrent hidden states $H$ (line 2). After deriving the attention scores, the weighted average of hidden states ($c_m$) summarizes the role of tokens in influencing the frame $f_m$ for the $m$th event (line 3). We then combine the previous frame embedding $e_{f_{m-1}}$ and the current context vector $c_m$ to obtain a representation $\gamma'_m$ for the $m$th event (line 4).

While $\gamma'_m$ may be an appropriate representation if no external knowledge is provided, our encoder needs to be able to inject any provided external knowledge $I_m$. Our model defines a chain of variables (some of which may be observed and some of which may be latent), so care must be taken to preserve the gradient flow within the network. We note that an initial strategy of solely using $I_m$ instead of $f_m$ (whenever $I_m$ is provided) is not sufficient to ensure gradient flow. Instead, we incorporate the observed information given by $I_m$ by adding this information to the output of the encoder logits before drawing $f_m$ samples (line 5). This remedy motivates the encoder to softly increase the importance of the observed frames during the training. Finally, we draw $f_m$ from the Gumbel-Softmax distribution (line 7).

For example, in Fig. 1, when the model knows that [IMPACT] is triggered, it increases the value of [IMPACT] in $\gamma_m$ to encourage [IMPACT] to be sampled, but it does not prevent other frames from being sampled. On the other hand, when a frame is not observed in training, such as for the third event ([KILLING]), $\gamma_m$ is not adjusted.

Since each draw $f_m$ from a Gumbel-Softmax is a simplex vector, given learnable frame embeddings $E^F$, we can obtain an aggregate frame representation $e_m$ by calculating $e_m = f_m^{\top} E^F$. This can be thought of as roughly extracting row $m$ from $E^F$ for low entropy draws $f_m$, and using many frames in the representation for high entropy draws. Via the temperature hyperparameter, the Gumbel-Softmax allows us to control the entropy.

3.3 Decoder

Our decoder (Alg. 2) must be able to reconstruct the input event token sequence from the frame representations $f = (f_1, \ldots, f_M)$ computed by the encoder. In contrast to the encoder, the decoder is relatively simple: we use an auto-regressive (left-to-right) GRU to produce hidden representations $z_t$ for each token we need to reconstruct, but we enrich that representation via an attention mechanism over $f$. Specifically, we use both $f$ and the same learned frame embeddings $E^F$ from the encoder to compute inferred, contextualized frame embeddings as $E^M = f E^F \in \mathbb{R}^{M \times d_e}$. For each output token (time step $t$), we align the decoder GRU hidden state $h_t$ with the rows of $E^M$ (line 1). After calculating the scores for each frame embedding, we obtain the output context vector $c_t$ (line 2), which is used in conjunction with the hidden state of the decoder $z_t$ to generate the $w_t$ token (line 5). In Fig. 1, the collection of all the three frames and the tokens from the first event will be used to predict the Train token from the second event.

Algorithm 2 Decoder: To (re)generate each token in the event sequence, we compute an attention $\hat{\alpha}$ over the sequence of frame random variables $f$ (from Alg. 1). This attention weights each frame's contribution to generating the current word.

Input: $E^M \in \mathbb{R}^{M \times d_e}$ (computed as $f E^F$), decoder's current hidden state $z_t \in \mathbb{R}^{d_h}$

Parameters: $\hat{W}_{in} \in \mathbb{R}^{d_e \times d_h}$, $\hat{W}_{out} \in \mathbb{R}^{V \times d_e}$, $E^F$

Output: $w_t$

1: $\hat{\alpha} \leftarrow \mathrm{Softmax}(E^M \hat{W}_{in} z_t)$ ▷ Attn. Scores

2: $c_t \leftarrow E^{M\top} \hat{\alpha}$ ▷ Context Vector

3: $g \leftarrow \hat{W}_{out} \left( \tanh(\hat{W}_{in} z_t) + \tanh(c_t) \right)$

4: $p(w_t \mid f; z_t) \propto \exp(g)$

5: $w_t \sim p(w_t \mid f; z_t)$

3.4 Training Process

We now analyze the different terms in Eq. (5). In our experiments, we have set the number of samples $S$ to be 1. From Eq. (6), and using the sampled sequence of frames $f_1, f_2, \ldots, f_M$ from our encoder, we approximate the reconstruction term as $\mathcal{L}_w = \sum_t \log p(w_t \mid f_1, f_2, \ldots, f_M; z_t)$, where $z_t$ is the decoder GRU's hidden representation after having reconstructed the previous $t - 1$ words in the event sequence.

Looking at the KL-term, we define the prior frame-to-frame distribution as $p(f_m \mid f_{m-1}) = 1/F$, and let the variational distribution capture the dependency between the frames. A similar type of strategy has been exploited successfully by Chen et al. (2018) to make computation simpler. We see the computational benefits of a uniform prior: splitting the KL term into $\mathbb{E}_{q(f \mid w)} \log p(f) - \mathbb{E}_{q(f \mid w)} \log q(f \mid w)$ allows us to neglect the first term. For the second term, we normalize the Gumbel-Softmax logits $\gamma_m$, i.e., $\gamma_m = \mathrm{Softmax}(\gamma_m)$, and compute

$$\mathcal{L}_q = -\mathbb{E}_{q(f \mid w)} \log q(f \mid w) \approx -\sum_{m} \gamma_m^{\top} \log \gamma_m.$$

$\mathcal{L}_q$ encourages the entropy of the variational distribution to be high, which makes it hard for the encoder to distinguish the true frames from the wrong ones. We add a fixed and constant regularization coefficient $\alpha_q$ to decrease the effect of this term (Bowman et al., 2015). We define the classification loss as $\mathcal{L}_c = -\sum_{I_m > 0} I_m^{\top} \log \gamma_m$, to encourage $q$ to be good at predicting any frames that were actually observed. We weight $\mathcal{L}_c$ by a fixed coefficient $\alpha_c$. Summing these losses together, we arrive at our objective function:

$$\mathcal{L} = \mathcal{L}_w + \alpha_q \mathcal{L}_q + \alpha_c \mathcal{L}_c. \tag{7}$$

3.5 Relation to prior event modeling

A number of efforts have leveraged frame induction for event modeling (Cheung et al., 2013; Chambers, 2013; Ferraro and Van Durme, 2016; Kallmeyer et al., 2018; Ribeiro et al., 2019). These methods are restricted to explicit connections between events and their corresponding frames; they do not capture all the possible connections between the observed events and frames. Weber et al. (2018b) proposed a hierarchical unsupervised attention structure (HAQAE) that corrects for this. HAQAE uses vector quantization (Van Den Oord et al., 2017) to capture sequential event structure via tree-based latent variables.

Our model is related to HAQAE (Weber et al., 2018b), though with important differences. While HAQAE relies on unsupervised deterministic inference, we aim to incorporate the frame information in a softer, guided fashion. The core differences are: our model supports partially-observed frame sequences (i.e., semi-supervised learning); the linear-chain connection among the event variables in our model is simpler than the tree-based structure in HAQAE; while both works use attention mechanisms in the encoder and decoder, our attention mechanism is based on addition rather than concatenation; and our handling of latent discrete variables is based on the Gumbel-Softmax reparametrization, rather than vector quantization. We discuss these differences further in Appendix C.1.

4 Experimental Results

We test the performance of our model on a portion of the Concretely Annotated Wikipedia dataset (Ferraro et al., 2014), which is a dump of English Wikipedia that has been annotated with the outputs of more than a dozen NLP analytics; we use this as it has readily-available FrameNet annotations provided via SemaFor (Das et al., 2014). Our training data has 457k documents, our validation set has 16k documents, and our test set has 21k documents. More than 99% of the frames are concentrated in the first 500 most common frames, so we set $F = 500$. Nearly 15% of the events did not have any frame, many of which were due to auxiliary/modal verb structures; as a result, we did not include them. For all the experiments, the vocabulary size ($V$) is set as 40k and the number of events ($M$) is 5; this is to maintain comparability with HAQAE. For the documents that had more than 5 events, we extracted the first 5 events that had frames. For both the validation and test datasets, we have set $I_m = 0$ for all the events; frames are only observed during training.

Documents are fed to the model as a sequence of events with verb, subj, object and modifier elements. The events are separated with a special separating <TUP> token and the missing elements are represented with a special NONE token. In order to facilitate semi-supervised training and examine the impact of frame knowledge, we introduce a user-set value $\epsilon$: in each document, for event $m$, the true value of the frame is preserved in $I_m$ with probability $\epsilon$, while with probability $1 - \epsilon$ we set $I_m = 0$. This is set and fixed prior to each experiment.
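The frame-masking step described above, together with the observation step of Alg. 1 (line 5) and the Gumbel-Softmax relaxation from §2.1, can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names, the argument name `epsilon`, and the toy frame indices are all hypothetical, and plain Python lists stand in for tensors.

```python
import math
import random


def mask_frame_observations(frame_ids, num_frames, epsilon, rng):
    """Build one observation vector I_m per event.

    Each true frame is kept as a one-hot vector with probability epsilon;
    otherwise I_m is all zeros, meaning the frame is treated as latent.
    """
    observations = []
    for frame_id in frame_ids:
        vec = [0.0] * num_frames
        if rng.random() < epsilon:
            vec[frame_id] = 1.0
        observations.append(vec)
    return observations


def inject_observation(logits, observation):
    """Observation step: gamma = gamma' + ||gamma'|| * I_m."""
    norm = math.sqrt(sum(v * v for v in logits))
    return [v + norm * o for v, o in zip(logits, observation)]


def gumbel_softmax_sample(logits, temperature, rng):
    """Relaxed categorical draw: softmax((logits + g) / temperature)."""
    noisy = []
    for v in logits:
        u = max(rng.random(), 1e-12)      # guard against log(0)
        g = -math.log(-math.log(u))       # Gumbel(0, 1) noise
        noisy.append((v + g) / temperature)
    top = max(noisy)                      # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in noisy]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
frame_ids = [2, 0, 1]                     # hypothetical gold frame indices for three events
observations = mask_frame_observations(frame_ids, num_frames=4, epsilon=0.7, rng=rng)
logits = inject_observation([0.5, -1.0, 2.0, 0.0], observations[0])
sample = gumbel_softmax_sample(logits, temperature=0.5, rng=rng)
```

When an event's observation vector is all zeros, `inject_observation` leaves the logits unchanged, which matches the description that latent frames are not adjusted; an observed frame only has its logit raised, so other frames can still be sampled.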

For all the experiments we set $\alpha_q$ and $\alpha_c$ to 0.1, found empirically on the validation data.

(a) Per-word perplexity (PPL)

| Model | $\epsilon$ | Valid | Test |
|---|---|---|---|
| RNNLM | - | 61.34 ±2.05 | 61.80 ±4.81 |
| RNNLM+ROLE | - | 66.07 ±0.40 | 60.99 ±2.11 |
| HAQAE | - | 24.39 ±0.46 | 21.38 ±0.25 |
| Ours | 0.0 | 41.18 ±0.69 | 36.28 ±0.74 |
| Ours | 0.2 | 38.52 ±0.83 | 33.31 ±0.63 |
| Ours | 0.4 | 37.79 ±0.52 | 33.12 ±0.54 |
| Ours | 0.5 | 35.84 ±0.66 | 31.11 ±0.85 |
| Ours | 0.7 | 24.20 ±1.07 | 21.19 ±0.76 |
| Ours | 0.8 | 23.68 ±0.75 | 20.77 ±0.73 |
| Ours | 0.9 | 22.52 ±0.62 | 19.84 ±0.52 |

(a) Validation and test per-word perplexities (lower is better). We always outperform RNNLM and RNNLM+ROLE, and outperform HAQAE when automatically extracted frames are sufficiently available during training ($\epsilon \in \{0.7, 0.8, 0.9\}$).

(b) Inverse Narrative Cloze (Inv Narr Cloze)

| Model | $\epsilon$ | Wiki Valid | Wiki Test | NYT Valid | NYT Test |
|---|---|---|---|---|---|
| RNNLM | - | 20.33 ±0.56 | 21.37 ±0.98 | 18.11 ±0.41 | 17.86 ±0.80 |
| RNNLM+ROLE | - | 19.57 ±0.68 | 19.69 ±0.97 | 17.56 ±0.10 | 17.95 ±0.25 |
| HAQAE | - | 29.18 ±1.40 | 24.88 ±1.35 | 20.5 ±1.31 | 22.11 ±0.49 |
| Ours | 0.0 | 43.80 ±2.93 | 45.75 ±3.47 | 29.40 ±1.17 | 28.63 ±0.37 |
| Ours | 0.2 | 45.78 ±1.53 | 44.38 ±2.10 | 29.50 ±1.13 | 29.30 ±1.45 |
| Ours | 0.4 | 47.65 ±3.40 | 47.88 ±3.59 | 30.01 ±1.27 | 30.61 ±0.37 |
| Ours | 0.5 | 42.38 ±2.41 | 40.18 ±0.90 | 29.36 ±1.58 | 29.95 ±0.97 |
| Ours | 0.7 | 38.40 ±1.20 | 39.08 ±1.55 | 29.15 ±0.95 | 30.13 ±0.66 |
| Ours | 0.8 | 39.48 ±3.02 | 38.96 ±2.75 | 29.50 ±0.30 | 30.33 ±0.81 |
| Ours | 0.9 | 35.61 ±0.62 | 35.56 ±1.70 | 28.41 ±0.29 | 29.01 ±0.84 |

(b) Inverse Narrative Cloze scores (higher is better), averaged across 3 runs, with standard deviation reported. Some frame observation ($\epsilon = 0.4$) is most effective across Wikipedia and NYT, though we outperform our baselines, including the SOTA, at any level of frame observation. For the NYT dataset, we first trained the model on the Wikipedia dataset and then did the tests on the NYT valid and test inverse narrative cloze datasets.

Table 1: Validation and test results for per-word perplexity (Table 1a: lower is better) and inverse narrative cloze accuracy (Table 1b: higher is better). Recall that $\epsilon$ is the (average) percent of frames observed during training though during evaluation no frames are observed.

Setup. We represent words by their pretrained GloVe 300 embeddings and use gradient clipping at 5.0 to prevent exploding gradients. We use a two-layer bidirectional GRU for the encoder, and a two-layer unidirectional GRU for the decoder (with a hidden dimension of 512 for both). See Appendix B for additional computational details.⁴

Baselines. In our experiments, we compare our proposed methods against the following methods:

• RNNLM: We report the performance of a sequence to sequence language model with the same structure used in our own model: a bidirectional GRU cell with two layers, hidden dimension of 512, gradient clipping at 5 and GloVe 300 embeddings to represent words.

• RNNLM+ROLE (Pichotta and Mooney, 2016): This model has the same structure as RNNLM, but the role of each token (verb, subject, object, modifier), represented as a learnable embedding vector, is concatenated to the token embeddings before being fed to the model. The embedding dimension for roles is 300.

• HAQAE (Weber et al., 2018b): This work is the most similar to ours. For fairness, we seed HAQAE with the same dimension GRUs and pretrained embeddings.

4.1 Evaluations

To measure the effectiveness of our proposed model for event representation, we first report the perplexity and Inverse Narrative Cloze metrics.

Perplexity. We summarize our per-word perplexity results in Table 1a, which compares our event chain model, with varying $\epsilon$ values, to the three baselines.⁵ Recall that $\epsilon$ refers to the (average) percent of frames observed during training. During evaluation no frames are observed; this ensures a fair comparison to our baselines.

As clearly seen, our model outperforms other baselines across both the validation and test datasets. We find that increasing the observation probability $\epsilon$ consistently yields performance improvement. For any value of $\epsilon$ we outperform RNNLM and RNNLM+ROLE. HAQAE outperforms our model for $\epsilon \le 0.5$, while we outperform HAQAE for $\epsilon \in \{0.7, 0.8, 0.9\}$. This suggests that while the tree-based latent structure can be helpful when external, semantic knowledge is not sufficiently available during training, a simpler linear structure can be successfully guided by that knowledge when it is available. Finally, recall that the external frame annotations are automatically provided, without human curation: this suggests that our model does not require perfectly curated annotations. These observations support the hypothesis that frame observations, in conjunction with latent variables, provide a benefit to event modeling.

⁴ https://github.com/mmrezaee/SSDVAE

⁵ In our case, perplexity provides an indication of the model's ability to predict the next event and arguments.

| Tokens | $\beta_{enc}$ (Frames Given Tokens) |
|---|---|
| kills | KILLING, DEATH, HUNTING_SUCCESS_OR_FAILURE, HIT_TARGET, ATTACK |
| paper | SUBMITTING_DOCUMENTS, SUMMARIZING, SIGN, DECIDING, EXPLAINING_THE_FACTS |
| business | COMMERCE_PAY, COMMERCE_BUY, EXPENSIVENESS, RENTING, REPORTING |

| Frames | $\beta_{dec}$ (Tokens Given Frames) |
|---|---|
| CAUSATION | raise, rendered, caused, induced, brought |
| PERSONAL_RELATIONSHIP | dated, dating, married, divorced, widowed |
| FINISH_COMPETITION | lost, compete, won, competed, competes |

Table 2: Results for the outputs of the attention layer: the upper table shows $\beta_{enc}$ and the bottom table shows $\beta_{dec}$, when $\epsilon = 0.7$. Each row shows the top 5 words for each clustering.
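For reference, per-word perplexity of the kind reported in Table 1a is conventionally the exponentiated average negative log-likelihood per token. The sketch below assumes that natural-log token probabilities have already been obtained from some model; the function name and the toy inputs are hypothetical and are not taken from the released code.

```python
import math


def per_word_perplexity(token_log_probs):
    """Perplexity = exp(-(1/N) * sum of natural-log token probabilities)."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# Toy usage: four tokens, each assigned probability 0.25 by a hypothetical model.
toy_log_probs = [math.log(0.25)] * 4
toy_ppl = per_word_perplexity(toy_log_probs)
```

Lower values mean the model assigns higher probability to the observed tokens, which is why Table 1a is read as "lower is better".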

Inverse Narrative Cloze This task was proposed by Weber et al. (2018b) to evaluate the ability of models to identify the legitimate sequence of events among distractor sequences. For this task, we created two Wiki-based datasets from our validation and test datasets, each with 2k samples. Each sample has 6 options in which the first events are the same and only one of the options represents the actual sequence of events. All the options have a fixed size of 6 events, and the one that has the lowest perplexity is selected as the correct one. We also consider two NYT inverse narrative cloze datasets that are publicly available.6 All the models are trained on the Wiki dataset and then classifications are done on the NYT dataset (no NYT training data was publicly available).

Table 1b presents the results for this task. Our method tends to achieve a superior classification score over all the baselines, even for small ε. Our model also yields performance improvements on the NYT validation and test datasets. We observe that the inverse narrative cloze scores for the NYT datasets are almost independent of ε. We suspect this is due to the different domains of training (Wikipedia) and testing (newswire).

Note that while our model's perplexity improved monotonically as ε increased, we do not see monotonic changes, with respect to ε, for this task. By examining computed quantities from our model, we observed both that a high ε resulted in very low entropy attention and that frames very often attended to the verb of the event; the model learned this association despite never being explicitly directed to. While this is a benefit to localized next word prediction (i.e., perplexity), it is detrimental to inverse narrative cloze. On the other hand, lower ε resulted in slightly higher attention entropy, suggesting that less peaky attention allows the model to capture more of the entire event sequence and improve global coherence.

4.2 Qualitative Analysis of Attention

To illustrate the effectiveness of our proposed attention mechanism, in Table 2 we show the most likely frames given tokens ($\beta_{enc}$), and tokens given frames ($\beta_{dec}$). We define $\beta_{enc} = W_{out} \tanh(H^{\top})$ and $\beta_{dec} = \hat{W}_{out} \tanh((E^{M})^{\top})$, where $\beta_{enc} \in \mathbb{R}^{F \times T}$ provides a contextual token-to-frame soft clustering matrix for each document and, analogously, $\beta_{dec} \in \mathbb{R}^{V \times M}$ provides a frame-to-word soft clustering contextualized in part based on the inferred frames. We argue that these clusters are useful for analyzing and interpreting the model and its predictions. Our experiments demonstrate that the frames in the encoder (Table 2, top) mostly attend to the verbs and, similarly, the decoder utilizes expected and reasonable frames to predict the next verb. Note that we have not restricted the connection between frames and tokens: the attention mechanism makes the ultimate decision for these connections.

We note that these clusters are a result of our attention mechanisms. Recall that in both the encoder and decoder algorithms, after computing the context vectors, we use the addition of two tanh(·) functions with the goal of separating the GRU hidden states and frame embeddings (line 3). This is a different computation from the bi-linear attention mechanism (Luong et al., 2015) that applies the tanh(·) function over concatenation. Our additive approach was inspired by the neural topic modeling method from Dieng et al. (2016), which similarly uses additive factors to learn an expressive and predictive neural component and the classic "topics" (distributions/clusters over words) that traditional topic models excel at finding. While theoretical guarantees are beyond our scope, qualitative analyses suggest that our additive attention lets the model learn reasonable soft clusters of tokens into frame-based "topics." See Table 6 in the Appendix for an empirical comparison and validation of our use of addition rather than concatenation in the attention mechanisms.

6 https://git.io/Jkm46

[Figure 3 plot: y-axis Heldout NLL, x-axis Epoch; legend: HAQAE, Ours (ε=0.2), Ours (ε=0.7), Ours (ε=0.9).]

                        Valid                   Test
Model          ε        Acc    Prec   F1        Acc    Prec   F1
RNNLM          -        0.89   0.73   0.66      0.88   0.71   0.65
RNNLM + ROLE   -        0.89   0.75   0.69      0.88   0.74   0.68
Ours           0.00     0.00   0.00   0.00      0.00   0.00   0.00
Ours           0.20     0.59   0.27   0.28      0.58   0.27   0.28
Ours           0.40     0.77   0.49   0.50      0.77   0.49   0.50
Ours           0.50     0.79   0.51   0.48      0.79   0.50   0.48
Ours           0.70     0.85   0.69   0.65      0.84   0.69   0.65
Ours           0.80     0.86   0.77   0.74      0.85   0.76   0.74
Ours           0.90     0.87   0.81   0.78      0.86   0.81   0.79

Table 3: Accuracy and macro precision and F1-score, averaged across three different runs. We present standard deviations in Table 5.
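The inverse narrative cloze decision rule described earlier in this section (score every candidate sequence and keep the one with the lowest perplexity) can be sketched as follows. This is a minimal illustration under stated assumptions: the per-token natural-log probabilities are taken as given from some trained language model, and the function names and toy numbers are hypothetical, not taken from the authors' code.

```python
import math

def perplexity(token_log_probs):
    """Per-token perplexity from natural-log token probabilities."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))

def pick_sequence(candidates):
    """Return (index of the lowest-perplexity candidate, all perplexities)."""
    scores = [perplexity(lp) for lp in candidates]
    best = min(range(len(scores)), key=scores.__getitem__)
    return best, scores

def cloze_accuracy(samples):
    """samples: list of (candidates, gold_index) pairs."""
    hits = sum(pick_sequence(cands)[0] == gold for cands, gold in samples)
    return hits / len(samples)

# Toy sample with six options; each option is a list of token log-probabilities.
options = [[math.log(p)] * 4 for p in (0.10, 0.20, 0.40, 0.05, 0.15, 0.30)]
best, scores = pick_sequence(options)
```

Because every option in the task has the same number of events, normalizing by length mainly keeps the scores on the familiar per-token perplexity scale.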

4.3 How Discriminative Is A Latent Node?

Though we develop a generative model, we want to make sure the latent nodes are capable of leveraging the frame information in the decoder. We examine this by assessing the ability of one single latent node to classify the frame for an event. We repurpose the Wikipedia language modeling dataset into a new training dataset with 1,643,656 samples, a validation set with 57,261 samples, and a test set with 75,903 samples. We used 500 frame labels. Each sample is a single event. We fixed the number of latent nodes to be one. We use RNNLM and RNNLM+ROLE as baselines, adding a linear classifier layer followed by the softplus function on top of the bidirectional GRU last hidden vectors and a dropout of 0.15 on the logits. We trained all the models with the aforementioned training dataset, and tuned the hyperparameters on the validation dataset.

We trained the RNNLM and RNNLM+ROLE baselines in a purely supervised way, whereas our model mixed supervised (discriminative) and unsupervised (generative) training. The baselines observed all of the frame labels in the training set; our model only observed frame values in training with probability ε, which it predicted from γ_m. The parameters leading to the highest accuracy were chosen to evaluate the classification on the test dataset. The results for this task are summarized in Table 3. Our method is an attention-based model which captures all the dependencies in each event to construct the latent representation, but the baselines are autoregressive models. Our encoder acts like a discriminative classifier to predict the frames, which are later used in the decoder to construct the events. We expect the model performance to be comparable to RNNLM and RNNLM+ROLE in terms of classification when ε is high. Our model with larger ε tends to achieve better performance in terms of macro precision and macro F1-score.

Figure 3: Validation NLL during training of our model with ε ∈ {0.2, 0.7, 0.9} and HAQAE (the red curve). Epochs are displayed with a square root transform.

4.4 Training Speed

Our experiments show that our proposed approach converges faster than the existing HAQAE model. For fairness, we have used the same data-loader, a batch size of 100, a learning rate of 10^-3, and the Adam optimizer. In Fig. 3, on average each iteration takes 0.2951 seconds for HAQAE and 0.2958 seconds for our model. From Fig. 3 we can see that for sufficiently high values of ε our model converges both better, in terms of negative log-likelihood (NLL), and faster; even for small ε, our model still converges much faster. The reasons for this can be boiled down to utilizing Gumbel-Softmax rather than VQ-VAE, and also to jointly injecting information in the form of frames.

5 Conclusion

We showed how to learn a semi-supervised VAE with partially observed, sequential, discrete latent variables. We used Gumbel-Softmax and a modified attention to learn a highly effective event language model (low perplexity), predictor of how an initial event may progress (improved inverse narrative cloze), and a task-based classifier (outperforming fully supervised systems). We believe that future work could extend our method by incorporating other sources or types of knowledge (such as entity or "commonsense" knowledge), and by using other forms of a prior distribution, such as "plug-and-play" priors (Guo et al., 2019; Mohammadi et al., 2021; Laumont et al., 2021).

Acknowledgements and Funding Disclosure

We would like to thank the anonymous reviewers for their comments, questions, and suggestions. Some experiments were conducted on the UMBC HPCF, supported by the National Science Foundation under Grant No. CNS-1920079. This material is based in part upon work supported by the National Science Foundation under Grant Nos. IIS-1940931 and IIS-2024878. This material is also based on research that is in part supported by the Air Force Research Laboratory (AFRL), DARPA, for the KAIROS program under agreement number FA8750-19-2-1003. The U.S. Government is authorized to reproduce and distribute reprints for Governmental purposes notwithstanding any copyright notation thereon. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies or endorsements, either express or implied, of the Air Force Research Laboratory (AFRL), DARPA, or the U.S. Government.

References

Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio. 2014. Neural machine translation by jointly learning to align and translate. arXiv preprint arXiv:1409.0473.

David Bamman, Brendan O'Connor, and Noah A. Smith. 2013. Learning latent personas of film characters. In ACL.

Yonatan Bisk, Jan Buys, Karl Pichotta, and Yejin Choi. 2019. Benchmarking hierarchical script knowledge. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4077–4085.

Samuel R Bowman, Luke Vilnis, Oriol Vinyals, Andrew M Dai, Rafal Jozefowicz, and Samy Bengio. 2015. Generating sentences from a continuous space. arXiv preprint arXiv:1511.06349.

Nathanael Chambers. 2013. Event schema induction with a probabilistic entity-driven model. In Proceedings of the 2013 Conference on Empirical Methods in Natural Language Processing, pages 1797–1807.

Mingda Chen, Qingming Tang, Karen Livescu, and Kevin Gimpel. 2018. Variational sequential labelers for semi-supervised learning. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 215–226.

Jackie Chi Kit Cheung, Hoifung Poon, and Lucy Vanderwende. 2013. Probabilistic frame induction. NAACL HLT 2013, pages 837–846.

Dipanjan Das, Desai Chen, André FT Martins, Nathan Schneider, and Noah A Smith. 2014. Frame-semantic parsing. Computational Linguistics, 40(1):9–56.

Adji B Dieng, Chong Wang, Jianfeng Gao, and John Paisley. 2016. TopicRNN: A recurrent neural network with long-range semantic dependency. arXiv preprint arXiv:1611.01702.

Xiao Ding, Kuo Liao, Ting Liu, Zhongyang Li, and Junwen Duan. 2019. Event representation learning enhanced with external commonsense knowledge. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), pages 4896–4905.

Francis Ferraro, Adam Poliak, Ryan Cotterell, and Benjamin Van Durme. 2017. Frame-based continuous lexical semantics through exponential family tensor factorization and semantic proto-roles. In Proceedings of the 6th Joint Conference on Lexical and Computational Semantics (*SEM 2017), pages 97–103, Vancouver, Canada. Association for Computational Linguistics.

Francis Ferraro, Max Thomas, Matthew R. Gormley, Travis Wolfe, Craig Harman, and Benjamin Van Durme. 2014. Concretely Annotated Corpora. In 4th Workshop on Automated Knowledge Base Construction (AKBC), Montreal, Canada.

Francis Ferraro and Benjamin Van Durme. 2016. A unified Bayesian model of scripts, frames and language. In Thirtieth AAAI Conference on Artificial Intelligence.

Bichuan Guo, Yuxing Han, and Jiangtao Wen. 2019. AGEM: Solving linear inverse problems via deep priors and sampling. Advances in Neural Information Processing Systems, 32:547–558.

Eric Jang, Shixiang Gu, and Ben Poole. 2017. Categorical reparameterization with Gumbel-Softmax. In ICLR.

Laura Kallmeyer, Behrang QasemiZadeh, and Jackie Chi Kit Cheung. 2018. Coarse lexical frame acquisition at the syntax–semantics interface using a latent-variable PCFG model. In Proceedings of the Seventh Joint Conference on Lexical and Computational Semantics, pages 130–141.

Diederik P Kingma and Max Welling. 2013. Auto-encoding variational Bayes. arXiv preprint arXiv:1312.6114.

Durk P Kingma, Shakir Mohamed, Danilo Jimenez Rezende, and Max Welling. 2014. Semi-supervised learning with deep generative models. In Advances in Neural Information Processing Systems, pages 3581–3589.

Hirokazu Kiyomaru, Kazumasa Omura, Yugo Murawaki, Daisuke Kawahara, and Sadao Kurohashi. 2019. Diversity-aware event prediction based on a conditional variational autoencoder with reconstruction. In Proceedings of the First Workshop on Commonsense Inference in Natural Language Processing, pages 113–122.

Rémi Laumont, Valentin De Bortoli, Andrés Almansa, Julie Delon, Alain Durmus, and Marcelo Pereyra. 2021. Bayesian imaging using plug & play priors: when Langevin meets Tweedie. arXiv preprint arXiv:2103.04715.

Valentin Liévin, Andrea Dittadi, Lars Maaløe, and Ole Winther. 2019. Towards hierarchical discrete variational autoencoders. In 2nd Symposium on Advances in Approximate Bayesian Inference (AABI).

Guy Lorberbom, Andreea Gane, Tommi Jaakkola, and Tamir Hazan. 2019. Direct optimization through argmax for discrete variational auto-encoder. In Advances in Neural Information Processing Systems, pages 6203–6214.

Minh-Thang Luong, Hieu Pham, and Christopher D Manning. 2015. Effective approaches to attention-based neural machine translation. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pages 1412–1421.

Chris J Maddison, Andriy Mnih, and Yee Whye Teh. 2016. The concrete distribution: A continuous relaxation of discrete random variables. arXiv preprint arXiv:1611.00712.

Jiří Materna. 2012. LDA-Frames: an unsupervised approach to generating semantic frames. In Computational Linguistics and Intelligent Text Processing, pages 376–387. Springer.

Marvin Minsky. 1974. A framework for representing knowledge. MIT-AI Laboratory Memo 306.

Ashutosh Modi. 2016. Event embeddings for semantic script modeling. In Proceedings of The 20th SIGNLL Conference on Computational Natural Language Learning, pages 75–83.

Narges Mohammadi, Marvin M Doyley, and Mujdat Cetin. 2021. Ultrasound elasticity imaging using physics-based models and learning-based plug-and-play priors. arXiv preprint arXiv:2103.14096.

Raymond J Mooney and Gerald DeJong. 1985. Learning schemata for natural language processing. In IJCAI, pages 681–687.

Seyedahmad Mousavi, Mohammad Mehdi Rezaee Taghiabadi, and Ramin Ayanzadeh. 2019. A survey on compressive sensing: Classical results and recent advancements. arXiv preprint arXiv:1908.01014.

Ankur Padia, Francis Ferraro, and Tim Finin. 2018. Team UMBC-FEVER: Claim verification using semantic lexical resources. In Proceedings of the First Workshop on Fact Extraction and VERification (FEVER), pages 161–165, Brussels, Belgium. Workshop at EMNLP.

Haoruo Peng and Dan Roth. 2016. Two discourse driven language models for semantics. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 290–300, Berlin, Germany. Association for Computational Linguistics.

Karl Pichotta and Raymond J Mooney. 2016. Learning statistical scripts with LSTM recurrent neural networks. In Thirtieth AAAI Conference on Artificial Intelligence.

Eugénio Ribeiro, Vânia Mendonça, Ricardo Ribeiro, David Martins de Matos, Alberto Sardinha, Ana Lúcia Santos, and Luísa Coheur. 2019. L2F/INESC-ID at SemEval-2019 task 2: Unsupervised lexical semantic frame induction using contextualized word representations. In SemEval@NAACL-HLT.

Rachel Rudinger, Pushpendre Rastogi, Francis Ferraro, and Benjamin Van Durme. 2015. Script induction as language modeling. In EMNLP.

Roger C Schank and Robert P Abelson. 1977. Scripts, plans, goals, and understanding: an inquiry into human knowledge structures.

Casper Kaae Sønderby, Tapani Raiko, Lars Maaløe, Søren Kaae Sønderby, and Ole Winther. 2016. Ladder variational autoencoders. In Advances in Neural Information Processing Systems, pages 3738–3746.

Michael Teng, Tuan Anh Le, Adam Scibior, and Frank Wood. 2020. Semi-supervised sequential generative models. In Conference on Uncertainty in Artificial Intelligence, pages 649–658. PMLR.

Arash Vahdat, Evgeny Andriyash, and William Macready. 2018a. DVAE#: Discrete variational autoencoders with relaxed Boltzmann priors. In Advances in Neural Information Processing Systems, pages 1864–1874.

Arash Vahdat, William G Macready, Zhengbing Bian, Amir Khoshaman, and Evgeny Andriyash. 2018b. DVAE++: Discrete variational autoencoders with overlapping transformations. arXiv preprint arXiv:1802.04920.

Aaron Van Den Oord, Oriol Vinyals, et al. 2017. Neural discrete representation learning. In Advances in Neural Information Processing Systems, pages 6306–6315.

Noah Weber, Niranjan Balasubramanian, and Nathanael Chambers. 2018a. Event representations with tensor-based compositions. In Thirty-Second AAAI Conference on Artificial Intelligence.

Noah Weber, Rachel Rudinger, and Benjamin Van Durme. 2020. Causal inference of script knowledge. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP).

Noah Weber, Leena Shekhar, Niranjan Balasubramanian, and Nathanael Chambers. 2018b. Hierarchical quantized representations for script generation. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 3783–3792.

Rong Ye, Wenxian Shi, Hao Zhou, Zhongyu Wei, and Lei Li. 2020. Variational template machine for data-to-text generation. arXiv preprint arXiv:2002.01127.

Zhuosheng Zhang, Yuwei Wu, Hai Zhao, Zuchao Li, Shuailiang Zhang, Xi Zhou, and Xiang Zhou. 2020. Semantics-aware BERT for language understanding. In AAAI.

A Table of Notation

Symbol   Description                                          Dimension
F        Frames vocabulary size                               ℕ
V        Tokens vocabulary size                               ℕ
M        Number of events, per sequence                       ℕ
T        Number of words, per sequence (4M)                   ℕ
d_e      Frame emb. dim.                                      ℕ
d_h      RNN hidden state dim.                                ℕ
E^F      Learned frame emb.                                   R^{F×d_e}
E^M      Embeddings of sampled frames (E^M = f E^F)           R^{M×d_e}
I_m      Observed frame (external one-hot)                    R^F
f_m      Sampled frame (simplex)                              R^F
H        RNN hidden states from the encoder                   R^{T×d_h}
z_t      RNN hidden state from the decoder                    R^{d_h}
W_in     Learned frames-to-hidden-states weights (Encoder)    R^{d_h×d_e}
Ŵ_in     Learned hidden-states-to-frames weights (Decoder)    R^{d_e×d_h}
W_out    Contextualized frame emb. (Encoder)                  R^{F×d_h}
Ŵ_out    Learned frames-to-words weights (Decoder)            R^{V×d_e}
α        Attention scores over hidden states (Encoder)        R^T
α̂        Attention scores over frame emb. (Decoder)           R^M
c_m      Context vector (Encoder)                             R^{d_h}
c_t      Context vector (Decoder)                             R^{d_e}
γ_m      Gumbel-Softmax params. (Encoder)                     R^F
w_t      Tokens (one-hot)                                     R^V

Table 4: Notations used in this paper

[Figure 4 diagram. Panel (a) VQVAE: query, min distance. Panel (b) Gumbel-Softmax and Emb (ours): query (simplex), vector-to-matrix multiplication.]

Figure 4: (a) VQ-VAE in HAQAE works based on the minimum distance between the query vector and the embeddings. (b) Our approach first draws a Gumbel-Softmax sample and then extracts the relevant row by doing vector-to-matrix multiplication.
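The contrast drawn in Figure 4 between a nearest-neighbor lookup and a Gumbel-Softmax sample followed by a vector-to-matrix product can be made concrete with a small sketch. It is an illustration under stated assumptions, not the released implementation: the function names and the toy embedding table are hypothetical, plain Python lists stand in for tensors, and the temperature τ = 0.5 is the value reported in the Computing Infrastructure appendix.

```python
import math
import random

def nearest_row(query, table):
    """VQ-VAE-style lookup: index of the embedding row closest to the query."""
    dists = [sum((q - e) ** 2 for q, e in zip(query, row)) for row in table]
    return min(range(len(table)), key=dists.__getitem__)

def gumbel_softmax(logits, tau, rng):
    """Draw a relaxed one-hot sample on the simplex (Jang et al., 2017)."""
    noisy = []
    for logit in logits:
        u = max(rng.random(), 1e-12)          # clamp away from 0 to avoid log(0)
        gumbel = -math.log(-math.log(u))      # Gumbel(0, 1) noise
        noisy.append((logit + gumbel) / tau)
    peak = max(noisy)                         # subtract the max for stability
    exps = [math.exp(v - peak) for v in noisy]
    total = sum(exps)
    return [v / total for v in exps]

def embed(frame, table):
    """Vector-to-matrix product f E^F: a weighted mix of embedding rows."""
    width = len(table[0])
    return [sum(w * row[d] for w, row in zip(frame, table)) for d in range(width)]

rng = random.Random(0)
E_F = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # toy frame embeddings: F = 3, d_e = 2

hard_index = nearest_row([0.9, 0.8], E_F)    # (a) deterministic minimum distance
f = gumbel_softmax([2.0, 0.5, 0.1], tau=0.5, rng=rng)
soft_embedding = embed(f, E_F)               # (b) stochastic sample, then f E^F
```

When the sampled vector is close to one-hot, the product in (b) returns approximately a single row of the table, which mirrors the row selection in (a) while keeping the choice stochastic.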

B Computing Infrastructure

We used the Adam optimizer with initial learning rate 10^-3 and early stopping (lack of validation performance improvement for 10 iterations). We represent events by their pretrained GloVe 300 embeddings and utilized gradient clipping at 5.0 to prevent exploding gradients. The Gumbel-Softmax temperature is fixed to τ = 0.5. We have not used dropout or batch norm on any layer. We have used two layers of bidirectional GRU cells with a hidden dimension of 512 for the encoder module and a unidirectional GRU with the same configuration for the decoder. Each model was trained using a single GPU (a TITAN RTX, RTX 2080 TI, or a Quadro 8000), though we note that neither our models nor the baselines required the full memory of any GPU (e.g., our models used roughly 6GB of GPU memory for a batch of 100 documents).

C Additional Insights into Novelty

We have previously mentioned how our work is most similar to HAQAE (Weber et al., 2018b). In this section, we provide a brief overview of HAQAE (Appendix C.1) and then highlight differences (Fig. 4), with examples (Appendix C.2).

C.1 Overview of HAQAE

HAQAE provides an unsupervised tree structure based on the vector quantization variational autoencoder (VQVAE) over M latent variables. Each latent variable $z_i$ is defined in the embedding space denoted as $e$. The variational distribution to approximate $z = \{z_1, z_2, \ldots, z_M\}$ given tokens $x$ is defined as follows:

\[
q(z \mid x) = q_0(z_0 \mid x) \prod_{i=1}^{M-1} q_i(z_i \mid \mathrm{parent\_of}(z_i), x).
\]

The encoder calculates the attention over the input RNN hidden states $h_x$ and the parent of $z_i$ to define $q_i(z_i = k \mid z_{i-1}, x)$:

\[
q_i(z_i = k \mid z_{i-1}, x) =
\begin{cases}
1 & k = \arg\min_j \lVert g_i(x, \mathrm{parent\_of}(z_i)) - e_{ij} \rVert_2 \\
0 & \text{otherwise,}
\end{cases}
\]

where $g_i(x, \mathrm{parent\_of}(z_i))$ computes bilinear attention between $h_x$ and $\mathrm{parent\_of}(z_i)$.

In this setting, the variational distribution is deterministic; right after deriving the latent query vector, it is compared with a lookup embedding table and the row with minimum distance is selected (see Fig. 4a). The decoder reconstructs the tokens as $p(x_i \mid z)$, which calculates the attention over latent variables and the RNN hidden states. A reconstruction loss $L^R_j$ and a "commit" loss (Weber et al., 2018b) $L^C_j$ force $g_j(x, \mathrm{parent\_of}(z_i))$ to be close to the embedding referred to by $q_i(z_i)$. Both the $L^R_j$ and $L^C_j$ terms rely on a deterministic mapping that is based on a nearest neighbor computation, which makes it difficult to inject guiding information into the latent variable.

C.2 Frame Vector Norm

Like HAQAE, we use embeddings $E^F$ instead of directly using the Gumbel-Softmax frame samples. In our model definition, each frame $f_m = [f_{m,1}, f_{m,2}, \ldots, f_{m,F}]$ sampled from the Gumbel-Softmax distribution is a simplex vector:

\[
0 \le f_{m,i} \le 1, \qquad \sum_{i=1}^{F} f_{m,i} = 1. \tag{8}
\]

So $\lVert f_m \rVert_p = \left( \sum_{i=1}^{F} f_{m,i}^{p} \right)^{1/p} \le F^{1/p}$. After sampling a frame simplex vector, we multiply it by the frame embeddings matrix $E^F$. With an appropriate temperature for Gumbel-Softmax, the simplex would be approximately a one-hot vector, and the multiplication maps the simplex vector to the embedding space without any limitation on the norm.

D More Results

In this section, we provide additional quantitative and qualitative results, to supplement what was presented in §4.

D.1 Standard Deviation for Frame Prediction (§4.3)

In Table 5, we see the average results of classification metrics with their corresponding standard deviations.

                        Valid                                        Test
Model          ε        Acc           Prec          F1             Acc           Prec          F1
RNNLM          -        0.89 ±0.001   0.73 ±0.004   0.66 ±0.008    0.88 ±0.005   0.71 ±0.014   0.65 ±0.006
RNNLM + ROLE   -        0.89 ±0.004   0.75 ±0.022   0.69 ±0.026    0.88 ±0.005   0.74 ±0.017   0.68 ±0.020
Ours           0.00     0.00 ±0.001   0.00 ±0.001   0.00 ±0.000    0.00 ±0.001   0.00 ±0.000   0.00 ±0.000
Ours           0.20     0.59 ±0.020   0.27 ±0.005   0.28 ±0.090    0.58 ±0.010   0.27 ±0.050   0.28 ±0.010
Ours           0.40     0.77 ±0.012   0.49 ±0.130   0.50 ±0.010    0.77 ±0.010   0.49 ±0.060   0.50 ±0.010
Ours           0.50     0.79 ±0.010   0.51 ±0.080   0.48 ±0.080    0.79 ±0.009   0.50 ±0.080   0.48 ±0.050
Ours           0.70     0.85 ±0.007   0.69 ±0.050   0.65 ±0.040    0.84 ±0.013   0.69 ±0.050   0.65 ±0.020
Ours           0.80     0.86 ±0.003   0.77 ±0.013   0.74 ±0.006    0.85 ±0.005   0.76 ±0.008   0.74 ±0.010
Ours           0.90     0.87 ±0.002   0.81 ±0.007   0.78 ±0.006    0.86 ±0.011   0.81 ±0.020   0.79 ±0.010

Table 5: Accuracy and macro precision and F1-score, averaged across 3 runs, with standard deviation reported.

D.2 Importance of Using Addition rather than Concatenation in Attention

To demonstrate the effectiveness of our proposed attention mechanism, we compare the addition method against the concatenation (regular bilinear attention) method. We report the results on the Wikipedia dataset in Table 6. Experiments indicate that the regular bilinear attention structure with larger ε obtains worse performance. These results support the claim that the proposed approach benefits from the addition structure.

         Addition                       Concatenation
ε        Valid          Test            Valid          Test
0.0      41.18 ±0.69    36.28 ±0.74     48.86 ±0.13    42.76 ±0.08
0.2      38.52 ±0.83    33.31 ±0.63     48.73 ±0.18    42.76 ±0.06
0.4      37.79 ±0.52    33.12 ±0.54     49.34 ±0.32    43.18 ±0.21
0.5      35.84 ±0.66    31.11 ±0.85     49.83 ±0.24    43.90 ±0.32
0.7      24.20 ±1.07    21.19 ±0.76     52.41 ±0.38    45.97 ±0.48
0.8      23.68 ±0.75    20.77 ±0.73     55.13 ±0.31    48.27 ±0.21
0.9      22.52 ±0.62    19.84 ±0.52     58.63 ±0.25    51.39 ±0.34

Table 6: Validation and test per-word perplexities with addition versus concatenation (bilinear attention); lower is better.

D.3 Generated Sentences

Recall that the reconstruction loss is

\[
L_w \approx \frac{1}{S} \sum_{s} \log p\left(w \mid f_1^{(s)}, f_2^{(s)}, \ldots, f_M^{(s)}; z\right),
\]

where $f_m^{(s)} \sim q(f_m \mid f_{m-1}^{(s)}, I_m, w)$. Based on these formulations, we provide some examples of generated scripts. Given the seed, the model first predicts the first frame $f_1$, then it predicts the next verb $v_1$ and similarly samples the tokens one by one. During event generation, if the sampled token is unknown, the decoder samples again. As we see in Table 7, the generated events and frame samples are consistent, which shows the ability of the model in event representation.

D.4 Inferred Frames

Using Alg. 1, we can see the frames sampled during training and validation. In Table 8, we provide some examples of frame inference for both training and validation examples. We observe that for training examples when ε > 0.5, almost all the observed frames and inferred frames are the same. In other words, the model prediction is almost the same as the ground truth.

Interestingly, the model is more flexible in sampling the latent frames (orange ones). In Table 8a the model is estimating HAVE_ASSOCIATED instead of the POSSESSION frame. In Table 8b, instead of PROCESS_START we have ACTIV-