Undersensitivity in Neural Reading Comprehension


Johannes Welbl†∗, Pasquale Minervini†, Max Bartolo†, Pontus Stenetorp†, Sebastian Riedel†

†University College London

{j.welbl,p.minervini,m.bartolo,p.stenetorp,s.riedel}@cs.ucl.ac.uk

Abstract et al., 2018), semantically invariant reformula-

tions (Ribeiro et al., 2018b; Iyyer et al., 2018a) or –

Current reading comprehension methods gen- specifically in Reading Comprehension (RC) – ad-

eralise well to in-distribution test sets, yet

versarial text insertions (Jia and Liang, 2017; Wang

perform poorly on adversarially selected data.

Prior work on adversarial inputs typically stud-

and Bansal, 2018). A model’s inability to handle

ies model oversensitivity: semantically invari- adversarially chosen input text puts into perspec-

ant text perturbations that cause a model’s pre- tive otherwise impressive generalisation results for

diction to change. Here we focus on the com- in-distribution test sets (Seo et al. (2017); Yu et al.

plementary problem: excessive prediction un- (2018); Devlin et al. (2019); inter alia) and con-

dersensitivity, where input text is meaning- stitutes an important caveat to conclusions drawn

fully changed but the model’s prediction does regarding a model’s comprehension abilities.

not, even though it should. We formulate an

While semantically invariant text transforma-

adversarial attack which searches among se-

mantic variations of the question for which a tions can remarkably alter a model’s predictions,

model erroneously predicts the same answer, the complementary problem of model undersen-

and with even higher probability. We demon- sitivity is equally troublesome: a model’s text in-

strate that models trained on both SQuAD2.0 put can often be drastically changed in meaning

and NewsQA are vulnerable to this attack, and while retaining the original prediction. In particular,

then investigate data augmentation and adver- previous works (Feng et al., 2018; Ribeiro et al.,

sarial training as defences. Both substantially

2018a; Welbl et al., 2020) show that even after

decrease adversarial vulnerability, which gen-

eralises to held-out data and held-out attack deletion of all but a small fraction of input words,

spaces. Addressing undersensitivity further- models often produce the same output. However,

more improves model robustness on the pre- such reduced inputs are usually unnatural to a hu-

viously introduced A DD S ENT and A DD O NE - man reader, and it is both unclear what behaviour

S ENT datasets, and models generalise better we should expect from natural language models

when facing train/evaluation distribution mis- evaluated on unnatural text, and how to use such

match: they are less prone to overly rely on unnatural inputs to improve models.

shallow predictive cues present only in the

training set, and outperform a conventional

In this work we explore RC model undersensi-

model by as much as 10.9% F1 . tivity for natural language questions, and we show

that addressing undersensitivity not only makes RC

models more sensitive where they should be, but

1 Introduction also less reliant on shallow predictive cues. Fig. 1

shows an example for a BERT L ARGE model (De-

Neural networks can be vulnerable to adversar-

vlin et al., 2019) trained on SQuAD2.0 (Rajpurkar

ial input perturbations (Szegedy et al., 2013; Ku-

et al., 2018) that is given a text and a comprehen-

rakin et al., 2016). In Natural Language Pro-

sion question, i.e. What was Fort Caroline renamed

cessing (NLP), which operates on discrete sym-

to after the Spanish attack? which it correctly an-

bol sequences, adversarial attacks can take a vari-

swers as San Mateo with 98% probability. Altering

ety of forms (Ettinger et al., 2017; Alzantot et al.,

this question, however, can increase model proba-

2018) including character perturbations (Ebrahimi

bility for this same prediction to 99%, although the

∗

Now at DeepMind. new question is unanswerable given the same con-

1152

Findings of the Association for Computational Linguistics: EMNLP 2020, pages 1152–1165

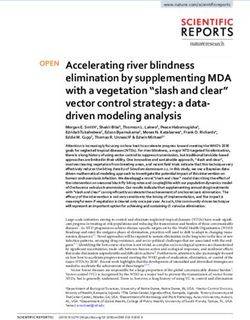

November 16 - 20, 2020. c 2020 Association for Computational LinguisticsOriginal Example ( q):

What was Fort Caroline renamed to after the Spanish attack? San Mateo (0.98)

F. Caroline → R.Oppenheimer Spanish → Hungarian

Given Text: The nearby

qadv q F.Caroline → Fort Knox

Spanish settlement of St.

q 0 0.99 Augustine attacked Fort

Caroline, and killed nearly all

the French soldiers defending

0.95 it. The Spanish renamed the

fort San Mateo […]

0.90

Adversarial Example ( qadv ):

What was Robert Oppenheimer renamed to after the Spanish attack? San Mateo (0.99)

Figure 1: Method Overview: Adversarial search over semantic variations of RC questions, producing unanswerable

questions for which the model retains its predictions with even higher probability.

text. That is, we observe an increase in probability the resulting models generalise better on the adver-

despite removing relevant question information and sarial datasets of Jia and Liang (2017), and in the

replacing it with new, irrelevant content. biased data setting of Lewis and Fan (2019).

We formalise the process of finding such ques-

tions as an adversarial search in a discrete space 2 Related Work

arising from perturbations of the original question.

There are two types of discrete perturbations we Adversarial Attacks in NLP Adversarial exam-

consider, based on part-of-speech tags and named ples have been studied extensively in NLP – see

entities, with the aim of obtaining grammatical and Zhang et al. (2019) for a recent survey. Yet automat-

semantically consistent alternative questions that ically generating adversarial inputs is non-trivial,

do not accidentally have the same correct answer. as altering a single word can change the seman-

We find that SQuAD2.0 and NewsQA models can tics of an instance or render it incoherent. Prior

be attacked on a substantial proportion of samples. work typically considers semantic-invariant input

The observed undersensitivity correlates nega- transformations to which neural models are over-

tively with in-distribution test set performance met- sensitive. For instance, Ribeiro et al. (2018b) use a

rics (EM/F1 ), suggesting that this phenomenon – set of simple perturbations such as replacing Who

where present – is indeed a reflection of a model’s is with Who’s. Other semantics-preserving pertur-

lack of question comprehension. When training bations include typos (Hosseini et al., 2017), the

models to defend against undersensitivity attacks addition of distracting sentences (Jia and Liang,

with data augmentation and adversarial training, 2017; Wang and Bansal, 2018), character-level ad-

we observe that they can generalise their robust- versarial perturbations (Ebrahimi et al., 2018; Be-

ness to held out evaluation data without sacrificing linkov and Bisk, 2018), and paraphrasing (Iyyer

in-distribution test set performance. Furthermore, et al., 2018b). In this work, we focus on the com-

the models improve on the adversarial datasets pro- plementary problem of undersensitivity of neural

posed by Jia and Liang (2017), and behave more RC models to semantic perturbations of the input.

robustly in a learning scenario that has dataset bias Our method is based on the idea that modifying,

with a train / evaluation distribution mismatch, in- for instance, the named entities in a question can

creasing performance by up to 10.9%F1 . completely change its meaning and, as a conse-

List of Contributions: i) We propose a new quence, the question should become unanswerable

type of adversarial attack exploiting the undersen- given the context. Our approach does not assume

sitivity of neural RC models to input changes, and white-box access to the model, as do e.g. Ebrahimi

show that contemporary models are vulnerable to it; et al. (2018) and Wallace et al. (2019).

ii) We compare data augmentation and adversarial Undersensitivity Jacobsen et al. (2019) demon-

training as defences, and show their effectiveness strated classifier undersensitivity in computer vi-

at reducing undersensitivity errors on both held-out sion. Niu and Bansal (2018) investigated undersen-

data and held-out perturbations without sacrificing sitivity in dialogue models and addressed the prob-

nominal test performance; iii) We demonstrate that lem with a max-margin training approach. Ribeiro

et al. (2018a) describe a general model diagnosis tool to identify minimal feature sets that are sufficient for a model to form high-confidence predictions. Feng et al. (2018) showed that it is possible to reduce inputs to minimal input word sequences without changing a model’s predictions. Welbl et al. (2020) investigated formal verification against undersensitivity to text deletions. We see our work as a continuation of these lines of inquiry, with a particular focus on undersensitivity in RC. In contrast to Feng et al. (2018) and Welbl et al. (2020) we consider concrete alternative questions, rather than arbitrarily reduced input word sequences, and furthermore address the observed phenomenon using dedicated training objectives, in contrast to Feng et al. (2018) and Ribeiro et al. (2018a) who simply highlight it. Gardner et al. (2020) and Kaushik et al. (2020) also recognise the problem of models learning shallow but successful heuristics, and propose counterfactual data annotation paradigms as prevention. The perturbations used in this work define such counterfactual samples. Their composition does not require additional annotation efforts, and we furthermore adapt an adversarial perspective on the choice of such samples. Finally, one of the methods we evaluate for defending against undersensitivity attacks is a form of data augmentation that has similarly been used for de-biasing NLP models (Zhao et al., 2018; Lu et al., 2018).

Concurrent work on model CHECKLIST evaluation (Ribeiro et al., 2020) includes an invariance test which also examines model undersensitivity. In contrast to CHECKLIST, our work focuses with more detail on the analysis of the invariance phenomenon, the automatic generation of probing samples, an investigation of concrete methods to overcome undesirably invariant model behaviour, and shows that adherence to invariance tests leads to more robust model generalisation.

Unanswerable Questions in RC. Rajpurkar et al. (2018) proposed the SQuAD2.0 dataset, which includes over 43,000 human-curated unanswerable questions. NewsQA is a second dataset with unanswerable questions, in the news domain (Trischler et al., 2017). Training on these datasets should conceivably result in models with an ability to tell whether questions are answerable or not; we will however see that this does not extend to adversarially chosen unanswerable questions. Hu et al. (2019) address unanswerability of questions from a given text using additional verification steps. Other approaches have shown the benefit of synthetic data to improve performance in SQuAD2.0 (Zhu et al., 2019; Alberti et al., 2019). In contrast to prior work, we demonstrate that despite improving performance on test sets that include unanswerable questions, the problem persists when adversarially choosing from a larger space of questions.

3 Methodology

Problem Overview. Consider a discriminative model $f_\theta$, parameterised by a collection of vectors $\theta$, which transforms an input $x$ into a prediction $\hat{y} = f_\theta(x)$. In our task, $x = (t, q)$ is a given text $t$ paired with a question $q$ about this text. The label $y$ is the answer to $q$ where it exists, or a NoAnswer label where it cannot be answered.¹

¹ Unanswerable questions are part of, e.g. the SQuAD2.0 and NewsQA datasets, but not SQuAD1.1.

In an RC setting, the set of possible answers is large, and predictions $\hat{y}$ should be dependent on $x$. And indeed, randomly choosing a different input $(t', q')$ usually changes the model prediction $\hat{y}$. However, there exist many examples where the prediction erroneously remains stable; the goal of the attack formulated here is to find such cases. Formally, the goal is to discover inputs $x'$ for which $f_\theta$ still erroneously predicts $f_\theta(x') = f_\theta(x)$, even though $x'$ is not answerable from the text.

Identifying suitable candidates for $x'$ can be achieved in manifold ways. One approach is to search among a large question collection, but we find this to only rarely be successful; an example is shown in Table 8, Appendix E. Generating $x'$, on the other hand, is prone to result in ungrammatical or otherwise ill-formed text. Instead, we consider a perturbation space $X_T(x)$ spanned by perturbing original inputs $x$ using a perturbation function family $T$: $X_T(x) = \{T_i(x) \mid T_i \in T\}$. This space $X_T(x)$ contains alternative model inputs derived from $x$. Ideally the transformation function family $T$ is chosen such that the correct label of these inputs is changed, and the question becomes unanswerable. We will later search within $X_T(x)$ to find inputs $x'$ which erroneously retain the same prediction as $x$: $\hat{y}(x) = \hat{y}(x')$.

Part-of-Speech (PoS) Perturbations. We first consider the perturbation space $X_{T_P}(x)$ with PoS perturbations $T_P$ of the original question: we swap individual tokens with other, PoS-consistent alternative tokens, drawing from large collections of tokens of the same PoS types. For example, we might

alter the question “Who patronized the monks in Italy?” to “Who betrayed the monks in Italy?” by replacing the past tense verb “patronized” with “betrayed”. There is however no guarantee that the altered question will require a different answer (e.g. due to synonyms). Even more so – there might be type clashes or other semantic inconsistencies. We perform a qualitative analysis to investigate the extent of this problem and find that, while a valid concern, for the majority of attackable samples (at least 51%) there exist attacks based on correct well-formed questions (see Section 5).

Named Entity (NE) Perturbations. The space $X_{T_E}(x)$ of the transformation family $T_E$ is created by substituting NE mentions in the question with different type-consistent NE, derived from a large set $E$. For example, a question “Who patronized the monks in Italy?” could be altered to “Who patronized the monks in Las Vegas?”, replacing the geopolitical entity “Italy” with “Las Vegas”, chosen from $E$. Altering NE often changes the question specifics and alters the answer requirements, which are unlikely to be satisfied from what is stated in the given text, given the broad nature of entities in $E$. While perturbed questions are not guaranteed to be unanswerable or require a different answer, we will in a later analysis see that for the large majority of cases (at least 84%) they do.

Undersensitivity Attacks. Thus far we have described methods for perturbing questions. We will search in the resulting perturbation spaces $X_{T_P}(x)$ and $X_{T_E}(x)$ for inputs $x'$ for which model prediction remains constant. However, we pose a slightly stronger and more conservative requirement to rule out cases where the prediction is retained, but with lower probability: $f_\theta$ should assign a higher probability to the same prediction $\hat{y}(x) = \hat{y}(x')$ than for the original input:

$$P(\hat{y} \mid x') > P(\hat{y} \mid x) \qquad (1)$$

To summarise, we are searching in a perturbation space for altered questions which result in a higher model probability to the same answer as the original input question. If we have found an altered question that satisfies inequality (1), then we have identified a successful attack, which we will refer to as an undersensitivity attack.

Adversarial Search in Perturbation Space. In its simplest form, a search for an adversarial attack in the previously defined spaces amounts to a search over a list of single lexical alterations for the maximum (or any) higher prediction probability. We can however repeat the replacement procedure multiple times, arriving at texts with larger lexical distance to the original question. For example, in two iterations of PoS-consistent lexical replacement, we can alter “Who was the duke in the battle of Hastings?” to inputs like “Who was the duke in the expedition of Roger?” The space of possibilities grows combinatorially, and with increasing perturbation radius it becomes computationally infeasible to comprehensively cover the full perturbation space arising from iterated substitutions. To address this, we follow Feng et al. (2018) and apply beam search to narrow the search space, and seek to maximise the difference $\Delta = P(\hat{y} \mid x') - P(\hat{y} \mid x)$. Beam search is conducted up to a pre-specified maximum perturbation radius $\rho$, but once $x'$ with $\Delta > 0$ has been found, we stop the search.

Relation to Attacks in Prior Work. Note that this type of attack stands in contrast to other attacks based on small, semantically invariant input perturbations which investigate oversensitivity problems. Such semantic invariance comes with stronger requirements and relies on synonym dictionaries (Ebrahimi et al., 2018) or paraphrases harvested from back-translation (Iyyer et al., 2018a), which are both incomplete and noisy. Our attack is instead focused on undersensitivity, i.e. where the model is stable in its prediction even though it should not be. Consequently the requirements are not as difficult to fulfil when defining perturbation spaces that alter the question meaning, and one can rely on sets of entities and PoS examples automatically extracted from a large text collection.

4 Experiments: Model Vulnerability

Training and Dataset Details. We next conduct experiments using the attacks laid out above to investigate model undersensitivity. We attack the BERT model fine-tuned on SQuAD2.0, and measure to what extent the model exhibits undersensitivity to adversarially chosen inputs. Our choice of BERT is motivated by the currently widespread adoption of its variants across the NLP field, and empirical success across a wide range of datasets. SQuAD2.0 per design contains unanswerable questions; models are thus trained to predict a NoAnswer option where a comprehension question cannot be answered. As the test set is unavailable, we split off 5% from the original training set for development purposes and retain the remaining

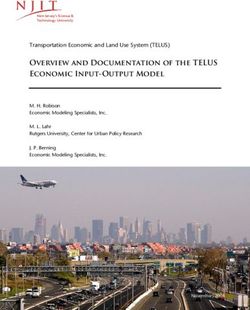

(a) Part of Speech perturbations. (b) Named Entity perturbations.

Figure 2: BERT LARGE on SQuAD2.0: vulnerability to noisy attacks on held out data for differently sized attack spaces (parameter η) and different beam search depth (perturbation radius ρ).
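The two parameters in Figure 2, together with the beam width b, can be illustrated with a minimal sketch of the search over named-entity substitutions. This is not the authors' implementation: the function name `undersensitivity_attack`, the `slots` and `entity_pool` structures, the entity type labels, the toy scoring function `toy_prob` and the decision to return the first candidate with Δ > 0 are all assumptions made for illustration. In a real attack, `toy_prob` would be replaced by the probability a trained RC model assigns to its original answer for the fixed text.

```python
import random
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Tokens = Tuple[str, ...]  # a question, with each entity mention kept as one element


def undersensitivity_attack(
    question: Tokens,
    slots: Dict[int, str],                  # token position -> entity type (hypothetical labels)
    entity_pool: Dict[str, Sequence[str]],  # entity type -> replacement candidates (the set E)
    prob_fn: Callable[[Tokens], float],     # P(original answer | text, question); text is fixed
    eta: int = 32,                          # candidates sampled per beam item and step
    rho: int = 1,                           # perturbation radius (maximum search depth)
    beam_width: int = 5,                    # b
    seed: int = 0,
) -> Optional[Tuple[Tokens, float]]:
    """Return (perturbed question, delta) for the first candidate with delta > 0, else None."""
    rng = random.Random(seed)
    p_orig = prob_fn(question)
    beam: List[Tokens] = [question]
    for _ in range(rho):
        scored: List[Tuple[float, Tokens]] = []
        for current in beam:
            for _ in range(eta):
                pos = rng.choice(sorted(slots))
                # Only substitute entities that differ from the current and the original mention.
                options = [e for e in entity_pool[slots[pos]]
                           if e not in (current[pos], question[pos])]
                if not options:
                    continue
                cand = current[:pos] + (rng.choice(options),) + current[pos + 1:]
                delta = prob_fn(cand) - p_orig
                if delta > 0:  # inequality (1) holds: stop the search
                    return cand, delta
                scored.append((delta, cand))
        if not scored:
            return None
        # Keep the b candidates with the largest delta for the next substitution round.
        scored.sort(key=lambda item: item[0], reverse=True)
        beam = [cand for _, cand in scored[:beam_width]]
    return None


def toy_prob(q: Tokens) -> float:
    # Stand-in for a trained model: it keys on "renamed" and barely looks at the entity.
    base = 0.98 if "renamed" in q else 0.10
    return base + (0.01 if "Fort Caroline" not in q else 0.0)


question: Tokens = ("What", "was", "Fort Caroline", "renamed", "to",
                    "after", "the", "Spanish", "attack", "?")
slots = {2: "FAC", 7: "NORP"}
pool = {"FAC": ["Fort Caroline", "Fort Knox"], "NORP": ["Spanish", "Hungarian"]}

result = undersensitivity_attack(question, slots, pool, toy_prob, eta=32, rho=1)
print(result)
```

Because each beam item is expanded with at most η sampled candidates per step, the loop calls the model at most b · ρ · η times per sample, plus once for the original question.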

95% for training, stratified by articles. The original SQuAD2.0 development set is then used as evaluation data, where the model reaches 73.0% EM and 76.5% F1; we will compute the undersensitivity attacks on this entirely held out part of the dataset. Appendix B provides further training details.

Attack Details. To compute the perturbation spaces, we gather large collections of NE and PoS expressions across types that define the perturbation spaces $T_E$ and $T_P$, which we gather from the Wikipedia paragraphs used in the SQuAD2.0 training set, with the pretrained taggers in spaCy, and the Penn Treebank tag set for PoS. This results on average in 5,126 different entities per entity type, and 2,337 different tokens per PoS tag. When computing PoS perturbations, we found it useful to disregard perturbations of particular PoS types that often led to only minor changes or incorrectly formed expressions, such as punctuation or determiners; details on these can be found in Appendix A. As the number of possible perturbations to consider is potentially very large, we limit the beam search at each step to a maximum of $\eta$ randomly chosen type-consistent entities from $E$, or tokens from $P$, and re-sample these throughout the search. We use a beam width of $b = 5$, resulting in a bound to the total computation spent on adversarial search of $b \cdot \rho \cdot \eta$ model evaluations per sample, where $\rho$ is the perturbation “radius” (maximum search depth).

We quantify vulnerability to the described attacks by measuring the fraction of evaluation samples for which at least one undersensitivity attack is found given a computational search budget, disregarding cases where a model predicts NoAnswer.²

² Altering such samples likely retains their unanswerability.

Results. Fig. 2 shows plots for adversarial error rates on SQuAD2.0 for both perturbation types. We observe that attacks based on PoS perturbations can already for very small search budgets ($\eta = 32$, $\rho = 1$) reach more than 60% attack success rates, and this number can be raised to 95% with a larger computational budget. For perturbations based on NE substitution, we find overall lower attack success rates, but still find that more than half of the samples can successfully be attacked with the budgets tested. Note that where attacks were found, we observed that there often exist multiple alternatives satisfying inequality (1).

These findings demonstrate that BERT is not necessarily considering the entire content of a comprehension question given to it, and that even though trained to tell when questions are unanswerable, the model often fails when facing adversarially selected unanswerable questions.

In a side experiment we also investigated undersensitivity attacks using NE perturbations on SQuAD1.1, which proves even more vulnerable with an adversarial error rate of 70% already using $\eta = 32$; $\rho = 1$ (compared to 34% on SQuAD2.0). While this demonstrates that undersensitivity is also an issue for SQuAD1.1, the unanswerable question behaviour is not really well-defined, rendering results difficult to interpret. On the other hand, the notable drop between the datasets demonstrates the effectiveness of the unanswerable questions added during training in SQuAD2.0.

5 Analysis of Vulnerable Samples

Qualitative Analysis of Attacks. The attacks are potentially noisy, and the introduced substitutions are by no means guaranteed to result in semantically meaningful and consistent expressions, or require a different answer than the original. To gauge the extent of this we inspect 100 successful attacks conducted at $\rho = 6$ and $\eta = 256$ on SQuAD2.0, both for PoS and NE perturbations. We label them as either: i) Having a syntax error (e.g. What would platform lower if there were fewer people?). These are mostly due to cascading errors stemming from incorrect NE / PoS tag predictions. ii) Semantically incoherent (Who built the monks?). iii) Questions that require the same correct answer as the original, e.g. due to a paraphrase. iv) Valid attacks: Perturbed questions that would either demand a different answer or are unanswerable given the text (e.g. When did the United States / Tuvalu withdraw from the Bretton Woods Accord?)

Table 1 shows several example attacks along with their annotations, and in Table 2 the respective proportions are summarised. We observe that a non-negligible portion of questions has some form of syntax error or incoherent semantics, especially for PoS perturbations. Questions with the identical correct answer are comparatively rare. Finally, about half (51%) of the attacks in PoS, as well as 84% for NE are valid questions that should either have a different answer, or are unanswerable.

| Original / Modified Question | Prediction | Annotation | Scores |
|---|---|---|---|
| What city in Victoria is called the cricket ground of [Australia → the Delhi Metro Rail Corporation Limited]? | Melbourne | valid | 0.63 / 0.75 |
| What were the [annual → every year] carriage fees for the channels? | £30m | same answer | 0.95 / 0.97 |
| What percentage of Victorians are [Christian → Girlish]? | 61.1% | valid | 0.92 / 0.93 |
| Which plateau is the left [part → achievement] of Warsaw on? | moraine | sem. inconsist. | 0.52 / 0.58 |

Table 1: Example adversarial questions (original → attack, shown in brackets), together with their annotation as either a valid counterexample or other type. Top 2: Named Entity perturbations. Bottom 2: PoS perturbations.

| | PoS | NE |
|---|---|---|
| Valid attack | 51% | 84% |
| Syntax error | 10% | 6% |
| Semantically incoherent | 24% | 5% |
| Same answer | 15% | 5% |

Table 2: Analysis of undersensitivity attack samples for both PoS and named entity (NE) perturbations.

Overall the NE perturbations result in cleaner questions than PoS perturbations, which suffer from semantic inconsistencies in about a quarter (24%) of the cases. While these questions have some sort of inconsistency (e.g. What year did the case go before the supreme court? vs. a perturbed version What scorer did the case go before the supreme court?), it is remarkable that the model assigns higher probabilities to the original answer even when faced with incoherent questions, casting doubt on the extent to which the replaced question information is used to determine the answer. Since NE-based attacks have a substantially larger fraction of valid, well-posed questions, we will focus our study on these for the remainder of this paper.

Characterising Successfully Attacked Samples. We observe that models are vulnerable to undersensitivity adversaries, yet not all samples are successfully attacked. This raises the question of what distinguishes samples that can and cannot be attacked. We thus examine various characteristics, aiming to understand model vulnerability causes.

First, successfully attacked questions produce lower original prediction probabilities, on average 72.9% vs. 83.8% for unattackable questions. That is, there exists a direct inverse link between a model’s original prediction probability and sample vulnerability to an undersensitivity attack. The adversarially chosen questions had an average probability of 78.2% – a notable gap to the original questions. It is worth noting that search halted once a single question with higher probability was found; continuing the search increases the respective probabilities.

Second, vulnerable samples are furthermore less likely to be given the correct prediction overall. Concretely, evaluation metrics for vulnerable examples are 56.4%/69.6% EM/F1, compared to 73.0%/76.5% on the whole dataset (-16.6% and -6.9% EM/F1).

Attackable questions have on average 12.3 tokens, whereas unattackable ones are slightly shorter

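| Dataset | Model | Error rate @32 | Error rate @64 | Error rate @128 | Error rate @256 | HasAns EM | HasAns F1 | NoAns EM/F1 | Overall EM | Overall F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| SQuAD | BERT LARGE | 44.0 | 50.3 | 52.7 | 54.7 | 70.1 | 77.1 | 76.0 | 73.0 | 76.5 |
| SQuAD | + Data Augment. | 4.5 | 9.1 | 11.9 | 18.9 | 66.1 | 72.2 | 80.7 | 73.4 | 76.5 |
| SQuAD | + Adv. Training | 11.0 | 15.9 | 22.8 | 28.3 | 69.0 | 76.4 | 77.1 | 73.0 | 76.7 |
| NewsQA | BERT BASE | 34.2 | 34.7 | 36.4 | 37.3 | 41.6 | 53.1 | 61.6 | 45.7 | 54.8 |
| NewsQA | + Data Augment. | 7.1 | 11.6 | 17.5 | 20.8 | 41.5 | 53.6 | 62.1 | 45.8 | 55.3 |
| NewsQA | + Adv. Training | 20.1 | 24.1 | 26.9 | 29.1 | 39.0 | 50.4 | 67.1 | 44.8 | 53.9 |

Table 3: Breakdown of undersensitivity error rate overall (lower is better), and standard performance metrics (EM, F1; higher is better) on different subsets of SQuAD2.0 and NewsQA evaluation data, all in [%]. Error rate columns give the undersensitivity error rate at adversarial budget η = 32, 64, 128 and 256.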
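The undersensitivity error rate reported in Table 3 is described in the text as the fraction of evaluation samples for which at least one attack is found within the search budget, disregarding samples where the model predicts NoAnswer. A minimal sketch of that computation follows. The `AttackOutcome` record and the function name are hypothetical, and the sketch assumes that NoAnswer predictions are excluded from both numerator and denominator, which the text does not state explicitly.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class AttackOutcome:
    predicted_answer: Optional[str]  # None stands for a NoAnswer prediction (assumption)
    attack_found: bool               # True if some perturbed question satisfied inequality (1)


def undersensitivity_error_rate(outcomes: Iterable[AttackOutcome]) -> float:
    """Percentage of considered samples with at least one successful attack."""
    considered = [o for o in outcomes if o.predicted_answer is not None]
    if not considered:
        return 0.0
    attacked = sum(1 for o in considered if o.attack_found)
    return 100.0 * attacked / len(considered)


# Toy evaluation set: three answered samples (two attackable) and one NoAnswer prediction.
outcomes = [
    AttackOutcome("San Mateo", True),
    AttackOutcome("Melbourne", True),
    AttackOutcome("61.1%", False),
    AttackOutcome(None, False),
]
rate = undersensitivity_error_rate(outcomes)
print(f"{rate:.1f}")
```

Running the same function on outcomes collected at different values of η would give one error rate per budget, which is how the four error rate columns of the table are organised.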

are particularly error-prone. A possible explanation

can be provided by observations regarding (non-

contextualised) word vectors, which cluster geopo-

litical entities (e.g. countries) together, thus making

them harder to distinguish for a model operating

on these embeddings (Mikolov et al., 2013).

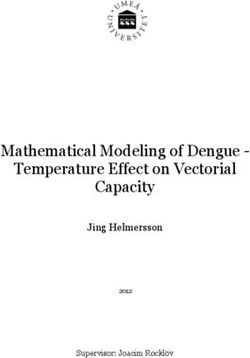

Figure 3: Named entity type characteristics of attack-

6 Robustness to Undersensitivity Attacks

able vs. unattackable samples. We will now investigate methods for mitigating

excessive model undersensitivity. Prior work has

considered both data augmentation and adversar-

with on average 11.1 tokens. ial training for more robust models; we will con-

Next we considered the distribution of differ- duct experiments with both. Adding a robustness

ent question types (What, Who, When, ...) for both objective can negatively impact standard test met-

attackable and unattackable samples and did not ob- rics (Tsipras et al., 2019), and it should be noted

serve notable differences apart from the single most that there exists a natural trade-off between perfor-

frequent question type What; it is a lot more preva- mance on one particular test set and performance

lent among the unattacked questions (56.4%) than on a dataset of adversarial inputs. We perform data

among successfully attacked questions (42.1%). augmentation and adversarial training by adding a

This is by far the most common question type, and corresponding loss term to the log-likelihood train-

furthermore one that is comparatively open-ended ing objective: LTotal = Lllh (Ω)+λ·Lllh (Ω0 ) where

and does not prescribe particular type expectations Ω is the standard training data, fit with a discrim-

to its answer, as e.g., a Where question would re- inative log-likelihood objective, Ω0 either a set of

quire a location. A possible explanation for the augmentation data points, or of successful adver-

prevalence of the What questions among unsuc- sarial attacks where they exist, and λ ∈ R+ a hy-

cessfully attacked samples is that the model cannot perparameter. In data augmentation, we randomly

rely on type constraints alone to arrive at its pre- sample perturbed input questions, whereas in adver-

dictions (Sugawara et al., 2018), and is thus less sarial training we perform an adversarial search to

prone to such exploitation - see Section 6 for a identify them. In both cases, alternative data points

more in-depth analysis. in Ω0 will be fit to a NULL label to represent the

Finally, Figure 3 shows a histogram of the 10 NoAnswer prediction – again using a log-likelihood

most common NE tags appearing in unsuccessfully objective. We continuously update Ω0 throughout

attacked samples, and the corresponding fraction of training to reflect adversarial samples with respect

replaced entities in successfully attacked samples. to the current model. We conduct experiments on

Besides one exception, the distributions are remark- both SQuAD2.0 and NewsQA; details on training

ably similar: Undersensitivity can be induced for and hyperparameters can be found in the appendix.

a variety of entity types used in the perturbation. Experimental Outcomes Results for these ex-

Notably, questions with geopolitical entities (GPE) periments can be found in Table 3 for the two da-

1158tasets, respectively. First, we observe that both Person Date Numerical

data augmentation and adversarial training substan- EM F1 EM F1 EM F1

tially reduce the number of undersensitivity errors BERT w/ bias 55.9 63.1 48.9 58.2 38.7 48.0

the model commits, consistently across adversar- + Data Augm. 59.1 66.6 58.4 65.6 48.7 58.9

ial search budgets, and consistently across the two BERT w/o bias 69.2 78.1 73.2 81.7 69.6 80.5

datasets. This demonstrates that both training meth-

ods are effective defences and can mitigate – but Table 4: Robust training leads to improved generalisa-

tion with train/test distribution mismatch (w/ bias). Bot-

not eliminate – the model’s undersensitivity prob-

tom: control experiment without train/test mismatch.

lem. Notably the improved robustness – especially

for data augmentation – is possible without sacrific-

ing performance in the overall standard metrics EM tation, and adversarial training, respectively. De-

and F1 , even slight improvements are possible. Sec- tailed results for different values of η, as well as

ond, data augmentation is a more effective defence for NewsQA can be found in Appendix D.

training strategy than adversarial training. This Generalisation in a Biased Data Setting Data-

holds true both for standard and adversarial metrics sets for high-level NLP tasks often come with an-

and hints at potential adversarial overfitting. notation and selection biases; models then learn

Finally, a closer inspection of how performance to exploit shortcut triggers which are dataset but

changes on answerable (HasAns) vs. unanswerable not task-specific (Gururangan et al., 2018). For

(NoAns) samples of the datasets reveals that models example, a model might be confronted with ques-

with modified training objectives show improved tion/paragraph pairs which only ever contain one

performance on unanswerable samples, while sacri- type-consistent answer span, e.g. mention one num-

ficing some performance on answerable samples.3 ber in a text with a How many...? question. It is

This suggests that the trained models – even though then sufficient to learn to pick out numbers from

similar in standard metrics – evolve on different text to solve the task, irrespective of other informa-

paths during training: the modified objectives pri- tion given in the question. Such a model might then

oritise unanswerable questions to a higher degree. have trouble generalising to articles that mention

Evaluation on Held-Out Perturbation Spaces several numbers, as it never learned that it is nec-

In Table 3 results are computed using the same per- essary to take into account other relevant question

turbation spaces also used during training. These information to determine the correct answer.

perturbation spaces are relatively large, and ques- We test models in such a scenario: a model is

tions are about a disjoint set of articles at evalu- trained on SQuAD1.1 questions with paragraphs

ation time. Nevertheless there is the potential of containing only a single type-consistent answer

overfitting to the particular perturbations used dur- expression for either a person, date, or numeri-

ing training. To measure the extent to which the cal answer. At test time, we present it with ques-

defences generalise also to new, held out sets of tion/article pairs of the same respective question

perturbations, we assemble a new, disjoint pertur- types, but now there are multiple possible type-

bation space of identical size per NE tag as those consistent answers in the paragraph. We obtain

used during training, and evaluate models on at- such data from Lewis and Fan (2019), who first

tacks with respect to these perturbations. Named described this biased data scenario. Previous ex-

entities are chosen from English Wikipedia using periments on this dataset were conducted without

the same method as for the training perturbation dedicated development set, so while using the same

spaces, and chosen such that they are disjoint from training data, we split the test set with a 40/60%

the training perturbation space. We then execute split into development and test data.4 We then test

adversarial attacks using these new attack spaces both a vanilla fine-tuned BERT BASE transformer

on the previously trained models, and find that both model, and a model trained to be less vulnerable to

vulnerability rates of the standard model, as well as undersensitivity attacks using data augmentation.

relative defence success transfer to the new attack Finally, we perform a control experiment, where we

spaces. For example, with η = 256 we observe join and shuffle all data points from train/dev/test

vulnerability ratios of 51.7%, 20.7%, and 23.8% (of each question type, respectively), and split the

on SQuAD2.0 for standard training, data augmen-

4

We also include an experiment with the previous data

3

The NoAns threshold is tuned on the respective valid. sets. setup used by Lewis and Fan (2019), see Appendix G.

dataset into new parts of the same size as before, which now follow the same data distribution (w/o data bias setting). Table 4 shows the results. In this biased data scenario we observe a marked improvement across metrics and answer type categories when a model is trained with unanswerable samples. This demonstrates that the negative training signal stemming from related – but unanswerable – questions counterbalances the signal from answerable questions in such a way that the model learns to better take into account relevant information in the question. This allows it to correctly distinguish among several type-consistent answer possibilities in the text, which the standard BERT BASE model does not learn well.

Evaluation on Adversarial SQuAD. We next evaluate BERT LARGE and BERT LARGE + Augmentation Training on ADDSENT and ADDONESENT, which contain adversarially composed samples (Jia and Liang, 2017). Our results, summarised in Table 5, show that including altered samples during the training of BERT LARGE improves EM/F1 scores by 2.7/4.3 and 0.1/1.6 points on the two datasets, respectively.

                AddSent     AddOneSent  Dev 2.0
                EM    F1    EM    F1    EM    F1
BERT Large      61.3  66.0  70.1  74.9  78.3  81.4
+ Data Augm.    64.0  70.3  70.2  76.5  78.9  82.1

Table 5: Comparison between BERT LARGE and BERT LARGE + data augmentation using NE perturbations, on two sets of adversarial examples: ADDSENT and ADDONESENT from Jia and Liang (2017).

Transferability of Attacks. We train a RoBERTa model (Liu et al., 2019) on SQuAD2.0, and conduct undersensitivity attacks (ρ = 6; η = 256). Notably, the resulting undersensitivity error rates are considerably lower for RoBERTa (34.5%) than for BERT (54.7%). Interestingly, when considering only those samples where RoBERTa was found vulnerable, BERT has an undersensitivity error rate of 90.7%. That is, the same samples tend to be vulnerable to undersensitivity attacks in both models. Moreover, we find that concrete adversarial inputs x′ selected with RoBERTa transfer when evaluating them on BERT for 17.5% of samples (i.e. satisfy Inequality 1).

7 Conclusion

We have investigated undersensitivity: a problematic behaviour of RC models, where they are overly stable in their predictions when given semantically altered questions. A model’s robustness to undersensitivity attacks can be drastically improved with appropriate defences without sacrificing nominal performance, and the resulting models also become more robust in other adversarial data settings.

Acknowledgements

This research was supported by an Engineering and Physical Sciences Research Council (EPSRC) scholarship, and the European Union’s Horizon 2020 research and innovation programme under grant agreement no. 875160.

References

Chris Alberti, Daniel Andor, Emily Pitler, Jacob Devlin, and Michael Collins. 2019. Synthetic QA corpora generation with roundtrip consistency. In ACL (1), pages 6168–6173. Association for Computational Linguistics.

Moustafa Alzantot, Yash Sharma, Ahmed Elgohary, Bo-Jhang Ho, Mani Srivastava, and Kai-Wei Chang. 2018. Generating natural language adversarial examples. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 2890–2896, Brussels, Belgium. Association for Computational Linguistics.

Yonatan Belinkov and Yonatan Bisk. 2018. Synthetic and natural noise both break neural machine translation. In International Conference on Learning Representations.

Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota. Association for Computational Linguistics.

Javid Ebrahimi, Anyi Rao, Daniel Lowd, and Dejing Dou. 2018. HotFlip: White-box adversarial examples for text classification. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 31–36, Melbourne, Australia. Association for Computational Linguistics.

Allyson Ettinger, Sudha Rao, Hal Daumé III, and Emily M. Bender. 2017. Towards linguistically generalizable NLP systems: A workshop and shared task. In Proceedings of the First Workshop on Building Linguistically Generalizable NLP Systems.

Shi Feng, Eric Wallace, Alvin Grissom II, Mohit Iyyer, Pedro Rodriguez, and Jordan Boyd-Graber. 2018.
Pathologies of neural models make interpretations difficult. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 3719–3728, Brussels, Belgium. Association for Computational Linguistics.

Matt Gardner, Yoav Artzi, Victoria Basmova, Jonathan Berant, Ben Bogin, Sihao Chen, Pradeep Dasigi, Dheeru Dua, Yanai Elazar, Ananth Gottumukkala, Nitish Gupta, Hanna Hajishirzi, Gabriel Ilharco, Daniel Khashabi, Kevin Lin, Jiangming Liu, Nelson F. Liu, Phoebe Mulcaire, Qiang Ning, Sameer Singh, Noah A. Smith, Sanjay Subramanian, Reut Tsarfaty, Eric Wallace, Ally Zhang, and Ben Zhou. 2020. Evaluating NLP models via contrast sets.

Suchin Gururangan, Swabha Swayamdipta, Omer Levy, Roy Schwartz, Samuel R. Bowman, and Noah A. Smith. 2018. Annotation artifacts in natural language inference data. In NAACL-HLT (2), pages 107–112. Association for Computational Linguistics.

Hossein Hosseini, Baicen Xiao, and Radha Poovendran. 2017. Deceiving Google’s cloud video intelligence API built for summarizing videos. In CVPR Workshops, pages 1305–1309. IEEE Computer Society.

Minghao Hu, Furu Wei, Yuxing Peng, Zhen Huang, Nan Yang, and Dongsheng Li. 2019. Read + verify: Machine reading comprehension with unanswerable questions. In AAAI, pages 6529–6537. AAAI Press.

Mohit Iyyer, John Wieting, Kevin Gimpel, and Luke Zettlemoyer. 2018a. Adversarial example generation with syntactically controlled paraphrase networks. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long Papers), pages 1875–1885, New Orleans, Louisiana. Association for Computational Linguistics.

Mohit Iyyer, John Wieting, Kevin Gimpel, and Luke Zettlemoyer. 2018b. Adversarial example generation with syntactically controlled paraphrase networks. In NAACL-HLT, pages 1875–1885. Association for Computational Linguistics.

Joern-Henrik Jacobsen, Jens Behrmann, Richard Zemel, and Matthias Bethge. 2019. Excessive invariance causes adversarial vulnerability. In International Conference on Learning Representations.

Robin Jia and Percy Liang. 2017. Adversarial examples for evaluating reading comprehension systems. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, pages 2021–2031, Copenhagen, Denmark. Association for Computational Linguistics.

Divyansh Kaushik, Eduard Hovy, and Zachary Lipton. 2020. Learning the difference that makes a difference with counterfactually-augmented data. In International Conference on Learning Representations.

Alexey Kurakin, Ian Goodfellow, and Samy Bengio. 2016. Adversarial examples in the physical world. arXiv preprint arXiv:1607.02533.

Mike Lewis and Angela Fan. 2019. Generative question answering: Learning to answer the whole question. In International Conference on Learning Representations.

Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. RoBERTa: A robustly optimized BERT pretraining approach. CoRR, abs/1907.11692.

Kaiji Lu, Piotr Mardziel, Fangjing Wu, Preetam Amancharla, and Anupam Datta. 2018. Gender bias in neural natural language processing. ArXiv, abs/1807.11714.

Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S Corrado, and Jeff Dean. 2013. Distributed representations of words and phrases and their compositionality. In C. J. C. Burges, L. Bottou, M. Welling, Z. Ghahramani, and K. Q. Weinberger, editors, Advances in Neural Information Processing Systems 26, pages 3111–3119. Curran Associates, Inc.

Tong Niu and Mohit Bansal. 2018. Adversarial over-sensitivity and over-stability strategies for dialogue models. In Proceedings of the 22nd Conference on Computational Natural Language Learning, pages 486–496, Brussels, Belgium. Association for Computational Linguistics.

Pranav Rajpurkar, Robin Jia, and Percy Liang. 2018. Know what you don’t know: Unanswerable questions for SQuAD. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 784–789, Melbourne, Australia. Association for Computational Linguistics.

Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin. 2018a. Anchors: High-precision model-agnostic explanations. In AAAI Conference on Artificial Intelligence (AAAI).

Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin. 2018b. Semantically equivalent adversarial rules for debugging NLP models. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 856–865. Association for Computational Linguistics.

Marco Tulio Ribeiro, Tongshuang Wu, Carlos Guestrin, and Sameer Singh. 2020. Beyond accuracy: Behavioral testing of NLP models with CheckList. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, pages 4902–4912, Online. Association for Computational Linguistics.

Min Joon Seo, Aniruddha Kembhavi, Ali Farhadi, and Hannaneh Hajishirzi. 2017. Bidirectional attention flow for machine comprehension. ICLR.

Saku Sugawara, Kentaro Inui, Satoshi Sekine, and Akiko Aizawa. 2018. What makes reading comprehension questions easier? In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 4208–4219, Brussels, Belgium. Association for Computational Linguistics.

Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. 2013. Intriguing properties of neural networks. arXiv preprint arXiv:1312.6199.

Alon Talmor and Jonathan Berant. 2019. MultiQA: An empirical investigation of generalization and transfer in reading comprehension. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 4911–4921, Florence, Italy. Association for Computational Linguistics.

Adam Trischler, Tong Wang, Xingdi Yuan, Justin Harris, Alessandro Sordoni, Philip Bachman, and Kaheer Suleman. 2017. NewsQA: A machine comprehension dataset. In Proceedings of the 2nd Workshop on Representation Learning for NLP, pages 191–200, Vancouver, Canada. Association for Computational Linguistics.

Dimitris Tsipras, Shibani Santurkar, Logan Engstrom, Alexander Turner, and Aleksander Madry. 2019. Robustness may be at odds with accuracy. In International Conference on Learning Representations.

Eric Wallace, Shi Feng, Nikhil Kandpal, Matt Gardner, and Sameer Singh. 2019. Universal adversarial triggers for NLP. CoRR, abs/1908.07125.

Yicheng Wang and Mohit Bansal. 2018. Robust machine comprehension models via adversarial training. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), pages 575–581, New Orleans, Louisiana. Association for Computational Linguistics.

Johannes Welbl, Po-Sen Huang, Robert Stanforth, Sven Gowal, Krishnamurthy (Dj) Dvijotham, Martin Szummer, and Pushmeet Kohli. 2020. Towards verified robustness under text deletion interventions. In International Conference on Learning Representations.

Adams Wei Yu, David Dohan, Quoc Le, Thang Luong, Rui Zhao, and Kai Chen. 2018. Fast and accurate reading comprehension by combining self-attention and convolution. In International Conference on Learning Representations.

Wei Emma Zhang, Quan Z. Sheng, and Ahoud Abdulrahmn F. Alhazmi. 2019. Generating textual adversarial examples for deep learning models: A survey. CoRR, abs/1901.06796.

Jieyu Zhao, Tianlu Wang, Mark Yatskar, Vicente Ordonez, and Kai-Wei Chang. 2018. Gender bias in coreference resolution: Evaluation and debiasing methods. In Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 2 (Short Papers), pages 15–20, New Orleans, Louisiana. Association for Computational Linguistics.

Haichao Zhu, Li Dong, Furu Wei, Wenhui Wang, Bing Qin, and Ting Liu. 2019. Learning to ask unanswerable questions for machine reading comprehension. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 4238–4248, Florence, Italy. Association for Computational Linguistics.

1162SQuAD2.0 Undersensitivity Error Rate

Adv. budget η @32 @64 @128 @256

BERT L ARGE 40.7 45.2 48.6 51.7

+ Data Augment. 4.8 7.9 11.9 20.7

+ Adv. Training 9.2 12.2 16.5 23.8

Table 6: Breakdown of undersensitivity error rate on

SQuAD2.0 with a held-out attack space (lower is bet-

ter).

NewsQA Undersensitivity Error Rate

Adv. budget η @32 @64 @128 @256

BERT BASE 32.8 33.9 35.0 36.2

+ Data Augment. 3.9 6.5 11.9 17.5

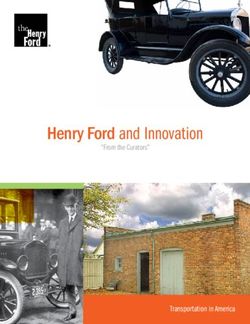

+ Adv. Training 17.6 20.7 25.4 28.5 Figure 4: Vulnerability to undersensitivity attacks on

NewsQA.

Table 7: Breakdown of undersensitivity error rate on

NewsQA with a held-out attack space (lower is better). perturbations are used for the defence methods,

and adversarial attacks with η = 32 and ρ = 1 in

A Appendix: PoS Perturbation Details. adversarial training. Where no attack is found for

a given question sampled during SGD training,

We exclude these PoS-tags when computing per- we instead consider a different sample from the

turbations: ‘IN’, ‘DT’, ‘.’, ‘VBD’, ‘VBZ’, ‘WP’, original training data. We evaluate the model on

‘WRB’, ‘WDT’, ‘CC’, ‘MD’, ‘TO’. its validation data every 5,000 steps and perform

early stopping with a patience of 5.

B Appendix: BERT Training Details - NewsQA Following the experimental protocol

SQuAD2.0 for SQuAD, we further test a BERT BASE model

We first train a BERT L ARGE model on the on NewsQA, which – like SQuAD2.0 – contains

full training set for 2 epochs, where it reaches unanswerable questions. As annotators often do not

78.32%EM and 81.44%F1 , largely comparable to fully agree on a single annotation in NewsQA, we

results (78.7%EM and 81.9%F1 ) reported by De- opt for a conservative choice and filter the dataset,

vlin et al. (2019). We then however choose a differ- such that only samples with the same majority an-

ent training setup as we would like to conduct ad- notation are retained, following the preprocessing

versarial attacks on data inaccessible during train- pipeline of Talmor and Berant (2019).

ing: we split off 5% from the original training set

D Appendix: Generalisation to Held-out

for development purposes and retain the remaining

Perturbations

95% for training, stratified by articles. We use this

development data to tune hyperparameters and per- Vulnerability results for new, held-out perturbation

form early stopping, evaluated every 5,000 steps spaces, disjoint from those used during training,

with batch size 16 and patience 5, and tune hyper- can be found in Table 6 for SQuAD2.0, and in

parameters for defence on it. Table 7 for NewsQA.

C Appendix: Adversarial Defence E Appendix: Adversarial Example from

Experiments a Question Collection

SQuAD2.0 We train the BERT L ARGE Searching in a large collection of (mostly unrelated)

model, tuning the hyperparameter λ ∈ natural language questions, e.g. among all ques-

{0.0, 0.01, 0.1, 0.25, 0.5, 0.75, 1.0, 2.0}, and tions in the SQuAD2.0 training set, yields several

find λ = 0.25 to work best for both defences. cases where the prediction of the model increases,

We tune the threshold for predicting NoAnswer compared to the original question, see Table 8 for

based on validation data and report results on one such example. These cases are however rela-

the test set (the original SQuAD2.0 Dev set). All tively rare, and we found the yield of this type of

experiments are executed with batch size 16, NE search to be very low.
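The question-collection search described in Appendix E can be sketched as follows. This is a minimal illustration, not the authors' implementation: the `Predictor` interface, the function `find_undersensitive_questions`, and the stand-in model `toy_predict` are all hypothetical names introduced here. The acceptance condition (same predicted answer, strictly higher probability than for the original question) follows the description of the attack in the abstract and in the caption of Table 8; the two toy scores are taken from Table 8.

```python
from typing import Callable, List, Tuple

# Hypothetical model interface (an assumption for this sketch):
# maps (question, context) to (predicted answer, probability).
Predictor = Callable[[str, str], Tuple[str, float]]


def find_undersensitive_questions(
    predict: Predictor,
    context: str,
    original_question: str,
    candidate_questions: List[str],
) -> List[Tuple[str, float]]:
    """Return candidates that keep the original prediction with higher probability."""
    original_answer, original_prob = predict(original_question, context)
    hits = []
    for candidate in candidate_questions:
        if candidate == original_question:
            continue  # the original question is not a perturbation of itself
        answer, prob = predict(candidate, context)
        # Undersensitivity: the question changed, the prediction did not,
        # and the model is even more confident than before.
        if answer == original_answer and prob > original_prob:
            hits.append((candidate, prob))
    # Most confident adversarial questions first.
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


def toy_predict(question: str, context: str) -> Tuple[str, float]:
    """Stand-in for a trained RC model; fixed scores taken from Table 8."""
    scores = {
        "What religion were the Normans?": ("Catholic orthodoxy", 0.78),
        "IP and AM are most commonly defined by what type of proof system?": (
            "Catholic orthodoxy",
            0.84,
        ),
    }
    return scores.get(question, ("", 0.0))
```

With a real model, `predict` would wrap a forward pass over the question/paragraph pair; the loop itself is a plain exhaustive scan, which is consistent with the low yield reported for this kind of search.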

F Appendix: Attack Examples

Table 9 shows more examples of successful adversarial attacks on SQuAD2.0.

G Appendix: Biased Data Setup

For completeness and direct comparability, we also include an experiment with the data setup of Lewis and Fan (2019) (not holding aside a dedicated validation set). Results can be found in Table 10. We again observe improvements in the biased data setting. Furthermore, the robust model outperforms GQA (Lewis and Fan, 2019) in two of the three subtasks.

H Appendix: Vulnerability Analysis on NewsQA

Fig. 4 depicts the vulnerability of a BERT LARGE model on NewsQA under attacks using NE perturbations.

Given Text   [...] The Normans were famed for their martial spirit and eventually for their Christian piety, becoming exponents of the Catholic orthodoxy [...]
Q (orig)     What religion were the Normans?                                      0.78
Q (adv.)     IP and AM are most commonly defined by what type of proof system?    0.84

Table 8: Drastic example for lack of specificity: unrelated questions can trigger the same prediction (here: Catholic orthodoxy), and with higher probability.

Original / Modified Question                                                                Prediction                        Annotation    Scores (original / attack)
What ethnic neighborhood in [Fresno → Kilbride] had primarily Japanese residents in 1940?   Chinatown                         valid         0.998 / 0.999
The [Mitchell Tower → MIT] is designed to look like what Oxford tower?                      Magdalen Tower                    valid         0.96 / 0.97
What are some of the accepted general principles of [European Union → Al-Andalus] law?      fundamental rights [...]          valid         0.59 / 0.61
What does the EU’s [legitimacy → digimon] rest on?                                          the ultimate authority of [...]   valid         0.38 / 0.40
What is Jacksonville’s hottest recorded [temperature → atm]?                                104 °F                            valid         0.60 / 0.62
Who leads the [Student → commissioning] Government?                                         an Executive Committee            same answer   0.61 / 0.65

Table 9: Example adversarial questions, with each perturbation shown as [original → attack], together with their annotation as either a valid counterexample or other type. Top 3 rows: Named entity (NE) perturbations. Bottom 3 rows: PoS perturbations.

                            Person      Date        Numerical
                            EM    F1    EM    F1    EM    F1
GQA (Lewis and Fan, 2019)   53.1  61.9  64.7  72.5  58.5  67.6
BERT BASE - w/ data bias    66.0  72.5  67.1  72.0  46.6  54.5
+ Robust Training           67.4  72.8  68.1  74.4  56.3  64.5

Table 10: Robust training leads to improved generalisation under train/test distribution mismatch (data bias).
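The comparison stated in Appendix G can be checked directly against the numbers in Table 10. The short sketch below recomputes the EM/F1 gains of robust training over the biased BERT BASE baseline and lists the answer types on which the robust model exceeds GQA on both metrics. The values are transcribed from Table 10; the dictionary layout and the names `table10`, `em_f1_gain`, and `beats_on_both` are illustrative assumptions.

```python
# (EM, F1) per answer type, transcribed from Table 10.
table10 = {
    "GQA": {"Person": (53.1, 61.9), "Date": (64.7, 72.5), "Numerical": (58.5, 67.6)},
    "BERT_BASE_bias": {"Person": (66.0, 72.5), "Date": (67.1, 72.0), "Numerical": (46.6, 54.5)},
    "Robust": {"Person": (67.4, 72.8), "Date": (68.1, 74.4), "Numerical": (56.3, 64.5)},
}


def em_f1_gain(base, other):
    """Per answer type, the (EM, F1) difference other - base, rounded to one decimal."""
    return {
        answer_type: (
            round(other[answer_type][0] - base[answer_type][0], 1),
            round(other[answer_type][1] - base[answer_type][1], 1),
        )
        for answer_type in base
    }


def beats_on_both(model, reference):
    """Answer types where `model` is higher than `reference` on both EM and F1."""
    return [
        answer_type
        for answer_type in reference
        if model[answer_type][0] > reference[answer_type][0]
        and model[answer_type][1] > reference[answer_type][1]
    ]


gains = em_f1_gain(table10["BERT_BASE_bias"], table10["Robust"])
wins_vs_gqa = beats_on_both(table10["Robust"], table10["GQA"])
```

Here `gains` shows the largest improvement on the Numerical subtask (9.7 EM, 10.0 F1), and `wins_vs_gqa` contains Person and Date, matching the statement that the robust model outperforms GQA in two of the three subtasks.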
